Learn how to Build and Deploy a Chatbot in Minutes using Rasa (IPL Case Study!)

Introduction

Have you ever been stuck at work while a pulsating cricket match was going on? You need to meet a deadline but you just can’t concentrate because your favorite team is locked in a fierce battle for a playoff spot. Sounds familiar?

I’ve been in this situation a lot in my professional career, and checking my phone every 5 minutes was not really an option! Being a data scientist, I looked at this challenge through the lens of an NLP enthusiast: a chatbot that could fetch me the scores from the ongoing IPL (Indian Premier League) tournament would be a lifesaver.

So I did just that! Using the awesome Rasa stack for NLP, I built a chatbot that I could use on my computer anytime. No more looking down at the phone and getting distracted.

And the cherry on top? I deployed the chatbot to Slack, the de facto platform for team communication. That’s right: I could check the score anytime without having to visit any external site. Sounds too good an opportunity to pass up, right?

In this article, I will guide you on how to build your own Rasa chatbot in minutes and deploy it in Slack. With the ICC Cricket World Cup around the corner, this is a great time to get your chatbot game on and feed your passion for cricket without risking your job.

Table of Contents

- Why should you use the Rasa Stack for Building Chatbots?

- Anatomy of our IPL chatbot

- Extracting User Intent from a Message

- Making Interactive Conversations

- Talking to our IPL Chatbot

- Getting IPL Data using CricAPI

- Bringing our Chatbot to Life (Integrating Rasa and Slack)

Why should you use the Rasa Stack for Building Chatbots?

The Rasa Stack is a set of open-source NLP tools focused primarily on chatbots. In my experience, it is one of the most effective and time-efficient tools for building complex chatbots quickly. Below are three reasons why I love using the Rasa Stack:

- It lets you focus on improving the “Chatbot” part of your project by providing ready-made code for other background tasks like deploying, creating servers, etc.

- The default setup of Rasa works really well right out of the box for intent extraction and dialogue management, even with relatively little training data

- Rasa stack is open-source, which means we know exactly what is happening under the hood and can customize things as much as we want

These features differentiate Rasa from other chatbot building platforms, such as Google’s Dialogflow. Here’s a sneak peek into the chatbot we’ll soon be building:

Anatomy of our IPL Chatbot

Let’s understand how our Rasa powered IPL chatbot will work before we get into the coding part. Understanding the architecture of the chatbot will go a long way in helping us tweak the final model.

Overview of the Rasa Chatbot

There are various approaches we can take to build this chatbot. How about simply using the quickest and most efficient method? Check out a high-level overview of our IPL chatbot below:

Let’s break down this architecture (keep referring to the image to understand this):

- As soon as Rasa receives a message from the end user, it tries to predict or extract the “intent” and “entities” present in the message. This part is handled by Rasa NLU

- Once the user’s intent is identified, the Rasa Stack performs an action called action_match_news to get the updates from the latest IPL match

- Rasa then tries to predict what it should do next. This decision is taken considering multiple factors and is handled by Rasa Core

- In our example, Rasa shows the result of the most recent match to the user. It has also predicted the next action our model should take: to check with the user whether the chatbot was able to answer their query
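To make the flow above concrete, here is a toy sketch in plain Python. It is not how Rasa is implemented: nlu_parse, NEXT_ACTIONS and action_match_news below are hypothetical stand-ins for Rasa NLU, Rasa Core's trained policy and the CricAPI-backed custom action, and the utter_did_that_help name is made up for illustration.

```python
import re

# Toy stand-ins for the stages described above. None of this is Rasa's API.

def nlu_parse(text):
    """Stand-in for Rasa NLU: map a message to an intent plus a confidence."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    if words & {"ipl", "match", "score", "cricket"}:
        return {"intent": "current_matches", "confidence": 0.9}
    if words & {"hi", "hello", "hey"}:
        return {"intent": "greet", "confidence": 0.9}
    return {"intent": "unknown", "confidence": 0.2}

# Stand-in for Rasa Core: the actions that follow each intent.
NEXT_ACTIONS = {
    "greet": ["utter_greet"],
    "current_matches": ["action_match_news", "utter_did_that_help"],
}

# Fixed bot responses ("templates" in Rasa terms).
RESPONSES = {
    "utter_greet": "Hey! How may I help you?",
    "utter_did_that_help": "Did that help you?",
    "utter_default": "Sorry, I didn't get that.",
}

def action_match_news():
    """Stand-in for the custom action; the real one queries CricAPI."""
    return "Here is some quick IPL info: ..."

def handle_message(text):
    parsed = nlu_parse(text)                                          # NLU: intent extraction
    actions = NEXT_ACTIONS.get(parsed["intent"], ["utter_default"])   # Core: choose next actions
    replies = []
    for action in actions:
        if action == "action_match_news":
            replies.append(action_match_news())   # custom code (action server)
        else:
            replies.append(RESPONSES[action])     # plain template reply
    return replies

print(handle_message("What was the result of the last match?"))
```

In the real bot, each of these stand-ins is replaced by a trained model or by the action server, but the order of the steps is the same.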

Setting up the IPL Chatbot

I have created two versions of the project on GitHub:

- Complete Version – This is a complete chatbot that you can deploy right away in Slack and start using

- Practice Version – Use this version when you’re going through this article. It will help you understand how the code works

So, go ahead and clone the ‘Practice Version’ project from GitHub:

git clone https://github.com/mohdsanadzakirizvi/iplbot.git && cd iplbot

Then cd into the practice_version folder:

cd practice_version

A quick note on a couple of things you should be aware of before proceeding further:

- The version of Rasa used in this article supports only Python 3.6 or lower. If you have a newer version of Python, you can set up a new conda environment with the following commands:

conda create -n rasa python=3.6
conda activate rasa

- You will need a text editor to work with the multiple files of our project. My personal favorite is Sublime Text which you can download here

Installing Rasa and its Dependencies

You can use the command below to install all the dependencies of the Rasa Stack:

pip install -r requirements.txt

This step might take a few minutes because there are quite a few packages to install. You will also need to install a spaCy English language model:

python -m spacy download en

Let’s move on!

Extracting User Intent from a Message

The first thing we want to do is figure out the intent of the user. What does he or she want to accomplish? Let’s utilize Rasa and build an NLU model to identify user intent and its related entities.

Look into the practice_version folder you downloaded earlier:

The two files we will be using are highlighted above.

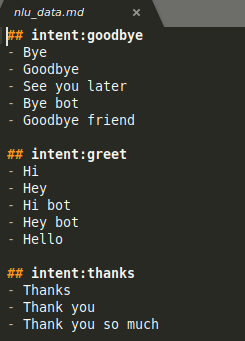

- data/nlu_data.md – This is the file where you will save your training data for extracting the user intent. There is some data already present in the file:

As you can see, the format of training data for ‘intent’ is quite simple in Rasa. You just have to:

- Start the line with “## intent:intent_name”

- Supply all the examples in the following lines

Let’s write some intent examples in data/nlu_data.md for the scenario where the user wants to get IPL updates:

You can include as many examples as you want for each intent. In fact, make sure to include the slang and abbreviations you use while texting; the idea is to make the chatbot understand the way we actually type. Feel free to refer to the complete version, where I have given plenty of examples for each intent.
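The `## intent:` format is simple enough to read with a few lines of Python. The sketch below is only an illustration, not part of Rasa: it groups example lines under the intent header they follow. The sample data is hypothetical (the intent names greet and current_matches mirror the stories later in this article), and the parser ignores entity annotations and non-intent sections such as synonyms.

```python
from collections import defaultdict

# Hypothetical training data in the Rasa NLU Markdown format described above.
SAMPLE_NLU_DATA = """\
## intent:greet
- hi
- hello there
- hey

## intent:current_matches
- what's happening in the IPL?
- any cricket updates
- who won the last match
- ipl scores pls
"""


def parse_intents(markdown_text):
    """Group training examples under the '## intent:<name>' header they follow."""
    examples = defaultdict(list)
    current = None
    for raw in markdown_text.splitlines():
        line = raw.strip()
        if line.startswith("## intent:"):
            current = line[len("## intent:"):].strip()
        elif line.startswith("##"):
            current = None  # another section type (synonym, regex, ...): not an intent
        elif line.startswith("- ") and current is not None:
            examples[current].append(line[2:].strip())
    return dict(examples)


intents = parse_intents(SAMPLE_NLU_DATA)
for name, texts in intents.items():
    print(name, len(texts))
```

Running this on your own data/nlu_data.md is a quick way to spot intents with too few examples.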

- nlu_config.yml – This file lets us create a text processing pipeline in Rasa. Luckily for us, Rasa comes with two default settings based on the amount of training data we have:

- “spacy_sklearn” if you have fewer than 1,000 training examples

- “tensorflow_embedding” if you have a large amount of data

Let’s choose the former as it suits our example:
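For this pipeline, nlu_config.yml only needs a language and a pipeline name. The sketch below uses hypothetical helpers, not a Rasa tool: it counts the example lines in a training file, applies the under-1,000-examples rule of thumb from above, and renders the config text in the "language plus pipeline name" style used by rasa_nlu in this article.

```python
def count_examples(nlu_markdown):
    """Roughly count training examples: every '- ' line in the Markdown file.
    This also counts synonym/regex entries, so treat it as an estimate."""
    return sum(1 for line in nlu_markdown.splitlines() if line.strip().startswith("- "))


def choose_pipeline(n_examples, threshold=1000):
    """Rule of thumb from the text: spacy_sklearn for small data sets."""
    return "spacy_sklearn" if n_examples < threshold else "tensorflow_embedding"


def nlu_config_text(pipeline, language="en"):
    """Render nlu_config.yml with a language and a pre-defined pipeline name."""
    return 'language: "{}"\npipeline: "{}"\n'.format(language, pipeline)


def write_nlu_config(data_path="data/nlu_data.md", config_path="nlu_config.yml"):
    """Read the training data, pick a pipeline and (over)write the config file."""
    with open(data_path, encoding="utf-8") as f:
        n_examples = count_examples(f.read())
    config = nlu_config_text(choose_pipeline(n_examples))
    with open(config_path, "w", encoding="utf-8") as f:
        f.write(config)
    return config
```

Calling write_nlu_config() from the practice_version folder would produce `language: "en"` and `pipeline: "spacy_sklearn"` for a small data set like ours; note that it overwrites the existing nlu_config.yml.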

Training the NLU classifier

If you have made it this far, you have already done most of the work for the intent extraction model. Let’s train it and see it in action!

You can train the classifier by running the command below:

make train-nlu

Using Windows? make is usually not available there, so run the equivalent Python command instead:

python -m rasa_nlu.train -c nlu_config.yml --data data/nlu_data.md -o models --fixed_model_name nlu --project current --verbose

Predicting the Intent

Let’s test how well our model performs by giving it a sample sentence it hasn’t seen during training. Open an IPython/Python shell and run the following:

>>> from rasa_nlu.model import Interpreter

>>> nlu_model = Interpreter.load('./models/current/nlu')

>>> nlu_model.parse('what is happening in the cricket world these days?')

Here is what the output looks like:

Not only does our NLU model perform well on intent extraction, but it also ranks the other intents based on their confidence scores. This is a nifty little feature that can be really useful when the classifier is confused between multiple intents.
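If you want to use that ranking in your own code, the parse result is a plain dictionary. The helpers below are a hypothetical sketch: they assume the result has an `intent` entry with `name` and `confidence`, plus an `intent_ranking` list sorted by confidence, which is the shape rasa_nlu's `Interpreter.parse` returns in the version used here. The 0.1 margin for calling a prediction ambiguous is an arbitrary choice.

```python
def describe_parse(result, top_n=3):
    """Summarise a parse result: the predicted intent plus the runners-up."""
    best = result["intent"]
    lines = ["predicted: {} ({:.2f})".format(best["name"], best["confidence"])]
    # intent_ranking[0] is the predicted intent itself, so start from index 1.
    for alt in result.get("intent_ranking", [])[1:top_n]:
        lines.append("also considered: {} ({:.2f})".format(alt["name"], alt["confidence"]))
    return "\n".join(lines)


def is_ambiguous(result, margin=0.1):
    """True when the top two intents are too close to call confidently."""
    ranking = result.get("intent_ranking", [])
    return len(ranking) >= 2 and ranking[0]["confidence"] - ranking[1]["confidence"] < margin
```

In the same shell session you could run `print(describe_parse(nlu_model.parse('what is happening in the cricket world these days?')))`; when is_ambiguous returns True, a bot could ask the user to rephrase instead of guessing.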

Making Interactive Conversations

One of the most important aspects of a chatbot application is its ability to be interactive. Think back to a chatbot you’ve used before. Our interest is naturally piqued when the chatbot can hold a conversation, right?

The chatbot is expected to gather all the information needed to perform a particular task through a back-and-forth conversation with the end user.

Designing the conversational flow

Take a moment to think of the simplest conversation our chatbot can have with a user. What would be the flow of such a conversation? Let’s write it in the form of a story!

Me: Hi
Iplbot: Hey! How may I help you?
Me: What was the result of the last match?
Iplbot: Here are some IPL quick info:
1. The match between Rajasthan Royals and Delhi Capitals was recently held and Delhi Capitals won.
2. The next match is Warriors vs Titans on 22 April 2019
Iplbot: Did that help you?
Me: yes, thank you!
Iplbot: Glad that I could help! :)

Let’s see how we can teach a simple conversation like that to Rasa:

The general format is:

## news path 1         <--- story name for debugging purposes
* greet                <--- intent detected from the user
  - utter_greet        <--- what action the bot should take
* current_matches      <--- the following intent in the conversation

This is called a user story path. I have provided a few stories in the data/stories.md file for your reference. This is the training data for Rasa Core.

The way it works is:

- Give some examples of sample story paths that the user is expected to follow

- Rasa Core combines them randomly to create more complex user paths

- It then builds a probabilistic model out of that. This model is used to predict the next action Rasa should take
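The story format is easy to read programmatically, and the idea of combining stories fits in a few lines. The sketch below is a toy, not Rasa Core's implementation: it parses stories written like the example above into (intent/action, name) steps, then glues randomly chosen stories into longer paths. Rasa Core's own augmentation follows its own rules and settings, and the sample stories (including the utter_did_that_help, affirm and utter_gratitude names) are hypothetical.

```python
import random

# Hypothetical stories in the format shown above (not the repository's data/stories.md).
SAMPLE_STORIES = """\
## news path 1
* greet
  - utter_greet
* current_matches
  - action_match_news
  - utter_did_that_help

## happy end
* affirm
  - utter_gratitude
"""


def parse_stories(text):
    """Return {story name: [("intent", name) or ("action", name), ...]}."""
    stories, current = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            current = line[3:].strip()
            stories[current] = []
        elif line.startswith("* ") and current is not None:
            stories[current].append(("intent", line[2:].strip()))
        elif line.startswith("- ") and current is not None:
            stories[current].append(("action", line[2:].strip()))
    return stories


def glue_stories(stories, n_paths=3, length=2, seed=0):
    """Toy augmentation: concatenate `length` randomly chosen stories per path."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    names = sorted(stories)
    paths = []
    for _ in range(n_paths):
        picked = [rng.choice(names) for _ in range(length)]
        paths.append([step for name in picked for step in stories[name]])
    return paths
```

Each glued path is a longer conversation the model can learn from, which is why a handful of short stories goes a long way.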

Check out the data/stories.md file in the complete_version of the project for more such examples. Meanwhile, here is a nice visualization of the basic story paths generated by Rasa for our IPL chatbot:

The above illustration might look complicated, but it’s simply listing out various possible user stories that I have taught Rasa. Here are a few things to note from the above graph:

- Except for the START and END boxes, all the colored boxes indicate user intent

- All the white boxes are actions that the chatbot performs

- Arrows indicate the flow of the conversation

- action_match_news is where we hit the CricAPI to get IPL information

Write the following in your stories.md file:

Now, generate a similar graph for your stories using the following command:

python -m rasa_core.visualize -d domain.yml -s data/stories.md -o graph.html

This is very helpful when debugging the conversational flow of the chatbot.

Defining the Domain

Now, open up the domain.yml file. You will be familiar with most of the features mentioned here:

The domain is the world of your chatbot. It contains everything the chatbot should know, including:

- All the actions it is capable of doing

- The intents it should understand

- The template of all the utterances it should tell the user, and much more
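A common source of errors is a story that uses an intent or action the domain does not declare. Below is a hypothetical consistency check, with the domain written as a plain Python dict mirroring the sections listed above (its contents are illustrative). In Rasa, a custom action such as action_match_news is listed under actions but has no template, because its code lives in actions.py; only the utter_ actions need templates.

```python
# Hypothetical domain contents, mirroring the sections of domain.yml.
DOMAIN = {
    "intents": ["greet", "current_matches", "affirm"],
    "actions": ["utter_greet", "utter_did_that_help", "action_match_news"],
    "templates": {
        "utter_greet": ["Hey! How may I help you?"],
        "utter_did_that_help": ["Did that help you?"],
    },
}


def check_domain(domain, story_steps):
    """List intents/actions used in stories but missing from the domain,
    plus utter_ actions that have no response template."""
    problems = []
    for kind, name in story_steps:
        if kind == "intent" and name not in domain["intents"]:
            problems.append("intent not in domain: " + name)
        if kind == "action" and name not in domain["actions"]:
            problems.append("action not in domain: " + name)
    for action in domain["actions"]:
        # Custom actions (action_*) are run by the action server, not templates.
        if action.startswith("utter_") and action not in domain["templates"]:
            problems.append("no template for: " + action)
    return problems
```

An empty list means every step in the stories is known to the domain.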

Setting Policies

Rasa Core generates the training data for the conversational part using the stories we provide. It also lets you define a set of policies to use when deciding the next action of the chatbot. These policies are defined in the policies.yml file.

So, open that file and copy the following code:

Here are a few things to note about the above policies (summarized from the Rasa Core documentation on policies):

- KerasPolicy uses a neural network implemented in Keras to select the next action. The default architecture is based on an LSTM (Long Short-Term Memory) model

- MemoizationPolicy memorizes the conversations in your training data. It predicts the next action with confidence 1.0 if this exact conversation exists in the training data, otherwise, it predicts ‘None’ with confidence 0.0

- FallbackPolicy invokes a fallback action if the intent recognition has confidence below nlu_threshold or if none of the dialogue policies predict action with confidence higher than core_threshold

- One important hyperparameter for Rasa Core policies is the max_history. This controls how much dialogue history the model looks at to decide which action to take next
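To build intuition for memoization, max_history and the fallback thresholds, here is a toy re-implementation of the ideas in plain Python. It is a simplified sketch, not Rasa Core's code: it ignores slots, entities and Rasa's implicit action_listen step, and the threshold values are illustrative.

```python
class ToyMemoization:
    """Remember which action followed each window of the last `max_history`
    events in the training stories; predict it with confidence 1.0."""

    def __init__(self, max_history=3):
        if max_history < 1:
            raise ValueError("max_history must be at least 1")
        self.max_history = max_history
        self.lookup = {}

    def train(self, stories):
        # Each story is a list of ("intent", name) / ("action", name) events.
        for story in stories:
            for i, (kind, name) in enumerate(story):
                if kind == "action":
                    window = tuple(story[max(0, i - self.max_history):i])
                    self.lookup[window] = name

    def predict(self, history):
        # Only the last max_history events are considered, as in training.
        window = tuple(history[-self.max_history:])
        if window in self.lookup:
            return self.lookup[window], 1.0
        return None, 0.0  # unseen conversation: no prediction


def choose_action(nlu_confidence, prediction, nlu_threshold=0.3,
                  core_threshold=0.3, fallback_action="action_default_fallback"):
    """Fallback rule: use the fallback action if NLU or the policy is unsure."""
    action, core_confidence = prediction
    if nlu_confidence < nlu_threshold or action is None or core_confidence < core_threshold:
        return fallback_action
    return action
```

A larger max_history lets the policy distinguish conversations that only differ further back, at the cost of needing more stories to cover each window.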

Training the Conversation Model

You can train the model using the following command:

make train-core

Or if you are on Windows, you can use the full Python command:

python -m rasa_core.train -d domain.yml -s data/stories.md -o models/current/dialogue -c policies.yml

This will train the Rasa Core model and we can start chatting with the bot right away!

Talking to your IPL chatbot

Before we proceed further, let’s try talking to our chatbot and see how it performs. Open a new terminal and type the following command:

make cmdline

Once it loads up, try having a conversation with your chatbot. You can start by saying “Hi”. The following video shows my interaction with the chatbot:

I got an error message when trying to get IPL updates:

Encountered an exception while running action 'action_match_news'. Bot will continue, but the actions events are lost. Make sure to fix the exception in your custom code.

The chatbot understood my intent to get news about the IPL. So what went wrong? It’s simple – we still haven’t written the backend code for that! So, let’s build up the backend next.

Getting IPL Data using CricAPI

We will use CricAPI to fetch IPL-related news. It is free for 100 requests per day, which (I hope) is more than enough to satisfy your cricket-crazy passion.

First, sign up on the website to get access to the API:

https://www.cricapi.com/

You should be able to see your API Key once you are logged in:

Save this key as it will be really important for our chatbot. Next, open your actions.py file and update it with the following code:

Python Code:

Fill in the API_KEY with the one you got from CricAPI and you should be good to go. Now, you can again try talking to your chatbot. This time, be prepared to be amazed.

Open a new terminal and start your action server:

make action-server

This starts the action server, which runs the custom actions defined in actions.py and keeps working in the background for us. Now, restart the chatbot in the command line:
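If the bot later reports that the action server could not be reached, a quick first check is whether anything is listening on port 5055 at all. This small helper is a generic TCP check, not part of Rasa. A listening port is not the whole story, though: the action endpoint in endpoints.yml must include the /webhook path (http://localhost:5055/webhook), and pointing it at the bare port can produce a 404.

```python
import socket


def is_listening(host="localhost", port=5055, timeout=2.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, or host not resolvable
        return False


if __name__ == "__main__":
    print("action server reachable:", is_listening(port=5055))
```

With `make action-server` running in another terminal, this should print True.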

make cmdline

And this time, it should give you some IPL news when asked. Isn’t that awesome? We have already built a complete chatbot without doing any complex steps!

Bringing the Chatbot to Life (Integrating Rasa and Slack)

So we have the chatbot ready. It’s time to deploy it and integrate it into Slack, as I promised at the start of this article. Fortunately for us, Rasa handles most of the deployment work on its own.

Note: You need to have a workspace in Slack before proceeding further. If you do not have one, then you can refer to this.

Creating a Slack Application

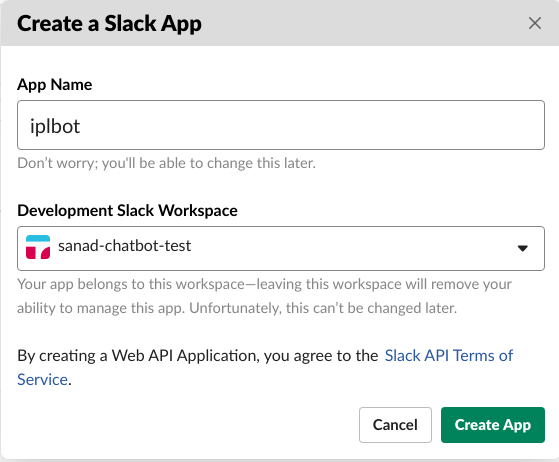

Now that we have a workspace to experiment with, we need an application to attach our bot to. Create the app at the link below:

https://api.slack.com/apps

1. Click on “Create App”, give a name to the app, and select your workspace:

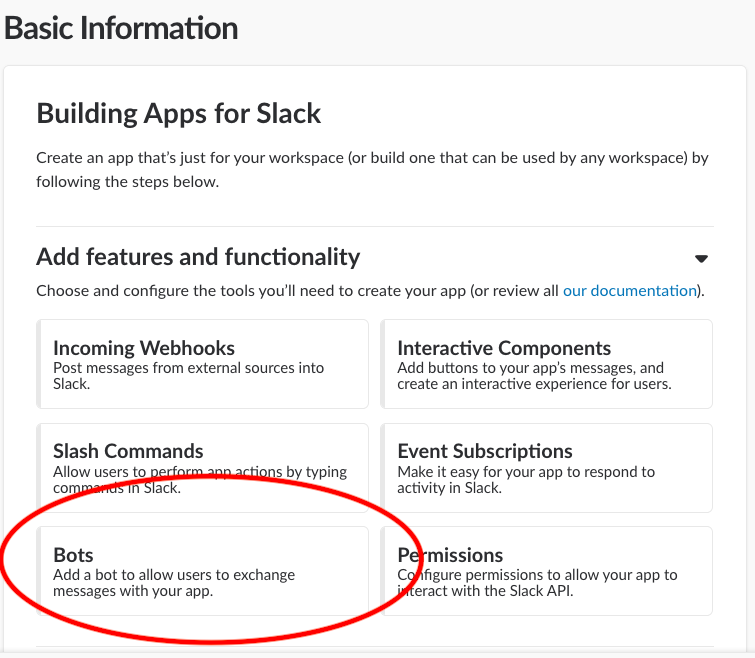

This will redirect you to your app dashboard. From there, you can select the “Bots” option:

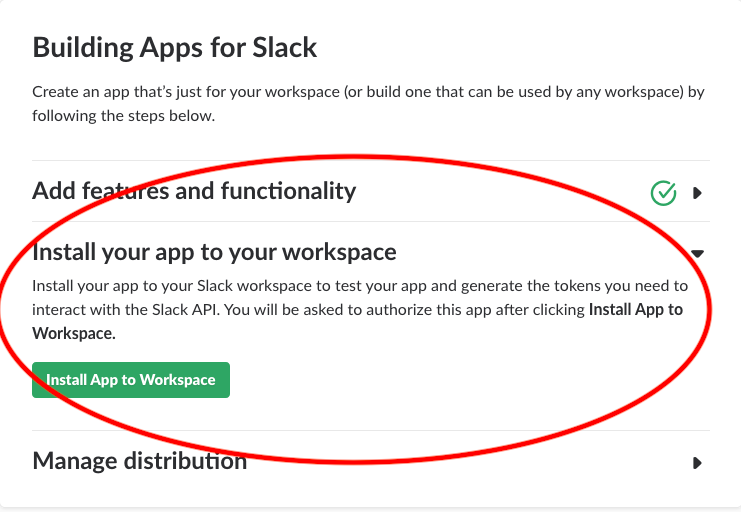

2. Click “Add a Bot User” –> Give a name to your bot. In my case, I have named it “iplbot”. Now, we need to add it to our workspace so we can chat with it! Go back to the above app dashboard and scroll down to find the “Install App to Workspace” option:

Once you do that, Slack will ask you to “authorize” the application. Go ahead and accept the authorization.

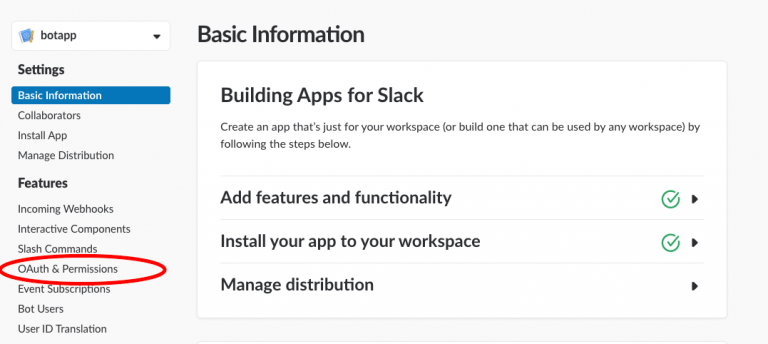

3. Before we can connect any external program to our Slack bot, we need an “auth token” to authenticate that connection. Go back to the “app dashboard” and select the “OAuth & Permissions” option:

4. This will open the permission settings of the app. Copy the “Bot User OAuth Access Token” and save it (I have hidden mine in the screenshot for security reasons). This token is what lets Rasa connect to our chatbot in Slack.

Installing Ngrok

Our work isn’t over yet. We need another useful tool to deploy our chatbot to Slack. That’s ngrok and you can use the following link to download it:

https://ngrok.com/download

We are now one step away from deploying our own chatbot! Exciting times await us in the next section.

Pushing the Chatbot to Slack

Only a handful of steps are needed to get this done, as Rasa takes care of everything else behind the scenes.

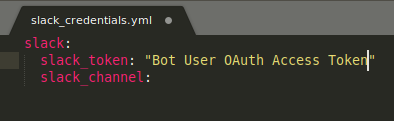

- Open your slack_credentials.yml file and paste the “Bot User OAuth Access Token” in place of the Slack token:

- Go to a new terminal and start the action-server:

make action-server

- You’ll see that the server runs on port 5055, so let’s expose this port with ngrok. Open another terminal and type the following:

ngrok http 5055

This will give an output like the below image:

The highlighted link is a public URL that ngrok forwards to port 5055 on your computer. This is what ngrok does: it exposes programs running locally on your computer to the internet. In a way, it is a shortcut for deploying your app to a cloud service.

- Open your endpoints.yml file and replace “http://localhost:5055/webhook” with the above ngrok URL followed by /webhook (the ngrok URL already forwards to port 5055, so no port number is needed):

your_ngrok_url/webhook

- Deploy the Rasa chatbot using the following command:

python -m rasa_core.run -d models/current/dialogue -u models/current/nlu --port 5002 --connector slack --credentials slack_credentials.yml --endpoints endpoints.yml

You will get a message like this:

Notice that the Rasa Core server is running at port 5002.

- Now, expose port 5002 to the internet with a second ngrok tunnel, in a new terminal:

ngrok http 5002

- Go to your app dashboard on Slack, click on Event Subscriptions, and then on the “Enable Event Subscriptions” button. Paste the ngrok URL of your Rasa Core server (the second tunnel, forwarding port 5002) into the Request URL field in the following format, replacing the ngrok part with your own ngrok URL:

your_ngrok_url/webhooks/slack/webhook

- Under the Subscribe to Bot Events, click on the Add Bot User Event button. It will reveal a text field and a list of events. You can enter terms into this field to search for events you want your bot to respond to. Here’s a list of events that I suggest adding:

Once you’ve added the events, click the Save Changes button at the bottom of the screen.
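Because the two tunnels are easy to mix up, here is a small sketch that derives both URLs and renders the two YAML files used in this section. It assumes the rasa_core Slack connector's `slack_token` key in slack_credentials.yml and the `action_endpoint` section of endpoints.yml; the helper functions themselves are hypothetical, and the YAML is written as plain strings to avoid extra dependencies.

```python
def action_endpoint_yaml(action_ngrok_url):
    """endpoints.yml: Rasa Core calls the action server at <tunnel>/webhook."""
    return 'action_endpoint:\n  url: "{}/webhook"\n'.format(action_ngrok_url.rstrip("/"))


def slack_credentials_yaml(bot_token):
    """slack_credentials.yml for the Slack connector (Bot User OAuth Access Token)."""
    return 'slack:\n  slack_token: "{}"\n'.format(bot_token)


def slack_request_url(core_ngrok_url):
    """Request URL for Slack's Event Subscriptions page."""
    return core_ngrok_url.rstrip("/") + "/webhooks/slack/webhook"
```

The tunnel forwarding port 5055 (the action server) goes into endpoints.yml; the tunnel forwarding port 5002 (Rasa Core) goes into Slack's Request URL.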

Now you can just refresh your Slack page and start chatting right away with your bot! Here’s a conversation with my chatbot:

Where should you go from here?

You’ll find the links below useful if you are looking for similar challenges. I have built a Zomato-like chatbot for a restaurant search problem using both Rasa Core and Rasa NLU models. I will be teaching this in much more detail in our course on Natural Language Processing.

The links to the course are below for your reference:

- Certified Course: Natural Language Processing (NLP) using Python

- Certified Program: NLP for Beginners

- The Ultimate AI & ML BlackBelt Program

End Notes

I would love to see different approaches and techniques from our community. Try different pipelines in Rasa NLU, explore more policies in Rasa Core, fine-tune those models, check out what other features CricAPI provides, and so on. There are so many things you can try! Don’t stop here; go on and experiment.

Feel free to discuss and provide your feedback in the comments section below. The full code for my project is available in the complete_version folder of the iplbot GitHub repository.

You should also check out these two articles on building chatbots:

Thanks, Sanad ...!! That's really a Great Article Which actually includes all the information about the Package and the Application deployment as well

Hey Dheeraj, Glad that you liked it! My goal was to cover everything that involves building a chatbot end to end, including how would one deploy something like that.

Hi Rizvi, Nice Article!!! How about chat responding with the ongoing match score??

Thanks Rahul, You can actually do that, I encourage you to go ahead and explore CricAPI's other APIs. They even provide detailed summary of every match and player stats and what not!

Hi Sanad, I was going through codes residing in all the files and wondering the conversation flow between * current_matches - action_match_news Observed that action_match_news is missing in templates of domain.yml then how does the flow understand that for "action_match_news" the file named actions.py needs to be called?

Hey Rahul, 1. action_match_news is actually present in the domain.yml file. 2. It is read by Rasa Core and since it’s a custom action (one that is not present in Rasa Core by default), it checks it in the actions.py file. This is the behavior of Rasa Core itself. 3. You can define multiple custom actions like these in the actions.py file and add them in the domain.yml and Rasa would pick them up 🙂

Quite Comprehensive! I am gonna try this. :)

Hey Pankaj, Glad that you liked it! :D

Wonderful chatbot. When I tried this on windows, I had to make few changes. I had got an SSL error as mentioned https://forum.rasa.com/t/rasa-core-sdk-not-working/9228 Solution that I got was to have endpoints.yml as below: action_endpoint: url: http://localhost:5055/webhook core_endpoint: url: http://localhost:5005 Also, there was no key "winning_team" from results from cricapi. So changed to "toss_winner_team" Rest worked fine. Thanks.

Hey Yogesh, Thanks for the feedback and glad that you could make it work on Windows, I will verify this and update in the article. If you are using the "/matches" endpoint of cricapi then you would get the "winning_team" key in the results.. Do let me know if you found anything else interesting! Thanks!

Hi Sanad, I am able to get the Chatbot done via command promt. Almost there with Slack interface but getting error as action server not running, got couple of questions here: Background: 1. After running ngrok http 5055, i replaced the ngrok url in endpoints.yml (keeping port 5055) 2. Later you ran rasa core on 5002 port which means rasa core is running on 5002 now ( not on 5055) ,which is then followed by ngrok http 5002 3. ngrok http 5002 now gives an another ngrok url For my Slack Interface-Final GUI Chatbot: Question 1: Now in my endpoints.yml which ngrok url and what port number should be present? point 1. ngrok url with 5055 port OR point 3. ngrokurl with port 5002 Question 2 : (similar to question 1) what should go in event subscription-enable events? point1 ngrok url or point3 ngrok url ?

Follow the flow as given in the article. Here are the answers: Q1: point 1. ngrok url with 5055 Q2: point3 ngrok url

Hey For endpoints.yml you have to give ngrok url of 5055 as endpoints file directs to the action endpoint. In case of an event subscription, you have to give the ngrok url of 5002.

The output for the section where CricAPI is used to get score, I get the 404 from the action-server. Can you help me find the issue?

Did you use the correct url of the API and the ApiKey?

Hi, Sanad, great article. Thank you for the info. I am currently trying the 'Practice version' but once I launch the action server and then cmdline, when I give my first Input ie, Hi or Hello there is an error that I see currently, make cmdline python -m rasa_core.run -d models/current/dialogue -u models/current/nlu --endpoints endpoints.yml C:\Python3.6\lib\site-packages\h5py\__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`. from ._conv import register_converters as _register_converters 2019-05-14 08:39:58 INFO root - Rasa process starting 2019-05-14 08:39:58 INFO rasa_nlu.components - Added 'nlp_spacy' to component cache. Key 'nlp_spacy-en'. 2019-05-14 08:40:04.535703: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 2019-05-14 08:40:05 INFO root - Rasa Core server is up and running on http://localhost:5005 Bot loaded. Type a message and press enter (use '/stop' to exit): Your input -> hi 127.0.0.1 - - [2019-05-14 08:40:10] "POST /webhooks/rest/webhook?stream=true&token= HTTP/1.1" 200 190 0.180946 Exception in thread Thread-1: Traceback (most recent call last): File "C:\Python3.6\lib\threading.py", line 916, in _bootstrap_inner self.run() File "C:\Python3.6\lib\threading.py", line 864, in run self._target(*self._args, **self._kwargs) File "C:\Python3.6\lib\site-packages\rasa_core\channels\console.py", line 114, in record_messages for response in bot_responses: File "C:\Python3.6\lib\site-packages\rasa_core\channels\console.py", line 71, in send_message_receive_stream with requests.post(url, json=payload, stream=True) as r: AttributeError: __enter__ Any suggestions on how to fix it please ? thanks Raj

Hey Raj, I haven't faced this problem so far, on searching it seems to be a python issue and not Rasa issue. I tried recreating this error but it works fine for me. Hope you found a solution for it! :) Sanad

I am having this issue while following your instruction for both complete_version and practice_version after using API key in actions.py: Your input -> Hi Hey! What can I do for you? 127.0.0.1 - - [2019-05-15 12:17:40] "POST /webhooks/rest/webhook?stream=true&token= HTTP/1.1" 200 227 0.156891 Your input -> i want IPL updates 2019-05-15 12:17:55 ERROR rasa_core.actions.action - Failed to run custom action 'action_match_news'. Action server responded with a non 200 status code of 404. Make sure your action server properly runs actions and returns a 200 once the action is executed. Error: 404 Client Error: NOT FOUND for url: http://localhost:5055/ 2019-05-15 12:17:55 ERROR rasa_core.processor - Encountered an exception while running action 'action_match_news'. Bot will continue, but the actions events are lost. Make sure to fix the exception in your custom code. Did that help you? 127.0.0.1 - - [2019-05-15 12:17:55] "POST /webhooks/rest/webhook?stream=true&token= HTTP/1.1" 200 218 0. I would really appreciate if you could guide me to resolve this issue.

Update your endpoints.yml file with the url of ngrok for action-server. You can do this by following the steps given in "Pushing your chatbot to Slack"

Hi Sanjay, One of the possible solution to your query is look at the post by Yogesh Kulkarni on 3rd May, if your action program tries to fetch the "winning_team" but in crickapi its using “toss_winner_team” , all of a sudden i faced this issues yesterday but when i started using “toss_winner_team” in my action program file then i was able to proceed, thanks

Hi. I think I finally found a good tutorial about rasa bot.. Just got one question. I need to build an external app to connect to this bot. How can I connect my web application through api with this ? Is it possible ? And does it handle multiple simultaneous conversation ? thanks for your help

Hey Kassim, Thanks for your feedback!. Here is how you can connect your backend to Rasa: https://rasa.com/docs/faq/#how-can-i-connect-rasa-to-my-backend-enterprise-systems I think it does, depends upon how you have deployed it in the backed.

This only worked because both of your action-server and rasa core server are running on the same laptop but this won't work if you have the servers running on different machines (say one of them/both running on a cloud). In the case of a cloud, you do not get the benefit of localhost. So, I'd suggest stick to the ngrok approach as there you are actually deploying both of your servers to the internet so you can access them from anywhere.

Hey can you explain your actions.py file a little more cause I'm confused how I will write my own actions.py if I want to perform a completely different action.

Hey Tarun, You can follow this link for info: https://rasa.com/docs/rasa/core/actions/

I tried running the files exactly as described in the tutorials. I am able to enter text on slack and see a 200 message both on ngrok and on the terminal but no response on the bot. I get the following error after I run the rasa_core.run command with the --debug flag :- 'rasa_core.processor - Encountered an exception while running action 'utter_greet'. Bot will continue, but the actions events are lost. Make sure to fix the exception in your custom code. 2019-05-28 17:11:40 DEBUG rasa_core.processor - HTTPSConnectionPool(host='slack.com', port=443): Max retries exceeded with url: /api/chat.postMessage (Caused by SSLError(SSLError("bad handshake: Error([('SSL routines', 'tls_process_server_certificate', 'certificate verify failed')],)",),))' It appears that the error is with slackclient, which is unable to pushthe output of the bot to slack. I am running an ngrok server on port 5004 , which I also mention in the rasa_core.run script using --port flag. I have the custom action running on my localhost:5005. Any ideas on how to get this bot running?

Hey Sumit, Did you try updating the endpoints.yml file with the ngrok url of action-server?

Thanks for your efforts to create this Article and sharing with us. I am also trying to build Chatbot with Rasa and i am struggling in actions.py file where i don't want to call an http API, just i want to extract data from csv and show it on browser or any csv software. I dont know what are all methods ro classes available in tracker ,dispatcher or things we used in actions file so that i can see which one does what and accordingly can use that. Can you please suggest where i can get in depth detail about anything we want to do in actions file. Thanks in advance.

Hey Tarun, Thanks for your feedback! I'd like to point out that the best place to learn about rasa is the documentation itself. Actions is specifically dealt by Rasa Core so read the documentation in greater detail. Here are some links that might help you: 1. Trackers documentation: https://rasa.com/docs/rasa/api/tracker/ 2. Also read a bit about Events: https://rasa.com/docs/rasa/api/events/#events

Hi Sanad, Great article and appreciate you doing this for us! I'm a newbie and trying to build one for a website of retail products. Do you have any suggestions on how I can use/mold your idea to accomplish my goal. Any help or suggestions will be great!

Nice Blog article i found this very useful but am trying to do something similar but i don't have any API call to make can ,so i was thinking can i still use your repo but leave the action.py

Thank you for this blog article hi i followed your steps strictly but my but does not respond on slack but my Event Subscriptions is verified .

Hey, Did you use the correct URL?

After trying the below command, python -m rasa_core.visualize -d domain.yml -s data/stories.md -o graph.html I'm getting error as DLL File "C:\Users\Dell\AppData\Local\Programs\Python\Python36\lib\site-packages\rasa_core\policies\keras_policy.py", line 5, in import tensorflow as tf File "C:\Users\Dell\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\__init__.py", line 24, in from tensorflow.python import pywrap_tensorflow # pylint: disable=unused-import File "C:\Users\Dell\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\python\__init__.py", line 59, in from tensorflow.core.framework.graph_pb2 import * File "C:\Users\Dell\AppData\Local\Programs\Python\Python36\lib\site-packages\tensorflow\core\framework\graph_pb2.py", line 6, in from google.protobuf import descriptor as _descriptor File "C:\Users\Dell\AppData\Local\Programs\Python\Python36\lib\site-packages\google\protobuf\descriptor.py", line 47, in from google.protobuf.pyext import _message ImportError: DLL load failed: The specified procedure could not be found. I even tried to import tensorflow in python there also I'm getting import Error Dll load failed. I have installed Microsoft c++ build tools full still not able to resolve this issue. Your help is much appreciated.

Hey Muthu, I did not face such issues while installing on windows

Hi Sanad ! Thanks for this very good tutorial. I am facing the same problem as Yogesh (in his comment of May 3, 2019) on Windows. I get the following error when running the bot in command line : Action server responded with a non 200 status code of 404. Make sure your action server properly runs actions and returns a 200 once the action is executed. Error: 404 Client Error: NOT FOUND for url: http://localhost:5055/ The update of the endpoints.yml that did the trick for Yogesh is not working on me (I'm using the correct API and my token is working fine) Any thoughts on this? Cheers

I am using Ubuntu. I got the following error:

python -m rasa_core.run -d models/current/dialogue -u models/current/nlu --endpoints endpoints.yml
/home/ivl/RasaChatBot/myenv/lib/python3.6/site-packages/h5py/__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.
  from ._conv import register_converters as _register_converters
2019-07-18 15:02:34 INFO root - Rasa process starting
2019-07-18 15:02:34 INFO rasa_nlu.components - Added 'nlp_spacy' to component cache. Key 'nlp_spacy-en'.
2019-07-18 15:02:43 INFO root - Rasa Core server is up and running on http://localhost:5005
Bot loaded. Type a message and press enter (use '/stop' to exit):
Your input -> Hi
Hey! What can I do for you?
127.0.0.1 - - [2019-07-18 15:02:45] "POST /webhooks/rest/webhook?stream=true&token= HTTP/1.1" 200 195 0.169617
Your input -> Which ipl match is next
2019-07-18 15:02:55 ERROR rasa_core.actions.action - Failed to run custom action 'action_match_news'. Action server responded with a non 200 status code of 404. Make sure your action server properly runs actions and returns a 200 once the action is executed. Error: 404 Client Error: NOT FOUND for url: http://localhost:5055/
2019-07-18 15:02:55 ERROR rasa_core.processor - Encountered an exception while running action 'action_match_news'. Bot will continue, but the actions events are lost. Make sure to fix the exception in your custom code.
Did that help you?
127.0.0.1 - - [2019-07-18 15:02:55] "POST /webhooks/rest/webhook?stream=true&token= HTTP/1.1" 200 186 0.048663

Hi Sanad! Thanks for this very good tutorial. I am facing the same problem as Yogesh (in his comment of May 3, 2019) on Windows. I get an action server error when running the bot from the command line. The update to endpoints.yml that did the trick for Yogesh is not working for me (I'm using the correct API and my token is working fine). Any thoughts on this? Cheers

Hi again, solved my problem. There was a typo in my endpoints.yml file.

Hey Denis, I'm glad that you were able to solve your problem! Typos happen to all of us. Please do help out others in the community if they have further questions about Windows, since my personal system is Linux!
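Readers in this thread traced the action server 404 to endpoints.yml: one found a typo, and another found a missing /webhook suffix. Below is a minimal sketch that checks the url value under action_endpoint. It assumes the custom action server listens on port 5055 and serves actions at /webhook. That assumption matches both the 404 for http://localhost:5055/ in the logs above and the fix reported further down the thread.

```python
from urllib.parse import urlparse


def action_endpoint_problems(url: str, expected_port: int = 5055) -> list:
    """List problems with an action_endpoint URL; an empty list means it looks fine."""
    problems = []
    parsed = urlparse(url.strip().strip("\"'"))  # tolerate quotes copied from the YAML file
    if parsed.scheme not in ("http", "https"):
        problems.append(f"scheme should be http or https, got {parsed.scheme!r}")
    if not parsed.hostname:
        problems.append("missing host name, e.g. localhost")
    try:
        port = parsed.port
    except ValueError:  # raised for non-numeric or out-of-range ports
        port = None
    if port != expected_port:
        problems.append(f"port should be {expected_port}, got {port}")
    if parsed.path.rstrip("/") != "/webhook":
        problems.append(f"path should be /webhook, got {parsed.path or '/'}")
    return problems
```

For example, action_endpoint_problems("http://localhost:5055/") reports only the missing /webhook path.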

Hi Denis, I'm working on Windows. What is the replacement for the make command on Windows? Thanks in advance.
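One way to replace make on Windows is a small Python script. Here is a minimal sketch. Only the two commands quoted in this thread are filled in. The target names ("train-nlu", "run") and the file name make.py are hypothetical, so mirror whatever targets your own Makefile defines and paste in their commands. Using sys.executable runs each command with the Python of the active environment.

```python
import subprocess
import sys

# Hypothetical target names; the argument lists are the commands quoted in this thread.
TARGETS = {
    "train-nlu": [
        "-m", "rasa_nlu.train", "-c", "nlu_config.yml", "--data", "data/nlu_data.md",
        "-o", "models", "--fixed_model_name", "nlu", "--project", "current", "--verbose",
    ],
    "run": [
        "-m", "rasa_core.run", "-d", "models/current/dialogue",
        "-u", "models/current/nlu", "--endpoints", "endpoints.yml",
    ],
}


def build_command(target: str) -> list:
    """Return the full argv for a target, run with the current Python interpreter."""
    if target not in TARGETS:
        raise KeyError(f"unknown target {target!r}; choose from {sorted(TARGETS)}")
    return [sys.executable] + TARGETS[target]


def run_target(target: str) -> None:
    """Run a target; check=True raises CalledProcessError if the command fails."""
    subprocess.run(build_command(target), check=True)


if __name__ == "__main__":
    if len(sys.argv) == 2:
        run_target(sys.argv[1])
    else:
        print("usage: python make.py <target>   targets:", ", ".join(sorted(TARGETS)))
```

From a command prompt in the project folder, python make.py train-nlu would then play the role of make train-nlu.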

Hi, I see that you have given the corresponding commands to run on the Windows command line. Thanks, I could not find them anywhere else. What I would like to understand is how you converted the commands in the Rasa docs into Windows command-line commands. For example, Rasa has a validate command, rasa data validate. How can I run the corresponding command on Windows?
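Rasa's own commands, such as rasa data validate, are normally typed the same way in cmd.exe once Rasa is installed in the active environment. The translation work is usually in shell syntax instead. Here is a minimal sketch, assuming the only Unix-specific syntax is the trailing backslash used for line continuation (cmd.exe uses ^ for that). It collapses each continued command onto a single line, which cmd.exe accepts as-is. Environment variables ($VAR versus %VAR%) and quoting differences are deliberately not handled.

```python
def to_single_lines(script: str) -> list:
    """Collapse backslash-continued Unix commands into one line per command."""
    commands, current = [], []
    for raw in script.splitlines():
        line = raw.strip()
        if not line:
            continue
        continued = line.endswith("\\")
        if continued:
            line = line[:-1].rstrip()  # drop the continuation backslash
        if line:
            current.append(line)
        if not continued and current:
            # A line without a trailing backslash ends the current command.
            commands.append(" ".join(current))
            current = []
    if current:
        commands.append(" ".join(current))
    return commands
```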

I got the following exceptions (2:36 PM 7/19/2019):

\iplbot\practice_version>python -m spacy download en
Requirement already satisfied: en_core_web_sm==2.0.0 from https://github.com/explosion/spacy-models/releases/download/en_core_web_sm-2.0.0/en_core_web_sm-2.0.0.tar.gz#egg=en_core_web_sm==2.0.0 in c:\users\myusername\.conda\envs\ra20190722sa\lib\site-packages (2.0.0)
You do not have sufficient privilege to perform this operation.
Linking successful
C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\en_core_web_sm --> C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\spacy\data\en
You can now load the model via spacy.load('en')

and

\iplbot\practice_version>python -m rasa_nlu.train -c nlu_config.yml --data data/nlu_data.md -o models --fixed_model_name nlu --project current --verbose
C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\h5py\__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.
  from ._conv import register_converters as _register_converters
2019-07-22 14:07:56 INFO rasa_nlu.utils.spacy_utils - Trying to load spacy model with name 'en'
Traceback (most recent call last):
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\runpy.py", line 193, in _run_module_as_main
    "__main__", mod_spec)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\rasa_nlu\train.py", line 184, in
    num_threads=cmdline_args.num_threads)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\rasa_nlu\train.py", line 148, in do_train
    trainer = Trainer(cfg, component_builder)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\rasa_nlu\model.py", line 155, in __init__
    self.pipeline = self._build_pipeline(cfg, component_builder)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\rasa_nlu\model.py", line 166, in _build_pipeline
    component_name, cfg)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\rasa_nlu\components.py", line 441, in create_component
    cfg)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\rasa_nlu\registry.py", line 142, in create_component_by_name
    return component_clz.create(config)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\rasa_nlu\utils\spacy_utils.py", line 73, in create
    nlp = spacy.load(spacy_model_name, parser=False)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\spacy\__init__.py", line 21, in load
    return util.load_model(name, **overrides)
  File "C:\Users\myusername\.conda\envs\ra20190722sa\lib\site-packages\spacy\util.py", line 119, in load_model
    raise IOError(Errors.E050.format(name=name))
OSError: [E050] Can't find model 'en'. It doesn't seem to be a shortcut link, a Python package or a valid path to a data directory.

Run it from a command prompt opened with "Run as administrator".
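The download output above prints "You do not have sufficient privilege to perform this operation" right before "Linking successful". That suggests the 'en' shortcut link may not actually have been created, which would match the later "Can't find model 'en'" error. Here is a minimal sketch for checking this, assuming spaCy 2.x, where python -m spacy download en installs the en_core_web_sm package and links it at spacy/data/en (the path shown in the output above). It only inspects the file system and does not import spaCy.

```python
import importlib.util
import os


def spacy_link_status(link_name: str = "en", package: str = "en_core_web_sm") -> dict:
    """Report whether spaCy, the model package, and the shortcut link are present."""
    spacy_spec = importlib.util.find_spec("spacy")
    status = {
        "spacy_installed": spacy_spec is not None,
        "package_installed": importlib.util.find_spec(package) is not None,
        "link_exists": False,
    }
    if spacy_spec is not None and spacy_spec.origin:
        # spacy/__init__.py sits in the package directory; the link lives in its data/ folder.
        link_path = os.path.join(os.path.dirname(spacy_spec.origin), "data", link_name)
        status["link_exists"] = os.path.exists(link_path)
    return status


if __name__ == "__main__":
    print(spacy_link_status())
```

If package_installed is True but link_exists is False, re-running the download from an administrator prompt, as suggested above, is the remedy this thread points to.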

I have implemented the Rasa chatbot on Windows 10. It works fine from the command prompt. Can anyone help me use it as an API? How can I test the chatbot through Postman using GET and POST requests? I have the action server hosted on port 5055 and the Rasa Core server on 5005.
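Here is a minimal sketch of calling the bot over HTTP, assuming the Rasa Core server runs on localhost:5005 with the REST input channel enabled. The request logs quoted in this thread show the command-line mode itself posting to /webhooks/rest/webhook. The JSON body carries a "sender" ID and the "message" text, and the reply is a list of bot messages. In Postman, the equivalent is a POST to the same URL with a raw JSON body.

```python
import json
import urllib.request

DEFAULT_URL = "http://localhost:5005/webhooks/rest/webhook"


def build_request(sender: str, message: str, url: str = DEFAULT_URL) -> urllib.request.Request:
    """Build a POST request carrying one user message as JSON."""
    body = json.dumps({"sender": sender, "message": message}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def send_message(sender: str, message: str, url: str = DEFAULT_URL, timeout: float = 30.0) -> list:
    """Send one message and return the decoded list of bot replies."""
    with urllib.request.urlopen(build_request(sender, message, url), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With the server running, send_message("tester", "Hi") should return the bot's replies as dictionaries that include the reply text.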

Hi Sanad, great article! I also faced the endpoint issue, and suffixing the URL with "/webhook" in the yml file solved it for me after some struggle. However, I have some other observations and questions, and I would be grateful if you could shed some light on them:
1) I found it to be very slow. Even my "Hi" gets a reply from the bot only after some anxious moments, although everything is running locally on my Windows machine, which has good specs.
2) I am assuming that Rasa Core, i.e. this command, will run on the backend server:
python -m rasa_core.run -d models/current/dialogue -u models/current/nlu --endpoints endpoints.yml --enable_api
and that multiple users will initiate chats with it. How would that work? I am not able to put all the moving parts together in a multi-user context. If I package it as a website plugin, how would it work with multiple chat sessions?
Thanks again for such a wonderful article.
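On the multi-user question: Rasa keeps one conversation tracker per sender ID, so separate website visitors map to separate sender values. The sketch below fires one message per simulated user at the same time and times each reply, which gives a rough view of both latency and concurrent use. The send argument is any callable taking (sender, message), for example a small function that POSTs to the REST webhook. It is passed in so the timing logic can be tried without a running server.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def time_concurrent_users(send, senders, message="hi", max_workers=8):
    """Send one message per sender concurrently; return {sender: seconds taken}."""
    def timed(sender):
        start = time.perf_counter()
        send(sender, message)
        return sender, time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(timed, senders))
```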

Hi! First, I salute you for this work. I am using your approach to build a Slack chatbot for our workspace, and now I am looking for a way to read some metadata from Slack, such as the message sender and date. For now I don't know if that is possible, so any suggestions are welcome.
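Here is a minimal sketch of where that metadata lives. It assumes a Slack Events API "event_callback" payload carrying a message event, where event.user is the Slack user ID, event.channel is the channel ID, and event.ts is a string of Unix epoch seconds. Whether rasa_core's Slack connector forwards these fields to your actions depends on the version. The connector appears to use the Slack user ID as the conversation's sender ID, readable in custom actions as tracker.sender_id, but reading other fields such as the timestamp may require customizing the connector.

```python
from datetime import datetime, timezone


def slack_message_metadata(payload: dict) -> dict:
    """Extract sender, channel, text, and send time from a Slack message event payload."""
    event = payload.get("event", {})
    ts = event.get("ts")
    return {
        "user": event.get("user"),
        "channel": event.get("channel"),
        "text": event.get("text"),
        # Slack timestamps are epoch seconds with a fractional part, e.g. "1563458400.000200".
        "sent_at": datetime.fromtimestamp(float(ts), tz=timezone.utc) if ts else None,
    }
```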

Thanks Sanad, it's a great article. It was a great help for me as a beginner in Rasa. I followed it for the Slack integration with my bot and it's working fine. Thanks a lot.

Hi Rizvi, thanks a lot for this nice article. I went through many, but found this one the best of all. I need help: while running

python -m rasa_nlu.train -c nlu_config.yml --data data/nlu_data.md -o models --fixed_model_name nlu --project current --verbose

I'm getting the error "AttributeError: type object 'spacy.syntax.nn_parser.array' has no attribute '__reduce_cython__'". Could you please let me know how to resolve it? Thanks, with best regards, Akshay. Please find the error description below.

ERROR DESCRIPTION

(chatbot) C:\Users\akshay.jain23\iplbot\practice_version>python -m rasa_nlu.train -c nlu_config.yml --data data/nlu_data.md -o models --fixed_model_name nlu --project current --verbose
C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\site-packages\h5py\__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.
  from ._conv import register_converters as _register_converters
Traceback (most recent call last):
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\runpy.py", line 193, in _run_module_as_main
    "__main__", mod_spec)
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\runpy.py", line 85, in _run_code
    exec(code, run_globals)
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\site-packages\rasa_nlu\train.py", line 184, in
    num_threads=cmdline_args.num_threads)
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\site-packages\rasa_nlu\train.py", line 148, in do_train
    trainer = Trainer(cfg, component_builder)
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\site-packages\rasa_nlu\model.py", line 152, in __init__
    components.validate_requirements(cfg.component_names)
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\site-packages\rasa_nlu\components.py", line 56, in validate_requirements
    component_class.required_packages()))
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\site-packages\rasa_nlu\components.py", line 39, in find_unavailable_packages
    importlib.import_module(package)
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\importlib\__init__.py", line 126, in import_module
    return _bootstrap._gcd_import(name[level:], package, level)
  File "", line 994, in _gcd_import
  File "", line 971, in _find_and_load
  File "", line 955, in _find_and_load_unlocked
  File "", line 665, in _load_unlocked
  File "", line 678, in exec_module
  File "", line 219, in _call_with_frames_removed
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\site-packages\spacy\__init__.py", line 12, in
    from . import pipeline
  File "C:\Users\akshay.jain23\AppData\Local\Continuum\anaconda3\envs\chatbot\lib\site-packages\spacy\pipeline\__init__.py", line 4, in
    from .pipes import Tagger, DependencyParser, EntityRecognizer, EntityLinker
  File "pipes.pyx", line 1, in init spacy.pipeline.pipes
  File "stringsource", line 105, in init spacy.syntax.nn_parser
AttributeError: type object 'spacy.syntax.nn_parser.array' has no attribute '__reduce_cython__'
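An AttributeError mentioning __reduce_cython__ is commonly associated with compiled packages that were built against mismatched versions of each other, such as spaCy and its Cython dependencies. Comparing installed versions is therefore a reasonable first step. Here is a minimal sketch that prints them. It requires Python 3.8+ for importlib.metadata, and the package list is an assumption; extend it as needed.

```python
from importlib import metadata


def installed_versions(distributions):
    """Map each distribution name to its installed version, or None if it is missing."""
    versions = {}
    for name in distributions:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions(["spacy", "thinc", "cymem", "preshed", "rasa_nlu"]).items():
        print(f"{name}: {version or 'not installed'}")
```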
