A Comprehensive Guide to Build your own Language Model in Python!

Overview

- Language models are a crucial component in the Natural Language Processing (NLP) journey

- These language models power all the popular NLP applications we are familiar with – Google Assistant, Siri, Amazon’s Alexa, etc.

- We will go from basic language models to advanced ones in Python here

Introduction

We tend to look through language and not realize how much power language has.

Language is such a powerful medium of communication. We have the ability to build projects from scratch using the nuances of language. It’s what drew me to Natural Language Processing (NLP) in the first place.

I’m amazed by the vast array of tasks I can perform with NLP – text summarization, generating completely new pieces of text, predicting what word comes next (Google’s autofill), among others. Do you know what is common among all these NLP tasks?

They are all powered by language models! Honestly, these language models are a crucial first step for most of the advanced NLP tasks.

In this article, we will cover the length and breadth of language models. We will begin from basic language models that can be created with a few lines of Python code and move to the State-of-the-Art language models that are trained using humongous data and are being currently used by the likes of Google, Amazon, and Facebook, among others.

So, tighten your seatbelts and brush up your linguistic skills – we are heading into the wonderful world of Natural Language Processing!

Are you new to NLP? Confused about where to begin? You should check out this comprehensive course designed by experts with decades of industry experience:

Table of contents

What is a Language Model in NLP?

“You shall know the nature of a word by the company it keeps.” – John Rupert Firth

A language model learns to predict the probability of a sequence of words. But why do we need to learn the probability of words? Let’s understand that with an example.

I’m sure you have used Google Translate at some point. We all use it to translate one language to another for varying reasons. This is an example of a popular NLP application called Machine Translation.

In Machine Translation, you take in a bunch of words from a language and convert these words into another language. Now, there can be many potential translations that a system might give you and you will want to compute the probability of each of these translations to understand which one is the most accurate.

In the above example, we know that the probability of the first sentence will be more than the second, right? That’s how we arrive at the right translation.

This ability to model the rules of a language as a probability gives great power for NLP related tasks. Language models are used in speech recognition, machine translation, part-of-speech tagging, parsing, Optical Character Recognition, handwriting recognition, information retrieval, and many other daily tasks.

Types of Language Models

There are primarily two types of Language Models:

Statistical Language Models

These models use traditional statistical techniques like N-grams, Hidden Markov Models (HMM) and certain linguistic rules to learn the probability distribution of words

Neural Language Models

These are new players in the NLP town and have surpassed the statistical language models in their effectiveness. They use different kinds of Neural Networks to model language

Now that you have a pretty good idea about Language Models, let’s start building one!

Building an N-gram Language Model

What are N-grams (unigram, bigram, trigrams)?

An N-gram is a sequence of N tokens (or words).

Let’s understand N-gram with an example. Consider the following sentence:

“I love reading blogs about data science on Analytics Vidhya.”

A 1-gram (or unigram) is a one-word sequence. For the above sentence, the unigrams would simply be: “I”, “love”, “reading”, “blogs”, “about”, “data”, “science”, “on”, “Analytics”, “Vidhya”.

What is Bigram Language Model?

A bigram language model is a type of statistical language model that predicts the probability of a word in a sequence based on the previous word. It considers pairs of consecutive words (bigrams) and estimates the likelihood of encountering a specific word given the preceding word in a text or sentence.

A 2-gram (or bigram) is a two-word sequence of words, like “I love”, “love reading”, or “Analytics Vidhya”. And a 3-gram (or trigram) is a three-word sequence of words like “I love reading”, “about data science” or “on Analytics Vidhya”.

Fairly straightforward stuff!

How do N-gram Language Models work?

An N-gram language model predicts the probability of a given N-gram within any sequence of words in the language. If we have a good N-gram model, we can predict p(w | h) – what is the probability of seeing the word w given a history of previous words h – where the history contains n-1 words.

We must estimate this probability to construct an N-gram model.

We compute this probability in two steps:

- Apply the chain rule of probability

- We then apply a very strong simplification assumption to allow us to compute p(w1…ws) in an easy manner

The chain rule of probability is:

p(w1...ws) = p(w1) . p(w2 | w1) . p(w3 | w1 w2) . p(w4 | w1 w2 w3) ..... p(wn | w1...wn-1)

So what is the chain rule? It tells us how to compute the joint probability of a sequence by using the conditional probability of a word given previous words.

But we do not have access to these conditional probabilities with complex conditions of up to n-1 words. So how do we proceed?

This is where we introduce a simplification assumption. We can assume for all conditions, that:

p(wk | w1...wk-1) = p(wk | wk-1)

Here, we approximate the history (the context) of the word wk by looking only at the last word of the context. This assumption is called the Markov assumption. (We used it here with a simplified context of length 1 – which corresponds to a bigram model – we could use larger fixed-sized histories in general).

Building a Basic Language Model

Now that we understand what an N-gram is, let’s build a basic language model using trigrams of the Reuters corpus. Reuters corpus is a collection of 10,788 news documents totaling 1.3 million words. We can build a language model in a few lines of code using the NLTK package:

Python Code:

The code above is pretty straightforward. We first split our text into trigrams with the help of NLTK and then calculate the frequency in which each combination of the trigrams occurs in the dataset.

We then use it to calculate probabilities of a word, given the previous two words. That’s essentially what gives us our Language Model!

Let’s make simple predictions with this language model. We will start with two simple words – “today the”. We want our model to tell us what will be the next word:

So we get predictions of all the possible words that can come next with their respective probabilities. Now, if we pick up the word “price” and again make a prediction for the words “the” and “price”:

If we keep following this process iteratively, we will soon have a coherent sentence! Here is a script to play around with generating a random piece of text using our n-gram model:

And here is some of the text generated by our model:

Pretty impressive! Even though the sentences feel slightly off (maybe because the Reuters dataset is mostly news), they are very coherent given the fact that we just created a model in 17 lines of Python code and a really small dataset.

This is the same underlying principle which the likes of Google, Alexa, and Apple use for language modeling.

Limitations of N-gram approach to Language Modeling

N-gram based language models do have a few drawbacks:

- The higher the N, the better is the model usually. But this leads to lots of computation overhead that requires large computation power in terms of RAM

- N-grams are a sparse representation of language. This is because we build the model based on the probability of words co-occurring. It will give zero probability to all the words that are not present in the training corpus

Building a Neural Language Model

“Deep Learning waves have lapped at the shores of computational linguistics for several years now, but 2015 seems like the year when the full force of the tsunami hit the major Natural Language Processing (NLP) conferences.” – Dr. Christopher D. Manning

Deep Learning has been shown to perform really well on many NLP tasks like Text Summarization, Machine Translation, etc. and since these tasks are essentially built upon Language Modeling, there has been a tremendous research effort with great results to use Neural Networks for Language Modeling.

We can essentially build two kinds of language models – character level and word level. And even under each category, we can have many subcategories based on the simple fact of how we are framing the learning problem. We will be taking the most straightforward approach – building a character-level language model.

Understanding the problem statement

Does the above text seem familiar? It’s the US Declaration of Independence! The dataset we will use is the text from this Declaration.

This is a historically important document because it was signed when the United States of America got independence from the British. I used this document as it covers a lot of different topics in a single space. It’s also the right size to experiment with because we are training a character-level language model which is comparatively more intensive to run as compared to a word-level language model.

The problem statement is to train a language model on the given text and then generate text given an input text in such a way that it looks straight out of this document and is grammatically correct and legible to read.

You can download the dataset from here. Let’s begin!

Import the libraries

Read the dataset

You can directly read the dataset as a string in Python:

Preprocessing the Text Data

We perform basic text preprocessing since this data does not have much noise. We lower case all the words to maintain uniformity and remove words with length less than 3:

Once the preprocessing is complete, it is time to create training sequences for the model.

Creating Sequences

The way this problem is modeled is we take in 30 characters as context and ask the model to predict the next character. Now, 30 is a number which I got by trial and error and you can experiment with it too. You essentially need enough characters in the input sequence that your model is able to get the context.

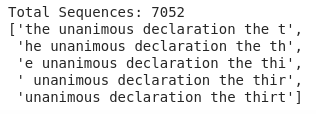

Let’s see how our training sequences look like:

Encoding Sequences

Once the sequences are generated, the next step is to encode each character. This would give us a sequence of numbers.

So now, we have sequences like this:

Create Training and Validation set

Once we are ready with our sequences, we split the data into training and validation splits. This is because while training, I want to keep a track of how good my language model is working with unseen data.

Model Building

Time to build our language model!

I have used the embedding layer of Keras to learn a 50 dimension embedding for each character. This helps the model in understanding complex relationships between characters. I have also used a GRU layer as the base model, which has 150 timesteps. Finally, a Dense layer is used with a softmax activation for prediction.

Inference

Once the model has finished training, we can generate text from the model given an input sequence using the below code:

Results

Let’s put our model to the test. In the video below, I have given different inputs to the model. Let’s see how it performs

Notice just how sensitive our language model is to the input text! Small changes like adding a space after “of” or “for” completely changes the probability of occurrence of the next characters because when we write space, we mean that a new word should start.

Additionally, when we do not give space, it tries to predict a word that will have these as starting characters (like “for” can mean “foreign”).

Also, note that almost none of the combinations predicted by the model exist in the original training data. So our model is actually building words based on its understanding of the rules of the English language and the vocabulary it has seen during training.

Natural Language Generation using OpenAI’s GPT-2

We have so far trained our own models to generate text, be it predicting the next word or generating some text with starting words. But that is just scratching the surface of what language models are capable of!

Leading research labs have trained much more complex language models on humongous datasets that have led to some of the biggest breakthroughs in the field of Natural Language Processing.

In February 2019, OpenAI started quite a storm through its release of a new transformer-based language model called GPT-2. GPT-2 is a transformer-based generative language model that was trained on 40GB of curated text from the internet.

You can read more about GPT-2 here:

So, let’s see GPT-2 in action!

About PyTorch-Transformers

Before we can start using GPT-2, let’s know a bit about the PyTorch-Transformers library. We will be using this library we will use to load the pre-trained models.

PyTorch-Transformers provides state-of-the-art pre-trained models for Natural Language Processing (NLP).

Most of the State-of-the-Art models require tons of training data and days of training on expensive GPU hardware which is something only the big technology companies and research labs can afford. But by using PyTorch-Transformers, now anyone can utilize the power of State-of-the-Art models!

Installing PyTorch-Transformers on your Machine

Installing Pytorch-Transformers is pretty straightforward in Python. You can simply use pip install:

pip install pytorch-transformers

or if you are working on Colab:

!pip install pytorch-transformers

Since most of these models are GPU-heavy, I would suggest working with Google Colab for this part of the article.

Sentence completion using GPT-2

Let’s build our own sentence completion model using GPT-2. We’ll try to predict the next word in the sentence:

“what is the fastest car in the _________”

I chose this example because this is the first suggestion that Google’s text completion gives. Here is the code for doing the same:

Here, we tokenize and index the text as a sequence of numbers and pass it to the GPT2LMHeadModel. This is the GPT2 model transformer with a language modeling head on top (linear layer with weights tied to the input embeddings).

Awesome! The model successfully predicts the next word as “world”. This is pretty amazing as this is what Google was suggesting. I recommend you try this model with different input sentences and see how it performs while predicting the next word in a sentence.

Conditional Text Generation using GPT-2

Now, we have played around by predicting the next word and the next character so far. Let’s take text generation to the next level by generating an entire paragraph from an input piece of text!

Let’s see what our models generate for the following input text:

Two roads diverged in a yellow wood, And sorry I could not travel both And be one traveler, long I stood And looked down one as far as I could To where it bent in the undergrowth;

This is the first paragraph of the poem “The Road Not Taken” by Robert Frost. Let’s put GPT-2 to work and generate the next paragraph of the poem.

We will be using the readymade script that PyTorch-Transformers provides for this task. Let’s clone their repository first:

!git clone https://github.com/huggingface/pytorch-transformers.git

Now, we just need a single command to start the model!

Let’s see what output our GPT-2 model gives for the input text:

And with my little eyes full of hearth and perfumes, I saw the blue of Scotland, And this powerful lieeth close By wind's with profit and grief, And at this time came and passed by, At how often thro' places And always this path was fresh Through one winter down. And, stung by the wild storm, Appeared half-blind, yet in that gloomy castle.

Isn’t that crazy?! The output almost perfectly fits in the context of the poem and appears as a good continuation of the first paragraph of the poem.

If you’re an enthusiast who is looking forward to unravel the world of Generative AI. Then, please register for our upcoming event, DataHack Summit 2023.

Frequently Asked Questions

A. Here’s an example of a bigram language model predicting the next word in a sentence: Given the phrase “I am going to”, the model may predict “the” with a high probability if the training data indicates that “I am going to” is often followed by “the”.

A. The formula for a bigram probability is: P(word | previous_word) = Count(previous_word, word) / Count(previous_word) where Count(previous_word, word) represents the number of occurrences of the bigram (previous_word, word), and Count(previous_word) is the count of the previous_word in the training data.

End Notes

Quite a comprehensive journey, wasn’t it? We discussed what language models are and how we can use them using the latest state-of-the-art NLP frameworks. And the end result was so impressive!

You should consider this as the beginning of your ride into language models. I encourage you to play around with the code I’ve showcased here. This will really help you build your own knowledge and skillset while expanding your opportunities in NLP.

And if you’re new to NLP and looking for a place to start, here is the perfect starting point:

- Introduction to Natural Language Processing Course

- Natural Language Processing (NLP) using Python Course

Let me know if you have any queries or feedback related to this article in the comments section below. Happy learning!

Excellent work !! I will be very interested to learn more and use this to try out applications of this program.

Hey Subra, Thanks for the feedback!

Great work sir kindly do some work related to image captioning or suggest something on that.

Hey Ruchika, Thanks for your comment. I'll try working with image captioning but for now, I am focusing on NLP specific projects!

Hello sir, I liked your article about how to build models. i would like to know about which machine learning and NLP models using python or neural networks would be best to use for auto data mapping from source dataset to target dataset. Thank you.

Yes its a great tutorial to even showcase at any NLP interview.. You are a great man.Thanks

Hey Benya, Thanks for your feedback!

Thanks for the article, I tried !python pytorch-transformers/examples/run_generation.py \ --model_type=gpt2 \ --length=100 \ --model_name_or_path=gpt2 \ and got the error ModuleNotFoundError: No module named 'transformers'. Could you share your colab notebook. ALso could you share how to run the example in windows?

Great Article MOHD Sanad. Learnt lot of information from here. Thanks !!

How to train with own text rather than using the pre-trained tokenizer. Something like training with own set of questions.

thanks! great explaination. keep up the good work

[…] on an enormous corpus of text; with enough text and enough processing, the machine begins to learn probabilistic connections between words. More plainly: GPT-3 can read and write. And not badly, either… GPT-3 is capable of generating […]

[…] on an enormous corpus of text; with enough text and enough processing, the machine begins to learn probabilistic connections between words. More plainly: GPT-3 can read and write. And not badly, either… GPT-3 is capable of generating […]

Hello, i'm very impress by this article, good job. Can you show me or point me to more advance ways of generating own text per my models predict.