Understand Random Forest Algorithms With Examples (Updated 2024)

Introduction

Random Forest is a widely used machine learning algorithm developed by Leo Breiman and Adele Cutler that combines the output of multiple decision trees to reach a single result. Its ease of use and flexibility have fueled its adoption, as it handles both classification and regression problems. In this article, we will understand how the random forest algorithm works, how it differs from other algorithms, and how to use it.

Learning Objectives

- Learn the working of random forest with an example.

- Understand the impact of different hyperparameters in random forest.

- Implement Random Forest on a classification problem using scikit-learn.

This article was published as a part of the Data Science Blogathon.

Table of contents

- What is Random Forest?

- What is Random Forest Algorithm?

- Real-Life Analogy of Random Forest

- Working of Random Forest Algorithm

- Important Features of Random Forest

- Difference Between Decision Tree and Random Forest

- Important Hyperparameters in Random Forest

- Coding in Python – Random Forest

- Random Forest Algorithm Use Cases

- Advantages and Disadvantages of Random Forest Algorithm

- Frequently Asked Questions

What is Random Forest?

A Random Forest is like a group decision-making team in machine learning. It combines the opinions of many “trees” (individual models) to make better predictions, creating a more robust and accurate overall model.

What is Random Forest Algorithm?

The Random Forest algorithm’s widespread popularity stems from its user-friendly nature and adaptability, which let it tackle both classification and regression problems effectively. Its strength lies in its ability to handle complex datasets and mitigate overfitting, making it a valuable tool for many predictive tasks in machine learning.

One of the most important features of the Random Forest algorithm is that it can handle datasets with a continuous target variable, as in regression, and with a categorical target variable, as in classification, and it performs well on both kinds of task. In this tutorial, we will understand how random forest works and implement it on a classification task.

Real-Life Analogy of Random Forest

Let’s dive into a real-life analogy to understand this concept further. A student named X wants to choose a course after finishing 10+2, but he is unsure which course suits his skill set. So he consults various people: his cousins, teachers, parents, degree students, and working professionals. He asks them varied questions, such as why he should choose a particular course, what job opportunities it offers, and what the course fee is. Finally, after consulting all of them, he takes the course suggested by most people. Each person plays the role of a decision tree, and the student’s final choice is their majority vote.

Working of Random Forest Algorithm

Before understanding how the random forest algorithm works, we must look into the ensemble learning technique. Ensemble simply means combining multiple models: a collection of models is used to make predictions rather than an individual model.

Ensemble learning commonly uses two types of methods:

Bagging

It trains each model on a different training subset drawn from the training data with replacement, and the final output is based on majority voting (or averaging, for regression). For example, Random Forest.

Boosting

It combines weak learners into a strong learner by building models sequentially, with each new model focusing on the errors made by the previous ones. For example, AdaBoost and XGBoost.

As mentioned earlier, Random forest works on the Bagging principle. Now let’s dive in and understand bagging in detail.

Bagging

Bagging, also known as Bootstrap Aggregation, serves as the ensemble technique in the Random Forest algorithm. Here are the steps involved in Bagging:

- Selection of Subset: Bagging starts by choosing a random sample, or subset, from the entire dataset.

- Bootstrap Sampling: Each model is then created from these samples, called Bootstrap Samples, which are taken from the original data with replacement. This process is known as row sampling.

- Bootstrapping: The step of row sampling with replacement is referred to as bootstrapping.

- Independent Model Training: Each model is trained independently on its corresponding Bootstrap Sample. This training process generates results for each model.

- Majority Voting: The final output is determined by combining the results of all models through majority voting: the most commonly predicted outcome among the models is selected. For regression, the models’ outputs are averaged instead.

- Aggregation: This step, which involves combining all the results and generating the final output based on majority voting, is known as aggregation.
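The bagging steps above can be sketched in a few lines of Python. This is a minimal illustration, not the article’s own code: it assumes NumPy and scikit-learn are installed, uses synthetic data from make_classification, and the helper names bagging_fit and bagging_predict are hypothetical. Every tree here sees all features at every split, so this is plain bagging rather than a full random forest.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def bagging_fit(X, y, n_models=5, seed=0):
    """Train n_models trees, each on its own bootstrap sample of the rows."""
    rng = np.random.default_rng(seed)
    n_rows = X.shape[0]
    models = []
    for _ in range(n_models):
        # Bootstrap sample: same size as the data, drawn with replacement
        idx = rng.integers(0, n_rows, size=n_rows)
        # Independent model training on that sample
        models.append(DecisionTreeClassifier(random_state=0).fit(X[idx], y[idx]))
    return models


def bagging_predict(models, X):
    """Aggregate the models' predicted labels by majority voting."""
    votes = np.stack([m.predict(X) for m in models])  # shape (n_models, n_samples)
    # For each sample (column), return the most frequent label
    return np.array([np.bincount(col).argmax() for col in votes.T])


X, y = make_classification(n_samples=300, n_features=8, random_state=42)
X_train, X_test, y_train, y_test = X[:200], X[200:], y[:200], y[200:]

models = bagging_fit(X_train, y_train, n_models=5)
y_pred = bagging_predict(models, X_test)
accuracy = (y_pred == y_test).mean()
print("bagged accuracy on held-out rows:", accuracy)
```

Using an odd number of models avoids tied votes in a two-class problem.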

Now let’s look at an example by breaking it down with the help of the following figure. Here, bootstrap samples (Bootstrap sample 01, Bootstrap sample 02, and Bootstrap sample 03) are drawn from the actual data with replacement, which means each sample is likely to contain some rows more than once and to miss others entirely. The models (Model 01, Model 02, and Model 03) obtained from these bootstrap samples are trained independently, and each model generates the result shown. The Happy emoji has a majority over the Sad emoji, so, based on majority voting, the final output is the Happy emoji.
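To see why a bootstrap sample usually contains repeated rows, here is a small sketch using only Python’s standard library. The six row IDs are made-up placeholders standing in for the original data. Because the sample has the same size as the data, every extra copy of a row pushes some other row out of the sample entirely.

```python
import random

rows = ["r1", "r2", "r3", "r4", "r5", "r6"]  # a toy dataset of six row IDs
rng = random.Random(7)                        # fixed seed so the sample is repeatable

# Draw a bootstrap sample: same size as the data, sampled WITH replacement
bootstrap = rng.choices(rows, k=len(rows))

in_bag = set(bootstrap)
out_of_bag = [r for r in rows if r not in in_bag]
duplicates = len(bootstrap) - len(in_bag)  # extra draws of already-drawn rows

print("bootstrap sample:", bootstrap)
print("extra (duplicate) draws:", duplicates)
print("rows never drawn (out-of-bag):", out_of_bag)
```

The number of duplicate draws always equals the number of rows left out, since both samples have the same size.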

Boosting

Boosting is another technique that uses the concept of ensemble learning. A boosting algorithm combines multiple simple models (also known as weak learners or base estimators) to generate the final output. The weak models are built in series, each one trained to improve on the mistakes of those before it.

There are several boosting algorithms. AdaBoost, short for Adaptive Boosting, was the first really successful boosting algorithm and was developed for binary classification; it combines multiple “weak classifiers” into a single “strong classifier.” There are other boosting techniques as well. For more, you can visit
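As a rough illustration of boosting, scikit-learn’s AdaBoostClassifier fits its weak learners one after another. This sketch assumes scikit-learn is installed and uses synthetic data; staged_score reports the test accuracy after each learner is added, which shows the ensemble being built in series.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# With no estimator given, each weak learner is a depth-1 decision tree
# (a "stump"); the stumps are fitted one after another, each on training
# data reweighted toward the rows the previous stumps got wrong.
ada = AdaBoostClassifier(n_estimators=50, random_state=0)
ada.fit(X_train, y_train)

# Test accuracy after 1, 2, ..., k weak learners have been added in series
staged = list(ada.staged_score(X_test, y_test))
print("weak learners fitted:", len(ada.estimators_))
print("accuracy after 1 learner:", round(staged[0], 3))
print("accuracy after all learners:", round(staged[-1], 3))
```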

Steps Involved in Random Forest Algorithm

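- Step 1: For each decision tree, a random subset of data points is drawn from the data set with replacement (a bootstrap sample), and a random subset of features is considered when splitting each node. Simply put, n random records are taken from the data set having k number of records (usually n = k), and only m of the features are examined at each split.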

- Step 2: Individual decision trees are constructed for each sample.

- Step 3: Each decision tree will generate an output.

- Step 4: The final output is obtained by majority voting for classification or by averaging for regression. (scikit-learn’s classifier averages the trees’ predicted class probabilities, a soft form of voting.)
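Steps 3 and 4 can be made visible with scikit-learn by asking each fitted tree in estimators_ for its own output and combining the results by hand. This is a sketch on synthetic data, assuming scikit-learn is installed; the combination mirrors how RandomForestClassifier averages the trees’ class probabilities, so the hand-combined labels should match rf.predict.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=400, n_features=10, random_state=1)
X_train, X_new = X[:300], X[300:]
y_train = y[:300]

# Steps 1-2: each tree gets its own bootstrap sample of rows, and
# max_features="sqrt" makes every split consider a random subset of features.
rf = RandomForestClassifier(n_estimators=25, max_features="sqrt", random_state=1)
rf.fit(X_train, y_train)

# Step 3: every tree produces its own output (here, class probabilities).
per_tree = np.stack([tree.predict_proba(X_new) for tree in rf.estimators_])

# Step 4: combine the outputs by averaging the probabilities over trees
# and picking the class with the highest average.
avg_proba = per_tree.mean(axis=0)
combined = rf.classes_[avg_proba.argmax(axis=1)]

print("per-tree output shape (trees, rows, classes):", per_tree.shape)
print("matches rf.predict:", np.array_equal(combined, rf.predict(X_new)))
```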

For example

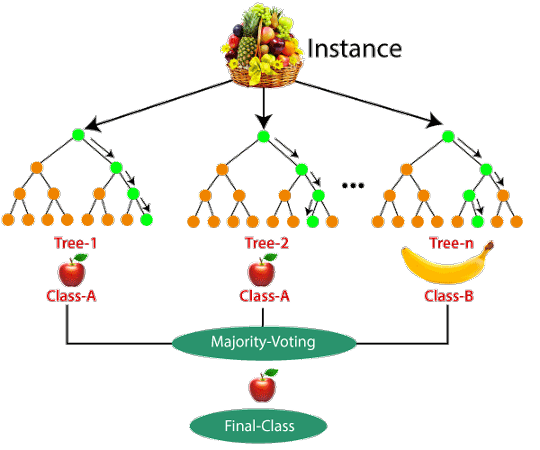

Consider the fruit basket as the data, as shown in the figure above. Now n samples are taken from the fruit basket, and an individual decision tree is constructed for each sample. Each decision tree generates an output, as shown in the figure. The final output is based on majority voting: most of the decision trees output apple rather than banana, so the final output is apple.
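The majority vote in the fruit example, and the averaging used for regression, come down to very little code. The tree outputs below are hypothetical values chosen to match the description of the figure, not data read from the figure itself.

```python
from collections import Counter
from statistics import mean

# Hypothetical class outputs of three decision trees (classification)
tree_outputs = ["apple", "banana", "apple"]
final_output, n_votes = Counter(tree_outputs).most_common(1)[0]
print(final_output, n_votes)  # apple 2

# Hypothetical numeric outputs of three trees (regression): average them instead
tree_values = [3.0, 3.5, 4.0]
average = mean(tree_values)
print(average)  # 3.5
```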

Important Features of Random Forest

- Diversity: Not all attributes/variables/features are considered at each split, so each tree is different.

- Less affected by the curse of dimensionality: Since each split considers only a subset of the features, the feature space searched at each node is reduced.

- Parallelization: Each tree is built independently from different data and attributes, so the trees can be trained in parallel across all available CPU cores.

- Train-Test split: Each tree’s bootstrap sample leaves out roughly one-third (about 37%) of the rows on average. These out-of-bag rows, which that tree never sees, provide a built-in performance estimate, although holding out a separate test set is still good practice.

- Stability: Stability arises because the result is based on majority voting/ averaging.
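How much data does each tree actually leave out? The chance that a given row is never drawn in n draws with replacement is (1 - 1/n)^n, which approaches 1/e, about 0.368, as n grows. A quick standard-library simulation can confirm this; the helper oob_fraction is hypothetical.

```python
import math
import random


def oob_fraction(n_rows, n_trials=200, seed=0):
    """Average share of rows left out of a bootstrap sample of size n_rows."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_trials):
        # Distinct row indices hit by n_rows draws with replacement
        in_bag = {rng.randrange(n_rows) for _ in range(n_rows)}
        total += (n_rows - len(in_bag)) / n_rows
    return total / n_trials


n = 1000
print("simulated out-of-bag share:", round(oob_fraction(n), 3))
print("theory (1 - 1/n)^n:", round((1 - 1 / n) ** n, 3))
print("limit 1/e:", round(math.exp(-1), 3))
```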

Difference Between Decision Tree and Random Forest

Random forest is a collection of decision trees; still, there are a lot of differences in their behavior.

| Decision trees | Random Forest |
|---|---|
| 1. Decision trees normally suffer from overfitting if they are allowed to grow without any control. | 1. Random forests are built from random subsets of the data, and the final output is based on averaging or majority voting, which greatly reduces overfitting. |
| 2. A single decision tree is faster to compute. | 2. It is comparatively slower. |
| 3. When a data set with features is taken as input, a decision tree formulates a set of rules to make predictions. | 3. Random forest builds many decision trees on randomly selected observations and features and combines their results, so no single set of rules describes the whole model. |

Thus, random forests tend to be much more successful than a single decision tree, provided the individual trees are reasonably accurate and diverse.
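The overfitting difference in the table can be checked on synthetic data. This sketch assumes scikit-learn is installed; the dataset, the label-noise level (flip_y), and the seeds are arbitrary choices. Typically the unconstrained tree scores perfectly on its training data but worse on the test set, while the forest narrows that gap, though the exact numbers depend on the data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data with some label noise (flip_y), so memorizing the training set hurts
X, y = make_classification(n_samples=1000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

tree = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
forest = RandomForestClassifier(n_estimators=200, random_state=0, n_jobs=-1).fit(X_train, y_train)

for name, model in [("single tree", tree), ("random forest", forest)]:
    print(f"{name:13s} train={model.score(X_train, y_train):.3f} "
          f"test={model.score(X_test, y_test):.3f}")
```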

Important Hyperparameters in Random Forest

Hyperparameters are used in random forests to either enhance the performance and predictive power of models or to make the model faster.

Hyperparameters to Increase the Predictive Power

- n_estimators: Number of trees the algorithm builds before averaging the predictions.

- max_features: The number of features the random forest considers when looking for the best split at each node.

- min_samples_leaf: The minimum number of samples required to be at a leaf node.

- criterion: The function used to measure the quality of a split in each tree (Gini impurity, entropy, or log loss).

- max_leaf_nodes: The maximum number of leaf nodes in each tree.
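Here is a sketch of how these hyperparameters are passed to scikit-learn’s RandomForestClassifier, on synthetic data with arbitrarily chosen values (assuming scikit-learn is installed). The check at the end uses each tree’s get_n_leaves() to confirm that max_leaf_nodes caps the size of every tree.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=500, n_features=12, random_state=3)

rf = RandomForestClassifier(
    n_estimators=50,        # number of trees in the forest
    max_features="sqrt",    # features tried at each split: int(sqrt(12)) = 3
    min_samples_leaf=5,     # each leaf must hold at least 5 training samples
    criterion="entropy",    # split-quality measure ("gini", "entropy" or "log_loss")
    max_leaf_nodes=16,      # cap on the number of leaves per tree
    random_state=3,
)
rf.fit(X, y)

leaf_counts = [est.get_n_leaves() for est in rf.estimators_]
print("trees built:", len(rf.estimators_))
print("largest tree has", max(leaf_counts), "leaves (limit is 16)")
```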

Hyperparameters for Speed, Reproducibility, and Validation

- n_jobs: Tells the engine how many processors it is allowed to use. A value of 1 means only one processor is used, while -1 means all available processors are used.

- random_state: Controls the randomness of the bootstrap sampling and of the feature selection at each split. The model will always produce the same results if random_state has a fixed value and the model is given the same hyperparameters and training data.

- oob_score: OOB stands for out-of-bag. It is a built-in validation method for random forests: each training row is left out of the bootstrap samples of roughly one-third of the trees, and when oob_score=True those trees are used to score that row. These left-out samples are called out-of-bag samples, and the resulting score estimates performance without a separate validation set.
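The following sketch (synthetic data, assuming scikit-learn is installed) shows these three settings together: n_jobs=-1 to use all cores, a fixed random_state so that two fits give identical forests, and oob_score=True so the fitted model exposes the out-of-bag accuracy as oob_score_. The helper make_forest is hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=600, n_features=10, random_state=5)


def make_forest():
    # n_jobs=-1: use all CPU cores; random_state fixes bootstrap and feature sampling
    return RandomForestClassifier(n_estimators=100, oob_score=True,
                                  n_jobs=-1, random_state=42)


rf_a = make_forest().fit(X, y)
rf_b = make_forest().fit(X, y)

# Each row is scored only by the trees that did not see it during training
print("OOB accuracy:", round(rf_a.oob_score_, 3))
# Same random_state, same data, same hyperparameters -> identical predictions
print("reproducible:", np.array_equal(rf_a.predict(X), rf_b.predict(X)))
```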

Coding in Python – Random Forest

Now let’s implement Random Forest in scikit-learn.

1. Let’s import the libraries.

# Importing the required libraries

import pandas as pd, numpy as np

import matplotlib.pyplot as plt, seaborn as sns

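%matplotlib inline

2. Import the dataset. The steps below assume it has been loaded into a pandas DataFrame named df whose target column is 'heart disease'.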

3. Put the feature variables in X and the target variable in y.

# Putting feature variable to X

X = df.drop('heart disease',axis=1)

# Putting response variable to y

y = df['heart disease']

4. Perform the train-test split.

# Now let's split the data into train and test sets

from sklearn.model_selection import train_test_split

# Splitting the data into train and test

X_train, X_test, y_train, y_test = train_test_split(X, y, train_size=0.7, random_state=42)

X_train.shape, X_test.shape

5. Let’s import RandomForestClassifier and fit the data.

from sklearn.ensemble import RandomForestClassifier

classifier_rf = RandomForestClassifier(random_state=42, n_jobs=-1, max_depth=5,
                                       n_estimators=100, oob_score=True)

%%time

classifier_rf.fit(X_train, y_train)

# checking the oob score

classifier_rf.oob_score_

6. Let’s do hyperparameter tuning for Random Forest using GridSearchCV and fit the data.

rf = RandomForestClassifier(random_state=42, n_jobs=-1)

params = {
    'max_depth': [2, 3, 5, 10, 20],
    'min_samples_leaf': [5, 10, 20, 50, 100, 200],
    'n_estimators': [10, 25, 30, 50, 100, 200]
}

from sklearn.model_selection import GridSearchCV

# Instantiate the grid search model

grid_search = GridSearchCV(estimator=rf,
                           param_grid=params,
                           cv=4,
                           n_jobs=-1, verbose=1, scoring="accuracy")

%%time

grid_search.fit(X_train, y_train)

grid_search.best_score_

rf_best = grid_search.best_estimator_

rf_best

From hyperparameter tuning, we can fetch the best estimator. The best set of parameters identified was max_depth=5, min_samples_leaf=10, n_estimators=10.

7. Now, let’s visualize two of the individual trees in the tuned forest.

from sklearn.tree import plot_tree

plt.figure(figsize=(80,40))

plot_tree(rf_best.estimators_[5], feature_names = X.columns,class_names=['Disease', "No Disease"],filled=True);

from sklearn.tree import plot_tree

plt.figure(figsize=(80,40))

plot_tree(rf_best.estimators_[7], feature_names = X.columns,class_names=['Disease', "No Disease"],filled=True);

The trees created by estimators_[5] and estimators_[7] are different, because each was trained on its own bootstrap sample with its own random feature choices at each split. Each tree is built independently of the others.

8. Now let’s rank the features by importance.

rf_best.feature_importances_

imp_df = pd.DataFrame({
    "Varname": X_train.columns,
    "Imp": rf_best.feature_importances_
})

imp_df.sort_values(by="Imp", ascending=False)
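The values in rf_best.feature_importances_ are scikit-learn’s impurity-based (mean decrease in impurity) scores. As a sketch on synthetic data with hypothetical feature names f0 to f5, they can be reproduced by averaging each tree’s feature_importances_ over the trees that actually split and renormalizing, which is how RandomForestClassifier computes the forest-level values.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

X, y = make_classification(n_samples=500, n_features=6, n_informative=3,
                           n_redundant=0, random_state=0)
cols = [f"f{i}" for i in range(X.shape[1])]  # hypothetical feature names
rf = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Mean impurity-based importance over trees with at least one split
per_tree = [t.feature_importances_ for t in rf.estimators_ if t.tree_.node_count > 1]
manual = np.mean(per_tree, axis=0)
manual = manual / manual.sum()

# Rank features from most to least important
ranking = sorted(zip(cols, rf.feature_importances_), key=lambda p: p[1], reverse=True)
for name, imp in ranking:
    print(f"{name}: {imp:.3f}")
print("matches manual average:", np.allclose(manual, rf.feature_importances_))
```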

Random Forest Algorithm Use Cases

This algorithm is widely used in E-commerce, banking, medicine, the stock market, etc.

For example: In the Banking industry, it can be used to find which customer will default on a loan.

Advantages and Disadvantages of Random Forest Algorithm

Advantages

- It can be used in classification and regression problems.

- It reduces overfitting, as the output is based on majority voting or averaging over many trees.

- Depending on the implementation, it can work with data that contains null/missing values.

- Each decision tree is built independently of the others, so the trees can be trained in parallel.

- It is highly stable, as it takes the average of the answers given by a large number of trees.

- It maintains diversity because not all attributes are considered at each split, although this depends on max_features: if every feature is considered at every split, only the bootstrap sampling makes the trees differ.

- It is less affected by the curse of dimensionality: since each split considers only a subset of the attributes, the feature space searched at each node is reduced.

- Each tree’s bootstrap sample leaves out roughly one-third (about 37%) of the data on average, and these out-of-bag rows provide a built-in performance estimate, although a separate test set is still good practice.

Disadvantages

- Random forest is far harder to interpret than a single decision tree, whose decisions can be followed along a single path.

- Training and prediction are slower than for a single tree: every tree must be built, and whenever the forest makes a prediction, each decision tree has to generate an output for the given input data.

Conclusion

Random forest is a great choice if you want to build a strong model quickly and with little tuning, and some implementations can even handle missing values. It performs well across many problems and is widely used in various industries. It can handle binary, continuous, and categorical features, although scikit-learn requires categorical features to be numerically encoded first. Overall, random forest is a simple, flexible, and robust model, with some limitations in interpretability and speed.

Key Takeaways

- Random forest algorithm is an ensemble learning technique combining numerous classifiers to enhance a model’s performance.

- Random Forest is a supervised machine-learning algorithm made up of decision trees.

- It is used for both classification and regression problems.

Frequently Asked Questions

A. Random Forest is a supervised learning algorithm that works on the concept of bagging. In bagging, a group of models is trained on different subsets of the dataset, and the final output is generated by collating the outputs of all the different models. In the case of random forest, the base model is a decision tree.

A. The following steps will tell you how random forest works:

1. Create Bootstrap Samples: Construct different samples of the dataset by randomly selecting rows with replacement; when each tree is grown, a random subset of columns (features) is considered at each split. These row samples are known as bootstrap samples.

2. Build Decision Trees: Construct the decision tree on each bootstrap sample as per the hyperparameters.

3. Generate Final Output: Combine the output of all the decision trees to generate the final output.

A. Random Forest tends to have low variance, because bagging averages many decision trees, while the individual deep trees keep the bias low. It works well even with a dataset with a large number of features, since each split works on a subset of the features. Moreover, it can be trained efficiently because the trees are independent of each other, making the training process parallelizable.

A. Random Forest is a popular machine learning algorithm used for classification and regression tasks due to its high accuracy, robustness, feature importance, versatility, and scalability. Random Forest reduces overfitting by averaging multiple decision trees and is less sensitive to noise and outliers in the data. It provides a measure of feature importance, which can be useful for feature selection and data interpretation.

Random forest is an ensemble learning algorithm that uses a collection of decision trees to make predictions. Each decision tree is trained on a different subset of the data, and the predictions of all the trees are combined (averaged for regression, voted or probability-averaged for classification) to produce the final prediction. This makes random forest robust to overfitting and able to handle complex relationships between the features and the target variable.

Regression is a type of supervised learning in which the algorithm learns a function that maps the input features to a continuous target variable. There are many different types of regression algorithms, such as linear regression, decision tree regression, and random forest regression. Each type makes different assumptions about the relationship between the features and the target variable.
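Since the walkthrough above covers classification, here is a minimal regression counterpart using RandomForestRegressor, assuming scikit-learn is installed and using synthetic data from make_regression. For regression, the forest’s prediction is the average of its trees’ predictions, which the last line checks.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import train_test_split

X, y = make_regression(n_samples=500, n_features=8, noise=10.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

reg = RandomForestRegressor(n_estimators=100, random_state=0, n_jobs=-1)
reg.fit(X_train, y_train)

# Each tree's numeric prediction for every test row: shape (n_trees, n_rows)
tree_preds = np.stack([t.predict(X_test) for t in reg.estimators_])
print("R^2 on test data:", round(reg.score(X_test, y_test), 3))
print("equals mean of trees:", np.allclose(tree_preds.mean(axis=0), reg.predict(X_test)))
```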

The media shown in this article are not owned by Analytics Vidhya and are used at the Author’s discretion.
