Hyperparameter Tuning Of Neural Networks using Keras Tuner

This article was published as a part of the Data Science Blogathon

Introduction

Neural networks have many hyperparameters, and tuning them by hand is very hard. Keras Tuner makes it simple to tune the hyperparameters of a neural network. It plays the same role as the Grid Search or Randomized Search you have seen in classical machine learning.

In this article, you will learn how to tune the hyperparameters of a neural network using Keras Tuner. We will start with a very simple neural network, then tune its hyperparameters and compare the results. Along the way, you will learn what you need to get started with Keras Tuner.

But What are hyperparameters?

Developing deep learning models is an iterative process. You start with an initial architecture and then reconfigure it until you get a model that can be trained efficiently in terms of time and compute resources.

The settings that you adjust are called hyperparameters. You write code, check the performance, and repeat the same process until the performance is good.

So the adjustable settings of your neural network are called hyperparameters, and the process of finding a good set of them is called hyperparameter tuning.

Hyperparameter tuning is a very important part of building a model. If it is skipped, the model may suffer from problems such as long training times, unnecessary parameters, and more.

Hyperparameters are usually of two types:-

- Model-based hyperparameters:- These include the number of hidden layers, the number of neurons in each layer, etc.

- Algorithm-based hyperparameters:- These influence the speed and efficiency of training, like the learning rate in Gradient Descent.

The benefit of Keras Tuner is that it makes one of the most challenging tasks, hyperparameter tuning, very easy to do in just a few lines of code.

Keras Tuner

Keras Tuner is a library for tuning the hyperparameters of a neural network. It helps you pick optimal hyperparameters for a neural network implemented in TensorFlow.

To install Keras Tuner, just run the command below:

pip install keras-tuner

But wait! Why do we need Keras Tuner?

The answer is that hyperparameters play an important role in developing a good model. Good choices can make a large difference: they help you prevent overfitting, reach a good bias-variance trade-off, and a lot more.

Tuning our hyperparameter using Keras Tuner

First, we will develop a baseline model, and then we will use Keras Tuner to tune it. I will be using TensorFlow for the implementation.

Step:- 1 ( Download and Prepare the dataset )

from tensorflow import keras # importing keras

(x_train, y_train), (x_test, y_test) = keras.datasets.mnist.load_data() # loading the data using keras datasets api
x_train = x_train.astype('float32') / 255.0 # normalize the training images
x_test = x_test.astype('float32') / 255.0 # normalize the testing images

Step:- 2 ( Developing the baseline model )

Now we will build our baseline neural network on the MNIST dataset to recognize handwritten digits, so let's build a deep neural network.

model1 = keras.Sequential()
model1.add(keras.layers.Flatten(input_shape=(28, 28))) # flatten each 28 x 28 image
model1.add(keras.layers.Dense(units=512, activation='relu', name='dense_1')) # 512 neurons with relu activation
model1.add(keras.layers.Dropout(0.2)) # dropout layer with a rate of 0.2
model1.add(keras.layers.Dense(10, activation='softmax')) # output layer with 10 classes in total

Step:- 3 ( Compiling and Training the model )

Now that the baseline model is built, it's time to compile and train it. We will use the Adam optimizer with a learning rate of 0.001, and train the model for 10 epochs with a validation split of 0.2.

model1.compile(optimizer=keras.optimizers.Adam(learning_rate=0.001),
               loss=keras.losses.SparseCategoricalCrossentropy(),
               metrics=['accuracy'])

model1.fit(x_train, y_train, epochs=10, validation_split=0.2)
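To see what a validation split of 0.2 means for the data sizes, here is a small sanity check. The helper split_sizes is a hypothetical illustration and not part of Keras. It assumes the 60,000-image MNIST training set and relies on the documented Keras behaviour of holding out the last fraction of the samples for validation.

```python
def split_sizes(n_samples, validation_split):
    """Return (n_train, n_val) when a fraction of the samples is held out."""
    # round() guards against floating-point noise in the multiplication
    n_val = int(round(n_samples * validation_split))
    n_train = n_samples - n_val
    return n_train, n_val


# 60000 is the size of the MNIST training set (assumed here, not loaded)
n_train, n_val = split_sizes(60000, 0.2)
print(n_train, n_val)  # 48000 12000
```

So each epoch trains on 48,000 images, and the reported val_loss and val_accuracy are computed on the remaining 12,000.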

Step:- 4 ( Evaluating our model )

Now that the model is trained, we will evaluate it on the test set to see how it performs.

model1_eval = model1.evaluate(x_test, y_test, return_dict=True)

Tuning your model using Keras Tuner

Step:- 1 (Importing the libraries)

import tensorflow as tf
import keras_tuner as kt # older releases used: import kerastuner as kt

Step:- 2 (Building the model using Keras Tuner)

Now you will set up a hypermodel (the model you set up for hypertuning is called a hypermodel). We will define the hypermodel with a model builder function which, as you can see below, returns a compiled model built from the hyperparameters being tuned.

In the classification model below, we will tune two hyperparameters: the number of neurons in the first Dense layer and the learning rate of the Adam optimizer.

def model_builder(hp):
    '''
    Args:
        hp - Keras tuner object
    '''
    # Initialize the Sequential API and start stacking the layers
    model = keras.Sequential()
    model.add(keras.layers.Flatten(input_shape=(28, 28)))

    # Tune the number of units in the first Dense layer
    # Choose an optimal value between 32-512
    hp_units = hp.Int('units', min_value=32, max_value=512, step=32)
    model.add(keras.layers.Dense(units=hp_units, activation='relu', name='dense_1'))

    # Add next layers
    model.add(keras.layers.Dropout(0.2))
    model.add(keras.layers.Dense(10, activation='softmax'))

    # Tune the learning rate for the optimizer
    # Choose an optimal value from 0.01, 0.001, or 0.0001
    hp_learning_rate = hp.Choice('learning_rate', values=[1e-2, 1e-3, 1e-4])

    model.compile(optimizer=keras.optimizers.Adam(learning_rate=hp_learning_rate),
                  loss=keras.losses.SparseCategoricalCrossentropy(),
                  metrics=['accuracy'])

    return model

Here are some notes on the code above:-

- The Int() method defines the search space for the Dense units. It lets you set a minimum and maximum value, as well as the step size used when incrementing between them.

- The Choice() method is used for the learning rate. It lets you define the discrete values to include in the search space when hypertuning.
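To get a feel for how big this search space is, the sketch below enumerates it in plain Python, without calling Keras Tuner. It assumes that both ends of the Int() range are included. The variable names are only for illustration.

```python
import itertools
import random

# Values the Int() search space can take: 32, 64, ..., 512 (both ends inclusive)
unit_values = list(range(32, 512 + 1, 32))
# Values listed in the Choice() search space
learning_rates = [1e-2, 1e-3, 1e-4]

# A full grid search would have to train one model per combination
grid = list(itertools.product(unit_values, learning_rates))
print(len(unit_values), len(grid))  # 16 48

# A random search would instead train only a few sampled combinations
rng = random.Random(0)
for units, lr in rng.sample(grid, 3):
    print(units, lr)
```

Even this small example has 48 possible models, which is why a tuner that can discard weak candidates early is useful.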

Step:- 3 ( Instantiating the tuner and tuning the hyperparameters )

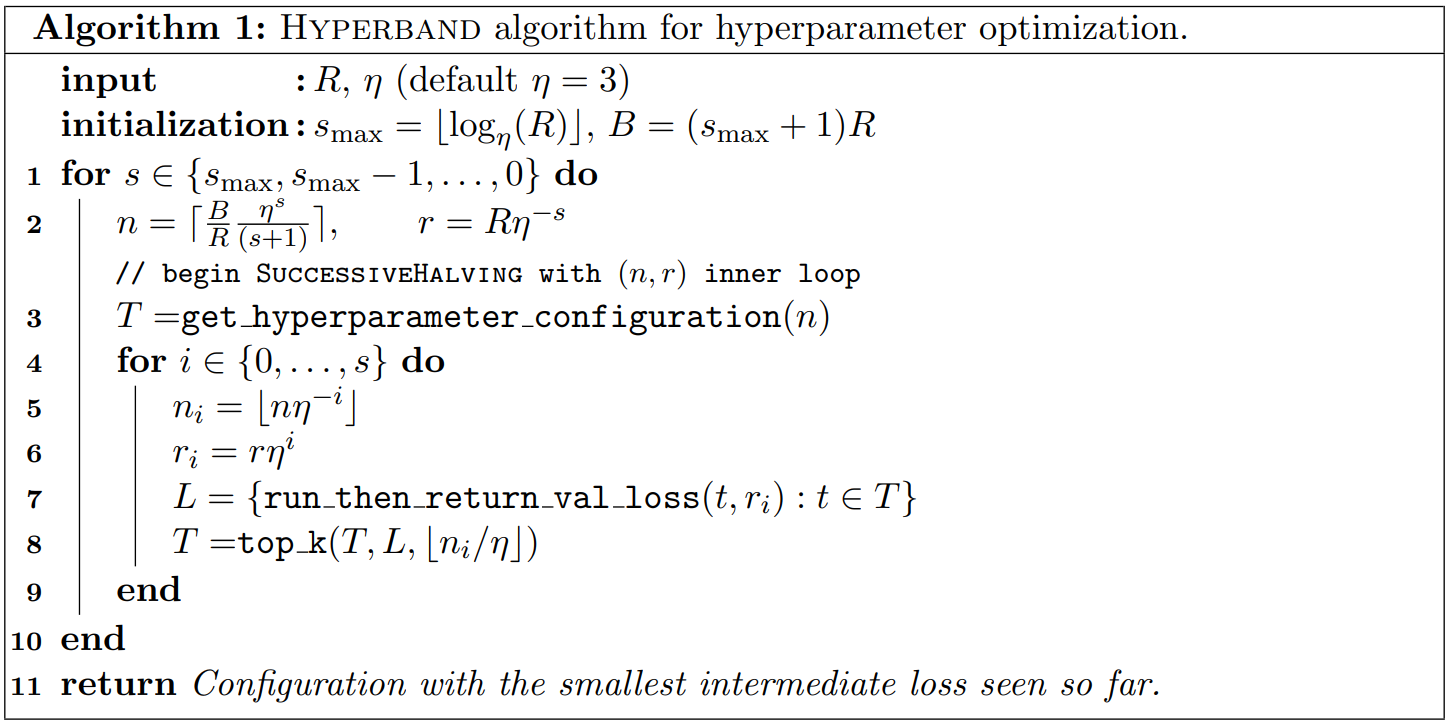

You will use the Hyperband tuner. Hyperband is an algorithm developed for hyperparameter optimization. It uses adaptive resource allocation and early stopping to quickly converge on a high-performing model. You can read more about this intuition here.

The basic algorithm is shown in the picture. If you are not able to understand it, feel free to skip it and move forward. It's a large topic that requires another blog.
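As a rough intuition for "adaptive resource allocation and early stopping", here is a minimal sketch of successive halving, the building block that Hyperband repeats with different starting budgets. This is not the Keras Tuner implementation. The function toy_score is a made-up stand-in for validation accuracy, and the candidate list is arbitrary.

```python
def successive_halving(configs, evaluate, min_epochs=1, factor=3):
    """Repeatedly keep the best 1/factor of the configs and give them more epochs."""
    survivors = list(configs)
    epochs = min_epochs
    while len(survivors) > 1:
        # Score every surviving config with the current epoch budget
        ranked = sorted(survivors, key=lambda cfg: evaluate(cfg, epochs), reverse=True)
        # Stop the weakest configs early and keep roughly the top 1/factor
        survivors = ranked[:max(1, len(ranked) // factor)]
        # The survivors get a larger budget in the next round
        epochs *= factor
    return survivors[0]


def toy_score(units, epochs):
    """Hypothetical score: best at 224 units, slightly better with more epochs."""
    return 1.0 - abs(units - 224) / 512 - 0.1 / epochs


candidates = [32, 96, 160, 224, 288, 352, 416, 480, 512]
best = successive_halving(candidates, toy_score)
print(best)  # 224
```

With 9 candidates and a factor of 3, the first round keeps 3 configurations and the second round keeps 1, so most candidates only ever receive the smallest budget.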

Hyperband determines the number of models to train in a bracket by computing 1 + log_factor(max_epochs), where the logarithm is taken to the base factor, and rounding the result up to the nearest integer.
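Plugging in the settings used in this article (max_epochs of 10 and factor of 3), the formula works out as follows. This is only the arithmetic from the sentence above, not a call into Keras Tuner.

```python
import math

max_epochs = 10
factor = 3

# 1 + log_factor(max_epochs), rounded up to the nearest integer
value = math.ceil(1 + math.log(max_epochs, factor))
print(value)  # 4
```

The logarithm of 10 to the base 3 is about 2.1, so the expression is about 3.1 before it is rounded up to 4.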

# Instantiate the tuner
tuner = kt.Hyperband(model_builder, # the hypermodel
                     objective='val_accuracy', # objective to optimize
                     max_epochs=10,
                     factor=3, # factor which you have seen above
                     directory='dir', # directory to save logs
                     project_name='khyperband')

# hypertuning settings
tuner.search_space_summary()

Output:-

# Search space summary
# Default search space size: 2
# units (Int)
# {'default': None, 'conditions': [], 'min_value': 32, 'max_value': 512, 'step': 32, 'sampling': None}
# learning_rate (Choice)
# {'default': 0.01, 'conditions': [], 'values': [0.01, 0.001, 0.0001], 'ordered': True}

Step:- 4 ( Searching the best hyperparameter )

stop_early = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=5)

# Perform hypertuning
tuner.search(x_train, y_train, epochs=10, validation_split=0.2, callbacks=[stop_early])

# Retrieve the best hyperparameters found by the search
best_hps = tuner.get_best_hyperparameters()[0]
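The patience setting of the early stopping callback can be pictured with a simplified sketch. This is not the Keras implementation, only the basic idea: training stops once the monitored value has failed to improve for patience epochs in a row. The function stopping_epoch and the loss values are made up for illustration.

```python
def stopping_epoch(val_losses, patience):
    """Return the 0-based epoch at which training would stop, or None if it never stops."""
    best = float('inf')
    wait = 0  # number of epochs since the last improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


losses = [1.0, 0.9, 0.95, 0.96, 0.97, 0.98, 0.99, 0.5]
print(stopping_epoch(losses, patience=5))  # 6
```

In this made-up run the best loss is reached at epoch 1, the next five epochs bring no improvement, and training stops at epoch 6 before the later drop to 0.5 is ever seen.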

Step:- 5 ( Rebuilding and Training the Model with optimal hyperparameters )

# Build the model with the optimal hyperparameters
h_model = tuner.hypermodel.build(best_hps)
h_model.summary()
h_model.fit(x_train, y_train, epochs=10, validation_split=0.2)

Now you can evaluate this model:

h_eval_dict = h_model.evaluate(x_test, y_test, return_dict=True)

Comparison With and Without Hyperparameter Tuning

Baseline Model Performance:-

BASELINE MODEL:
number of units in 1st Dense layer: 512
learning rate for the optimizer: 0.0010000000474974513
loss: 0.08013473451137543
accuracy: 0.9794999957084656

Hypertuned Model Performance:-

HYPERTUNED MODEL:
number of units in 1st Dense layer: 224
learning rate for the optimizer: 0.0010000000474974513
loss: 0.07163219898939133
accuracy: 0.979200005531311

- If you compare the training times, the baseline model takes longer to train than the hypertuned model, because the hypertuned model has fewer neurons (224 instead of 512) and is therefore faster.

- The hypertuned model reaches a lower test loss (about 0.072 versus 0.080) with essentially the same accuracy (0.9792 versus 0.9795), so it does comparably well with fewer than half the neurons. On that basis we can say it is the more robust model.
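The size difference between the two models can be made concrete by counting trainable parameters. The helper mlp_param_count below is a hypothetical illustration for the architecture used in this article (Flatten, one hidden Dense layer, Dropout, 10-class output). The parameter count is only a rough proxy for training cost, not a timing measurement.

```python
def mlp_param_count(hidden_units, n_inputs=28 * 28, n_classes=10):
    """Trainable parameters of Flatten -> Dense(hidden_units) -> Dropout -> Dense(n_classes)."""
    hidden = n_inputs * hidden_units + hidden_units  # weights + biases
    output = hidden_units * n_classes + n_classes    # weights + biases
    return hidden + output  # Flatten and Dropout add no parameters


baseline_params = mlp_param_count(512)
tuned_params = mlp_param_count(224)
print(baseline_params, tuned_params)  # 407050 178090
```

The hypertuned model has well under half the parameters of the baseline, which is consistent with it being cheaper to train.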

End Notes

Thanks for reading this article. I hope you found it helpful and that you will use Keras Tuner in your own neural networks to get better models.

About the Author

Ayush Singh

I am a 14-year-old learner and machine learning and deep learning practitioner, working in the domains of Natural Language Processing, Generative Adversarial Networks, and Computer Vision. I also make videos on machine learning, deep learning, and GANs on my YouTube channel Newera. I am a competitive coder as well, still practicing, and a passionate learner and educator. You can connect with me on LinkedIn:- Ayush Singh
