Towards Sustainable AI: Effective LLM Compression Techniques

About

Imagine a world where AI is as eco-friendly as it is intelligent. This session is for anyone who wants to make artificial intelligence more practical and less expensive. As the computational demands of Large Language Models (LLMs) continue to grow, the challenges of deploying them, in cost, energy consumption, and hardware requirements, become increasingly significant. This session addresses these challenges by exploring a range of effective model compression techniques that reduce the size and computational overhead of LLMs while preserving as much of their performance as possible.

In this presentation, we will cover the following high-level concepts of LLM compression:

1. Pruning: Removing redundant or less important parameters from the model.

2. Knowledge Distillation: Training a smaller model (student) to replicate the behavior of a larger model (teacher).

3. Low-Rank Factorization: Decomposing large weight matrices into products of smaller matrices, reducing the number of parameters and computations.

4. Quantization: Reducing the numerical precision of the model parameters, for example from 32-bit floating point to 8-bit integers.
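
To make the four ideas above concrete, here is a minimal NumPy sketch of each one applied to a toy weight matrix. It is an illustration under stated assumptions, not the session's own material: the matrix `W`, the function names, and the chosen sparsity, rank, and temperature are all hypothetical, and real LLM toolchains use more elaborate variants of each step.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical 64x64 weight matrix standing in for one layer of a model.
W = rng.normal(size=(64, 64)).astype(np.float32)

def magnitude_prune(w, sparsity):
    """Pruning: zero out the `sparsity` fraction of smallest-magnitude weights."""
    k = int(w.size * sparsity)
    if k == 0:
        return w.copy()
    # k-th smallest absolute value serves as the cut-off.
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]
    return np.where(np.abs(w) <= threshold, 0.0, w).astype(w.dtype)

def softmax(z, temperature=1.0):
    z = z / temperature
    z = z - z.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Knowledge distillation: KL divergence between softened teacher and
    student output distributions (one common form of the loss)."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return float(np.mean(np.sum(p * (np.log(p) - np.log(q)), axis=-1)))

def low_rank_factorize(w, rank):
    """Low-rank factorization: approximate w as a @ b via truncated SVD."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    a = u[:, :rank] * s[:rank]   # shape (rows, rank)
    b = vt[:rank, :]             # shape (rank, cols)
    return a, b

def quantize_int8(w):
    """Quantization: symmetric per-tensor int8 (assumes w is not all zeros)."""
    scale = float(np.abs(w).max()) / 127.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    return q.astype(np.float32) * scale

pruned = magnitude_prune(W, sparsity=0.5)
a, b = low_rank_factorize(W, rank=8)
q, scale = quantize_int8(W)

teacher_logits = rng.normal(size=(4, 10))
student_logits = rng.normal(size=(4, 10))
kd = distillation_loss(student_logits, teacher_logits)

print("fraction of zeroed weights:", float(np.mean(pruned == 0)))
print("low-rank parameter count:", a.size + b.size, "vs", W.size)
print("max int8 round-trip error:", float(np.max(np.abs(dequantize(q, scale) - W))))
print("distillation loss:", kd)
```

Each function trades some accuracy for a smaller footprint: pruning introduces zeros that sparse formats can skip, the two low-rank factors hold fewer numbers than the original matrix, and int8 storage uses a quarter of the bytes of float32. The distillation loss is what a smaller student model would minimize during training to mimic the teacher.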

Join us to explore simple, effective ways to reduce the size of these models using techniques like pruning, quantization, knowledge distillation, and low-rank factorization. We'll break down each method in easy-to-understand terms and infographics, explaining what these techniques do, why they are beneficial, which categories fall under each of them, and how they can be applied in real-life scenarios.

Key Takeaways:

- Understand various model compression techniques and their applications.

- Gain practical insights into applying these techniques to real-world scenarios.

- Recognize the importance of energy efficiency and sustainability in AI practices through model compression.

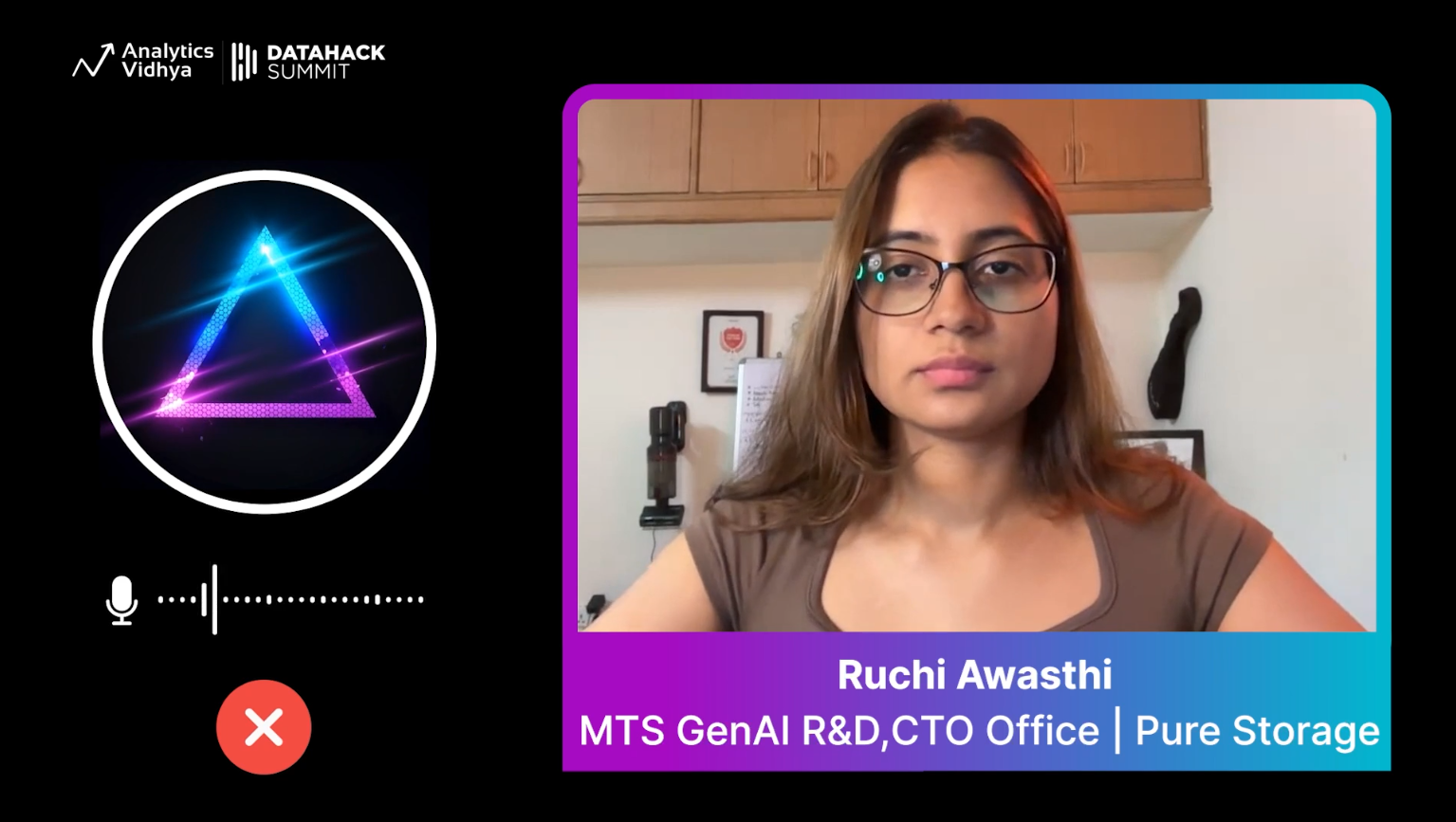

Speaker