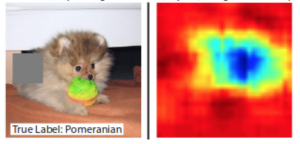

Deep learning models have long been regarded as black boxes. They are harder to interpret than traditional machine learning models, so there is often hesitation about adopting them in user-facing applications, especially medical and financial ones. Researchers have, however, developed techniques such as activation maps and saliency maps that are being used successfully to interpret computer vision models.

Interpretability of NLP models, by contrast, is something many practitioners still struggle with. Recent progress in NLP, particularly the advent of attention-based models, has made it easier to interpret and understand a model's decisions. This hack session will cover techniques for interpreting the decisions of RNNs, LSTMs, and Transformer models, as well as model-agnostic interpretation techniques.

Key Takeaways for the Audience:

- Learn how to use attention models and layers for interpretation

- Learn model-agnostic techniques for interpretation of NLP models
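
To give a flavor of what "model-agnostic" means here, the following is a minimal sketch of leave-one-out (occlusion) token importance, one common technique of this kind. It is not taken from the session itself. The names `occlusion_importance`, `toy_predict`, and `TOY_WEIGHTS` are hypothetical, and the toy classifier stands in for any real model that maps a list of tokens to a probability.

```python
import math
from typing import Callable, Dict, List, Tuple

def occlusion_importance(
    tokens: List[str],
    predict: Callable[[List[str]], float],
) -> List[Tuple[str, float]]:
    """Score each token by how much the prediction drops when it is removed.

    `predict` is treated as a black box, so this works for any model.
    """
    baseline = predict(tokens)
    scores = []
    for i, tok in enumerate(tokens):
        reduced = tokens[:i] + tokens[i + 1:]  # drop the i-th token
        scores.append((tok, baseline - predict(reduced)))
    return scores

# Hypothetical toy sentiment model (assumption, for illustration only):
# a logistic function over hand-picked word weights.
TOY_WEIGHTS: Dict[str, float] = {"great": 2.0, "terrible": -2.0, "not": -0.5}

def toy_predict(tokens: List[str]) -> float:
    """Return a pseudo-probability of positive sentiment."""
    z = sum(TOY_WEIGHTS.get(t, 0.0) for t in tokens)
    return 1.0 / (1.0 + math.exp(-z))

if __name__ == "__main__":
    sentence = ["the", "movie", "was", "great"]
    for token, score in occlusion_importance(sentence, toy_predict):
        print(f"{token:>6}: {score:+.3f}")
```

Because the function only calls `predict`, the same loop could in principle wrap an RNN, LSTM, or Transformer classifier; the cost is one extra forward pass per token.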

Check out the video below to learn more about the session.