Dynamic SQL Queries to Transform Data

This article was published as a part of the Data Science Blogathon.

“Preponderance data opens doorways to complex and Avant analytics.”

Introduction to SQL Queries

Introduction to SQL Queries

Data is the premium product of the 21st century. Enterprises are focused on data stockpiling because more data leads to meticulous and calculated decision-making and opens more doors for business transition. Heavy Accumulation of data demands excessive storage space and analytical and processing options for real-time decision-making. Traditional in-house infrastructure and storage units are no longer viable for modern-day Big data requirements. Cloud service providers are equipping an ample array of on-demand computational and storage solutions which fulfill the Big data requirements by eliminating infrastructure overhead.

After all, we live in a world where everything is real-time. Without calculated architectural design, excessive data proliferation can lead to a mountain of unmanageable data. With an increase in the amount of data need to analyze data to derive business insights will skyrocket. Enterprises cannot always afford to process the data at a later moment in time, assuming that they will attain the same business value.

Timeboxed analytical requirements often make us hardcode arguments/values in our queries. A minor code change in a complex codebase could lead to delayed deliverables.

For simplicity and easy integration options, let’s use snowflake’s Data warehouse to illustrate our problem. This example plans to show you how we can dynamically pass values to SQL queries using the snowflake python connector during run time to make our code more flexible and manageable.

What is Snowflake? How to Install Snowflake Connector?

Snowflake is the modern data warehouse with on-demand storage, sophisticated analytical, and database operations at an expeditious latency. Here is the link to install the snowflake connector: https://docs.snowflake.com/en/user-guide/python-connector-install.html#step-1-install-the-connector

Let’s get straight into it and first install version-specific libraries for the connector

pip install -r https://raw.githubusercontent.com/snowflakedb/snowflake-connector-python/v2.7.6/tested_requirements/requirements_38.reqs

now run pip install to install the desired version of the snowflake connector

pip install snowflake-connector-python==

Note: the Python connector provides integration to use the pandas’ library checkout installation docs.

Configuring Snowflake Connection

To start querying our data, first, we need to connect to the cloud data warehouse. In our case, we need to connect to the snowflake.

To ensure we are connecting to the database and querying desired tables by establishing a secure connection, snowflake has some guidelines.

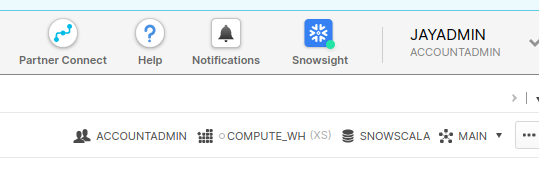

4 out of 6 parameters are found on the home page of the Snowflake,

The declaration of the connection will be equivalent to the below python variable.

Please pass relevant values to establish a connection.

conn = snowflake.connect(

user=sfUser,

password=sfPwd,

account=sf_account,

warehouse=sf_warehouse,

database=sf_database,

schema=sf_schema

)

We now have established a database connection successfully. Let’s declare our cursor and start querying.

Define Cursor Object

The cursor is a python class instance heavily used to invoke python SQL methods to fetch, query, and modify tables.

A cursor is created by calling the cursor() method of the connection

cur = conn.cursor()

Throughout the lifetime of a session, the cursor is bound to the provided connection specifications.

Assigning SQL Query to a Python Variable

The cursor connection will accept the SQL query in the form of a python string variable.

query = 'select column1, column2 from '

'test_table WHERE {0} = {1} '

Here {0} and {1} are string interpolators allowing us to pass values dynamically during run time. Dynamic values can be substituted directly to {0} and {1} by their names, such as {column_name} and {value_holder}. But it is often beneficial to avoid call by name approach.

Defining configurations / dynamic values

We declare the dynamic values we intend to pass to our SQL queries during runtime.

column_name = 'Site' value_holder = 'Analytics_vidya'

Formatting SQL query using Python’s repr

repr() is a Python built-in function that returns a printable representation of a specified object.

We will format the query and pass our dynamic values as a part of the formatting. The order of the arguments is sequential.

The first element will be at index 0 and so on.

final_query = query.format(repr(column_name), repr(value_holder))

Executing the final query

The cursor object offers a method to execute our formatted queries as below.

cur.execute(final_query)

Execute method will utilize the connection details and fetch the records from the specified table that meeds the dynamic conditions.

Points to Note

1. A query gets executed if we explicitly pass it to the cursor’s execute method.

cur.execute('select * from test_table')

2. We can use these dynamic calls through conditional statements.

if condition == 'N':

final_query = query.format('static_column_name', repr(value_holder)))

else:

final_query = query.format(repr(column_name), 'static_value_holder')

3. We can pass method/function arguments as dynamic values.

4. we can reuse the dynamic variables in multiple SQL statements

5. The execute method of the cursor is equipped with other operations like close(), fetchall(), executemany(), etc.

Advantages of this approach

- This approach helps in tackling SQL injection attacks.

- Code refactoring, Code reusability, and maintainability will increase drastically.

- Programming language Support: cloud data warehouses like Snowflake and AWS redshift provide multiple programming language support like Python.

Conclusion to SQL Queries

While building data-intensive applications, data pipelines, and Big data jobs, it is crucial to focus on efficiently designing our queries and jobs to attain dynamic essence and process our data transformations on the go.

Cloud data warehouses provide on-demand resource allocation, auto-Scaling, time travel for data recovery, and visualizations with inbuilt features like Materialized views, REGEX flavors, and flattening options to tackle complex SQL operations.

With the rise of python, we now have a python connector integration option for many popular database engines like Postgres, Cassandra, and cloud data warehouses.

The motive behind this post is to show that one does not need to run a search through the entire codebase to make a small change. Dynamically maintaining configurations and arguments separately and implementing them to your problems helps tackle countless issues during production deployment.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.