Introduction

Most of you would have heard exciting stuff happening using deep learning. You would have also heard that Deep Learning requires a lot of hardware. I have seen people training a simple deep learning model for days on their laptops (typically without GPUs) which leads to an impression that Deep Learning requires big systems to run execute.

However, this is only partly true and this creates a myth around deep learning which creates a roadblock for beginners. Numerous people have asked me as to what kind of hardware would be better for doing deep learning. With this article, I hope to answer them.

Note: I assume that you have a fundamental knowledge of deep learning concepts. If not, you should go through this article.

Table of Contents

- Fact #101: DL requires

a lot ofhardware - Training a deep learning model

- How to train your model faster?

- CPU vs GPU

- Brief History of GPUs – how did we reach here

- Which GPU to use today?

- The future looks exciting

Fact #101: Deep Learning requires a lot of hardware

When I first got introduced with deep learning, I thought that deep learning necessarily needs large Datacenter to run on, and “deep learning experts” would sit in their control rooms to operate these systems.

This is because every book that I referred or every talk that I heard, the author or speaker always say that deep learning requires a lot of computational power to run on. But when I built my first deep learning model on my meager machine, I felt relieved! I don’t have to take over Google to be a deep learning expert 😀

This is a common misconception that every beginner faces when diving into deep learning. Although, it is true that deep learning needs considerable hardware to run efficiently, you don’t need it to be infinite to do your task. You can even run deep learning models on your laptop!

Just a small disclaimer; the smaller your system, more is the time you will need to get a trained model which performs good enough. You may basically look like this:

Let’s just ask ourselves a simple question; why do we need more hardware for deep learning?

The answer is simple, deep learning is an algorithm – a software construct. We define an artificial neural network in our favorite programming language which would then be converted into a set of commands that run on the computer.

If you would have to guess which components of neural network do you think would require intense hardware resource, what would be your answer?

A few candidates from top of my mind are:

- Preprocessing input data

- Training the deep learning model

- Storing the trained deep learning model

- Deployment of the model

Among all these, training the deep learning model is the most intensive task. Lets see in detail why is this so.

Training a deep learning model

When you train a deep learning model, two main operations are performed:

- Forward Pass

- Backward Pass

In forward pass, input is passed through the neural network and after processing the input, an output is generated. Whereas in backward pass, we update the weights of neural network on the basis of error we get in forward pass.

Both of these operations are essentially matrix multiplications. A simple matrix multiplication can be represented by the image below

Here, we can see that each element in one row of first array is multiplied with one column of second array. So in a neural network, we can consider first array as input to the neural network, and the second array can be considered as weights of the network.

This seems to be a simple task. Now just to give you a sense of what kind of scale deep learning – VGG16 (a convolutional neural network of 16 hidden layers which is frequently used in deep learning applications) has ~140 million parameters; aka weights and biases. Now think of all the matrix multiplications you would have to do to pass just one input to this network! It would take years to train this kind of systems if we take traditional approaches.

How to train your neural net faster?

We saw that the computationally intensive part of neural network is made up of multiple matrix multiplications. So how can we make it faster?

We can simply do this by doing all the operations at the same time instead of doing it one after the other. This is in a nutshell why we use GPU (graphics processing units) instead of a CPU (central processing unit) for training a neural network.

To give you a bit of an intuition, we go back to history when we proved GPUs were better than CPUs for the task.

Before the boom of Deep learning, Google had a extremely powerful system to do their processing, which they had specially built for training huge nets. This system was monstrous and was of $5 billion total cost, with multiple clusters of CPUs.

Now researchers at Stanford built the same system in terms of computation to train their deep nets using GPU. And guess what; they reduced the costs to just $33K ! This system was built using GPUs, and it gave the same processing power as Google’s system. Pretty impressive right?

| Stanford | ||

| Number of cores | 1K CPUs = 16K cores | 3GPUs = 18K cores |

| Cost | $5B | $33K |

| Training time | week | week |

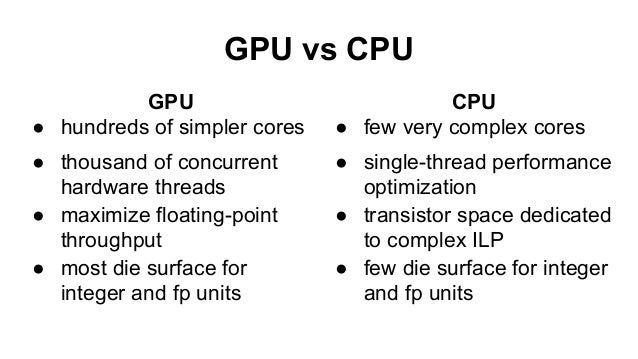

We can see that GPUs rule. But what exactly is the difference between a CPU and a GPU?

Difference between CPU and GPU

To understand the difference, we take a classic analogy which explains the difference intuitively.

Suppose you have to transfer goods from one place to the other. You have an option to choose between a Ferrari and a freight truck.

Ferrari would be extremely fast and would help you transfer a batch of goods in no time. But the amount of goods you can carry is small, and usage of fuel would be very high.

A freight truck would be slow and would take a lot of time to transfer goods. But the amount of goods it can carry is larger in comparison to Ferrari. Also, it is more fuel efficient so usage is lower.

So which would you chose for your work?

Obviously, you would first see what the task is; if you have to pick up your girlfriend urgently, you would definitely choose a Ferrari over a freight truck. But if you are moving your home, you would use a freight truck to transfer the furniture.

Here’s how you would technically differentiate the two:

Here’s another video which would make your concept even clearer.

Note: GPU is mostly used for gaming and doing complex simulations. These tasks and mainly graphics computations, and so GPU is graphics processing unit. If GPU is used for non-graphical processing, they are termed as GPGPUs – general purpose graphics processing unit

Brief History of GPUs – how did we reach here

Now, you might be asking this question that why are GPUs so much rage right now. Let us travel through a brief history of development of GPUs

Basically a GPGPU is a parallel programming setup involving GPUs & CPUs which can process & analyze data in a similar way to image or other graphic form. GPGPUs were created for better and more general graphic processing, but were later found to fit scientific computing well. This is because most of the graphic processing involves applying operations on large matrices.

The use of GPGPUs for scientific computing started some time back in 2001 with implementation of Matrix multiplication. One of the first common algorithm to be implemented on GPU in faster manner was LU factorization in 2005. But, at this time researchers had to code every algorithm on a GPU and had to understand low level graphic processing.

In 2006, Nvidia came out with a high level language CUDA, which helps you write programs from graphic processors in a high level language. This was probably one of the most significant change in they way researchers interacted with GPUs

Which GPU to use today?

Here I will quickly give a few know-hows before you go on to buy a GPU for deep learning.

Scenario 1:

The first thing you should determine is what kind of resource does your tasks require. If your tasks are going to be small or can fit in complex sequential processing, you don’t need a big system to work on. You could even skip the use of GPUs altogether. So, if you are planning to work mainly on “other” ML areas / algorithms, you don’t necessarily need a GPU.

Scenario 2:

If your task is a bit intensive, and has a handle-able data, a reasonable GPU would be a better choice for you. I generally use my laptop to work on toy problems, which has a slightly out of date GPU (a 2GB Nvidia GT 740M). Having a laptop with GPU helps me run things wherever I go. There are a few high end (and expectedly heavy) laptops with Nvidia GTX 1080 (a 8 GB VRAM) which you can check out at the extreme.

Scenario 3:

If you are regularly working on complex problems or are a company which leverages deep learning, you would probably be better off building a deep learning system or use a cloud service like AWS or FloydHub. We at Analytics Vidhya built a deep learning system for ourselves, for which we shared our specifications. Here’s the article.

Scenario 4:

If you are Google, you probably need another datacenter to sustain! Jokes aside, if your task is of a bigger scale than usual, and you have enough pocket money to cover up the costs; you can opt for a GPU cluster and do multi-GPU computing. There are also some options which may be available in the near future – like TPUs and faster FPGAs, which would make your life easier.

The future looks exciting

As mentioned above, there is a lot of research and active work happening to think of ways to accelerate computing. Google is expected to come out with Tensorflow Processing Units (TPUs) later this year, which promises an acceleration over and above current GPUs.

Similarly Intel is working on creating faster FPGAs, which may provide higher flexibility in coming days. In addition, the offerings from Cloud service providers (e.g. AWS) is also increasing. We will see each of them emerge in coming months.

End Notes

In this article, we covered the motivations of using a GPU for deep learning applications and saw how to choose them for your task. I hope this article was helpful to you. If you have any specific questions regarding the topic, feel free to comment below or ask them on discussion portal.

Thanks for the article! It could be interesting one post about training a model on AWS with python. I'm always training models on my laptop, but sometimes is exhausted.

Thanks Pedro for the suggestion

Simple explanation! Easy to follow and understand without overwhelming the reader! Appreciate it!

Thanks neeraj!

Additionally I am using my Laptop for DL learning purpose which does not have GPU..! for learning the concept and trying things - like Keras with Theano, you don't need GPU.. Yes some warnings will popup but still you can ahead and execute your code/module and learn..

Very true

Can you please help me in deep learning for weed recognition....please please help me.

very nice explanation with so intutive explanation. AV has been a terrific source of knowledge . Keep going

Thanks Jatin!

Good article. I wanted to know if GPUs are useful only for Deep Learnings or they can be useful for other modeling techniques such as simple regression, or GBM/XGBoost, randomforest etc. Also, if I have a GPU on my laptop, what do I need to do in my code to utilise the GPU. For eg, suppose I have a randomforest code in R with me and now I want to run the code using GPU for this. What kind of changes, if any would I need to make in my code? Thanks!

Thanks akrsrivastava! Practically speaking, any algorithm which can be parallelized can be run on GPUs. For example, you can have a random forest implemented on GPU. On the flipside, that implementation has to be written in a specific language that can be compiled by GPU. Most of the libraries abstract this, and give an API like interface for you to run your code on. Take an example of tensorflow - so tensorflow for GPU has been written to be compatible with NVCC, which is a compiler for NVidia GPU. When you write code, the interface seems similar to what you would see for a CPU code, but in the backend the actual code generated is very different for both. Simply speaking, use a library in R/Python which can run algorithms on GPU

very nice article

Thanks sandip

can amd radeon GPU be used for training?

Although there are efforts for supporting other GPU vendors for deep learning, they are still not as developed as the libraries for Nvidia. So answer your question, yes you can use AMD but the support is less

Hi, How does GPU computing vs distributed computing compare for deep learning?

I would still prefer GPU's. Just consider the scale what GPU can give you. An Nvidia TitanX has 3072 cores whereas a general Intel i7 has 4 cores. So you would need at least 250 i7 machines to match the power (considering that one i7 core is more powerful than one GPU core)

"We at Analytics Vidhya built a deep learning system for ourselves" So Basically Yes, you DO need large compute resources to train anything but trivial training and test cases :( This is my real worry, that the next generation of ML will ONLY be available to those who own, or have access to the data, and the resources to wield significant compute to that data. The ML wave will generate a bigger digital divide between the powerful, and those unable to afford those compute resources. Back to the old 'MainFrame' days.

Hi! Interesting insights but I beg to differ. As the requirements increase so the cost of resources will decrease. After all, the producers would want that their consumers to purchase right? Consider this, in the older days, Personal computer was a big thing and not all could afford. And then mobiles came, which now acts as a proxy for PC with almost the same compute.

Hi! You mentioned AWS and Floydhub as potential solutions for fast training or training more complex models. At GoodAILab, we are building a platform called TensorPort that basically gives you best of both worlds. AWS is a pain to setup, and FloydHub has limited capabilities when it comes to scaling to more than one instance / distributed computing. We can deploy a Cluster of GPU machines in second, without any infra setup, but we still have a very user friendly and intuitive interface. Check it out: www.tensorport.com

Thanks Malo for sharing this with us

Can NVIDIA Quadro M2000 graphics cards be used for deep learning? If yes, how will be the performance?

Hi, I suppose it can be used. I personally use a 2GB Nvidia GTX 720, and its ok for smaller toy projects. But I prefer jumping on to 12GB Nvidia Titax X of AV for serious experiments

Does higher CPU cores or frequency matters? If so, which is more important?

Both of these are equally important

Hi, I am working on non linear regression using tensor flow and neural network, do you think that I need a gpu for that?

Well that will depend upon the kind of dataset you are working on. If you have a bigger amount of dataset, you should definitely make a switch to GPUs

To start with, I believe you must question yourself if you really need a GPU. It would be interesting to know what project experience can the author cite and an explanation of why he thinks GPU is necessary for that use-case, this might help people understand better the need of GPU. If you're buying a GPU to classify cats and dogs in image then it's simply a waste of resources. Undoubtedly the GPU's are very efficient in some systems that require strong computing power, but simply throwing the 'cool' terms have become a trend these days, unfortunately.

Thanks Faizan Shaikh very nice article.

nice work good gooooooooood so nice