
Introduction

Build a deep learning image classification model in a few minutes, without the hassle of lengthy training and without a powerful machine. I’ve heard the opposite countless times from aspiring data scientists who shy away from building deep learning models on their own machines.

You don’t need to be working for Google or other big tech firms to work on deep learning datasets! It is entirely possible to build your own neural network from the ground up in a matter of minutes without needing to lease out Google’s servers. Fast.ai’s students designed a model on the Imagenet dataset in 18 minutes – and I will showcase something similar in this article, focusing on image classification.

Deep learning is a vast field so we’ll narrow our focus a bit and take up the challenge of solving an Image Classification project. Additionally, we’ll be using a very simple deep learning architecture to achieve a pretty impressive accuracy score.

You can consider the Python code we’ll see in this article as a benchmark for building Image Classification models. Once you get a good grasp on the concept, go ahead and play around with the code, participate in competitions and climb up the leaderboard!

If you’re new to deep learning and are fascinated by the field of computer vision (who isn’t?!), do check out the ‘Computer Vision using Deep Learning‘ course. It’s a comprehensive introduction to this wonderful field and will set you up for what is inevitably going to be a huge job market in the near future.

Project to apply Image Classification

Problem Statement

More than 25% of the entire revenue in E-Commerce is attributed to apparel & accessories. A major problem they face is categorizing these apparels from just the images especially when the categories provided by the brands are inconsistent. This poses an interesting computer vision problem that has caught the eyes of several deep learning researchers. Fashion MNIST is a drop-in replacement for the very well known, machine learning hello world – MNIST dataset which can be checked out at ‘Identify the digits’ practice problem. Instead of digits, the images show a type of apparel e.g. T-shirt, trousers, bag, etc. The dataset used in this problem was created by Zalando Research.

Table of Contents

- Introduction

- What Is Image Classification?

- Setting Up the Structure of Our Image Data

- Breaking Down the Process of Model Building

- Setting Up the Problem Statement and Understanding the Data

- Steps to Build Our Model

- How to build Image Classification Model?

- New Practice Problem

- Conclusion

- Frequently Asked Questions

What Is Image Classification?

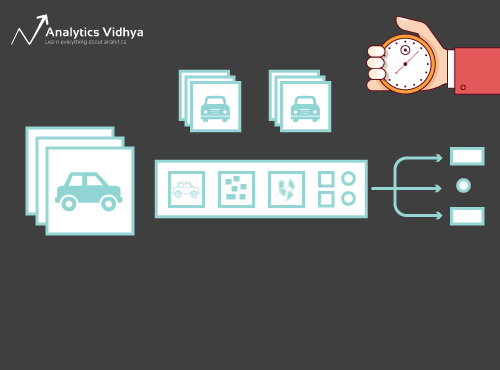

Consider the below image:

You will have instantly recognized it – it’s a (swanky) car. Take a step back and analyze how you came to this conclusion – you were shown an image and you classified the class it belonged to (a car, in this instance). And that, in a nutshell, is what image classification is all about.

There are potentially n number of categories in which a given image can be classified. Manually checking and classifying images is a very tedious process. The task becomes near impossible when we’re faced with a massive number of images, say 10,000 or even 100,000. How useful would it be if we could automate this entire process and quickly label images per their corresponding class? This is where deep learning models for image classification come into play. They offer a solution to this challenge by leveraging advanced algorithms to automatically categorize images based on their content.

Self-driving cars are a great example of where image classification is used in the real world. To enable autonomous driving, we can build deep learning models for image classification that recognize various objects on the road, such as vehicles, people, and other moving objects. We’ll see a couple more use cases later in this article, but there are plenty more applications around us. Use the comments section below the article to let me know what potential use cases you can come up with!

Now that we have a handle on our subject matter, let’s dive into how an image classification model is built, what are the prerequisites for it, and how it can be implemented in Python.

Setting Up the Structure of Our Image Data

Our data needs to be in a particular format in order to solve an image classification problem. We will see this in action in a couple of sections but just keep these pointers in mind till we get there.

You should have 2 folders, one for the train set and the other for the test set. In the training set, you will have a .csv file and an image folder:

- The .csv file contains the names of all the training images and their corresponding true labels

- The image folder has all the training images.

The .csv file in our test set is different from the one present in the training set. This test set .csv file contains the names of all the test images, but they do not have any corresponding labels. Can you guess why? Our model will be trained on the images present in the training set, and the label predictions will happen on the test set images.

If your data is not in the format described above, you will need to convert it accordingly (otherwise the predictions will be awry and fairly useless).
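Before moving on, it can help to confirm that every image named in the .csv file actually exists in the image folder. The sketch below is one possible check using only the standard library. The column name `id` and the `.png` extension mirror the dataset used later in this article; the helper name `find_missing_images` and the throwaway demo folder are made up for illustration.

```python
import csv
import os
import tempfile


def find_missing_images(csv_path, image_dir, id_column="id", extension=".png"):
    """Return the ids listed in the CSV that have no image file in image_dir."""
    missing = []
    with open(csv_path, newline="") as handle:
        for row in csv.DictReader(handle):
            image_path = os.path.join(image_dir, row[id_column] + extension)
            if not os.path.isfile(image_path):
                missing.append(row[id_column])
    return missing


# Build a tiny throwaway "train" folder to demonstrate the check.
demo_root = tempfile.mkdtemp()
demo_images = os.path.join(demo_root, "train")
os.makedirs(demo_images)

demo_csv = os.path.join(demo_root, "train.csv")
with open(demo_csv, "w", newline="") as handle:
    writer = csv.writer(handle)
    writer.writerow(["id", "label"])
    writer.writerows([[1, 9], [2, 0], [3, 3]])

# Only images 1 and 2 exist on disk; image 3 is deliberately left out.
for image_id in (1, 2):
    open(os.path.join(demo_images, f"{image_id}.png"), "wb").close()

missing_ids = find_missing_images(demo_csv, demo_images)
print(missing_ids)
```

An empty list means the .csv file and the image folder agree; anything else points to files you need to fix before training.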

Breaking Down the Process of Model Building

Before we deep dive into the Python code, let’s take a moment to understand how an image classification model is typically designed. We can divide this process broadly into 4 stages. Each stage requires a certain amount of time to execute:

- Loading and pre-processing Data – 30% time

- Defining Model architecture – 10% time

- Training the model – 50% time

- Estimation of performance – 10% time

Let me explain each of the above steps in a bit more detail. This section is crucial because not every model is built in the first go. You will need to go back after each iteration, fine-tune your steps, and run it again. Having a solid understanding of the underlying concepts will go a long way in accelerating the entire process.

Stage 1: Loading and pre-processing the data

Data is gold as far as deep learning models are concerned. Your image classification model has a far better chance of performing well if you have a good amount of images in the training set. Also, the shape of the data varies according to the architecture/framework that we use.

Hence the need for the critical data pre-processing step (an eternally important step in any project). I highly recommend going through the ‘Basics of Image Processing in Python’ to understand more about how pre-processing works with image data.
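To make the idea of "shape of the data" concrete, here is a minimal NumPy sketch of the two pre-processing operations this article relies on for grayscale images: scaling pixel values to the 0 to 1 range and adding a channel axis. The function name `preprocess_grayscale` and the random demo batch are illustrative assumptions, not part of any library.

```python
import numpy as np


def preprocess_grayscale(images):
    """Scale uint8 pixels to [0, 1] and add a trailing channel axis."""
    images = np.asarray(images, dtype=np.float32) / 255.0
    return images[..., np.newaxis]


# A made-up batch of five 28 x 28 grayscale images with values in 0..255.
rng = np.random.default_rng(0)
raw_batch = rng.integers(0, 256, size=(5, 28, 28), dtype=np.uint8)
raw_batch[0, 0, 0] = 255  # force one fully white pixel for the demo

batch = preprocess_grayscale(raw_batch)
print(batch.shape, batch.dtype, float(batch.min()), float(batch.max()))
```

The resulting shape (number of images, height, width, channels) is the layout a Keras convolutional layer expects by default.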

But we are not quite there yet. In order to see how our model performs on unseen data (and before exposing it to the test set), we need to create a validation set. This is done by partitioning the training set data.

In short, we train the model on the training data and validate it on the validation data. Once we are satisfied with the model’s performance on the validation set, we can use it for making predictions on the test data.
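Partitioning the training set can be sketched in a few lines of NumPy. This is only an illustration of the idea (Step 4 later in the article uses scikit-learn’s `train_test_split` instead); the function name `split_train_validation` and the toy arrays are assumptions.

```python
import numpy as np


def split_train_validation(X, y, val_fraction=0.2, seed=42):
    """Shuffle the sample indices once, then carve off a validation slice."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    n_val = int(round(len(X) * val_fraction))
    val_idx, train_idx = order[:n_val], order[n_val:]
    return X[train_idx], X[val_idx], y[train_idx], y[val_idx]


# Toy data: 100 "images" with 4 features each, labelled 0..99.
X_demo = np.arange(100 * 4, dtype=np.float32).reshape(100, 4)
y_demo = np.arange(100)

X_tr, X_val, y_tr, y_val = split_train_validation(X_demo, y_demo)
print(X_tr.shape, X_val.shape)
```

Shuffling before splitting matters: if the .csv file happens to be sorted by class, an unshuffled split could leave whole classes out of the validation set.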

Time required for this step: We require around 2-3 minutes for this task.

Stage 2: Defining the model’s architecture

This is another crucial step in our deep learning model building process. We have to define how our model will look and that requires answering questions like:

- How many convolutional layers do we want?

- What should be the activation function for each layer?

- How many hidden units should each layer have?

And many more. These are essentially the hyperparameters of the model which play a MASSIVE part in deciding how good the predictions will be.

How do we decide these values? Excellent question! A good idea is to pick these values based on existing research/studies. Another idea is to keep experimenting with the values until you find the best match but this can be quite a time consuming process.
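One way to get a feel for these choices is to work out what they cost. The sketch below does the arithmetic for the layer sizes used in Step 5 of this article (two 3 x 3 convolutions with 32 and 64 filters, 2 x 2 max pooling, a 128-unit dense layer, and a 10-unit output). It assumes Keras’s default "valid" padding and stride 1; the helper names are made up for illustration.

```python
def conv2d_output(height, width, in_channels, filters, kernel=3):
    """Output shape and parameter count of a 'valid' convolution, stride 1."""
    out_h, out_w = height - kernel + 1, width - kernel + 1
    params = kernel * kernel * in_channels * filters + filters  # weights + biases
    return (out_h, out_w, filters), params


def dense_params(inputs, units):
    """Parameter count of a fully connected layer: weights + biases."""
    return inputs * units + units


shape, conv1_params = conv2d_output(28, 28, 1, 32)   # -> (26, 26, 32)
shape, conv2_params = conv2d_output(*shape, 64)      # -> (24, 24, 64)
pooled = (shape[0] // 2, shape[1] // 2, shape[2])    # 2 x 2 max pooling
flattened = pooled[0] * pooled[1] * pooled[2]
hidden_params = dense_params(flattened, 128)
output_params = dense_params(128, 10)

total_params = conv1_params + conv2_params + hidden_params + output_params
print(pooled, flattened, total_params)
```

Almost all of the parameters sit in the first dense layer, which is why changing the number of hidden units or adding pooling has such a large effect on model size.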

Time required for this step: It should take around 1 minute to define the architecture of the model.

Stage 3: Training the model

For training the model, we require:

- Training images and their corresponding true labels

- Validation images and their corresponding true labels (we use these labels only to validate the model and not during the training phase)

We also define the number of epochs in this step. For starters, we will run the model for 10 epochs (you can change the number of epochs later).

Time required for this step: Since training requires the model to learn structures, we need around 5 minutes to go through this step.
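To see what 10 epochs means in terms of work done, here is a small arithmetic sketch. It uses the figures from this article (60,000 labelled images, 20% held out for validation) and assumes Keras’s default batch size of 32, since the training call later on does not set one.

```python
import math

total_labelled_images = 60_000
validation_percent = 20
batch_size = 32  # Keras default when model.fit() is not given a batch_size
epochs = 10

validation_images = total_labelled_images * validation_percent // 100
training_images = total_labelled_images - validation_images

steps_per_epoch = math.ceil(training_images / batch_size)
total_weight_updates = steps_per_epoch * epochs

print(training_images, steps_per_epoch, total_weight_updates)
```

Doubling the batch size halves the number of updates per epoch, which is one of the knobs to try if training is slower than you would like.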

And now time to make predictions!

Stage 4: Estimating the model’s performance

Finally, we load the test data (images) and go through the pre-processing step here as well. We then predict the classes for these images using the trained model.

Time required for this step: ~ 1 minute.
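Because the test images are unlabelled, any accuracy figure you compute yourself has to come from the validation set. The NumPy sketch below shows overall and per-class accuracy on a handful of made-up labels; the function names are illustrative assumptions rather than library calls.

```python
import numpy as np


def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the true labels."""
    true_labels = np.asarray(true_labels)
    predicted_labels = np.asarray(predicted_labels)
    return float(np.mean(true_labels == predicted_labels))


def per_class_accuracy(true_labels, predicted_labels, num_classes):
    """Accuracy computed separately over the samples of each true class."""
    true_labels = np.asarray(true_labels)
    predicted_labels = np.asarray(predicted_labels)
    scores = {}
    for cls in range(num_classes):
        mask = true_labels == cls
        if mask.any():
            scores[cls] = float(np.mean(predicted_labels[mask] == cls))
    return scores


# Made-up validation labels and predictions for a 3-class problem.
y_true = [0, 1, 2, 2, 1, 0, 2, 1]
y_pred = [0, 1, 2, 1, 1, 0, 2, 0]

overall = accuracy(y_true, y_pred)
by_class = per_class_accuracy(y_true, y_pred, num_classes=3)
print(overall, by_class)
```

Looking at accuracy per class often reveals which categories the model confuses, which a single overall number hides.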

Setting Up the Problem Statement and Understanding the Data

We will be taking on a fascinating challenge to grasp image classification. The task involves constructing a model capable of categorizing a set of images based on apparel types like shirts, trousers, shoes, socks, etc. This particular problem is a common challenge for many e-commerce retailers, adding an extra layer of intrigue to this computer vision problem.

This challenge is called ‘Identify the Apparels’ and is one of the practice problems we have on our DataHack platform. You will have to register and download the dataset from the above link.

We have a total of 70,000 images (28 x 28 dimension), out of which 60,000 are from the training set and 10,000 from the test one. The training images are pre-labelled according to the apparel type with 10 total classes. The test images are, of course, not labelled. The challenge is to identify the type of apparel present in all the test images.
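The labels in the .csv file are integers from 0 to 9. The sketch below maps them to readable names using the class list published with the original Fashion MNIST dataset. That the practice problem keeps the same numbering is an assumption, so do check it against a few training images before relying on it.

```python
# Class names from the original Fashion MNIST release.
# ASSUMPTION: the practice-problem labels follow the same numbering.
FASHION_MNIST_LABELS = {
    0: "T-shirt/top",
    1: "Trouser",
    2: "Pullover",
    3: "Dress",
    4: "Coat",
    5: "Sandal",
    6: "Shirt",
    7: "Sneaker",
    8: "Bag",
    9: "Ankle boot",
}


def label_name(label):
    """Translate an integer class label into its apparel name."""
    return FASHION_MNIST_LABELS[int(label)]


decoded = [label_name(label) for label in [9, 0, 1]]
print(decoded)
```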

We will build our model on Google Colab since it provides a free GPU to train our models.

Steps to Build Our Model

Time to fire up your Python skills and get your hands dirty. We are finally at the implementation part of our learning!

- Setting up Google Colab

- Importing Libraries

- Loading and Preprocessing Data – (3 mins)

- Creating a validation set

- Defining the model structure – (1 min)

- Training the model – (5 min)

- Making predictions – (1 min)

Let’s look at each step in detail.

Steps to Build Image Classification

Step 1: Setting up a Google Colab

Since we’re importing our data from a Google Drive link, we’ll need to add a few lines of code in our Google Colab notebook. Create a new Python 3 notebook and write the following code blocks:

!pip install PyDrive

This will install PyDrive. Now we will import a few required libraries:

import os

from pydrive.auth import GoogleAuth

from pydrive.drive import GoogleDrive

from google.colab import auth

from oauth2client.client import GoogleCredentials

Next, we will create a drive variable to access Google Drive:

auth.authenticate_user()

gauth = GoogleAuth()

gauth.credentials = GoogleCredentials.get_application_default()

drive = GoogleDrive(gauth)

To download the dataset, we will use the ID of the file uploaded on Google Drive:

download = drive.CreateFile({'id': '1BZOv422XJvxFUnGh-0xVeSvgFgqVY45q'})

Replace the ‘id’ in the above code with the ID of your file. Now we will download this file and unzip it:

download.GetContentFile('train_LbELtWX.zip')

!unzip train_LbELtWX.zip

You have to run these code blocks every time you start your notebook.
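If you upload your own copy of the data, the file ID is the part of the Google Drive share link that sits between `/file/d/` and `/view`. A small sketch for pulling it out with a regular expression is shown below; the helper name `extract_drive_file_id` is made up, and the link simply wraps the ID used above in the usual share-link format.

```python
import re


def extract_drive_file_id(share_link):
    """Return the file ID embedded in a Google Drive 'file/d/<id>/view' link."""
    match = re.search(r"/file/d/([A-Za-z0-9_-]+)", share_link)
    if match is None:
        raise ValueError("No Drive file ID found in: " + share_link)
    return match.group(1)


link = "https://drive.google.com/file/d/1BZOv422XJvxFUnGh-0xVeSvgFgqVY45q/view?usp=sharing"
file_id = extract_drive_file_id(link)
print(file_id)
```

The returned string is what goes into the `'id'` field of `drive.CreateFile`.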

Step 2: Import the libraries we’ll need during our model building phase.

import keras

from keras.models import Sequential

from keras.layers import Dense, Dropout, Flatten

from keras.layers import Conv2D, MaxPooling2D

from keras.utils import to_categorical

from keras.preprocessing import image

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from tqdm import tqdm

Step 3: Recall the pre-processing steps we discussed earlier. We’ll be using them here after loading the data.

train = pd.read_csv('train.csv')

Next, we will read all the training images, store them in a list, and finally convert that list into a numpy array.

# We have grayscale images, so we load them with color_mode='grayscale'; if you have RGB images, use color_mode='rgb' (the default)

# The older grayscale=True argument is deprecated in favor of color_mode

train_image = []

for i in tqdm(range(train.shape[0])):

    # target_size is (height, width); the channel count comes from color_mode

    img = image.load_img('train/'+train['id'][i].astype('str')+'.png', target_size=(28,28), color_mode='grayscale')

    img = image.img_to_array(img)

    # scale pixel values from the 0-255 range to the 0-1 range

    img = img/255

    train_image.append(img)

X = np.array(train_image)

As it is a multi-class classification problem (10 classes), we will one-hot encode the target variable.
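To see what one-hot encoding does to the labels, here is a NumPy-only sketch. It is an illustration of the idea, not the Keras implementation; the function name `one_hot` is made up.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Turn integer labels into rows with a single 1 at the label's index."""
    labels = np.asarray(labels, dtype=int)
    encoded = np.zeros((labels.size, num_classes), dtype=np.float32)
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded


encoded = one_hot([0, 9, 3], num_classes=10)
print(encoded)
```

Each row has exactly one 1, in the column of its class, which is the target format the softmax output layer and the categorical cross-entropy loss expect.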

y=train['label'].values

y = to_categorical(y)

Step 4: Creating a validation set from the training data.

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42, test_size=0.2)

Step 5: Define the model structure.

We will create a simple architecture with 2 convolutional layers, one dense hidden layer and an output layer.

model = Sequential()

model.add(Conv2D(32, kernel_size=(3, 3),activation='relu',input_shape=(28,28,1)))

model.add(Conv2D(64, (3, 3), activation='relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(128, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(10, activation='softmax'))

Next, we will compile the model we’ve created.

model.compile(loss='categorical_crossentropy',optimizer='Adam',metrics=['accuracy'])

Step 6: Training the model.

In this step, we will train the model on the training set images and validate it using, you guessed it, the validation set.

model.fit(X_train, y_train, epochs=10, validation_data=(X_test, y_test))

Step 7: Making predictions!

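We’ll initially follow the steps we performed when dealing with the training data. Load the test images and predict their classes with the trained model. The model.predict_classes() function does this in one call on older Keras versions; recent releases have removed it, and taking the argmax of model.predict() gives the same result.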
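Turning the model’s softmax outputs into class labels is just an argmax over each row. The sketch below shows this with a small, made-up probability matrix standing in for the output of `model.predict()`.

```python
import numpy as np

# Made-up softmax outputs for three images and three classes;
# in practice this array would come from model.predict(test).
probabilities = np.array([
    [0.05, 0.90, 0.05],
    [0.70, 0.20, 0.10],
    [0.10, 0.30, 0.60],
])

# The predicted class is the index of the largest probability in each row.
predicted_classes = np.argmax(probabilities, axis=1)
print(predicted_classes)
```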

download = drive.CreateFile({'id': '1KuyWGFEpj7Fr2DgBsW8qsWvjqEzfoJBY'})

download.GetContentFile('test_ScVgIM0.zip')

!unzip test_ScVgIM0.zip

Let’s import the test file:

test = pd.read_csv('test.csv')

Now, we will read and store all the test images:

test_image = []

for i in tqdm(range(test.shape[0])):

    # same loading and scaling as for the training images

    img = image.load_img('test/'+test['id'][i].astype('str')+'.png', target_size=(28,28), color_mode='grayscale')

    img = image.img_to_array(img)

    img = img/255

    test_image.append(img)

test = np.array(test_image)

# making predictions

# predict_classes() has been removed from recent Keras releases; argmax over the softmax outputs is equivalent

prediction = np.argmax(model.predict(test), axis=1)

We will also create a submission file to upload on the DataHack platform page (to see how our results fare on the leaderboard).

download = drive.CreateFile({'id': '1z4QXy7WravpSj-S4Cs9Fk8ZNaX-qh5HF'})

download.GetContentFile('sample_submission_I5njJSF.csv')

# creating submission file

sample = pd.read_csv('sample_submission_I5njJSF.csv')

sample['label'] = prediction

sample.to_csv('sample_cnn.csv', header=True, index=False)

Download this sample_cnn.csv file and upload it on the contest page to generate your results and check your ranking on the leaderboard. This will give you a benchmark solution to get you started with any Image Classification problem!
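Before uploading, a quick sanity check of the submission file can save a wasted submission. The sketch below counts the rows and flags any label that is not an integer from 0 to 9. The function name `check_submission` and the demo file contents are made up; the `label` column name follows the code above.

```python
import csv
import os
import tempfile


def check_submission(csv_path, num_classes=10, label_column="label"):
    """Return (row_count, bad_rows) where bad_rows lists 1-based row numbers."""
    row_count = 0
    bad_rows = []
    with open(csv_path, newline="") as handle:
        for row_number, row in enumerate(csv.DictReader(handle), start=1):
            row_count += 1
            try:
                label = int(row[label_column])
            except ValueError:
                bad_rows.append(row_number)
                continue
            if not 0 <= label < num_classes:
                bad_rows.append(row_number)
    return row_count, bad_rows


# Demo file with one deliberately invalid label (12) in the third row.
demo_path = os.path.join(tempfile.mkdtemp(), "sample_cnn.csv")
with open(demo_path, "w", newline="") as handle:
    writer = csv.writer(handle)
    writer.writerow(["id", "label"])
    writer.writerows([[60001, 9], [60002, 2], [60003, 12]])

row_count, bad_rows = check_submission(demo_path)
print(row_count, bad_rows)
```

For the real file, the row count should match the number of test images and the list of bad rows should be empty.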

You can try hyperparameter tuning and regularization techniques to improve your model’s performance further. I encourage you to check out this article to understand this fine-tuning step in much more detail – ‘A Comprehensive Tutorial to learn Convolutional Neural Networks from Scratch’.

How to build Image Classification Model?

- Install Libraries:

Install TensorFlow, NumPy, and Matplotlib.

pip install tensorflow numpy matplotlib

- Prepare Data:

Collect labeled images, split into training and testing sets.

- Build Model:

Create a Convolutional Neural Network (CNN) using Keras.

from tensorflow.keras import layers, models

model = models.Sequential()

# … (add convolutional and dense layers)

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])

- Train Model:

Train the model using your training data.

model.fit(train_images, train_labels, epochs=num_epochs, validation_data=(val_images, val_labels))

- Evaluate and Predict:

Evaluate the model on the test set and make predictions.

test_loss, test_acc = model.evaluate(test_images, test_labels)

predictions = model.predict(test_images)

- Optional: Data Augmentation and Fine-Tuning:

Use data augmentation for variety, and fine-tune as needed.

Optional: Data Augmentation

datagen = ImageDataGenerator(…)

model.fit(datagen.flow(training_data, training_labels, batch_size=batch_size), epochs=num_epochs)

Optional: Fine-Tuning

Adjust hyperparameters or architecture based on performance.
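To show what data augmentation does to a batch, here is a NumPy-only sketch that randomly flips images left to right and shifts them sideways by a couple of pixels. It is a simplified stand-in for illustration, not how ImageDataGenerator is implemented; the function name `augment_batch` and the `max_shift` parameter are assumptions.

```python
import numpy as np


def augment_batch(images, rng, max_shift=2):
    """Randomly flip and horizontally shift a batch shaped (n, height, width, channels)."""
    augmented = np.empty_like(images)
    for i, img in enumerate(images):
        if rng.random() < 0.5:
            img = img[:, ::-1, :]  # horizontal flip
        shift = int(rng.integers(-max_shift, max_shift + 1))
        # np.roll wraps pixels around the edge, which is harmless when
        # the image border is empty background, as in Fashion MNIST.
        augmented[i] = np.roll(img, shift, axis=1)
    return augmented


rng = np.random.default_rng(0)
batch = rng.random((4, 28, 28, 1)).astype(np.float32)
original = batch.copy()

augmented = augment_batch(batch, rng)
print(augmented.shape)
```

Flips suit apparel images, but they are not label-preserving for every dataset (a mirrored digit is often no longer a valid digit), so pick transformations that fit your data.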

New Practice Problem

Let’s test our learning on a different dataset. We’ll be cracking the ‘Identify the Digits’ practice problem in this section. Go ahead and download the dataset. Before you proceed further, try to solve this on your own. You already have the tools to solve it – you just need to apply them! Come back here to check your results or if you get stuck at some point.

In this challenge, we need to identify the digit in a given image. We have a total of 70,000 images – 49,000 labelled ones in the training set and the remaining 21,000 in the test set (the test images are unlabelled). We need to identify/predict the class of these unlabelled images.

Ready to begin? Awesome! Create a new Python 3 notebook and run the following code:

# Setting up Colab

!pip install PyDrive

import os

from pydrive.auth import GoogleAuth

from pydrive.drive import GoogleDrive

from google.colab import auth

from oauth2client.client import GoogleCredentials

auth.authenticate_user()

gauth = GoogleAuth()

gauth.credentials = GoogleCredentials.get_application_default()

drive = GoogleDrive(gauth)

# Replace the id and filename in the below codes

download = drive.CreateFile({'id': '1ZCzHDAfwgLdQke_GNnHp_4OheRRtNPs-'})

download.GetContentFile('Train_UQcUa52.zip')

!unzip Train_UQcUa52.zip

# Importing libraries

import keras

from keras.models import Sequential

from keras.layers import Dense, Dropout, Flatten

from keras.layers import Conv2D, MaxPooling2D

from keras.utils import to_categorical

from keras.preprocessing import image

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from tqdm import tqdm

train = pd.read_csv('train.csv')

# Reading the training images

train_image = []

for i in tqdm(range(train.shape[0])):

    # color_mode replaces the deprecated grayscale=True; target_size is (height, width)

    img = image.load_img('Images/train/'+train['filename'][i], target_size=(28,28), color_mode='grayscale')

    img = image.img_to_array(img)

    img = img/255

    train_image.append(img)

X = np.array(train_image)

# Creating the target variable

y=train['label'].values

y = to_categorical(y)

# Creating validation set

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42, test_size=0.2)

# Define the model structure

model = Sequential()

model.add(Conv2D(32, kernel_size=(3, 3),activation='relu',input_shape=(28,28,1)))

model.add(Conv2D(64, (3, 3), activation='relu'))

model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Dropout(0.25))

model.add(Flatten())

model.add(Dense(128, activation='relu'))

model.add(Dropout(0.5))

model.add(Dense(10, activation='softmax'))

# Compile the model

model.compile(loss='categorical_crossentropy',optimizer='Adam',metrics=['accuracy'])

# Training the model

model.fit(X_train, y_train, epochs=10, validation_data=(X_test, y_test))

download = drive.CreateFile({'id': '1zHJR6yiI06ao-UAh_LXZQRIOzBO3sNDq'})

download.GetContentFile('Test_fCbTej3.csv')

test_file = pd.read_csv('Test_fCbTej3.csv')

test_image = []

for i in tqdm(range(test_file.shape[0])):

    img = image.load_img('Images/test/'+test_file['filename'][i], target_size=(28,28), color_mode='grayscale')

    img = image.img_to_array(img)

    img = img/255

    test_image.append(img)

test = np.array(test_image)

# predict_classes() has been removed from recent Keras releases; argmax over the softmax outputs is equivalent

prediction = np.argmax(model.predict(test), axis=1)

download = drive.CreateFile({'id': '1nRz5bD7ReGrdinpdFcHVIEyjqtPGPyHx'})

download.GetContentFile('Sample_Submission_lxuyBuB.csv')

sample = pd.read_csv('Sample_Submission_lxuyBuB.csv')

sample['filename'] = test_file['filename']

sample['label'] = prediction

sample.to_csv('sample.csv', header=True, index=False)

Submit this file on the practice problem page to get a pretty decent accuracy number. It’s a good start but there’s always scope for improvement. Keep playing around with the hyperparameter values and see if you can improve on our basic model.

Conclusion

Who said image classification models required hours or days to train? My aim here was to showcase that you can come up with a pretty decent image classification model in double-quick time. You should pick up similar challenges and try to code them from your end as well. There’s nothing like learning by doing!

The top data scientists and analysts have these codes ready before a Hackathon even begins. They use these codes to make early submissions before diving into a detailed analysis. Once they have a benchmark solution, they start improving their model using different techniques.

Frequently Asked Questions

A. Image classification is how a model classifies an image into a certain category based on pre-defined features or characteristics.

A. The most popular image classification model is Convolutional Neural Network (CNN).

Different types of image classification include binary classification (dividing images into two categories), multi-class classification (dividing images into more than two categories), and object detection (identifying and locating multiple objects within an image).

CNN (Convolutional Neural Network) models commonly used for image classification include AlexNet, VGGNet, ResNet, Inception, and MobileNet.

The best model for image classification depends on various factors such as the specific task, the size and nature of the dataset, computational resources, and performance requirements. Generally, deeper and more complex models like ResNet and Inception tend to perform well, but the “best” model can vary based on the context of the problem.

Hi Pulkit, This is a great article and timely as far as I am concerned. Off late, I have been trying to get some guidance on how to beat the computational power issue when building models on huge datasets. Using google as mentioned in your article is exactly the concept I was wanting to get some guidance on. And not just for Deep Learning models, this will be handy for other typical ML model exercises like RF, SVM and even text mining where after creating the DTM, data size explodes. I have faced difficulties in ensuring the model training completion because my laptop memory can be just as much. However I have been a R practitioner and not quite gone into Python so much as yet. Would it possible to give the exact same codes in R. If yes, it will be very helpful.

Hi Pranov, Glad to hear that you found it helpful! Regarding the codes in R, I don't have much knowledge about R but I will look for the codes in R and will share resources with you.

Hi Pranov, same here. I also use R pretty often. I am not sure but I found that Keras has also support for R, but I never tried.

Hi, I have tried with the above mentioned code. I am getting an error for downloading the test data set. While downloading training data there was no error and model got trained well. However, while dowloading test data it is giving me an error "data not found". It may because of wrong file ID. Please mention how to find a correct file ID to download the testing data set?

Hi Charanteja, You have to upload the test file on your drive and from there you will get the ID for that file. Paste that ID in the code and it should work.

from google.colab import files file = files.upload() #upload the test zip !unzip test_ScVgIM0.zip

Hi Pulkit, Thanks for the great article, it is very helpful. When I am trying to run this line: from google.colab import auth, I get this error: No module named 'google.colab'

Hi Nouman, You should run these codes in google colab instead of using your own system. The codes are designed to run on colab which provides free GPU to run your model. So, use google colab for training your model.

Hi, Great article. I have never worked with Google Colab. You replied to Nouman above to run the codes in Google Colab. Is there a tutorial for it, or do you have any instructions I can follow? Thanks, Srikar

Hi Srikar, You can refer to this tutorial.

Hi Pulkit, good article. I learnt a new thing today ie Google Colab. Can you please elaborate it further? I often find RAM issues on my laptop. Is Google Colab helpful here?

Hi Harish, Yes! You get free access to RAM as well as GPU on google colab.

Hello, thank you for your course. I am a beginner but I love data mining. I am blocked here: "To download the dataset, we will use the ID of the file uploaded on Google Drive: download = drive.CreateFile({'id': '1BZOv422XJvxFUnGh-0xVeSvgFgqVY45q'})". Which ID are you speaking about? I have not uploaded a file to Google Drive. What can I do to continue? Thanks.

Hi, You first have to upload the file on your google drive and then from sharing option, you can get the unique ID for that file.

to HERVESIYOU: I suppose you can use the code above without modifications - in this case you will be using dataset arranged by Pulkit. Once you want you use your own dataset you need to upload your own file on your google drive and then follow by Pulkit's instructions (get uniq id of your file and replace the id above with your own). hope that clarifies ;) PS. Do not forget turn on GPU for your Colab Notebook !

For those having trouble with uploading test file, download the test file from this link after signing up: https://datahack.analyticsvidhya.com/contest/practice-problem-identify-the-apparels/ Download the test file to your computer Upload it on your Google Drive and right click on the file > click share > click copy link Replace ID in drive.createfile with shareable link and delete "https://drive.google.com/file/d/" and "/view?usp=sharing" The part in the middle of the above two strings are your unique file ID

Thank you Apu for this information. It will surely be helpful for others.

Thank!!

Thanks alot!!

Thanks!

how to download the sample_cnn.csv file? can you mention command for that and process for that

Hi Hari, sample_cnn.csv will be saved in your directory, you can download it directly from there.

Hi I'm trying to run this code on my local machine but am getting the following error: FileNotFoundError: [Errno 2] No such file or directory: 'train/1.png' The following was the path used : train = pd.read_csv('E:/PGP_DS_2018/DataSets/Identify Apparels/train/train.csv') train_image =[] for i in tqdm(range(train.shape[0])): img = image.load_img('train/'+train['id'][i].astype('str') +'.png',target_size=(28,28,1),grayscale= True) img = image.img_to_array(img) img = img/255 train_image.append(img) X = np.array(train_image) Any help with the above will highly be appreciated!

Hi Kinshuk, You have to give the entire path in "img = image.load_img(‘train/’+train[‘id’][i].astype(‘str’)" this line as well just like you have given while reading the csv file.

Hi Pulkit, Thanks for this extremely helpful guide. Can i check if i were to use images with color and, i have to set the grayscale=False right? I got an error like this when i set grayscale=False. ValueError: Error when checking input: expected conv2d_1_input to have shape (28, 28, 1) but got array with shape (28, 28, 3) If i were to change the target_size=(28,28,3), will it fix the problem? Also, where does the value 28 come from? Is it dependent on the size of the image? Cause i am not sure my image is of size dimension 28.

Hi, The example which I have used here has images of size (28,28,1). These images were gray scale and hence only 1 channel. If you have RGB image, i.e. 3 channels, you can remove the grayscale parameter while reading the images and it will automatically read the 3 channeled images.

"contest page to generate your results and check your ranking on the leaderboard" i cannot understand meaning of the above sentence. Kindly brief it out thanks in advance

Hi Jawahar, You can submit the predictions that you get from the model on the competition page and check how well you perform on the test data. You can also check your rank on the leaderboard and get an idea how well you are performing.

Hi all, I want to make face detection model using edge detection what should i do step by step?

When I try to get the test data with: "download = drive.CreateFile({'id': '1KuyWGFEpj7Fr2DgBsW8qsWvjqEzfoJBY'}) download.GetContentFile('test_ScVgIM0.zip') !unzip test_ScVgIM0.zip" I get: ApiRequestError: Does the file no longer exists ? or has the id/path been modified ?

Hi Rahul, You have to upload your own file to your google drive and then replace this id in this code with the id of your file.

Hi Pulkit, I am trying to use the test data code but getting an error every time I do that. It says FileNotFoundError: [Errno 2] No such file or directory: 'test/60001.png' I tried for the train data. It got trained well. But, the problem exists for the test file. All the train and test file are in the same folder. I am using local machine. Can you help please ?

Hi Saikat, If both the train and test images are in same folder, you have to change the path of test image accordingly. Suppose the image 60001.png is in train folder, then you have to pass train/60001.png to read that image and same will apply to other images as well.

I am getting this error when I try it with my own set of images(60 training data) and no. of classes=3. How do I fix this? I tried to change the output layer's value to 4 because there are 3 classes but that just freezes the system. Error: Error when checking target: expected dense_2 to have shape (10,) but got array with shape (4,)

Hi Sowmya, As you have 3 classes to predict, the number of neurons in the output layer will be 3 and not 4. So, in the below code:

model = Sequential() model.add(Conv2D(32, kernel_size=(3, 3),activation='relu',input_shape=(28,28,1))) model.add(Conv2D(64, (3, 3), activation='relu')) model.add(MaxPooling2D(pool_size=(2, 2))) model.add(Dropout(0.25)) model.add(Flatten()) model.add(Dense(128, activation='relu')) model.add(Dropout(0.5)) model.add(Dense(10, activation='softmax'))

You have to change the neurons in the last layer from 10 to 3. Once you have done that, compile the model again and then fit it on your training images. This will give you the results.

Hello, First of all thanks a lot for your effort for that amazing instructions i’m asking where can i show your training data (id = 1BZOv422XJvxFUnGh-0xVeSvgFgqVY45q') after i have downloaded it ?

hi, I am gettimg a No module named colab error when I run the second block of code. Am I supposed to import sometimg else to be able acces colab?

Hi Rodolfo, If you are using these codes on google colab, then you have to import colab. Otherwise, if you are using your own machine, it is not required to import colab.

Hi Pulkit, I have trained the neural net successfully yet, when I run: download = drive.CreateFile({'id': '1zHJR6yiI06ao-UAh_LXZQRIOzBO3sNDq'}) download.GetContentFile('Test_fCbTej3.csv') I get a file not found error. I can see other people have had the same problem and you have explained it. but please explain to me how to fix this as if I was 6 years old, although I am 71 but I am a bit slow in comprehending. Thank you for your patience.

Hi, Will thhis work on Windows powered computer? or just in Ubuntu? I'm using Windows. Thank you.

Hi, It will work in Windows operating system as well.

Greetings Pulkit, really appreciate the article. Just one doubt,For the submission file, I'm getting a file not found error. Am I supposed to give a different ID here too, but I'm creating a new file for submission so which ID am I supposed to use here?

Hi, Can you tell me which image formats (like .jpg, img, JPEG 2000, Exif, TIFF, GIF, BMP, PNG) can be used in classification models? Can I use images from my desktop, or should they only come from the cloud?

Hi Srinivasan, If you have trained a model locally, then you can use the image from your desktop. But if you have trained the model on Google Colab, then you first have to upload the image to Colab and then use the model to make predictions.

Hi Pulkit, Thanks for sharing the valuable information about machine learning! I am getting this error message when I try to run the code in Colab:

model.compile(loss='categorical_crossentropy',optimizer='Adam',metrics=['accuracy'])
^
SyntaxError: invalid syntax

Hi Pulkit! Thanks for sharing this valuable info on image classification. I encountered an error while running the code in Colab. Can you please help to resolve it? The error is:

File "", line 63
model.compile(loss='categorical_crossentropy',optimizer='Adam',metrics=['accuracy'])
^
SyntaxError: invalid syntax

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42, test_size=0.2)

I am getting a memory error at this step. I tried changing the random_state value to 21, 10, 1, 0, etc., but I am still getting the error.

Hi Pulkit, I am facing a problem with the following line:

img = image.load_img('test1/'+str(index)+'.jpg', target_size=(150,150,1), grayscale=True)

OUTPUT:

FileNotFoundError Traceback (most recent call last)
in ()
4 import numpy as np
5 for index in range(10):
----> 6 img = image.load_img('test1/'+str(index)+'.jpg', target_size=(150,150,1), grayscale=True)
7 im2arr = np.array(img)
8 im2arr = im2arr.reshape(1,150,150,1)

1 frames
/usr/local/lib/python3.6/dist-packages/PIL/Image.py in open(fp, mode)
2528
2529 if filename:
-> 2530 fp = builtins.open(filename, "rb")
2531 exclusive_fp = True
2532

FileNotFoundError: [Errno 2] No such file or directory: 'test1/0.jpg'

I uploaded the test file as well and was able to unzip it, but I am not able to load the image with that path. Please help me out with this problem.

If I have a labeled test set, how can I measure my prediction performance?

Hi, If you have a labeled test set, i.e. you know the actual class for each image in the test set, then you can first use the trained model to make predictions for the test images and then compare the predicted classes with the actual classes, i.e. the labels that you have for the test set. You can use multiple evaluation metrics like accuracy, precision, or recall to compare your predicted values with the actual labels.
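The comparison described above can be sketched in plain Python. This is an illustrative sketch rather than code from the article: the `evaluate` helper is hypothetical, and the `y_true` and `y_pred` lists are made-up labels standing in for the actual test labels and the model's predicted classes.

```python
def evaluate(y_true, y_pred):
    """Return overall accuracy plus per-class precision and recall."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")

    # Accuracy: fraction of images whose predicted class matches the label
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    accuracy = correct / len(y_true)

    per_class = {}
    for c in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        predicted = sum(p == c for p in y_pred)  # images predicted as class c
        actual = sum(t == c for t in y_true)     # images that really are class c
        per_class[c] = {
            "precision": tp / predicted if predicted else 0.0,
            "recall": tp / actual if actual else 0.0,
        }
    return accuracy, per_class


# Made-up labels, for illustration only
y_true = [0, 1, 2, 2, 1, 0]
y_pred = [0, 2, 2, 2, 1, 0]

accuracy, per_class = evaluate(y_true, y_pred)
print("accuracy:", accuracy)
for c, scores in per_class.items():
    print("class", c, scores)
```

If scikit-learn is installed, `accuracy_score`, `precision_score` and `recall_score` from `sklearn.metrics` compute the same quantities (the latter two take an `average` argument when there are more than two classes).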

I am working on image classification using an ANN, but as a beginner I don't have any knowledge of machine learning. Can you help me by making tutorials or step-by-step notes, so that I can classify my images according to my classes?

Hi, You can follow the steps mentioned in this article to build your image classification model.

Hi, I've tried your code and find this article very helpful. When I trained on 2 classes, the model could predict those two classes. However, is it possible that, if I feed it a 'never seen' image, it gives me an unknown class instead of one of the two trained classes?

I am facing a problem in downloading the data set to Google Drive. It would be really helpful if you could share the link to the required data. I am a beginner.

Hi! I got a job thanks to this tutorial! Thank you very much! Do you have books published?

Hi, Where is the prediction csv file stored? I cannot really find the final file to submit. Thanks,

Hi Sina, It will be stored in the same folder where your current Jupyter notebook is.
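To check the exact location, a relative file name can be resolved against the current working directory. This is a small sketch under the assumption that the notebook's working directory is the notebook's own folder (the usual default, but not guaranteed); 'sample_cnn.csv' is the output file name used elsewhere in this thread.

```python
from pathlib import Path

# Output file name used in the submission snippet in this thread
output_file = Path("sample_cnn.csv")

# A relative name is resolved against the current working directory
print("Working directory:", Path.cwd())
print("File will be written to:", output_file.resolve())
```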

Some of the code generates deprecation warnings. I can deal with it, but it would be nice to make the tutorial current.

Thank you for the suggestion Steve! Will surely work on that.

Can you please share the download links for the train and test datasets? They are no longer available on the website after signup.

Hi Meet, Here is the link to the problem page: https://datahack.analyticsvidhya.com/contest/practice-problem-identify-the-apparels/ Go to the link and register for the problem, and then you can download the dataset from the Data section.

I'm having trouble with the CSV Line, or train = pd.read_csv('train.csv'). I had watched other videos for image classification, that used datasets WITH labeled images in categories, but WITHOUT numerical data. How do I go about creating an image classification system now?

Hi Sakti, The csv file provided to you only contains the names of all the images and the class to which each one belongs. This file does not contain any more information about the images. To extract the features from the images, you have to use the actual images provided to you. The .csv file is basically provided so that you can map the images to their corresponding classes.
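The mapping described above can be sketched as follows. This is an illustration under stated assumptions, not the article's code: the `id` and `label` column names, the `train` folder, the `.png` extension and the three sample rows are all assumed for the example, and `load_label_map` is a hypothetical helper.

```python
import csv
import io

# Stand-in for train.csv; column names and rows are assumptions for illustration
csv_text = "id,label\n1,9\n2,0\n3,3\n"


def load_label_map(csv_file, image_dir="train", extension=".png"):
    """Map each image path to its class label using the rows of the CSV."""
    mapping = {}
    for row in csv.DictReader(csv_file):
        image_path = f"{image_dir}/{row['id']}{extension}"
        mapping[image_path] = int(row["label"])
    return mapping


label_map = load_label_map(io.StringIO(csv_text))
for path, label in label_map.items():
    print(path, "->", label)
```

With a real file, the `io.StringIO` stand-in would be replaced by an opened file object, and each path in the mapping would then be loaded as an image to extract the pixel features.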

Hello Pulkit Sharma, Wonderful article. I have one doubt. When I run this code in Google Colab, I get this error. Please help me to resolve it.

Code:

download.GetContentFile('/content/drive/My Drive/Colab Notebooks/ipynb/fashion-mnist-master.zip')
!unzip 'fashion-mnist-master.zip'

Error:

FileNotDownloadableError Traceback (most recent call last)
in ()
----> 1 download.GetContentFile('/content/drive/My Drive/Colab Notebooks/ipynb/fashion-mnist-master.zip')
2 get_ipython().system("unzip 'fashion-mnist-master.zip'")

2 frames
/usr/local/lib/python3.6/dist-packages/pydrive/files.py in FetchContent(self, mimetype, remove_bom)
263 else:
264 raise FileNotDownloadableError(
--> 265 'No downloadLink/exportLinks for mimetype found in metadata')
266
267 if mimetype == 'text/plain' and remove_bom:

FileNotDownloadableError: No downloadLink/exportLinks for mimetype found in metadata

Thanks in advance

Hi Pulkit, I wanted to use annotated labels like x y coordinates (x1,y1,x2,y2) for my region of interest along with class labels. Can I do this following the discussed approach? Thanks, Ajay

Hi Ajay, This seems to be an object detection problem. Instead of approaching it as an image classification problem, you can try to use some object detection techniques.

Hi Pulkit, Great article, thanks. You mention that this code uses GPU provided by Colab Notebook. If I want to modify this code to run on premises - what is minimum GPU specs recommended? E.g. If I run it on a laptop - should it be a gaming laptop?

Hi, Having a higher configuration will speed up the process. If you have low specifications, you can still train the model, but the training time will be much longer.

I have a doubt about the last step of creating the sample submission file. Can you please tell me how to create it in the drive?

Hi Vinoth, First of all, read the sample submission file, which you will find on the competition page (link is provided in the article). Replace the labels in this file with the predictions that you have got from the model, and finally save the csv file using the to_csv() function. The following code will help you to do that:

# creating submission file
sample = pd.read_csv('sample_submission_I5njJSF.csv')
sample['label'] = prediction
sample.to_csv('sample_cnn.csv', header=True, index=False)

This will save the file in Colab.