6 Exciting Open Source Data Science Projects you Should Start Working on Today

Overview

- Here are six open-source data science projects to enhance your skillset

- These projects cover a diverse set of domains, from computer vision to natural language processing (NLP), among others

- Pick your favorite open-source data science project(s) and get coding!

Introduction

I recently helped out in a round of interviews for an open data scientist position. As you can imagine, there were candidates from all kinds of backgrounds – software engineering, learning and development, finance, marketing, etc.

What stood out for me was the amazing range of projects some of these folks had already done. They didn’t have a lot of industry experience in data science per se, but their passion and curiosity to learn new concepts drove them to previously unchartered land.

A common theme – open source data science projects. I have been espousing their value for the last couple of years now! Trust me, recruiters and hiring managers appreciate the extra mile you go to take up a project you haven’t seen before and work your socks off to deliver it.

That project can be from the domain you’re currently working in or the domain you want to go to. And there is no shortage of these projects.

Here, I present six such open-source data science projects in this article. I always try to keep a diverse portfolio when I’m making the shortlist – and this article is no different. You’ll find projects from computer vision to Natural Language Processing (NLP), among others.

This is the 10th edition of our monthly GitHub series. We’ve received an overwhelmingly positive response from our community ever since we started this in January 2018. In case you missed this year’s articles, you can check them out here:

Our Pick of 6 Open Source Data Science Projects on GitHub (October Edition)

Open Source Computer Vision Projects

The demand for computer vision experts is steadily increasing each year. It has established itself as an industry-leading domain (which is no surprise to anyone who follows the latest industry trends). There is a lot to do and a lot to learn as a data science professional.

Here are three useful open-source computer vision projects you’ll enjoy working on. And if you’re new to this burgeoning field, I suggest checking out the below popular course:

Few-Shot vid2vid by NVIDIA

I came across the concept of video-to-video (vid2vid) synthesis last year and was blown away by its effectiveness. vid2vid essentially converts a semantic input video to an ultra-realistic output video. This idea has come a long way since then.

But there are currently two primary limitations with these vid2vid models:

- They require humongous amounts of training data

- These models struggle to generalize beyond the training data

That’s where NVIDIA’s Few-Shot viv2vid framework comes in. As the creator state, we can use it for “generating human motions from poses, synthesizing people talking from edge maps, or turning semantic label maps into photo-realistic videos.

This GitHub repository is a PyTorch implementation of Few-Shot vid2vid. You can check out the full research paper here (it was also presented at NeurIPS 2019).

Here’s a video shared by the developers demonstrating Few-Shot vid2vid in action:

Here’s the perfect article to start learning about how you can design your own video classification model:

Ultra-Light and Fast Face Detector

This is a phenomenal open-source release. Don’t be put off by the Chinese page (you can easily translate it into English). This is an ultra-light version of a face detection model – a really useful application of computer vision.

The size of this face detection model is just 1MB! I honestly had to read that a few times to believe it.

This model is a lightweight face detection model for edge computing devices based on the libfacedetection architecture. There are two versions of the model:

- Version-slim (slightly faster simplification)

- Version-RFB (with the modified RFB module, higher precision)

This is a great repository to get your hands on. We don’t typically get such a brilliant opportunity to build computer vision models on our local machine – let’s not miss this one.

If you’re new to the world of face detection and computer vision, I recommend checking out the below articles:

- A Simple Introduction to Facial Recognition (with Python code)

- Building a Face Detection Model from Video using Deep Learning (Python Implementation)

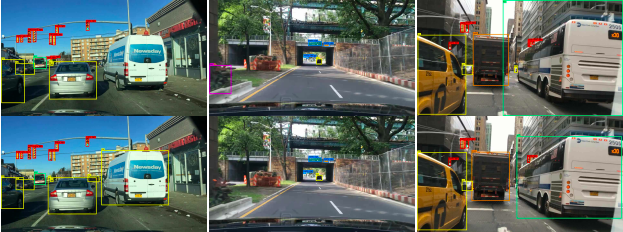

Gaussian YOLOv3: An Accurate and Fast Object Detector for Autonomous Driving

I’m a huge fan of self-driving cars. But progress has been slow due to a variety of reasons (architecture, public policy, acceptance among the community, etc.). So it’s always heartening to see any framework or algorithm that promises a better future for these autonomous cars.

Object detection algorithms are at the heart of these autonomous vehicles – I’m sure you already know that. And detecting objects at high accuracy with fast inference speed is vital to ensure safety. All this has been around for a few years now, so what differentiates this project?

The Gaussian YOLOv3 architecture improves the system’s detection accuracy and supports real-time operation (a critical aspect). Compared to a conventional YOLOv3, Gaussian YOLOv3 improves the mean average precision (mAP) by 3.09 and 3.5 on the KITTI and Berkeley deep drive (BDD) datasets, respectively.

Here are three in-depth, exhaustive and helpful articles to get you started with object detection and the YOLO framework in computer vision:

- A Step-by-Step Introduction to the Basic Object Detection Algorithms

- A Practical Guide to Object Detection using the Popular YOLO Framework (with Python code)

- A Friendly Introduction to Real-Time Object Detection using the Powerful SlimYOLOv3 Framework

Other Open Source Data Science Projects

This article isn’t just limited to computer vision! Like I mentioned in the introduction, I aim to cover the length and breadth of data science. So, here are three projects ranging from Natural Language Processing (NLP) to data visualization!

T5: Text-to-Text Transfer Transformer by Google Research

How could Google every stay out of a “latest breakthroughs” list? They have allocated vast amounts of money into machine learning, deep learning and reinforcement learning research and their results reflect that. I’m delighted they open-source their projects from time to time – there’s a lot to learn from them.

T5, short for Text-to-Text Transfer Transformer, is powered by the concept of transfer learning. In this latest NLP project, the developers behind T5 introduce a unified framework that converts every language problem into a text-to-text format.

This framework achieves state-of-the-art results on various benchmarks in the tasks of summarization, question answering, text classification, and more. They have open-sourced the dataset, pre-trained models, and the code behind T5 in this GitHub repository.

As the Google folks put it, “T5 can be used as a library for future model development by providing useful modules for training and fine-tuning (potentially huge) models on mixtures of text-to-text tasks.”

NLP is the hottest field right now, you really don’t want to miss out on these developments. Check out the below articles to learn more:

- How do Transformers Work in NLP? A Guide to the Latest State-of-the-Art Models

- Transfer Learning and the Art of using Pre-trained Models in Deep Learning

Largest Chinese Knowledge Map in History

I have come across a lot of articles on graphs recently. How they work, what are the different components of a graph, how knowledge flows in a graph, how does the concept apply to data science, etc. – these are questions I’m sure you’re asking right now.

There are certain offshoots of graph theory that we can apply in data science, such as knowledge trees and knowledge maps.

This project is a behemoth in that sense. It is the largest Chinese knowledge map in history, with over 140 million points! The dataset is organized in the form of (entity, attribute, value), (entity, relationship, entity). The data is in .csv format. It’s a wonderful open-source project to showcase your graph skills – don’t hesitate to dive right in.

If you’re wondering what graph theory and knowledge trees are, you can begin your journey here:

- An Introduction to Graph Theory and Network Analysis (with Python code)

- Knowledge Graph – A Powerful Data Science Technique to Mine Information from Text

RoughViz – An Awesome Data Visualization Library in JavaScript

I’m a huge fan of data visualization – that’s no secret. I’ve written multiple articles on the topic and I’m in the midst of creating a course on the topic (which you can check out here). So, I always jump at the chance to include a data visualization library or project in these articles.

RoughViz is one such JavaScript library to generate hand-drawn sketches or visualizations. It is based on D3v5, roughjs, and handy.

You can install roughViz on your machine using the below command:

npm install rough-viz

This GitHub repository contains detailed examples and code on how to use roughViz. Here are the different charts you can generate:

- Bar chart

- Horizontal Bar

- Donut chart

- Line chart

- Pie chart

- Scatter chart

Want to understand how JavaScript works in the data science space? Here is an intuitive article to get you on your way:

End Notes

I quite enjoyed putting this article together. I come across some really interesting data science projects, libraries, and frameworks along the way. It’s actually a great way to stay up to date with the latest developments in this field.

Which data science project was your favorite from this list? Or are there any other projects you came across that you feel the community should know about? Let me know in the comments section below!

And if you’re new to the world of data science, computer vision, or NLP, make sure you check out the below courses:

Nice article. Could you please elaborate the statement 'start working on projects'. Does that mean we have to replicate the work they have done?. Also, I'm interested to work on some deep learning projects in NLP. If AV has published similar articles on this topic i.e list of NLP projects, please point me to those articles.

Hi Santosh, You can always replicate the work these top researchers have done. It's a good way to understand how they came to the final result/model. But what will really help your learning is to play around with the code? Change a few parameters, see what results you get. Try to interpret those results - you will learn a whole lot of new things that way. We have published a ton of NLP articles. From the ULMFiT framework to Transformer, Google AI's BERT and OpenAI's GPT-2, our blog is up-to-date with the state-of-the-art in NLP. Check out all the articles here - https://www.analyticsvidhya.com/blog/category/nlp/.

Yes please elaborate about the projects from which a student can start

It's good to see new machine learning projects

Thanks, Shivam - glad you found it useful.

I quite enjoyed reading the article ;) Thanks for putting it together!

Thanks, Marcel!

I am a beginner in machine learning and i want to learn it and im highly motivated towards it. Do i have to take command over python and then start ML? Or just dive directly into it and learn python sideways?

Hi Rohan, A little bit of background in Python will definitely help you when you start learning how different algorithms work. But it honestly depends on the person studying. I personally learned Python along with ML because it kept me motivated to learn and put my learning into practice at the same time.

It's very nice

Can any of these projects be done in SAS or R language, Isn't there any scope for data science projects in SAS module or R module, am not from computer background an ex-banker and completed certificate course on data science with SAS andR, i feel like i reached nowhere and no scope not even a internship to start with for my career restart

Am new to Data Science, could you please provide me with resources that could helpful.