What if I told you that we could derive effective and impactful insights from our dataset in just a few lines of code? That’s the beauty of Python Pandas GroupBy function! I have lost count of the number of times I’ve relied on the Pandas GroupBy function to quickly summarize data and aggregate it in a way that’s easy to interpret

This helps not only when we’re working on a data science project and need quick results but also in hackathons! When time is of the essence (and when is it not?), the GroupBy function in Pandas, particularly the “pandas groupby” method, saves us a ton of effort by delivering super quick results in a matter of seconds. If you are familiar with groups in SQL, this article will be even easier for you to understand!

Loving GroupBy already? In this tutorial, I will first explain the GroupBy function using an intuitive example before picking up a real-world dataset and implementing GroupBy in Python. Let’s begin aggregating!

In this article you will get to know about the topics pandas groupby aggregate, groupby in pandas, groupby aggregate pandas with these topics you will clear your doubts and we are covering these in our article so lets dive in.

Learning Objectives

If you’re new to the world of Python and Pandas, you’ve come to the right place. Here are two popular free courses you should check out:

Pandas groupby operation is a powerful and versatile function in Python. It allows you to split your data into separate groups to perform computations for better analysis.

Let me take an example to elaborate on this. Let’s say we are trying to analyze the weight of a person in a city. We can easily get a fair idea of their weight by determining the mean weight of all the city dwellers. But here‘s a question – would the weight be affected by the gender of a person?

We can group the city dwellers into different gender groups and compute their mean weight. This would give us a better insight into the weight of a person living in the city. But we can probably get an even better picture if we further separate these gender groups into different age groups and then take their mean weight (because a teenage boy’s weight could differ from that of an adult male)!

You can see how separating people into separate groups and then applying a statistical value allows us to make better analyses than just looking at the statistical value of the entire population. This is what makes GroupBy so great!

GroupBy allows us to group our data based on different features and get a more accurate idea about your data. It is a one-stop shop for deriving deep insights from your data!

We will be working with the Big Mart Sales dataset from our DataHack platform. It contains attributes related to the products sold at various stores of BigMart. The aim is to find out the sales of each product at a particular store.

Right, let’s import the libraries and explore the data:

import pandas as pd

import numpy as np

df = pd.read_csv(‘train_v9rqX0R.csv’)We have some nan values in our dataset. These are mostly in the Item_Weight and Outlet_Size. I will handle the missing values for Outlet_Size right now, but we’ll handle the missing values for Item_Weight later in the article using the GroupBy function!

Let’s group the dataset based on the outlet location type using GroupBy, the syntax is simple we just have to use pandas dataframe.groupby:

df.groupby('Outlet_Location_Type')Output:

GroupBy has conveniently returned a DataFrameGroupBy object. It has split the data into separate groups. However, it won’t do anything unless it is being told explicitly to do so. So, let’s find the count of different outlet location types:

df.groupby('Outlet_Location_Type').count()

We did not tell GroupBy which column we wanted it to apply the aggregation function on, so we applied it to multiple columns (all the relevant columns) and returned the output.

But fortunately, GroupBy object supports column indexing just like a pandas Dataframe!

So let’s find out the total sales for each location type:

df.groupby('Outlet_Location_Type')['Item_Outlet_Sales']

Output:

Here, GroupBy has returned a SeriesGroupBy object. No computation will be done until we specify the agg function:

df.groupby('Outlet_Location_Type')['Item_Outlet_Sales'].sum()

Output:

Awesome! Now, let’s understand the work behind the GroupBy function in Pandas.

You just saw how quickly you can get an insight into grouped data using the Pandas GroupBy function. But, behind the scenes, a lot is taking place, which is important to understand to gauge the true power of GroupBy.

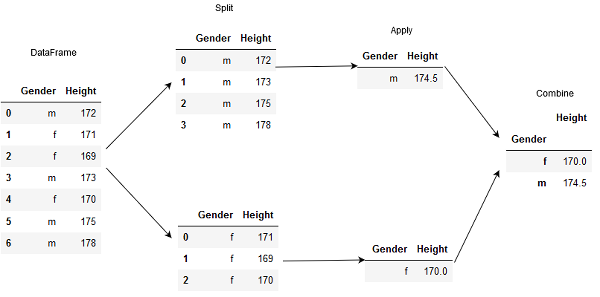

GroupBy employs the Split-Apply-Combine strategy coined by Hadley Wickham in his paper in 2011. Using this strategy, a data analyst can break down a big problem into manageable parts, perform operations on individual parts and combine them back together to answer a specific question.

I want to show you how this strategy works in GroupBy by working with a sample dataset to get the average height for males and females in a group. Let’s create that dataset:

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters:

data = {

'Gender': ['m', 'f', 'f', 'm', 'f', 'm', 'm'],

'Height': [172, 171, 169, 173, 170, 175, 178]

}

df_sample = pd.DataFrame(data)

df_sampleOutput:

Splitting the data into separate groups:

f_filter = df_sample['Gender']=='f'

print(df_sample[f_filter])

m_filter = df_sample['Gender']=='m'

print(df_sample[m_filter])Applying the operation that we need to perform (average in this case):

f_avg = df_sample[f_filter]['Height'].mean()

m_avg = df_sample[m_filter]['Height'].mean()

print(f_avg,m_avg)Output:

170.0 174.5Finally, combining the result to output a DataFrame:

df_output = pd.DataFrame({'Gender':['f','m'],'Height':[f_avg,m_avg]})

df_outputOutput:

All these three steps can be achieved by using GroupBy with just a single line of code! Here’s how:

df_sample.groupby('Gender').mean()

Output:

Now that is smart! Have a look at how GroupBy did that in the image below:

You can see how GroupBy simplifies our task by doing all the work behind the scenes without us having to worry about a thing!

Now that you understand the Split-Apply-Combine strategy let’s dive deeper into the GroupBy function and unlock its full potential.

Remember the GroupBy object we created at the beginning of this article? Don’t worry, we’ll create it again:

obj = df.groupby( 'Outlet_Location_Type' )

obj Output:

We can display the indices in each group by calling the groups on the GroupBy object:

obj.groupsOutput:

We can even iterate over all of the groups:

for name,group in obj:

print(name,'contains',group.shape[0],'rows')Output:

But what if you want to get a specific group out of all the groups? Well, don’t worry. Pandas has a solution for that too.

Just provide the specific group name when calling get_group on the group object. Here, I want to check out the features for the ‘Tier 1’ group of locations only:

obj.get_group('Tier 1')

Output:

Now isn’t that wonderful! You have the entire Tier 1 features to work with and derive wonderful insights! But wait, didn’t I say that GroupBy is lazy and doesn’t do anything unless explicitly specified? Alright then, let’s see GroupBy in action with the aggregate functions.

The apply step is unequivocally the most important step of a Pandas GroupBy function where we can perform a variety of operations using aggregation, transformation, filtration, or even with your own function!

Let’s have a look at these in detail.

We have looked at some aggregation functions in the article so far, such as mean, mode, and sum. These perform statistical operations on a set of data. Have a glance at all the aggregate functions in the Pandas package:

But the agg() function in Pandas gives us the flexibility to perform several statistical computations all at once! Here is how it works:

df.groupby('Outlet_Location_Type').agg([np.mean,np.median])Output:

We can even run GroupBy with multiple indexes to get better insights from our data

df.groupby(['Outlet_Location_Type', 'Outlet_Establishment_Year'], as_index=False).agg(

{'Outlet_Size': pd.Series.mode,

'Item_Outlet_Sales': np.mean

}

)

Notice that I have used different aggregation functions for different column names by passing them in a dictionary with the corresponding operation to be performed. This allowed me to group and apply computations on nominal and numeric features simultaneously.

Also, I have changed the value of the as_index parameter to False. This way, the grouped index would not be output as an index.

We can even rename the aggregated columns to improve their comprehensibility, and we get a multi-index dataframe:

df.groupby(['Outlet_Type', 'Item_Type']).agg(

mean_MRP=('Item_MRP', np.mean),

mean_Sales=('Item_Outlet_Sales', np.mean)

)

It is amazing how a name change can improve the understandability of the output!

Transformation allows us to perform some computation on the groups as a whole and then return the combined DataFrame. This is done using the transform() function.

We will try to compute the null values in the Item_Weight column using the transform() function.

The Item_Fat_Content and Item_Type will affect the Item_Weight, don’t you think? So, let’s group the DataFrame by these columns and handle the missing weights using the mean of these groups:

df['Item_Weight'] = df.groupby(['Item_Fat_Content', 'Item_Type'])['Item_Weight'].transform(

lambda x: x.fillna(x.mean())

)

“Using the Transform function, a DataFrame calls a function on itself to produce a DataFrame with transformed values.”

You can read more about the transform() function in this article.

Filtration allows us to discard certain values based on computation and return only a subset of the group. We can do this using the filter() function in Pandas.

Let’s take a look at the number of rows in our DataFrame presently:

df.shape Output:

(8523, 12)If I wanted only those groups that have item weights within 3 standard deviations, I could use the filter function to do the job:

def filter_func(x):

return x['Item_Weight'].std() < 3

df_filter = df.groupby(['Item_Weight']).filter(filter_func)

df_filter.shapeOutput:

(8510, 12)GroupBy has conveniently returned a DataFrame with only those groups that have Item_Weight less than 3 standard deviations.

Pandas’ apply() function applies a function along an axis of the DataFrame. When using it with the GroupBy function, we can apply any function to the grouped result.

For example, if I wanted to center the Item_MRP values with the mean of their establishment year group, I could use the apply() function to do just that”:

df_apply = df.groupby(['Outlet_Establishment_Year'])['Item_MRP'].apply(lambda x: x - x.mean())

df_applyOutput:

Here, the values have been centered, and you can check whether the item was sold at an MRP above or below the mean MRP for that year.

I’m sure you can see how amazing the Pandas GroupBy function is and how useful it can be for analyzing your data. I hope this article helped you understand the function better! But practice makes perfect, so start with the super impressive datasets on our very own DataHack platform. Moving forward, you can read about how you can analyze your data using a pivot table in Pandas.

Hope you like the article and know you have clear understanding of the topics, pandas groupby aggregate, group by in pandas, groupby aggregate pandas.

Key Takeaways

A. Yes, we can use groupby without an aggregate function in pandas. In this case, groupby will return a GroupBy object that can be used to perform further operations.

A. Groupby and groupby agg are both methods in pandas that allow us to group a DataFrame by one or more columns and perform operations on the resulting groups. However, there are some important differences between the two methods. Groupby returns a GroupBy object, which can be used to perform a variety of operations on the groups, such as applying functions, resetting index, or filtering. Whereas groupby agg is a method specifically for performing aggregation operations on a grouped DataFrame. It allows us to specify one or more aggregation functions to apply to each group and returns a DataFrame containing the results.

A. You can use the dropna method to handle missing values in a grouped DataFrame. This method can be applied before or after the grouping process to ensure that the missing values do not affect the analysis.

A. Categorical data plays a significant role in groupby operations as it allows for efficient grouping and aggregation. When using categorical data, pandas can perform the groupby operation faster and use less memory. You can convert a column to a categorical type using astype('category') before grouping.

A. To get unique values within each group, you can use the nunique method, which returns the number of unique values in each group.

A. Dictionaries can be used in groupby operations to specify different aggregation functions for different columns. You can pass a dict to the agg method where keys are column names and values are aggregation functions.

Lorem ipsum dolor sit amet, consectetur adipiscing elit,

Thanks for sharing, helpful article for quick reference.

Glad you found it useful!

Well, the sample data used should be provided in the article, That would be a great help and aid in understanding the topic.

Hi Ruff, I have used the Big Mart Sales dataset from the DataHack platform, you can donwload it from there.

thanks for sharing...awesome explaination

Amazing article! Thanks for sharing

Very useful.Thanks for sharing

This is one of the best explanations I have read. Thank you!