Introduction

Language barriers can hinder global communication, but AI and natural language processing offer solutions. Large Language Models (LLMs) trained on vast amounts of text capture a great deal of linguistic context, which makes them well suited to translating between languages. LLM-based translation generally outperforms traditional rule-based methods in accuracy and fluency. This article explains how to build a translator using LLMs and Hugging Face, a prominent natural language processing platform.

From library installation to a user-friendly web app, you’ll learn to create a translation system. Embracing LLMs unlocks endless opportunities for effective cross-lingual communication, bridging gaps in our interconnected world.

Learning Objectives

By the end of this article, you will be able to:

- Import and use Hugging Face transformers and OpenAI models to perform translation tasks.

- Build your own translator for the languages you need and tune it to your users’ requirements.

This article was published as a part of the Data Science Blogathon.

Understanding Translators and Their Importance

Translators are tools or systems that convert text from one language to another while preserving the meaning and context. They help us to bridge the gap between people who speak different languages and enable effective communication on a global scale.

The importance of translators is evident in various domains, including business, travel, education, and diplomacy. Whether it’s translating documents, websites, or conversations, translators facilitate cultural exchange and foster understanding.

I ran into this problem recently on a trip: I didn’t understand the local language, and the people I met didn’t understand mine. In the end, Google Translate got me through. (haha)

Overview of OpenAI and Hugging Face

OpenAI needs little introduction: it is a well-known research organization focused on artificial intelligence. It created language models such as the GPT series and offers them through an API. These models have changed how translation and other NLP tasks are done.

Hugging Face is another platform that offers a variety of NLP models and tools. For tasks like translation, it provides pre-trained models, fine-tuning options, and simple pipelines. Hugging Face has emerged as a go-to source for NLP developers and researchers.

Advantages of Using LLMs for Translation

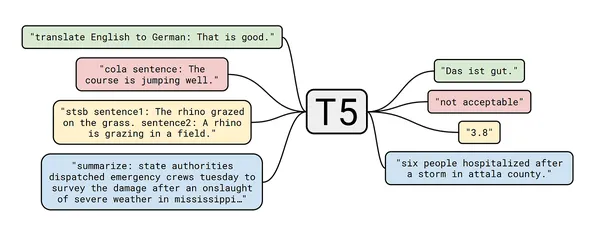

Language models such as OpenAI’s GPT and T5 (developed by Google and available through Hugging Face) have revolutionized the field of translation. Here are some advantages of using LLMs for translation:

- Contextual Understanding: LLMs have been trained on vast amounts of text data, enabling them to capture nuanced meanings and context.

- Improved Accuracy: LLMs have significantly improved translation quality compared to traditional rule-based methods.

- Multilingual Support: LLMs can handle translation between multiple languages, making them versatile and adaptable.

- Continual Learning: LLMs can be fine-tuned and updated with new data, improving translation quality continuously.

Now, let’s dive into the step-by-step process of building a translator using LLMs and Hugging Face.

Building a Translator

Installing the Required Libraries

To get started, we first need to install the necessary libraries.

Open your preferred Python environment (I used the base environment). You can create a new one by running python -m venv <env-name>.

We install transformers and datasets to load models and data from Hugging Face, along with their supporting libraries. You can run these commands in a Jupyter notebook or, without the leading %, in a terminal.

    %pip install sacremoses==0.0.53
    %pip install datasets
    %pip install transformers
    %pip install torch torchvision torchaudio
    %pip install "transformers[sentencepiece]"

Setting up the T5-Small Model with Hugging Face

As mentioned earlier, we will use Hugging Face models in our project. Specifically, we’ll use the T5-Small model for text translation. Hugging Face hosts many other translation models, but we will focus on T5-Small here.

Before setting up T5-Small, we import the libraries we installed.

    # import the libraries
    from datasets import load_dataset
    from transformers import pipeline

We save the model in cache_dir, so there is no need to download it repeatedly.

    # Create a pipeline for the T5-Small model and cache its weights in cache_dir
    t5_small_pipeline = pipeline(
        task="text2text-generation",
        model="t5-small",
        max_length=50,
        model_kwargs={"cache_dir": "/Users/tarakram/Documents/Translate/t5_small"},
    )

Translating Text with T5-Small

Now, let’s translate some text using the T5-Small model:

Note: One drawback of T5-Small is that it supports only a few languages. Its translation training covered English to German, French, and Romanian. The goal of this guide is to demonstrate how to use translation models in a project.

    # English to Romanian
    t5_small_pipeline(
        "translate English to Romanian: Hey How are you ?"
    )

    # English to Spanish
    t5_small_pipeline(
        "translate English to Spanish: Hey How are you ?"
    )

    # The model supports only a few languages, so pairs it was not
    # trained on (such as English to Spanish) may not translate correctly.

The t5_small_pipeline generates a translation of the given text into the target language named in the prompt.
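The prompt format used above can be wrapped in a small helper. The following is a minimal sketch, not part of the original project: the names build_t5_prompt, translate_with_t5, and T5_TARGET_LANGUAGES are hypothetical, and the helper assumes it receives a text2text-generation pipeline like t5_small_pipeline, which returns a list of dictionaries with a generated_text key. The language set reflects the English to German, French, and Romanian translation tasks that T5 was trained on.

```python
# Hypothetical helper names; only the prompt format and the
# "generated_text" output key come from the T5 pipeline itself.

# Target languages covered by T5's English translation training tasks.
T5_TARGET_LANGUAGES = {"German", "French", "Romanian"}


def build_t5_prompt(text, target_language, source_language="English"):
    """Build the task prefix T5 expects, e.g. 'translate English to German: ...'."""
    return f"translate {source_language} to {target_language}: {text}"


def translate_with_t5(translator, text, target_language):
    """Translate English text with a text2text-generation pipeline.

    `translator` is any callable that behaves like t5_small_pipeline:
    it takes a prompt string and returns [{"generated_text": "..."}].
    """
    if target_language not in T5_TARGET_LANGUAGES:
        raise ValueError(
            f"T5-Small was not trained to translate English to {target_language}"
        )
    outputs = translator(build_t5_prompt(text, target_language))
    return outputs[0]["generated_text"]
```

With the pipeline created earlier, translate_with_t5(t5_small_pipeline, "Hey, how are you?", "Romanian") returns the translated string, while asking for Spanish raises a ValueError instead of producing an unreliable result.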

Enhancing Translation with OpenAI’s LLMs

Although the T5-Small model covers several languages, we can widen language coverage and improve translation quality by using OpenAI’s LLMs. To do this, we need an OpenAI API key. Once you have the key, you can proceed with the following code:

    # importing the libraries
    import openai
    from secret_key import openapi_key  # local secret_key.py that holds your API key
    import os

    # Enter your OpenAI key here
    os.environ['OPENAI_API_KEY'] = openapi_key
    # Also set the key on the module, since openai was imported before the variable was set
    openai.api_key = openapi_key

    # A function that takes the text, the source language,
    # and the target language, and returns the translation.
    # Note: openai.Completion belongs to the legacy openai<1.0 SDK.
    def translate_text(text, source_language, target_language):
        response = openai.Completion.create(
            engine='text-davinci-002',
            prompt=f"Translate the following text from {source_language} to {target_language}:\n{text}",
            max_tokens=100,
            n=1,
            stop=None,
            temperature=0.2,
            top_p=1.0,
            frequency_penalty=0.0,
            presence_penalty=0.0,
        )
        # Keep only the first line of the model's reply
        translation = response.choices[0].text.strip().split("\n")[0]
        return translation

    # Get user input
    text = input("Enter the text to translate: ")
    source_language = input("Enter the source language: ")
    target_language = input("Enter the target language: ")

    translation = translate_text(text, source_language, target_language)

    # print the translated text
    print(f'Translated text: {translation}')

The provided code sets up a translation function using OpenAI’s LLMs. It sends a request to the OpenAI API for translation and retrieves the translated text.
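The function above is written against the legacy Completions interface. As a rough sketch of the same idea with the chat-based client in openai 1.0 and later, the version below may be useful. It is not the author's code: the model name gpt-4o-mini and the helper names build_messages and translate_text_chat are assumptions, so substitute whichever chat model your account can use. The client reads the key from the OPENAI_API_KEY environment variable.

```python
def build_messages(text, source_language, target_language):
    """Build the chat messages for a translation request (hypothetical helper)."""
    return [
        {
            "role": "system",
            "content": "You are a translator. Reply with the translation only.",
        },
        {
            "role": "user",
            "content": (
                f"Translate the following text from {source_language} "
                f"to {target_language}:\n{text}"
            ),
        },
    ]


def translate_text_chat(text, source_language, target_language, model="gpt-4o-mini"):
    """Translate text with the Chat Completions API (openai>=1.0).

    The model name is an assumption; any available chat model should work.
    """
    # Imported here so the prompt helper above can be used without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # picks up OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(text, source_language, target_language),
        max_tokens=100,
        temperature=0.2,
    )
    # content can be None for some responses, so fall back to an empty string
    return (response.choices[0].message.content or "").strip()
```

Unlike the original function, this sketch returns the whole reply rather than only its first line, so multi-line input stays intact.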

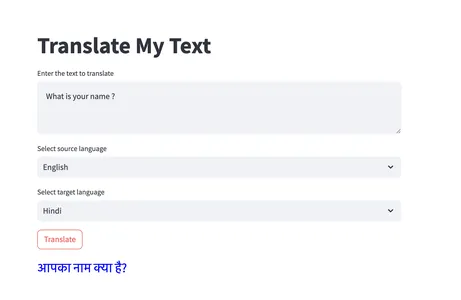

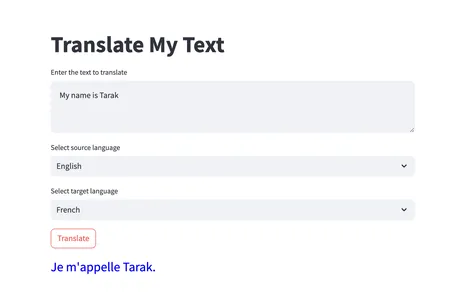

Creating a Translator Web Application with Streamlit

To make our translation accessible via a user-friendly interface, we can create a translator web application using Streamlit. Here’s an example code snippet:

    # importing the libraries
    import streamlit as st
    import os
    import openai
    from secret_key import openapi_key  # local secret_key.py that holds your API key

    # Enter your OpenAI key here
    os.environ['OPENAI_API_KEY'] = openapi_key
    # Also set the key on the module, since openai was imported before the variable was set
    openai.api_key = openapi_key

    # Define the list of available languages
    languages = [
        "English",
        "Hindi",
        "French",
        "Telugu",
        "Albanian",
        "Bengali",
        "Bhojpuri",
        # Add more languages as needed
    ]

    # Translate text with the OpenAI Completions endpoint (legacy openai<1.0 SDK)
    def translate_text(text, source_language, target_language):
        response = openai.Completion.create(
            engine='text-davinci-002',
            prompt=f"Translate the following text from {source_language} to {target_language}:\n{text}",
            max_tokens=100,
            n=1,
            stop=None,
            temperature=0,
            top_p=1.0,
            frequency_penalty=0.0,
            presence_penalty=0.0,
        )
        # Keep only the first line of the model's reply
        translation = response.choices[0].text.strip().split("\n")[0]
        return translation

    # Streamlit web app
    def main():
        st.title("Translate My Text")

        # Input text
        text = st.text_area("Enter the text to translate")

        # Source language dropdown
        source_language = st.selectbox("Select source language", languages)

        # Target language dropdown
        target_language = st.selectbox("Select target language", languages)

        # Translate button
        if st.button("Translate"):
            translation = translate_text(text, source_language, target_language)
            st.markdown(
                f'<p style="color: blue; font-size: 25px;">{translation}</p>',
                unsafe_allow_html=True,
            )

    if __name__ == '__main__':
        main()

This code uses the Streamlit library to create a web application. Users can enter the text to translate, select the source and target languages, and click the “Translate” button to obtain the translation.
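Because the app renders the model output with unsafe_allow_html=True, it can be worth escaping that output and checking the inputs before calling the API. The sketch below is one possible approach, not part of the original app: validate_request and render_translation_html are hypothetical helpers, and html.escape comes from Python's standard library.

```python
import html


def validate_request(text, source_language, target_language):
    """Return an error message for an unusable request, or None if it is fine."""
    if not text.strip():
        return "Please enter some text to translate."
    if source_language == target_language:
        return "Source and target languages must be different."
    return None


def render_translation_html(translation):
    """Wrap the translation in the styled paragraph, escaping HTML special characters."""
    safe_text = html.escape(translation)
    return f'<p style="color: blue; font-size: 25px;">{safe_text}</p>'
```

Inside main(), the button handler could call validate_request first, show any returned message with st.warning, and otherwise pass render_translation_html(translation) to st.markdown.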

Translation is used in many ways; here are some success stories from real-world applications:

- Google Translate is a widely used translation service that utilizes LLMs to provide accurate translations across multiple languages.

- DeepL Translator is known for its high-quality translations and relies on LLMs to achieve accurate and natural-sounding results.

- Facebook’s translation systems employ LLMs to facilitate seamless communication across different languages on the platform.

- Automatic speech translation systems leverage LLMs to enable real-time spoken language translation, benefiting international conferences and teleconferencing.

Conclusion

This article walked through the steps of creating a translator using Hugging Face and OpenAI LLMs. It emphasized the value of translators in the modern world and the benefits they bring in breaking down language barriers and promoting efficient global communication. You can now develop your own translation systems using LLMs and platforms like Hugging Face.

Key Takeaways

- Language models such as OpenAI’s GPT-3 and GPT-4 and Google’s T5 (available through Hugging Face) have transformed the field of translation. They offer contextual understanding, improved accuracy, multilingual support, and the ability to improve over time through fine-tuning.

- With Hugging Face, you can easily access pre-trained models, fine-tune them for specific tasks, and leverage ready-to-use pipelines for various NLP tasks, including translation.

- By leveraging LLMs and building translators, we can foster global understanding, facilitate cross-cultural communication, and promote collaboration and exchange in various domains.

Here is the project Link – GitHub

You can connect with me – LinkedIn

Frequently Asked Questions

A. LLMs have a contextual understanding of language, allowing them to capture meanings and provide accurate translations. They can handle multiple languages and continuously improve through fine-tuning.

A. As shown in this project, you can use platforms like Hugging Face, which provide pre-trained models and easy-to-use pipelines for translation tasks. Additionally, you can use OpenAI’s LLMs by integrating their API into your translation system.

A. Yes, by incorporating OpenAI’s LLMs, you can translate between a broader range of languages. OpenAI’s LLMs offer extensive language support (95+ languages) and can be used for specific translation tasks.

A. LLMs have significantly improved translation accuracy compared to traditional methods. However, the quality of translations may vary depending on the specific language pair and the amount of training data available.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.