Mining YouTube using Python & performing social media analysis (on ALS ice bucket challenge)

If you are someone like me, you would have been swamped by the constant feed of people pouring ice buckets over themselves – but you still watch that next video in the feed, just to see how the person reacted to the icy shock!

Yes, you are right – I am talking about the ALS ice bucket challenge. Thankfully, the fever has not caught LinkedIn (yet)! Please don’t get me wrong – I have nothing against the cause; I would donate as much as I can and would want the world to do so as well – but all this iciness is getting to me!

Anyway, by this time, the analyst in me was getting excited! Why? He had just found something interesting to analyze. I thought I would analyze the impact of this campaign and see how much awareness it has created. This is the power of open source tools and data freely available over the internet – an analyst can sit in front of a laptop and analyze what is happening across the globe with a few lines of code!

Data point 1 – Google Trends

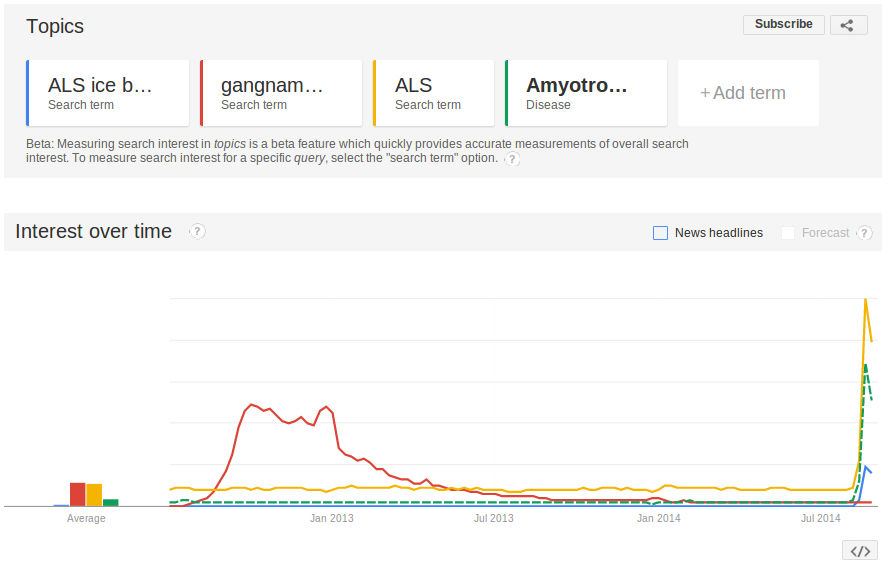

Any awareness / viral campaign can be measured by the impact it creates on Google searches related to that topic. So that provides us with the first platform to measure this impact. Let us look at what Google Trends has to say about ALS awareness searches. For the sake of comparison, I have added “Gangnam Style” to the mix. “Gangnam Style” provided a unique phrase, which was almost unheard of before the video became viral. Here is what Google throws up:

Looking at this graph, you can see the magnitude of the increase in awareness we are talking about. “Gangnam Style” searches, even at their peak, were just half of what “ALS” alone has today. If you add the associated keywords to the mix, we might be talking about an impact of 5x – 10x of Google searches compared to “Gangnam Style”. Having said that, I expect these search terms to be less sticky than “Gangnam Style”, so the overall effect might be lower – but only time will tell.

P.S. “Gangnam style” is probably not the best comparison – but I couldn’t think of anything else. If you have any other suggestions – please feel free to add them in the comments below.

More data points – Analyzing Social media channels:

In addition to Google searches, the ALS campaign has gone viral on the following social media channels:

- Facebook
- Twitter
- Instagram
- YouTube

Facebook doesn’t provide a way to extract universal data, or any obvious way to extract data outside my network, so I can’t use it for this analysis. Also, I haven’t used Instagram, so I’ll skip the effort to understand it. Analyzing Twitter is not that difficult – you can extract data using a few hashtags – and the analysis would be very similar to what we did to find out (Who is the world cheering for?) before the recent FIFA World Cup. Surprisingly, I did not find any easy tutorials / guides to mine the data available on YouTube. That just doubled the fun! So here is what you need to do to mine YouTube using Python:

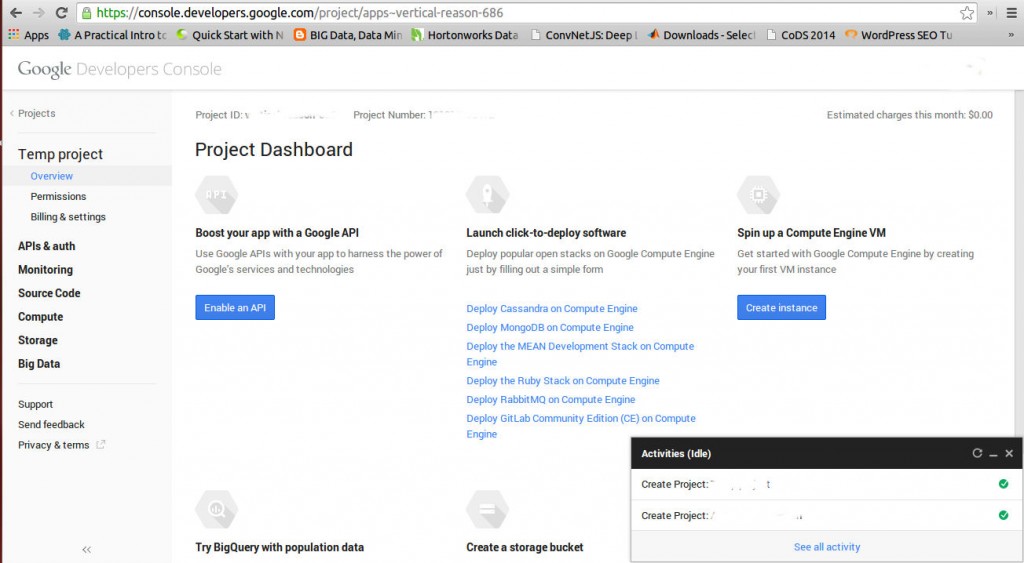

Step 1 – Extracting the API key from Google Developer Console

The first thing you need to do is to extract the API key from Google Developer Console. In order to do so, you will need to activate your account for GDC. Once you have done this, you need to create a new Project:  You can provide a PROJECT ID for your easy reference. Once the project is created, you will automatically be re-directed to the dashboard for the project:

You can provide a PROJECT ID for your easy reference. Once the project is created, you will automatically be re-directed to the dashboard for the project:  Once at this stage, you need to create a key by going in APIs & auth section. In the APIs sub-section, switch on YouTube Data API v3, in case this API is OFF. Also, please note the limit Google has put on the number of units / day – it should be sufficient for any non-commercial data extraction. Next, go to the Credentials sub-section and select “Create new key” to create a Public API access.

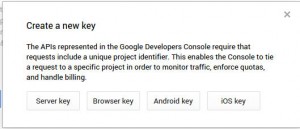

Once at this stage, you need to create a key by going in APIs & auth section. In the APIs sub-section, switch on YouTube Data API v3, in case this API is OFF. Also, please note the limit Google has put on the number of units / day – it should be sufficient for any non-commercial data extraction. Next, go to the Credentials sub-section and select “Create new key” to create a Public API access.  In the pop up, select Server key.

In the pop up, select Server key.  You should keep the key generated handy, as this would be required to be replaced in your Python code.

You should keep the key generated handy, as this would be required to be replaced in your Python code.
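Rather than pasting the key straight into the script, one option is to read it from an environment variable, so that it does not travel with the code when you share it. This is only a minimal sketch: the variable name `YOUTUBE_API_KEY` and the helper `load_api_key` are made up for this example and are not something Google defines.

```python
import os

# Hypothetical variable name chosen for this sketch; use any name you like.
ENV_VAR_NAME = "YOUTUBE_API_KEY"


def load_api_key(env=os.environ, var_name=ENV_VAR_NAME):
    """Return the API key stored in the environment, or raise a clear error."""
    key = env.get(var_name, "").strip()
    # Treat the placeholder used in the script as "not set".
    if not key or key == "REPLACE_ME":
        raise RuntimeError(
            f"Set the {var_name} environment variable to your API key first"
        )
    return key


try:
    DEVELOPER_KEY = load_api_key()
    print("API key loaded,", len(DEVELOPER_KEY), "characters long")
except RuntimeError as err:
    print(err)
```

You would set the variable once in your shell before running the script, and the rest of the code can keep using `DEVELOPER_KEY` as before.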

Step 2 – Install and import relevant libraries in Python

Google has provided a few Python scripts to mine the YouTube API. However, I felt that there is a lack of good documentation for them.

[stextbox id=”grey”]
# The client library is importable as googleapiclient (older examples use the alias apiclient).
from googleapiclient.discovery import build  # pip install google-api-python-client
from googleapiclient.errors import HttpError  # pip install google-api-python-client
from oauth2client.tools import argparser  # pip install oauth2client
import pandas as pd  # pip install pandas
[/stextbox]

We will use the API provided by Google to read the search results into a nested dictionary. Next, we will convert them to a DataFrame and use the Pandas library to perform exploratory analysis, as explained in this article before.

Step 3 – Set YouTube Search parameters

Next, you need to plug the key you generated in Step 1 into your Python script. You also need to specify additional parameters like the API version and service name – leave them unchanged if you are not sure. You can also specify the search term and the maximum number of search results here.

[stextbox id=”grey”]
DEVELOPER_KEY = "REPLACE_ME"
YOUTUBE_API_SERVICE_NAME = "youtube"
YOUTUBE_API_VERSION = "v3"

# Change the default to the term you want to search for.
argparser.add_argument("--q", help="Search term", default="ALS Ice Bucket Challenge")

# Default number of results returned. The API accepts values from 0 to 50.
argparser.add_argument("--max-results", help="Max results", default=25)

args = argparser.parse_args()
options = args
[/stextbox]

You are now set to pull the results.
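If you would rather not depend on the parser object shipped with `oauth2client`, the same two options can be declared with Python's standard `argparse` module. This is a swapped-in alternative, not what the script above does; the helper names `build_parser` and `max_results_type` are invented for this sketch. Building a fresh parser inside a function also avoids registering `--q` twice on a shared parser when the code is re-run in an interactive session, which is one likely cause of a "conflicting option string" error.

```python
import argparse

MAX_RESULTS_LIMIT = 50  # per-request ceiling for maxResults in search.list


def max_results_type(value):
    """Parse --max-results and reject values outside 0-50."""
    number = int(value)
    if not 0 <= number <= MAX_RESULTS_LIMIT:
        raise argparse.ArgumentTypeError(
            f"must be between 0 and {MAX_RESULTS_LIMIT}, got {number}"
        )
    return number


def build_parser():
    """Create a new parser on every call so options are never added twice."""
    parser = argparse.ArgumentParser(description="Search YouTube for videos")
    parser.add_argument("--q", default="ALS Ice Bucket Challenge",
                        help="Search term")
    parser.add_argument("--max-results", type=max_results_type, default=25,
                        help="Results per request (0-50)")
    return parser


# Arguments are passed explicitly here for illustration; in a command-line
# script you would call parse_args() with no arguments instead.
options = build_parser().parse_args(["--max-results", "10"])
print(options.q, options.max_results)
```

The resulting `options` object exposes `options.q` and `options.max_results`, so the rest of the script can stay unchanged.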

Step 4 – Perform YouTube search and create a list

Now that all the desired options are set, we run the search, retrieve the results (id and snippet), keep only the videos (filtering out channels and playlists) and then join the video ids into a single comma-separated string.

[stextbox id=”grey”]
youtube = build(YOUTUBE_API_SERVICE_NAME, YOUTUBE_API_VERSION, developerKey=DEVELOPER_KEY)

# Call the search.list method to retrieve results matching the specified
# query term.
search_response = youtube.search().list(
    q=options.q,
    type="video",
    part="id,snippet",
    maxResults=options.max_results
).execute()

videos = {}

# Map each video id to its title, filtering out channels and playlists.
for search_result in search_response.get("items", []):
    if search_result["id"]["kind"] == "youtube#video":
        videos[search_result["id"]["videoId"]] = search_result["snippet"]["title"]

# Comma-separated video ids, as expected by videos.list in the next step.
s = ','.join(videos.keys())
[/stextbox]
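A single search request returns one page of at most 50 results. To collect more, the response carries a `nextPageToken` that can be sent back as `pageToken` on the next request. The function below is a rough sketch of that loop, not part of the original script: the name `search_video_titles` and its parameters are invented here, and `youtube` is assumed to be the client object returned by `build(...)` above. The API may stop handing out page tokens before a large target is reached.

```python
def search_video_titles(youtube, query, total=100, page_size=50):
    """Collect up to `total` {videoId: title} pairs by following page tokens."""
    videos = {}
    page_token = None
    while len(videos) < total:
        params = {
            "q": query,
            "type": "video",
            "part": "id,snippet",
            # Never ask for more than is still needed (or than one page allows).
            "maxResults": min(page_size, total - len(videos)),
        }
        if page_token:
            params["pageToken"] = page_token
        response = youtube.search().list(**params).execute()

        items = response.get("items", [])
        for item in items:
            if item["id"]["kind"] == "youtube#video":
                videos[item["id"]["videoId"]] = item["snippet"]["title"]

        page_token = response.get("nextPageToken")
        if not page_token or not items:
            break  # no further pages to fetch
    return videos
```

Calling `search_video_titles(youtube, options.q, total=200)` would then stand in for the single `search().list(...)` call. Keep in mind that every extra page counts against the daily quota, and that the statistics request in the next step may likewise need the ids split into smaller batches.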

Step 5 – Add video statistics to the results and append further results

Once we have the ids and titles of the videos, we fetch the statistics for each of them, merge the required fields into one dictionary per video and finally convert the list of dictionaries into a Pandas DataFrame.

[stextbox id=”grey”]
videos_list_response = youtube.videos().list(
    id=s,
    part='id,statistics'
).execute()

# Build one dictionary per video: id, title and the statistics fields.
res = []
for i in videos_list_response['items']:
    temp_res = dict(v_id=i['id'], v_title=videos[i['id']])
    temp_res.update(i['statistics'])
    res.append(temp_res)

pd.DataFrame.from_dict(res)
[/stextbox]

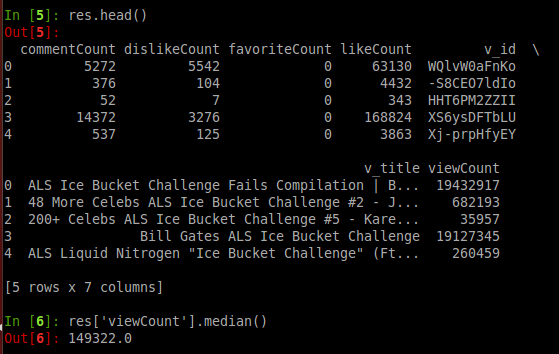
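One detail worth handling before any analysis: the counts in `statistics` arrive as strings, and as far as I know a count can be missing altogether when the uploader has hidden it. The sketch below shows one way to turn a result into a flat row with integer counts. The helper `to_row`, the field list and the sample values are assumptions made for this example, not part of the script above.

```python
# Fields picked for this sketch; adjust to whatever your response contains.
COUNT_FIELDS = ("viewCount", "likeCount", "commentCount")


def to_row(video_id, title, statistics):
    """Build one flat row, converting count strings to int (missing -> None)."""
    row = {"v_id": video_id, "v_title": title}
    for field in COUNT_FIELDS:
        value = statistics.get(field)
        row[field] = int(value) if value is not None else None
    return row


# Made-up sample shaped like one element of videos_list_response["items"].
sample_item = {"id": "abc123",
               "statistics": {"viewCount": "1500", "likeCount": "40"}}
sample_titles = {"abc123": "Example title"}

row = to_row(sample_item["id"],
             sample_titles[sample_item["id"]],
             sample_item["statistics"])
print(row)
```

Missing counts are kept as `None` rather than 0 so that a hidden count is not mistaken for a video nobody liked or commented on.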

Step 6 – Performing analysis on YouTube data

Till now, we have extracted the top 500 videos from YouTube Search and added relevant statistics like views, likes, dislikes and comments for each of them. (Note that a single search request returns at most 50 results, so reaching 500 means repeating the search with the page token returned by the API.) Next, you can perform exploratory analysis as mentioned in this article to find the average views, outliers (popular videos in this case), comparisons against benchmarks and other similar data points from the past.

If you remember, this is exactly what we did with the Kaggle dataset for Titanic passengers. So, I wouldn’t go into a lot of details here. But here is a simple example:
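A simple example along these lines might look like the sketch below. The rows are made-up placeholders shaped like the output of Step 5, not real YouTube numbers, and the 1.5 × IQR rule for flagging outliers is just one common choice picked for this illustration.

```python
import pandas as pd

# Hypothetical rows; the view counts are invented and arrive as strings,
# as they would from the API.
rows = [
    {"v_id": "a", "v_title": "Video A", "viewCount": "100"},
    {"v_id": "b", "v_title": "Video B", "viewCount": "120"},
    {"v_id": "c", "v_title": "Video C", "viewCount": "90"},
    {"v_id": "d", "v_title": "Video D", "viewCount": "110"},
    {"v_id": "e", "v_title": "Video E", "viewCount": "105"},
    {"v_id": "f", "v_title": "Video F", "viewCount": "5000"},
]
df = pd.DataFrame(rows)
df["viewCount"] = pd.to_numeric(df["viewCount"])

mean_views = df["viewCount"].mean()
median_views = df["viewCount"].median()

# Flag unusually popular videos: anything above Q3 + 1.5 * IQR.
q1, q3 = df["viewCount"].quantile([0.25, 0.75])
upper_fence = q3 + 1.5 * (q3 - q1)
popular = df[df["viewCount"] > upper_fence].sort_values(
    "viewCount", ascending=False
)

print("Mean views:", round(mean_views, 1))
print("Median views:", median_views)
print(popular[["v_id", "v_title", "viewCount"]])
```

The gap between the mean and the median is itself a quick hint that a handful of very popular videos dominate the totals.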

End Notes:

In this article, we looked at how to analyze social media data available on the web. We had done this for Twitter in the past. Facebook doesn’t allow access to universal data for privacy reasons. Here, we looked at mining YouTube data specifically. Here is the complete code, in case you want to use it (except the analysis in Pandas):

[stextbox id=”grey”]
# Complete Python script to mine YouTube data. Just replace your key and the keyword you want to search.

# The client library is importable as googleapiclient (older examples use the alias apiclient).
from googleapiclient.discovery import build  # pip install google-api-python-client
from googleapiclient.errors import HttpError  # pip install google-api-python-client
from oauth2client.tools import argparser  # pip install oauth2client
import pandas as pd  # pip install pandas
import matplotlib.pyplot as plt  # pip install matplotlib

# Set DEVELOPER_KEY to the API key value from the APIs & auth > Registered apps
# tab of
#   https://cloud.google.com/console
# Please ensure that you have enabled the YouTube Data API for your project.
DEVELOPER_KEY = "REPLACE_ME"
YOUTUBE_API_SERVICE_NAME = "youtube"
YOUTUBE_API_VERSION = "v3"

# Change the default to the term you want to search for.
argparser.add_argument("--q", help="Search term", default="ALS Ice Bucket Challenge")

# Default number of results returned. The API accepts values from 0 to 50.
argparser.add_argument("--max-results", help="Max results", default=25)

args = argparser.parse_args()
options = args

youtube = build(YOUTUBE_API_SERVICE_NAME, YOUTUBE_API_VERSION, developerKey=DEVELOPER_KEY)

# Call the search.list method to retrieve results matching the specified
# query term.
search_response = youtube.search().list(
    q=options.q,
    type="video",
    part="id,snippet",
    maxResults=options.max_results
).execute()

videos = {}

# Map each video id to its title, filtering out channels and playlists.
for search_result in search_response.get("items", []):
    if search_result["id"]["kind"] == "youtube#video":
        videos[search_result["id"]["videoId"]] = search_result["snippet"]["title"]

# Comma-separated video ids, as expected by videos.list.
s = ','.join(videos.keys())

videos_list_response = youtube.videos().list(
    id=s,
    part='id,statistics'
).execute()

# Build one dictionary per video: id, title and the statistics fields.
res = []
for i in videos_list_response['items']:
    temp_res = dict(v_id=i['id'], v_title=videos[i['id']])
    temp_res.update(i['statistics'])
    res.append(temp_res)

pd.DataFrame.from_dict(res)
[/stextbox]

What I found was that the increase in awareness caused by the ALS campaign was huge – somewhere on the order of 5-10x compared to a few other viral phenomena of the past. The YouTube impact is significant, but still relatively small (the video of Bill Gates has approx. 19 million views) compared to other viral YouTube videos. Only time will tell how sticky the awareness is. It might be interesting to refresh the analysis a few months down the line to see whether the campaign had a long-lasting impact. Till then, you now have a powerful script to analyze YouTube data!

As usual, if you have any questions / suggestions / thoughts, please feel free to put them in the comments. I hope you enjoy this script, which is relatively hard to find elsewhere and should enable you to perform awesome pieces of analysis.

I would like to thank my friend Jayakumar Subramanian, who helped me immensely with the scripting to get the data from YouTube. Jayakumar is an investment banker by profession and has an active research interest in reinforcement learning. He is someone I reach out to for those hour-long discussions on machine learning in the middle of the night!

Hi Kunal, I was wondering if you could publish an article on analysis of WhatsApp chat using Python. I have tried my hand at it: I emailed the WhatsApp chat to my email id, then imported the text file into a data frame using the pandas module. I used ": " as the delimiter. I am still facing some issues in putting this into a dataframe. I am new to Python and would like to understand how to parse a text file to put it into a dataframe. Thanks!

Helpful

Excellent and very useful tutorial.

Hi, Is this kind of analysis also possible on sas and R? Also what do you use/prefer the most while doing analysis? Regards, Sandeep

Sandeep, I have used / seen people using R for mining several social media websites. You can see some analysis on Twitter and Facebook, which we did in past. For YouTube, since Google has not provided a direct script for R (which it has for Python), it might become slightly tricky to do it - but definitely can be done. I believe SAS also should have some solution, but because of the high costs involved, I haven't seen people talking about it. I have also not done any such analysis in SAS. Easier and simpler way to do this in SAS might be to first get data using Python / R and then import it in SAS. Regards, Kunal

Hi Kunal, thanks for article. i am trying to run this python code on canopy and am getting this error: ImportError: No module named discovery. i understand that i need to install google api python client but haven't been able to find a solution. can you help?

Hi there, I attempted to run the programme but resulted this: ''ArgumentError: argument --q: conflicting option string(s): --q'' would be great if you could help. Cheers, Clinton

Hi Kunal, Great content Again, I follow Analytics vidhya very closely. My question is, please while explaining the code also include the module associated with following scripts, I am not able to run argparser and build . I have already installed argparse and argparse2. Can you please help with this? Thanks Again, Manu

Hi, Thanks for the article. I followed the steps. But I am only able to get at most 50 videos from YouTube. I can only set "Max results" to be at most 50. But in Step6, you mentioned extracting 500 videos. Am I missing something? What should I do to collect video information for the entire search results? Thank you. June

Hello! I want to do YouTube sentiment analysis, and I am a beginner in machine learning. Would I be able to build the code in Python?

Hey - You can follow a similar approach to the article to perform your task.
