Introduction

This article introduces TensorFlow, a powerful Deep Learning library, and shows how to put neural networks to practical use. Through a hands-on implementation, readers gain insight into how the pieces fit together. Familiarity with neural networks and programming will help; the concepts carry over to any language, although the code here is written in Python. Demand for Deep Learning keeps rising, from self-driving car engineers in the automotive industry to competitions and projects of every kind. This is where your path to exploring Deep Learning begins, and with it an opportunity to join this sought-after talent pool. Through this series of articles, readers will gain proficiency in popular Deep Learning libraries and sharpen their skills for solving real-life problems. Get ready for the journey, and explore what TensorFlow makes possible!

You will also learn how to implement a neural network in Python with TensorFlow, and why TensorFlow is a popular choice for convolutional neural networks (CNNs).

Table of contents

- When to Apply Tensorflow Neural Networks?

- How to Solve Neural Network Problems?

- Understanding Image Data and Libraries to Solve it

- What is TensorFlow Neural Networks?

- A typical “flow” of TensorFlow

- Implementing Neural Network in TensorFlow

- Limitations of TensorFlow Neural Network

- Why TensorFlow is used in CNN?

- TensorFlow vs. Other Libraries

- Frequently Asked Questions

When to Apply Tensorflow Neural Networks?

Neural Networks have been in the spotlight for quite some time now. Their “deeper” versions are making tremendous breakthroughs in many fields, such as image recognition, speech and natural language processing.

The main question that arises is when to apply neural networks, and when not to. This field is like a gold mine right now, with new discoveries made every day. To be a part of this “gold rush”, you have to keep a few things in mind:

Neural Networks Require Clear and Informative Data to Train

Try to imagine a Neural Network as a child. It first observes how its parent walks. Then it tries to walk on its own, and with every step, the child learns how to perform that particular task. It may fall a few times, but after a few unsuccessful attempts, it learns how to walk. If you don’t let it walk, it might never learn how. The more exposure you can give the child, the better. In the same way, a neural network learns from the examples it is shown, so it needs plenty of clear, informative training data.

Neural Networks for Complex Problems such as Image Processing

Neural nets belong to a class of algorithms called representation learning algorithms. These algorithms break complex problems down into simpler forms that are understandable (or “representable”). Think of it as chewing food before you swallow. Traditional (non-representation learning) algorithms find this much harder.

Appropriate Type of Neural Network to Solve the Problem

Each problem has its own twists, so the data decides the way you solve it. For example, if the problem is sequence generation, recurrent neural networks are more suitable, whereas for an image-related problem you would probably be better off using convolutional neural networks.

Hardware Requirements are Essential for Running a Deep Neural Network Model

Neural nets were “discovered” long ago, but they have shone in recent years mainly because computational resources have become far more powerful. If you want to solve a real-life problem with these networks, get ready to invest in some high-end hardware, such as a capable GPU!

How to Solve Neural Network Problems?

A neural network is a special type of machine learning (ML) algorithm. Like every ML algorithm, it follows the usual ML workflow of data preprocessing, model building and model evaluation. For the sake of conciseness, here is a to-do list for approaching a Neural Network problem:

- Check if it is a problem where Neural Network gives you uplift over traditional algorithms (refer to the checklist in the section above)

- Do a survey of which Neural Network architecture is most suitable for the required problem

- Define the Neural Network architecture in whichever language / library you choose

- Convert the data to the right format and divide it into batches

- Pre-process the data according to your needs

- Augment Data to increase size and make better trained models

- Feed batches to Neural Network

- Train and monitor changes in training and validation data sets

- Test your model, and save it for future use
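The batching steps in the list above can be sketched in a few lines of plain Python. This is a minimal illustration, not code from the tutorial: make_batches is a hypothetical helper, and the toy integers stand in for real images.

```python
import random


def make_batches(samples, labels, batch_size, seed=0):
    """Shuffle (sample, label) pairs and split them into mini-batches.

    Hypothetical helper for illustration; the last batch may be smaller.
    """
    pairs = list(zip(samples, labels))
    random.Random(seed).shuffle(pairs)  # shuffle so each batch mixes examples
    batches = []
    for start in range(0, len(pairs), batch_size):
        chunk = pairs[start:start + batch_size]
        xs = [x for x, _ in chunk]
        ys = [y for _, y in chunk]
        batches.append((xs, ys))
    return batches


# toy "dataset": 10 samples, each labelled with its parity
samples = list(range(10))
labels = [s % 2 for s in samples]
batches = make_batches(samples, labels, batch_size=4)
print([len(xs) for xs, _ in batches])  # → [4, 4, 2]
```

Shuffling keeps each sample paired with its label while mixing the order; the tutorial below takes a related approach and draws random samples (with replacement) for every batch.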

For this article, I will be focusing on image data. So let us understand that first before we delve into TensorFlow.

Understanding Image Data and Libraries to Solve it

Images are mostly arranged as 3-D arrays, with the dimensions referring to height, width and color channel. For example, if you take a screenshot of your PC at this moment, it is first captured as a 3-D array and then compressed into the ‘.jpeg’ or ‘.png’ file format.
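As a small illustration of this layout, assuming numpy, here is a made-up 2 x 3 pixel RGB image and its conversion to a single grayscale channel. The weights are the ITU-R 601-2 luma transform that Pillow documents for its "L" mode; other grayscale conversions use slightly different weights.

```python
import numpy as np

# a tiny 2 x 3 RGB "image": dimensions are (height, width, color channel)
img = np.array([
    [[255, 0, 0], [0, 255, 0], [0, 0, 255]],          # red, green, blue
    [[255, 255, 255], [0, 0, 0], [128, 128, 128]],    # white, black, gray
], dtype=np.uint8)
print(img.shape)  # → (2, 3, 3)

# collapse the channel axis into one intensity per pixel (ITU-R 601-2 luma weights)
weights = np.array([0.299, 0.587, 0.114])
gray = img.astype(np.float32) @ weights
print(gray.shape)  # → (2, 3)
print(gray.round(1))
```

The grayscale result is a plain 2-D array of numbers, which is exactly what the digit images in this tutorial become after loading.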

While these images are pretty easy for a human to understand, a computer has a hard time understanding them. This phenomenon is called the “semantic gap”. Our brain can look at an image and understand the complete picture in a few seconds. A computer, on the other hand, sees an image as just an array of numbers. So the problem is: how do we explain this image to the machine?

In the early days, people tried to break down the image into a format the machine could “understand”, such as a “template”. For example, a face always has a specific structure that is roughly preserved in every human, such as the position of the eyes and nose, or the shape of the face. But this method is tedious: as the number of objects to recognise grows, hand-made “templates” no longer hold up.

Fast forward to 2012: a deep neural network architecture won the ImageNet challenge, a prestigious challenge to recognise objects in natural scenes. Deep networks went on to win the ImageNet challenges that followed, proving their usefulness for solving image problems.

Data Libraries

So which library / language do people normally use to solve image recognition problems? A quick survey I did showed that most of the popular deep learning libraries have an interface for Python, followed by Lua, Java and Matlab. The most popular libraries, to name a few, are:

What is TensorFlow Neural Networks?

Let’s start with the official definition.

“TensorFlow is an open source software library for numerical computation using dataflow graphs. Nodes in the graph represent mathematical operations, while graph edges represent multi-dimensional data arrays (aka tensors) communicated between them. The flexible architecture allows you to deploy computation to one or more CPUs or GPUs in a desktop, server, or mobile device with a single API.”

If that sounds a bit scary, don’t worry. Here is my simple definition: look at TensorFlow as nothing but numpy with a twist. If you have worked with numpy before, understanding TensorFlow will be a piece of cake! A major difference between numpy and TensorFlow is that TensorFlow follows a lazy programming paradigm. It first builds a graph of all the operations to be done, and only when a “session” is called does it “run” the graph. It’s built to be scalable, using tensors (aka multi-dimensional arrays) as its internal data representation. Building a computational graph can be considered the main ingredient of TensorFlow. (This describes TensorFlow 1.x; TensorFlow 2.x executes operations eagerly by default and keeps the graph-and-session API available under tf.compat.v1.)
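To make “lazy” concrete, here is a toy, pure-Python imitation of the build-then-run pattern. The names Placeholder, Add and run are hypothetical and are not TensorFlow’s API; TensorFlow’s real graph is far richer, but the idea of building first and evaluating later with a feed dictionary is the same.

```python
class Placeholder:
    """A graph input whose value is supplied only when the graph is run."""


class Add:
    """A graph node that records an addition instead of performing it."""

    def __init__(self, a, b):
        self.a, self.b = a, b


def run(node, feed_dict):
    """Evaluate a node by walking the graph and looking up placeholder values."""
    if isinstance(node, Placeholder):
        return feed_dict[node]
    if isinstance(node, Add):
        return run(node.a, feed_dict) + run(node.b, feed_dict)
    return node  # plain constants evaluate to themselves


a, b = Placeholder(), Placeholder()
addition = Add(a, b)  # nothing is computed yet: this only builds the graph
print(run(addition, {a: 2, b: 3}))               # → 5
print(run(Add(addition, 10), {a: 2, b: 3}))      # → 15
```

In TensorFlow 1.x the same idea is expressed with tf.placeholder, tf.add and sess.run(..., feed_dict=...), as the small addition program later in this article shows.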

It’s easy to classify TensorFlow as a neural network library, but it’s not just that. Yes, it was designed to be a powerful neural network library, but it can do much more than that. You can build other machine learning algorithms on it, such as decision trees or k-Nearest Neighbors. You can do most of what you would normally do in numpy! It’s aptly called “numpy on steroids”.

The advantages of using TensorFlow are:

- It has an intuitive construct, because as the name suggests it has “flow of tensors”. You can easily visualize each and every part of the graph.

- Train easily on CPUs or GPUs, and distribute computation across devices

- Platform flexibility. You can run the models wherever you want, whether it is on mobile, server or PC.

A typical “flow” of TensorFlow

Every library has its own “implementation details”, i.e. a way of writing code that follows its coding paradigm. For example, when using scikit-learn, you first create an object of the desired algorithm, then build a model on the training set and get predictions on the test set, something like this:

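# example data (an assumption: any features X, labels y and test features X_test will do)
from sklearn import datasets, svm
digits = datasets.load_digits()
X, y = digits.data[:-10], digits.target[:-10]
X_test = digits.data[-10:]

# define hyperparameters of the ML algorithm
clf = svm.SVC(gamma=0.001, C=100.)

# train
clf.fit(X, y)

# test
clf.predict(X_test)

As I said earlier, TensorFlow follows a lazy approach. The usual workflow of running a program in TensorFlow is as follows: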

- Build a computational graph, this can be any mathematical operation TensorFlow supports.

- Initialize variables: assign initial values to the variables defined previously

- Create session, this is where the magic starts!

- Run graph in session: the compiled graph is passed to the session, which starts its execution

- Close session: shut down the session and release its resources

A few terms used in TensorFlow:

- placeholder: A way to feed data into the graphs

- feed_dict: A dictionary to pass numeric values to computational graph

Let’s write a small program to add two numbers!

# import TensorFlow's graph-and-session API (tf.compat.v1 also works on TensorFlow 2.x)
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

# build computational graph
a = tf.placeholder(tf.int16)
b = tf.placeholder(tf.int16)

addition = tf.add(a, b)

# initialize variables (this graph has none, but the step is part of the usual workflow)
init = tf.global_variables_initializer()

# create session and run the graph; the "with" block closes the session on exit
with tf.Session() as sess:
    sess.run(init)
    print("Addition: %i" % sess.run(addition, feed_dict={a: 2, b: 3}))

Implementing Neural Network in TensorFlow

Note: We could have used a different neural network architecture to solve this problem, but for the sake of simplicity, we settle on a feed-forward multilayer perceptron and implement it in depth.

Let us remember what we learned about neural networks first.

A typical implementation of Neural Network would be as follows:

- Define Neural Network architecture to be compiled

- Transfer data to your model

- Under the hood, the data is first divided into batches, so that it can be ingested. The batches are first preprocessed, augmented and then fed into Neural Network for training

- The model then gets trained incrementally

- Display the accuracy after a specific number of training steps

- After training save the model for future use

- Test the model on new data and check how it performs

Here we solve our deep learning practice problem, Identify the Digits. Let’s take a quick look at the problem statement.

Our problem is image recognition: identifying the digit in a given 28 x 28 image. We have a subset of images for training and the rest for testing our model. So first, download the train and test files. The dataset contains a zipped file of all the images, and train.csv and test.csv contain the names of the corresponding train and test images. No additional features are provided in the datasets; just the raw images, in ‘.png’ format.

As you know, we will use TensorFlow to build a neural network model, so you should first install TensorFlow on your system. Refer to the official installation guide for instructions that match your system specifications.

We will follow the template described above. Create a Jupyter notebook with a Python 3 kernel and follow the steps below.

Let’s import all the required modules

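%pylab inline

import os
import numpy as np
import pandas as pd
from PIL import Image
from sklearn.metrics import accuracy_score
# TensorFlow 1.x-style graph API (also runs on TensorFlow 2.x)
import tensorflow.compat.v1 as tf
tf.disable_v2_behavior()

# stand-in for scipy.misc.imread (removed in SciPy 1.2); flatten=True -> grayscale
def imread(path, flatten=False):
    img = Image.open(path)
    return np.asarray(img.convert('L') if flatten else img, dtype='float32')

Let’s set a seed value, so that we can control our model’s randomness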

# fix the seed to reduce randomness between runs
seed = 128
rng = np.random.RandomState(seed)

The first step is to set directory paths, for safekeeping!

root_dir = os.path.abspath('../..')
data_dir = os.path.join(root_dir, 'data')
sub_dir = os.path.join(root_dir, 'sub')

# check for existence (should print True for each path)
for path in (root_dir, data_dir, sub_dir):
    print(path, os.path.exists(path))

Now let us read our datasets. These are in .csv format, and contain a filename along with the appropriate label.

train = pd.read_csv(os.path.join(data_dir, 'Train', 'train.csv'))
test = pd.read_csv(os.path.join(data_dir, 'Test.csv'))
sample_submission = pd.read_csv(os.path.join(data_dir, 'Sample_Submission.csv'))

train.head()

|   | filename | label |
|---|---|---|
| 0 | 0.png | 4 |
| 1 | 1.png | 9 |
| 2 | 2.png | 1 |
| 3 | 3.png | 7 |
| 4 | 4.png | 3 |

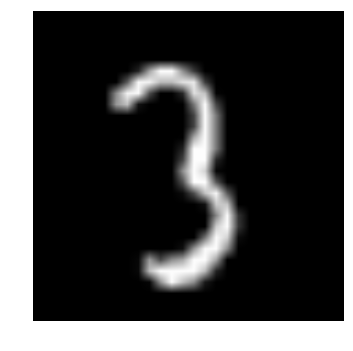

Let us see what our data looks like! We read our image and display it.

img_name = rng.choice(train.filename)
filepath = os.path.join(data_dir, 'Train', 'Images', 'train', img_name)

img = imread(filepath, flatten=True)

pylab.imshow(img, cmap='gray')
pylab.axis('off')
pylab.show()

Output:

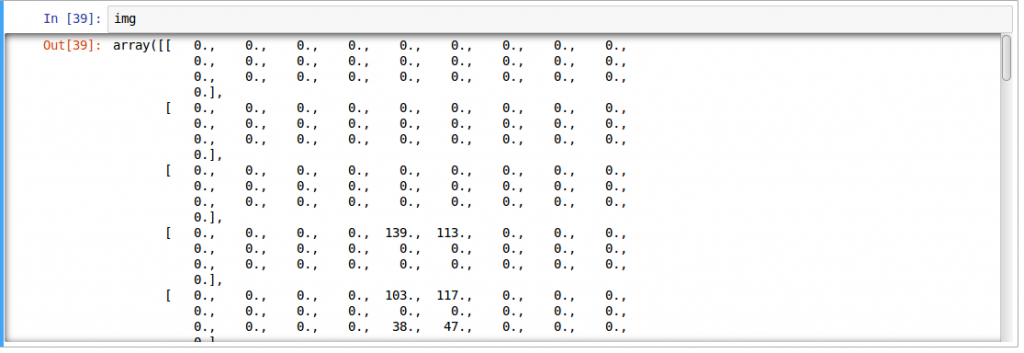

The image above is stored as a 28 x 28 numpy array of pixel intensities.

For easier data manipulation, let’s store all our images as numpy arrays

temp = []
for img_name in train.filename:
    image_path = os.path.join(data_dir, 'Train', 'Images', 'train', img_name)
    img = imread(image_path, flatten=True)
    img = img.astype('float32')
    temp.append(img)

train_x = np.stack(temp)

temp = []
for img_name in test.filename:
    image_path = os.path.join(data_dir, 'Train', 'Images', 'test', img_name)
    img = imread(image_path, flatten=True)
    img = img.astype('float32')
    temp.append(img)

test_x = np.stack(temp)

As this is a typical ML problem, we create a validation set to check that our model works properly. Let’s use a 70:30 split between the training set and the validation set.

split_size = int(train_x.shape[0]*0.7)

train_x, val_x = train_x[:split_size], train_x[split_size:]
train_y, val_y = train.label.values[:split_size], train.label.values[split_size:]

Now we define some helper functions, which we will use later in our program.

def dense_to_one_hot(labels_dense, num_classes=10):
    """Convert class labels from scalars to one-hot vectors"""
    num_labels = labels_dense.shape[0]
    index_offset = np.arange(num_labels) * num_classes
    labels_one_hot = np.zeros((num_labels, num_classes))
    labels_one_hot.flat[index_offset + labels_dense.ravel()] = 1
    return labels_one_hot

def preproc(unclean_batch_x):
    """Convert values to range 0-1"""
    temp_batch = unclean_batch_x / unclean_batch_x.max()
    return temp_batch

def batch_creator(batch_size, dataset_length, dataset_name):
    """Create batch with random samples and return appropriate format"""
    batch_mask = rng.choice(dataset_length, batch_size)

    # look up e.g. train_x by name (globals() instead of eval)
    batch_x = globals()[dataset_name + '_x'][batch_mask].reshape(-1, input_num_units)
    batch_x = preproc(batch_x)

    batch_y = None  # labels are only available for the training set
    if dataset_name == 'train':
        # DataFrame.ix was removed from pandas; plain array indexing does the same job here
        batch_y = train.label.values[batch_mask]
        batch_y = dense_to_one_hot(batch_y)

    return batch_x, batch_y

Now comes the main part! Let us define our neural network architecture. We define a neural network with 3 layers: input, hidden and output. The number of neurons in the input and output layers is fixed, as the input is our 28 x 28 image (784 pixels once flattened) and the output is a 10 x 1 vector representing the class. We take 500 neurons in the hidden layer; this number can vary according to your needs. We also assign values to the remaining variables. Read the article on the fundamentals of neural networks to learn in more depth how it works.
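The layer sizes fix the shapes of every weight matrix. As a sketch for checking those shapes, here is the same 784 → 500 → 10 forward pass in plain numpy with random, untrained weights and fake input; the names demo_rng, demo_x, n_in, n_hidden and n_out are illustrative only and deliberately avoid the tutorial’s own variables.

```python
import numpy as np

demo_rng = np.random.RandomState(128)
n_in, n_hidden, n_out = 28 * 28, 500, 10  # input, hidden and output layer sizes

# four fake "images", already flattened to 784 pixels and scaled to 0-1
demo_x = demo_rng.rand(4, n_in)

# random, untrained weights and biases with the same shapes as in the TensorFlow model
W_hidden = demo_rng.randn(n_in, n_hidden)
b_hidden = demo_rng.randn(n_hidden)
W_output = demo_rng.randn(n_hidden, n_out)
b_output = demo_rng.randn(n_out)

hidden = np.maximum(demo_x @ W_hidden + b_hidden, 0)  # hidden layer with ReLU
logits = hidden @ W_output + b_output                 # one raw score per digit class
predicted = logits.argmax(axis=1)                     # most likely digit per image

n_params = W_hidden.size + b_hidden.size + W_output.size + b_output.size
print(hidden.shape, logits.shape)  # → (4, 500) (4, 10)
print(n_params)                    # → 397510
```

Almost all of the roughly 400,000 trainable numbers sit in the input-to-hidden matrix, which is why the hidden-layer size is the main knob on model size here.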

### set all variables

# number of neurons in each layer
input_num_units = 28*28
hidden_num_units = 500
output_num_units = 10

# define placeholders
x = tf.placeholder(tf.float32, [None, input_num_units])
y = tf.placeholder(tf.float32, [None, output_num_units])

# set remaining variables
epochs = 5
batch_size = 128
learning_rate = 0.01

### define weights and biases of the neural network
weights = {
    'hidden': tf.Variable(tf.random_normal([input_num_units, hidden_num_units], seed=seed)),
    'output': tf.Variable(tf.random_normal([hidden_num_units, output_num_units], seed=seed))
}

biases = {
    'hidden': tf.Variable(tf.random_normal([hidden_num_units], seed=seed)),
    'output': tf.Variable(tf.random_normal([output_num_units], seed=seed))
}

Now let us create our neural network’s computational graph

hidden_layer = tf.add(tf.matmul(x, weights['hidden']), biases['hidden'])
hidden_layer = tf.nn.relu(hidden_layer)

output_layer = tf.matmul(hidden_layer, weights['output']) + biases['output']

Also, we need to define the cost of our neural network:
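Under the hood, this cost combines a softmax, which turns the output layer’s raw scores (logits) into probabilities, with the cross-entropy against the one-hot labels. A numpy sketch of that computation, for illustration only (softmax_cross_entropy is a hypothetical helper, not a TensorFlow function); up to floating-point error it should match the mean of TensorFlow’s per-example softmax cross-entropy.

```python
import numpy as np


def softmax_cross_entropy(logits, labels):
    """Mean cross-entropy between one-hot labels and softmax(logits)."""
    # subtract each row's max before exponentiating so large logits cannot overflow
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # per example, only the log-probability of the true class survives the product
    return -(labels * log_probs).sum(axis=1).mean()


logits = np.array([[2.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0]])
labels = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0]])
print(softmax_cross_entropy(logits, labels))
```

The first example is confidently right and contributes a small loss; the second spreads its scores evenly over three classes and contributes ln 3. In general, equal scores over 10 classes give a cost of ln 10 ≈ 2.30.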

cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits_v2(labels=y, logits=output_layer))

And set the optimizer, i.e. the algorithm that uses the gradients computed by backpropagation to update the weights. Here we use Adam, an efficient variant of the Gradient Descent algorithm. TensorFlow offers a number of other optimizers as well.

optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)
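Adam keeps running averages of the gradient and of its square, and scales each step by their ratio. Below is a minimal pure-Python sketch of the update rule from the Adam paper (Kingma and Ba), applied to a one-dimensional toy function rather than the network. adam_minimize is a hypothetical helper, the learning rate of 0.1 is chosen for the toy problem, and TensorFlow’s AdamOptimizer differs in small details such as where epsilon enters the formula.

```python
import math


def adam_minimize(grad, x0, learning_rate=0.1, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=500):
    """Minimize a 1-D function with the Adam update rule, given its gradient."""
    x, m, v = x0, 0.0, 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for the
        v_hat = v / (1 - beta2 ** t)         # zero-initialized averages
        x -= learning_rate * m_hat / (math.sqrt(v_hat) + eps)
    return x


# f(x) = (x - 3)^2 has its minimum at x = 3; its gradient is 2 * (x - 3)
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_min, 2))
```

Because each step is divided by the root of the squared-gradient average, early steps have roughly the size of the learning rate no matter how steep the function is, which is one reason Adam needs little tuning in practice.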

After defining our neural network architecture, let's initialize all the variables

init = tf.global_variables_initializer()

Now let us create a session and run our neural network in it. We also check our model’s accuracy on the validation set that we created.

with tf.Session() as sess:
    # create initialized variables
    sess.run(init)

    ### for each epoch, do:
    ###   for each batch, do:
    ###     create pre-processed batch
    ###     run optimizer by feeding batch
    ###     find cost and reiterate to minimize

    for epoch in range(epochs):
        avg_cost = 0
        # one epoch = roughly one pass over the training split
        total_batch = int(train_x.shape[0] / batch_size)
        for i in range(total_batch):
            batch_x, batch_y = batch_creator(batch_size, train_x.shape[0], 'train')
            _, c = sess.run([optimizer, cost], feed_dict={x: batch_x, y: batch_y})
            avg_cost += c / total_batch

        print("Epoch:", (epoch + 1), "cost =", "{:.5f}".format(avg_cost))

    print("\nTraining complete!")

    # find predictions on val set; preproc scales inputs to 0-1, as for the training batches
    pred_temp = tf.equal(tf.argmax(output_layer, 1), tf.argmax(y, 1))
    accuracy = tf.reduce_mean(tf.cast(pred_temp, "float"))
    print("Validation Accuracy:", accuracy.eval({x: preproc(val_x.reshape(-1, input_num_units)), y: dense_to_one_hot(val_y)}))

    predict = tf.argmax(output_layer, 1)
    pred = predict.eval({x: preproc(test_x.reshape(-1, input_num_units))})

The output of the above code will look similar to this (exact numbers may vary between runs and library versions):

Epoch: 1 cost = 8.93566
Epoch: 2 cost = 1.82103
Epoch: 3 cost = 0.98648
Epoch: 4 cost = 0.57141
Epoch: 5 cost = 0.44550

Training complete!
Validation Accuracy: 0.952823

To test our model with our own eyes, let’s visualize its predictions

# pick a random test image; its position in test.filename is its row in pred
test_index = rng.randint(test.shape[0])
img_name = test.filename[test_index]
filepath = os.path.join(data_dir, 'Train', 'Images', 'test', img_name)

img = imread(filepath, flatten=True)

print("Prediction is:", pred[test_index])

pylab.imshow(img, cmap='gray')
pylab.axis('off')
pylab.show()

Output:

Prediction is: 8

Our model performs pretty well! Now let’s create a submission.

sample_submission.filename = test.filename
sample_submission.label = pred

os.makedirs(sub_dir, exist_ok=True)  # create the submission folder if it is missing
sample_submission.to_csv(os.path.join(sub_dir, 'sub01.csv'), index=False)

And done! We just created our own trained neural network!

Limitations of TensorFlow Neural Network

- Even though TensorFlow is powerful, it’s still a low-level library; compared with higher-level tools, writing it can feel like writing machine-level code. For most purposes, you want modularity and a high-level interface such as Keras

- It’s still in development, so much more awesomeness to come!

- Its performance depends on your hardware specs: the more powerful, the merrier

- It does not yet have an API for many languages.

- There are still many things yet to be included in TensorFlow, such as OpenCL support.

Most of the above mentioned are in the sights of TensorFlow developers. They have made a roadmap for specifying how the library should be developed in the future.

Why TensorFlow is used in CNN?

There are a few reasons why TensorFlow is a favored option for constructing Convolutional Neural Networks (CNNs):

Flexibility: TensorFlow offers a low-level API that gives you more control when constructing a CNN architecture. This is especially beneficial for researchers designing new CNN architectures, or anyone who needs precise control over their models.

Keras integration: Keras is built into TensorFlow and simplifies constructing and training CNNs through a user-friendly high-level API. It offers ready-made building blocks for typical CNN layers, saving you time and effort when constructing your models.

Open Source: You can freely use and modify TensorFlow, since it is an open-source framework. This has led to its widespread use in both commercial and academic settings. Because it is open source, a sizable developer community actively contributes to improving the library’s quality.
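TensorFlow’s convolution layers (for example tf.keras.layers.Conv2D) apply many filters across many channels at once. As a minimal sketch of what a single filter computes, assuming one channel, stride 1 and no padding, here it is in numpy; conv2d_valid is a hypothetical helper, not a TensorFlow function.

```python
import numpy as np


def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like TensorFlow's convolutions, this computes a cross-correlation:
    the kernel is not flipped.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # weighted sum of the patch currently under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


# a 4 x 4 image with a vertical dark-to-bright edge between columns 1 and 2
image = np.array([[0, 0, 1, 1]] * 4, dtype=float)
# a 2 x 2 kernel that responds to dark-to-bright vertical edges
kernel = np.array([[-1, 1],
                   [-1, 1]], dtype=float)
print(conv2d_valid(image, kernel))
```

Every row of the 3 x 3 output is 0 where the kernel sees a flat region and 2 where it straddles the edge. A CNN learns the kernel values during training instead of having them written by hand.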

TensorFlow vs. Other Libraries

TensorFlow is built on principles similar to Theano and Torch, using mathematical computational graphs. But with additional support for distributed computing, TensorFlow is better suited to solving complex problems. Deployment of TensorFlow models is also already supported, which makes it easier to use for industrial purposes and lets it compete with industry-focused libraries such as Deeplearning4j, H2O and Turi. TensorFlow has APIs for Python and C++, and there has been a recent surge of support for other languages such as Ruby and R. So TensorFlow is aiming for universal language support.

Conclusion

So you saw how to build a simple neural network with TensorFlow. This code is meant for people to understand how to get started implementing TensorFlow, so take it with a pinch of salt. Remember that to solve more complex real life problems, you have to tweak the code a little bit.

Many of the above functions can be abstracted to give a seamless end-to-end workflow. If you have worked with scikit-learn, you might know how a high-level library abstracts “under the hood” implementations to give end users an easier interface. Although TensorFlow already abstracts most of the implementation, higher-level libraries such as TF-slim and TFlearn are emerging.

I hope you enjoyed this article on building a neural network in Python with TensorFlow.

Useful Resources

- TensorFlow official repository

- Rajat Monga (TensorFlow technical lead) “TensorFlow for everyone” video

- A curated list of dedicated resources

Frequently Asked Questions

A. TensorFlow is an open-source library widely used in neural networks. It provides a platform for building and training machine learning models, particularly deep learning models. TensorFlow offers a flexible framework for constructing computational graphs and efficiently performing mathematical operations on multi-dimensional arrays called tensors. It simplifies the development of neural networks by providing a high-level interface and optimization tools for efficient model training and deployment.

A. We use TensorFlow in neural networks because it provides a user-friendly and efficient platform for building and training machine learning models. TensorFlow offers a wide range of tools, libraries, and pre-built functions specifically designed for neural networks. It simplifies the process of implementing complex mathematical operations, optimizing model performance, and deploying models across different devices. TensorFlow helps researchers and developers harness the power of neural networks for various applications, from image recognition to natural language processing.

Hi Faizan, I'm new to deep learning and really appreciate your effort for sharing this article. I've downloaded the mnist dataset you used in this tutorial. There are 4 .gz files only, so I can't understand that how to have the Train.csv, Test.csv and Sample_Submission.csv. Please help advise me more, thanks.

Hi Jerry! Thanks for reading the article. This article is released as a solution to our practice problem "Identify the Digits". So the datasets (Train.csv, Test.csv) belong to that (https://datahack.analyticsvidhya.com/contest/practice-problem-identify-the-digits/). Download the datasets from there. Thanks!

Hi... I am having problem in reading Train and Test CSV files. I am unable to program it properly. I have the files located at E:\AV\TensorFlow\Test.csv and I want the above code to read this path. How do I set the path in the code above. Please help.

Hello Pmitra, There are three main directory paths to specified in the code, * root_dir : This is the main directory in which all your codes and datasets are situated * data_dir : This is where your csv files and images are * sub_dir : This is where the submission you create are stored The structure would look similar to this: root_dir | |-----data_dir |-----| |-----|-----Train |-----|-----| |-----|-----|----- |-----|-----Test.csv |-----sub_dir There are checks provided in the code to check whether you have loaded the correct paths. Having said that, the code provided is for your ease, and you could easily modify it for your purposes. For example, you could set directory paths as: root_dir = "E:\AV\TensorFlow" data_dir = "E:\AV\TensorFlow\data" sub_dir = "E:\AV\TensorFlow\sub" If you have any more problems, feel free to ask!

Hi Fazan, thanks for the really good tutorial. I'm usually work with R and Weka and I am very interested to have a better knowledge of tensorflow. I've had only the need to change two lines in your code to make it works for me: from: index_offset = numpy.arange(num_labels) * num_classes labels_one_hot = numpy.zeros((num_labels, num_classes)) to index_offset = np.arange(num_labels) * num_classes labels_one_hot = np.zeros((num_labels, num_classes)) Thanks again Mino

Updated the code. Thanks for notifying!

Train csv file zip is giving error . kindly check the file plz. Error msg "error occured while loading the zip" https://datahack.analyticsvidhya.com/contest/practice-problem-identify-the-digits/media/train_file/Train_HI6auGp.zip

Hi sahu, the download works fine for me. Could you redownload and try again?

GUI interface of ubuntu was unable to extract the file so i use CLI and solved it. Method 1 But my problem did not end here. %pylab inline is causing error and its showing unresolved reference in my case. I have created tensor flow virtual environment for running this code but its not resolving. method 2 I tried to run the code with ipython and all the dependencies installed but here error is occurring at while checking directories. Please guide me regarding it.

The code is designed to be run on an ipython notebook. Running magic functions (for example %pylab inline) would not work on CLI. For issues with checking directories, refer the comments above.

Hi Faizan, It was great article. Got a so much help and I am new in deep learning. I have a problem with "preproc" method. Why do we need this method and why the value of seed and batch_size is 128. It will be great help for getting an answer. Thanks.

Hey Sumit, I'm glad you like it. The "preproc" method in simple words, is a data preprocessing step in which we do standardization (explained in detail here https://www.analyticsvidhya.com/blog/2016/07/practical-guide-data-preprocessing-python-scikit-learn/) If you preprocess the data before sending it to the network, it helps in training (i.e. neural network converges faster) For batch_size and seed value, they can be set as per your choice. In fact, I would suggest you to try changing the values to see what happens. Let me know if you need more help

predict = tf.argmax(output_layer, 1) pred = predict.eval({x: test.reshape(-1, 784)}) " Cannot evaluate tensor using `eval()`: No default session is registered. Use `with sess.as_default()` or pass an explicit session to `eval(session=sess)`" why this error is showing?

Have you kept the code in the same level of indentation? with tf.Session() as sess: (indent) ... (indent) ... (indent) ... (indent) predict = tf.argmax(output_layer, 1) (indent) pred = predict.eval({x: test_x.reshape(-1, 784)})

Hi Faizan, Nice article! A stupid question perhaps: I see that you save the prediction results in submission.csv. Is there a way to save the trained network itself (as a config perhaps) so I can use it for subsequent runs without having to retrain the network ? Thanks!

Thanks Anand. Actually that's a good question. The answer is Yes, you can save all the individual weights and biases of a neural network in Tensorflow. There's a function included called train.Saver() which does exactly this for you. Refer here (https://www.tensorflow.org/api_docs/python/state_ops/saving_and_restoring_variables)

Dear SIr, Thanks For your guide, I have tried to modify the code by myself in order to input a matrix that have a different size, And it turns out that this part of the code is not functioning: print "Validation Accuracy:", accuracy.eval({x: val_x.reshape(-1, 841), y:dense_to_one_hot(val_y)}) with the error message ValueError: Cannot feed value of shape (60, 841) for Tensor u'Placeholder_1:0', which has shape '(?, 200)' At the moment, I feel that I don't understand how this accuracy.eval works, Can you please explain more about it? Thank You.

Hey! It's great that you've tried to modify the code to meet your needs. Could you specify which parts you changed? There might be something you've left that's causing the problem. (PS: posting your code here http://nbviewer.jupyter.org/ would be a good choice.) Anyways, I would describe the "eval" method as similar to the "run" method, viz. it evaluates a computational graph by passing values through it. Here accuracy.eval passes our input feed val_x and val_y through the "accuracy" graph (specified as >>> accuracy = tf.reduce_mean(tf.cast(pred_temp, "float")) ). In fact, tensor.eval(feed_dict) is shorthand for sess.run(tensor, feed_dict) on the default session, so both evaluate immediately; run can also fetch several tensors in one call. Hope it helps. If there's anything you would like to clarify, feel free to comment here

hi Faizan, really good guide. but i try to execute the code with some changes: i.e: resize the images to 28*28 : temp = [] with open('train.csv') as trainFile: readCSV = csv.reader(trainFile, delimiter=',') for row in readCSV: if (row[0] != 'filename' and row[1] != 'label' ): image_path = os.path.join(data_dir + '/' + row[1], row[0]) img = imread(image_path, flatten=True) img.resize(size, refcheck=False) img = img.astype('float32') temp.append(img) train_x = np.stack(temp) and changed the num of classes to be 50 (as the letters and the signs i have to recognize). i changed also this: input_num_units = 28*28 hidden_num_units = 500 output_num_units = 50 epochs = 5 batch_size = 60 learning_rate = 0.01 and every time i run the code the accuracy is 0. could you help me with that ? :) do you have an idea why? and another qouestion is what is the meaning of seed ? thank you in advance

Hi Igor, Make sure are vectorizing your output (aka train_y). In the article, the function "dense_to_one_hot" does this for you

Hi Faizan, I applied your code to my dataset. My training set has 112 images and 8 labels, and each image is 128*128. I only train a single layer (not the multilayer network from your code above). My problem is shown below:

    Epoch: 1 cost = 0.00000
    Epoch: 2 cost = 0.00000
    Epoch: 3 cost = 0.00000
    Epoch: 4 cost = 0.00000
    Epoch: 5 cost = 0.00000

My code:

    from collections import Counter
    import os
    import numpy as np
    import pandas as pd
    from scipy.misc import imread
    import tensorflow as tf
    import cv2

    seed = 128
    rng = np.random.RandomState(seed)

    root_dir = "/home/trantrunghieu/lv"
    train = pd.read_csv(os.path.join(root_dir, "train", 'train.csv'))
    test = pd.read_csv(os.path.join(root_dir, "test", 'test.csv'))
    # sample_submission = pd.read_csv(os.path.join(data_dir, 'Sample_Submission.csv'))
    train.head()

    temp = []
    for img_name in train.filename:
        image_path = os.path.join(root_dir, 'train', img_name)
        img = imread(image_path)
        img = img.astype('float32')
        img = tf.reshape(img, [-1])
        temp.append(img)
    train_x = np.stack(temp)

    for img_name in test.filename:
        image_path = os.path.join(root_dir, 'test', img_name)
        img = imread(image_path)
        img = img.astype('float32')
        img = tf.reshape(img, [-1])
        temp.append(img)
    test_x = np.stack(temp)

    # take a split size of 70:30 for train set vs validation set
    split_size = int(train_x.shape[0]*0.7)
    train_y = train.label.values[0:]
    # train_x, val_x = train_x[:split_size], train_x[split_size:]
    # train_y, val_y = train.label.values[:split_size], train.label.values[split_size:]
    print(train_y)
    print (Counter(train_y))

    # Define some function
    def dense_to_one_hot(labels_dense, num_classes=8):
        """Convert class labels from scalars to one-hot vectors"""
        num_labels = labels_dense.shape[0]
        index_offset = np.arange(num_labels) * num_classes
        labels_one_hot = np.zeros((num_labels, num_classes))
        labels_one_hot.flat[index_offset + labels_dense.ravel()] = 1
        return labels_one_hot

    def preproc(unclean_batch_x):
        """Convert values to range 0-1"""
        temp_batch = unclean_batch_x / unclean_batch_x.max()
        return temp_batch

    def batch_creator(batch_size, dataset_length, dataset_name):
        """Create batch with random samples and return appropriate format"""
        batch_mask = rng.choice(dataset_length, batch_size)
        batch_x = eval(dataset_name + '_x')[[batch_mask]].reshape(-1, input_num_units)
        batch_x = preproc(batch_x)
        if dataset_name == 'train':
            batch_y = eval(dataset_name).ix[batch_mask, 'label'].values
            batch_y = dense_to_one_hot(batch_y)
        return batch_x, batch_y

    input_num_units = 128*128
    output_num_units = 8

    # define placeholders
    # placeholder of image
    x = tf.placeholder(tf.float32, [None, input_num_units])
    # placeholder of label
    y = tf.placeholder(tf.float32, [None, output_num_units])

    # set remaining variables
    epochs = 5
    batch_size = 128
    learning_rate = 0.01

    # Create the model
    # Weight
    W = tf.Variable(tf.zeros([input_num_units, output_num_units]))
    # bias
    b = tf.Variable(tf.zeros([output_num_units]))
    output_layer = tf.matmul(x, W) + b

    cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(output_layer, y))
    optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)
    init = tf.global_variables_initializer()

    with tf.Session() as sess:
        # create initialized variables
        sess.run(init)
        for epoch in range(epochs):
            avg_cost = 0
            total_batch = int(train.shape[0]/batch_size)
            for i in range(total_batch):
                batch_x, batch_y = batch_creator(batch_size, train_x.shape[0], 'train')
                _, c = sess.run([optimizer, cost], feed_dict = {x: batch_x, y: batch_y})
                avg_cost += c / total_batch
            # if epoch % 200 ==0:
            print "Epoch:", (epoch+1), "cost =", "{:.5f}".format(avg_cost)
        print "\nTraining complete!"

Could you help me with this problem? Also, how is the batch_size value chosen? Does it depend on anything? Thank you in advance.

I fixed the above problem. The cause was that my dataset length (112) is less than batch_size (128), so total_batch is always 0. But when I change batch_size to a number smaller than the dataset length (such as 8), a new error appears:

    Traceback (most recent call last):
      File "draw_shape.py", line 124, in <module>
        batch_x, batch_y = batch_creator(batch_size, train_x.shape[0], 'train')
      File "draw_shape.py", line 71, in batch_creator
        batch_x = eval(dataset_name + '_x')[[batch_mask]].reshape(-1, input_num_units)
    ValueError: total size of new array must be unchanged

Please help me fix this problem.

Hey, can you print the shape of the batch_mask variable and check that it's not zero?
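Two things in the code above are easy to check with plain NumPy. First, `int(train.shape[0] / batch_size)` is 0 whenever batch_size exceeds the dataset length, so the inner loop never runs and the average cost stays 0.00000. Second, `tf.reshape` returns a TensorFlow tensor rather than a NumPy array. Flattening with NumPy's own reshape keeps train_x a plain numeric array whose shape you can inspect. It is also worth confirming that each image has 128*128 values and not, say, 3*128*128 for RGB. Here is a minimal sketch under those assumptions. The helper names `num_batches` and `flatten_images` are hypothetical, and the images are assumed to be equally sized arrays:

```python
import math
import numpy as np

def num_batches(dataset_length, batch_size):
    """Number of mini-batches per epoch; at least 1 so training always runs."""
    return max(1, math.ceil(dataset_length / batch_size))

def flatten_images(images, expected_pixels):
    """Stack equally sized images into a 2-D float32 array of shape (n, pixels)."""
    flat = np.stack([np.asarray(img, dtype=np.float32).reshape(-1) for img in images])
    if flat.shape[1] != expected_pixels:
        # e.g. a 128x128 RGB image holds 3 * 128 * 128 values, not 128 * 128
        raise ValueError(f"each image has {flat.shape[1]} values, expected {expected_pixels}")
    return flat

print(int(112 / 128))         # 0: the loop in the question never runs
print(num_batches(112, 128))  # 1
images = [np.zeros((128, 128)) for _ in range(112)]
print(flatten_images(images, 128 * 128).shape)  # (112, 16384)
```

The batch size itself is a tuning choice. Common values are powers of two such as 32, 64 or 128, but it must not exceed the number of training samples if you compute the batch count with `int(...)`.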

I use TensorFlow for review-and-rating prediction and get only 60% accuracy. My data has 5,000 samples, and I have varied the hidden layer size from 100 to 1,000 and the number of iterations from 10,000 to 100,000. How can I improve the accuracy with this data?

You can try using a better architecture than MLP, for example, you can use RNN if the review data is textual sentences. I have discussed some of these tweaks in this article: https://www.analyticsvidhya.com/blog/2016/10/tutorial-optimizing-neural-networks-using-keras-with-image-recognition-case-study/

1. Why don't biases have their own weights?
2. In

    hidden_layer = tf.add(tf.matmul(x, weights['hidden']), biases['hidden'])
    output_layer = tf.matmul(hidden_layer, weights['output']) + biases['output']

is there any difference between tf.add and +?

Thanks

1. The biases have been defined separately from the weights; you can refer to the code.
2. When you use the "+" operator on tensors, TensorFlow converts it to a "tf.add" operation, so for all practical purposes the two are the same.
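A bias does not need a weight of its own because it already acts as a weight attached to an input that is always 1. The small NumPy sketch below uses random shapes chosen only for illustration. It shows that the bias-add form used in the article and the "folded-in" form produce the same numbers. The same reasoning applies whether the addition is written with tf.add or +:

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))        # 4 samples, 3 input features
W = rng.normal(size=(3, 5))        # weights: 3 inputs -> 5 hidden units
b = rng.normal(size=5)             # one bias per hidden unit

# Bias added explicitly; NumPy broadcasts b across all 4 rows.
hidden = x @ W + b

# Same result with the bias folded in as an extra weight row on a constant input of 1.
x_aug = np.hstack([x, np.ones((4, 1))])
W_aug = np.vstack([W, b])
hidden_aug = x_aug @ W_aug

print(np.allclose(hidden, hidden_aug))  # True
```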

A.A! Thank you for this helpful material. I was working on this project and got the following error:

    InvalidArgumentError: logits and labels must be same size: logits_size=[512,10] labels_size=[128,10]
         [[Node: SoftmaxCrossEntropyWithLogits_2 = SoftmaxCrossEntropyWithLogits[T=DT_FLOAT, _device="/job:localhost/replica:0/task:0/cpu:0"](Reshape_6, Reshape_7)]]

    During handling of the above exception, another exception occurred:

    InvalidArgumentError                      Traceback (most recent call last)
    in ()
         14     for i in range(total_batch):
         15         batch_x, batch_y = batch_creator(batch_size, train_x.shape[0], 'train')
    ---> 16         _, c = sess.run([optimizer, cost], feed_dict = {x: batch_x, y: batch_y})
         17 
         18         avg_cost += c / total_batch

I have tried my best (applying various solutions found on Google), but the error remains. I will be grateful if you can help me solve it.

Check if the length of input (batch_x) you are passing is the same as length of output (batch_y)
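One common way to get logits_size=[512,10] against labels_size=[128,10] is `reshape(-1, input_num_units)` applied to images that carry extra channels. 512 is exactly 4 x 128, which is what 28x28 RGBA images produce when they are reshaped into rows of 784 values. This is an assumption about the asker's data, not a confirmed diagnosis. The NumPy sketch below reproduces the effect and shows one fix: reduce to a single channel first (here a simple average of R, G and B), then reshape by the batch size instead of by -1:

```python
import numpy as np

batch_size, input_num_units = 128, 28 * 28
# Hypothetical batch of 128 RGBA images (4 channels) instead of grayscale.
rgba_batch = np.zeros((batch_size, 28, 28, 4), dtype=np.float32)

wrong = rgba_batch.reshape(-1, input_num_units)
print(wrong.shape)   # (512, 784): four rows per image, so logits and labels disagree

# Fix: collapse colour channels to grayscale, then keep one row per image.
gray = rgba_batch[..., :3].mean(axis=-1)
right = gray.reshape(batch_size, -1)
print(right.shape)   # (128, 784)
```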

Hi Faizan, I am currently working on an image dataset. For example, the training dataset has multiple subfolders such as Automobiles, Flowers and Bikes, each containing 100 images of different sizes, and the labels are given by the subfolder names. How do I read the images from each folder in Python and build a single training set? From what I have read online, all images need to be resized to the same size before they can be fed into TensorFlow. I am using a Windows machine, so I am not able to use OpenCV3 either. Please help me out.

Hi Deepak. You can use Keras for this purpose, as it reads the subfolders directly and assigns a class to each one.
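In Keras this is ImageDataGenerator.flow_from_directory, or tf.keras.utils.image_dataset_from_directory in newer TensorFlow releases. Both also resize images to a common target size. If you would rather see the idea without Keras, here is a standard-library sketch of the labelling step only: every subfolder becomes a class, and each image path gets that class's index. The function name and extension list are hypothetical, and loading and resizing each file would still need an image library such as Pillow:

```python
import os
import tempfile

def list_images_by_folder(root_dir, extensions=(".jpg", ".jpeg", ".png")):
    """Return (paths, labels, class_names), using each subfolder name as a class."""
    class_names = sorted(
        d for d in os.listdir(root_dir) if os.path.isdir(os.path.join(root_dir, d))
    )
    paths, labels = [], []
    for label, class_name in enumerate(class_names):
        class_dir = os.path.join(root_dir, class_name)
        for file_name in sorted(os.listdir(class_dir)):
            if file_name.lower().endswith(extensions):
                paths.append(os.path.join(class_dir, file_name))
                labels.append(label)
    return paths, labels, class_names

# Small self-check on a throwaway folder tree standing in for Train/Automobiles, ...
with tempfile.TemporaryDirectory() as demo_root:
    for class_name, file_name in [("Automobiles", "car1.jpg"), ("Bikes", "bike1.png"), ("Flowers", "rose.jpg")]:
        os.makedirs(os.path.join(demo_root, class_name))
        open(os.path.join(demo_root, class_name, file_name), "w").close()
    demo_paths, demo_labels, demo_classes = list_images_by_folder(demo_root)
print(demo_classes, demo_labels)  # ['Automobiles', 'Bikes', 'Flowers'] [0, 1, 2]
```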

Hi Faizan, great post. A quick question: I was reading about neural networks in various materials and got a bit confused. Why are we not using a ReLU activation in the output layer? I tried implementing it, and my cost stopped decreasing after a point, which led to poor accuracy (so clearly it is incorrect). Can you sum up or point me in a direction where I can better understand this?

In the output layer, your aim is to predict classes (if it's a classification problem) or continuous values (if it's a regression problem), so you should use an appropriate activation function. In a classification problem you generally use a sigmoid or softmax function, whereas in regression you use a linear function.
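Here is a quick NumPy comparison, using made-up logits, of why softmax suits a classification output better than ReLU. Softmax turns any row of scores into positive values that sum to 1. ReLU clips negative scores to 0 and is unbounded above, so its outputs are not probabilities. An output unit that stays below zero also passes no gradient back, which may explain a cost that stops decreasing:

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; subtracting the row max keeps exp() from overflowing."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def relu(z):
    return np.maximum(z, 0.0)

logits = np.array([[2.0, 1.0, -1.0],
                   [-3.0, -2.0, -4.0]])
print(softmax(logits).sum(axis=1))  # each row sums to 1 (up to rounding)
print(relu(logits))                 # second row is all zeros: no class preference, no gradient
```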

Hello Faizan, can you please explain clearly what the batch_creator function does? In batch_y = eval(dataset_name).ix[batch_mask, 'label'].values, what is .ix and what is 'label'? I am trying to use this code to train on my own dataset, so it would be useful for me to understand this function. Thank you.

Hi Atana, .ix is a pandas DataFrame indexer (http://pandas.pydata.org/pandas-docs/version/0.19.2/generated/pandas.DataFrame.ix.html), and 'label' is the label column, that is, the target variable. Here I am simply extracting the targets that correspond to the samples in the batch.
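.ix was deprecated in pandas 0.20 and removed in pandas 1.0, so on a current install the same lookup needs .iloc (by position) or .loc (by label). Here is a minimal sketch with a made-up four-row DataFrame standing in for train.csv, which has filename and label columns as in the article's data:

```python
import numpy as np
import pandas as pd

train = pd.DataFrame({"filename": ["0.png", "1.png", "2.png", "3.png"],
                      "label": [4, 9, 1, 7]})
batch_mask = np.array([2, 0, 3])  # positions, like those drawn by rng.choice in batch_creator

# .iloc selects by position, which is what batch_mask holds.
label_col = train.columns.get_loc("label")
batch_y = train.iloc[batch_mask, label_col].values
print(batch_y)  # [1 4 7]

# Equivalent shortcut: take the column as a NumPy array, then index it.
print(train["label"].values[batch_mask])  # [1 4 7]
```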

Faizan, can you explain what this part of the code does? I encountered the error "logits and labels must be same size: logits_size=[118,3] labels_size=[128,3]". I tracked down where my tensor size was going wrong, and it was the code below, which didn't produce the expected batch size for the specified inputs:

    batch_x = eval(dataset_name + '_x')[[batch_mask]].reshape(-1, (input_num_units))

Some debug variable sizes:

    batch_mask: 128
    dataset_name: train
    input_num_units: 9216
    batch_x: (118, 9216)
    unclean_batch_x: (118, 9216)
    unclean_batch_x max: 160.0
    batch_y: (128, 3)

The number of elements in batch_x and batch_y should match. In your problem, one is 128 and the other 118. Both should be the same

That was a very informative post, as I am just getting started with TF. I would really appreciate it if you could explain this line of code: filepath = os.path.join(data_dir, 'Train', 'Images', 'train', img_name)

Hi Sayak, Here I am defining the file path. So instead of directly setting it as "E:\workspace\Train\Images"... , I am writing a more generalized code by using python's "os" library
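os.path.join glues the pieces together with the path separator of the operating system the code is running on. That is why the same line works on both Windows and Linux. The standard-library modules ntpath and posixpath expose each OS's rules explicitly, so this short sketch prints both forms. The data_dir and img_name values are hypothetical:

```python
import os
import ntpath
import posixpath

data_dir = "workspace"  # hypothetical project folder
img_name = "0.png"

# os.path.join uses the separator of the OS the code runs on.
filepath = os.path.join(data_dir, 'Train', 'Images', 'train', img_name)
print(filepath)

# The platform-specific modules show what each OS would produce.
print(ntpath.join(data_dir, 'Train', 'Images', 'train', img_name))     # workspace\Train\Images\train\0.png
print(posixpath.join(data_dir, 'Train', 'Images', 'train', img_name))  # workspace/Train/Images/train/0.png
```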

I get the following error after running the code that defines the cost:

    ---------------------------------------------------------------------------
    ValueError                                Traceback (most recent call last)
    in ()
    ----> 1 cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(output_layer, y))
          2 optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)
          3 
          4 init = tf.initialize_all_variables()

    /home/sayak/anaconda3/envs/py27/lib/python2.7/site-packages/tensorflow/python/ops/nn_ops.pyc in softmax_cross_entropy_with_logits(_sentinel, labels, logits, dim, name)
       1605   """
       1606   _ensure_xent_args("softmax_cross_entropy_with_logits", _sentinel,
    -> 1607                     labels, logits)
       1608 
       1609   # TODO(pcmurray) Raise an error when the labels do not sum to 1. Note: This

    /home/sayak/anaconda3/envs/py27/lib/python2.7/site-packages/tensorflow/python/ops/nn_ops.pyc in _ensure_xent_args(name, sentinel, labels, logits)
       1560   if sentinel is not None:
       1561     raise ValueError("Only call `%s` with "
    -> 1562                      "named arguments (labels=..., logits=..., ...)" % name)
       1563   if labels is None or logits is None:
       1564     raise ValueError("Both labels and logits must be provided.")

    ValueError: Only call `softmax_cross_entropy_with_logits` with named arguments (labels=..., logits=..., ...)

Hi, write this code line instead: cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=output_layer, labels=y))
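The error comes from TensorFlow requiring labels= and logits= to be passed by name; as the traceback shows, its first positional parameter is a sentinel. For reference, the value the op computes can be sketched in NumPy. This illustrates the formula only and is not TensorFlow's implementation. The keyword-only signature just mimics the named-argument rule:

```python
import numpy as np

def softmax_cross_entropy_with_logits(*, labels, logits):
    """Per-row cross-entropy between one-hot labels and softmax(logits).

    The bare * makes labels and logits keyword-only, mirroring how TensorFlow
    insists on labels=... and logits=...
    """
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_softmax = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return -(labels * log_softmax).sum(axis=1)

logits = np.array([[2.0, 0.0, 0.0]])
labels = np.array([[1.0, 0.0, 0.0]])
cost = softmax_cross_entropy_with_logits(logits=logits, labels=labels).mean()
print(cost)
```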

Hi, this blog is very useful for me. Which type of neural network is it, a CNN or an RNN? Regards, Kishore

Hi, it's a simple neural network: a multilayer perceptron (MLP).

Hi Faizan, Thank you for the article. It is really practical. My problem is I can't download the data from the practice problem page. I clicked Data on the left, but it does nothing. Thank you

Problem fixed. Thank you.

Hi Faizan, I recently started learning about deep learning, neural networks, and possible ways to accelerate the computation on GPUs. I went through lots of IEEE papers and then came across this blog. I must say that this is the only place where I (as a beginner) found all the required information presented very cleanly, from the very start through to character prediction. Great work!!

Thanks Vijay

Can anyone tell me why this code is not producing the proper output?

    import tensorflow as tf

    x = tf.placeholder(tf.float32, shape=[None, 1])
    y_ = tf.placeholder(tf.float32, shape=[None, 1])
    W = tf.Variable(tf.zeros([1, 1]))
    b = tf.Variable(tf.zeros([1]))
    y = tf.matmul(x, W) + b
    init = tf.global_variables_initializer()
    cross_entropy = tf.nn.softmax_cross_entropy_with_logits(labels=y_, logits=y)
    train_step = tf.train.GradientDescentOptimizer(0.6).minimize(cross_entropy)

    sess = tf.InteractiveSession()
    sess.run(init)
    for e in range(100):
        sess.run([train_step, cross_entropy], feed_dict={x: [[1], [2]], y_: [[1], [2]]})
    print(sess.run([W, b]))

    correct_prediction = tf.equal(tf.arg_max(y, 1), tf.arg_max(y_, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    print(accuracy.eval(feed_dict={x: [[2]], y_: [[6]]}))
    sess.close()

Hi, assuming there are no syntax or indentation errors, what error does the code show?
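One likely reason the code above learns nothing: softmax over a single output column always returns 1. With one logit per row, softmax_cross_entropy_with_logits is therefore 0 whatever W and b are, and there is no gradient to follow. For the same reason, argmax over one column is always 0, so the "accuracy" is always 1. Predicting a number from a number is regression, where a linear output with a squared-error loss is the usual choice. The NumPy sketch below shows both points using the question's inputs and targets. The learning rate of 0.1 and the 2,000 steps are my own choices:

```python
import numpy as np

x = np.array([[1.0], [2.0]])
y_true = np.array([[1.0], [2.0]])

# Softmax across a single column: exp(z) / exp(z) == 1 for any logit z.
z = np.array([[-3.0], [5.0]])
print(np.exp(z) / np.exp(z).sum(axis=1, keepdims=True))  # every entry is exactly 1.0

# Linear regression y = x W + b trained with mean squared error.
W = np.zeros((1, 1))
b = np.zeros(1)
learning_rate = 0.1
for step in range(2000):
    y_pred = x @ W + b
    error = y_pred - y_true
    # Gradients of mean((y_pred - y_true)^2) with respect to W and b.
    grad_W = 2 * x.T @ error / len(x)
    grad_b = 2 * error.mean(axis=0)
    W -= learning_rate * grad_W
    b -= learning_rate * grad_b
print(W.ravel(), b)  # close to [1.] and [0.]
```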

I am facing this problem, and I don't know why other people did not face it:

    temp = []
    for img_name in test.filename:
        image_path = os.path.join(data_dir, 'Train', 'Images', 'test', img_name)
        img = imread(image_path, flatten=True)
        img = img.astype('float32')
        temp.append(img)
    test_x = np.stack(temp)

Error output:

    AttributeError                            Traceback (most recent call last)
    in ()
          9 
         10 temp = []
    ---> 11 for img_name in test.filename:
         12     image_path = os.path.join(data_dir, 'Train', 'Images', 'test', img_name)
         13     img = imread(image_path, flatten=True)

    ~\Anaconda3\envs\tensorflow\lib\site-packages\pandas\core\generic.py in __getattr__(self, name)
       3079             if name in self._info_axis:
       3080                 return self[name]
    -> 3081             return object.__getattribute__(self, name)
       3082 
       3083     def __setattr__(self, name, value):

    AttributeError: 'DataFrame' object has no attribute 'filename'

I checked the test.csv file and there is no filename field. I may have the wrong test.csv file; can you please point me to the correct file to download? Thanks

Hi Sohail, You can find the dataset here: https://datahack.analyticsvidhya.com/contest/practice-problem-identify-the-digits/

Hello sir, I am rewriting this code to train on another dataset of 20,000 images. However, the image size varies from image to image, so I am unable to create the training and test sets. Can you please suggest a solution?

Hey - you can force all the images to be of the same size using scipy's misc package
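scipy.misc.imresize is no longer available in current SciPy releases; it was removed in version 1.3. One dependency-light alternative is a nearest-neighbour resize in plain NumPy. It is cruder than the interpolation an image library such as Pillow offers, and the function name below is hypothetical. Resizing every image to one fixed size is what makes `np.stack` possible afterwards:

```python
import numpy as np

def resize_nearest(image, out_h, out_w):
    """Resize a 2-D (or H x W x C) image to out_h x out_w by nearest-neighbour sampling."""
    in_h, in_w = image.shape[:2]
    # For each output row/column, pick the source row/column it falls into.
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return image[rows[:, None], cols]

images = [np.ones((50, 80)), np.ones((300, 200)), np.ones((28, 28))]
resized = np.stack([resize_nearest(img, 64, 64) for img in images])
print(resized.shape)  # (3, 64, 64)
```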

Hey, I get the following error while trying to run the session and train the network: InvalidArgumentError: You must feed a value for placeholder tensor 'Placeholder_4' with dtype float and shape [4096,375]. Right now I feed a numpy.ndarray of the appropriate size for the network. Do I also need to take care of the datatype of the array fed to the placeholder?

Dear Faizan, I read your post and it is very good. I am new to neural networks and TensorFlow, and what I am trying to do with TF is a little different. I want to build a feedforward neural network. I have labeled data with 130,000 samples and 90 features (all floats or integers), and I want to use them to predict 7 values as accurately as possible. I ran a PCA and found which features are good for which prediction, but from here on I am confused about how to load my CSV file into TF and work with it. I have one file that is not split into train and test sets, but I am planning to use cross-validation with 90% train and 10% test. Can you give me hints on what to do and where to look for a reference? Thank you very much!
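Before any TensorFlow code, the CSV only needs to become two NumPy arrays (features and targets) plus a held-out split, which can then be fed exactly like train_x and train_y in the article. Here is a minimal sketch. It assumes the 7 target values are the last 7 columns and the file has one header row; the file name and helper are hypothetical. With continuous targets, the output layer would have 7 linear units, as described above for regression:

```python
import numpy as np

def split_features_targets(data, num_targets, test_fraction=0.1, seed=0):
    """Shuffle rows, split the last num_targets columns off as targets, and hold out a test set."""
    rng = np.random.default_rng(seed)
    shuffled = data[rng.permutation(len(data))]
    features, targets = shuffled[:, :-num_targets], shuffled[:, -num_targets:]
    n_test = int(len(data) * test_fraction)
    return (features[n_test:], targets[n_test:]), (features[:n_test], targets[:n_test])

# Stand-in for np.loadtxt("data.csv", delimiter=",", skiprows=1): 1000 rows, 90 features + 7 targets.
data = np.random.default_rng(1).normal(size=(1000, 97))
(train_x, train_y), (test_x, test_y) = split_features_targets(data, num_targets=7)
print(train_x.shape, train_y.shape, test_x.shape, test_y.shape)
# (900, 90) (900, 7) (100, 90) (100, 7)
```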

Hi! The datasets (Train.csv, Test.csv) for this practice problem (https://datahack.analyticsvidhya.com/contest/practice-problem-identify-the-digits/) cannot be downloaded. How can I get them? Thanks!

Hi, you would have to register for the hackathon to access the dataset.

Hi! I want to create my own dataset from images I have collected, to identify potholes on the road. How should I do this, and how do I feed it into the code? Could you please help me?

Hello! This article is just great! I'm trying to train a neural network to interpret facial emotions. I got around 95% accuracy, but now I need to use this network in my real project. How can I export the trained network so I can use it in other Python code? I can't wait for it to train every time I want to use the software, and I know it's possible because I've done it in Matlab.
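TensorFlow has its own mechanisms for this: tf.train.Saver in the 1.x API used by the article, or model.save for a Keras model. The underlying idea is simply to persist the trained parameters and reload them instead of retraining. Here is a framework-neutral sketch of that idea using NumPy's savez and load. The file name and weight dictionary are hypothetical, and the reloaded arrays still have to be plugged into the same forward-pass code to make predictions:

```python
import os
import tempfile
import numpy as np

rng = np.random.default_rng(0)
# Stand-ins for trained parameters, e.g. fetched with sess.run(weights) after training.
weights = {"hidden_W": rng.normal(size=(784, 500)), "hidden_b": np.zeros(500),
           "output_W": rng.normal(size=(500, 10)), "output_b": np.zeros(10)}

path = os.path.join(tempfile.mkdtemp(), "emotion_model.npz")
np.savez(path, **weights)          # one .npz archive holding every array

with np.load(path) as archive:     # later, in another script: no retraining needed
    restored = {name: archive[name] for name in archive.files}

print(all(np.array_equal(weights[k], restored[k]) for k in weights))  # True
```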

Thank you. Please post the same for text processing too: training and using a seq2seq text-matching model with TensorFlow in Python. An example corpus is:

    {"intents": [
        {"tag": "greeting",
         "patterns": ["Hi", "what's up"],
         "responses": ["Hello", "Can i"],
         "context_set": ""
        },
        {"tag": "goodbye",
         "patterns": ["Bye", "See you later"],
         "responses": ["See you later, thanks for visiting"]
        }
    ]}

In this corpus, "patterns" is the input data for training the model, and I expect "tag" as the output.
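Since the desired output is a tag rather than a generated sentence, this is really text classification. One starting point is to turn the corpus into (pattern, tag) pairs and then into fixed-length vectors that an MLP like the article's could consume. The sketch below uses a simple bag-of-words encoding. That encoding is my own swapped-in choice, not something from the article, and the variable names are hypothetical:

```python
import json

corpus = json.loads("""
{"intents": [
  {"tag": "greeting", "patterns": ["Hi", "what's up"],
   "responses": ["Hello", "Can i"], "context_set": ""},
  {"tag": "goodbye", "patterns": ["Bye", "See you later"],
   "responses": ["See you later, thanks for visiting"]}
]}
""")

# One (input text, tag) training pair per pattern.
pairs = [(pattern, intent["tag"])
         for intent in corpus["intents"]
         for pattern in intent["patterns"]]
tags = sorted({tag for _, tag in pairs})
tag_to_index = {tag: i for i, tag in enumerate(tags)}

# Bag-of-words features over a tiny lowercase vocabulary.
vocab = sorted({word for text, _ in pairs for word in text.lower().split()})

def bag_of_words(text):
    words = set(text.lower().split())
    return [1 if w in words else 0 for w in vocab]

train_x = [bag_of_words(text) for text, _ in pairs]
train_y = [tag_to_index[tag] for _, tag in pairs]
print(pairs)
print(train_y)  # [1, 1, 0, 0]
```

train_y can then be one-hot encoded and fed to a network exactly like the digit labels in the article.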