Modern Data Engineering with Mage: Empowering Efficient Data Processing

Introduction

In today’s data-driven world, organizations across industries are dealing with massive volumes of data, complex pipelines, and the need for efficient data processing. Traditional data engineering tools such as Apache Airflow have played an important role in orchestrating and managing data workflows to tackle these challenges. However, as technology evolves rapidly, a new contender, Mage, has emerged that aims to reshape the landscape of data engineering.

Learning Objectives

- To integrate and synchronize third-party data seamlessly

- To build real-time and batch pipelines in Python, SQL, and R for transformation

- To write modular code that is reusable and testable, with data validations

- To run, monitor, and orchestrate multiple pipelines unattended, even while you are sleeping

- To collaborate in the cloud, use version control with Git, and test pipelines without waiting for a shared staging environment to become available

- To deploy quickly to cloud providers like AWS, GCP, and Azure via Terraform templates

- To transform very large datasets directly in your data warehouse or through native integration with Spark

- To operate pipelines with built-in monitoring, alerting, and observability through an intuitive UI

Sounds as easy as falling off a log, doesn’t it? Then you should definitely try Mage!

In this article, I will talk about the features and functionalities of Mage, highlighting what I have learned so far and the first pipeline I’ve built using it.

This article was published as a part of the Data Science Blogathon.


What is Mage?

Mage is a modern, open-source data orchestration tool that aims to streamline and optimize data engineering processes. It is an easy-to-use yet effective data pipeline tool for data transformation and integration, and a compelling alternative to well-established tools like Airflow. By combining automation with an interactive, user-friendly interface, Mage aims to simplify how data is loaded, transformed, and exported.

Step 1: Quick Installation

Mage can be installed with Docker, pip, or conda, or hosted on a cloud provider as a virtual machine.

Using Docker

# Run Mage with Docker (Windows Command Prompt; use $(pwd) instead of %cd% on macOS/Linux)

>docker run -it -p 6789:6789 -v %cd%:/home/src mageai/mageai /app/run_app.sh mage start [project_name]

# Run Mage with Docker on a different host port (6790 on the host, 6789 in the container)

>docker run -it -p 6790:6789 -v %cd%:/home/src mageai/mageai /app/run_app.sh mage start [project_name]

Using Pip

# Install Mage with pip, then start a project

>pip install mage-ai

>mage start [project_name]

Using Conda

# Install Mage with conda

>conda install -c conda-forge mage-ai

Optional extra packages are also available for installing Mage with Spark, Postgres, and other integrations. In this example, I used a Google Cloud Compute Engine VM, accessed via SSH, to run Mage. I ran the following commands after installing the necessary Python packages.

#Command for installing Mage

~$ sudo pip3 install mage-ai

#Command for starting the project

~$ mage start nyc_trides_project

Checking port 6789...

Mage is running at http://localhost:6789 and serving project /home/srinikitha_sri789/nyc_trides_proj

INFO:mage_ai.server.scheduler_manager:Scheduler status: running.

Step 2: Build

Mage provides several types of blocks whose templates include built-in code and test cases, which you can customize to your project’s requirements.

I used Data Loader, Data Transformer, and Data Exporter blocks (ETL) to load data from an API, transform it, and export it to Google BigQuery for further analysis.

Let’s learn how each block works.

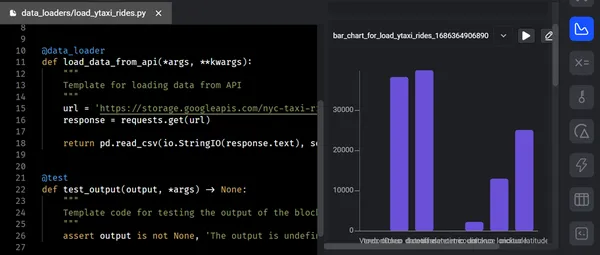

I) Data Loader

The “Data Loader” block serves as a bridge between the data source and the subsequent stages of data processing within the pipeline. It ingests data from a source and converts it into a format suitable for further processing.

Key Functionalities

- Data Source Connectivity: The Data Loader block can connect to a wide range of sources, including databases and data warehouses (BigQuery, MySQL, Redshift, Snowflake), cloud storage systems (Azure Blob Storage, GCS, S3), Delta Lake, APIs, and streaming platforms.

- Data Quality Checks and Error Handling: During loading, the block can run data quality checks (the test functions attached to it) to ensure that the data is accurate, consistent, and compliant with your validation rules. The pipeline logic can then log, flag, or address any errors or anomalies that are discovered.

- Metadata Management: The metadata related to the ingested data is managed and captured by the data loader block. The data source, extraction timestamp, data schema, and other facts are all included in this metadata. Data lineage, auditing, and tracking of data transformations across the pipeline are made easier by effective metadata management.

The screenshot below displays the loading of raw data from the API into Mage using the data loader. After executing the data loader code and successfully passing the test cases, the output is presented in a tree structure within the terminal.

II) Data Transformation

The “Data Transformation” block manipulates the incoming data, derives meaningful insights, and prepares it for downstream processes. You can start from a generic code option or from templates: Python templates for data exploration, rescaling, and common column actions, as well as SQL and R. Each block is a standalone file containing modular code that is reusable and testable with data validations.

Key Functionalities

- Combining Data: The data transformer block makes it easier to combine and merge data from different sources or datasets. Data engineers can join data on shared key columns using a variety of joins, including inner joins, outer joins, and cross joins. This is especially helpful for data enrichment or when merging data from several sources.

- Custom Functions: It lets you define and apply custom functions and expressions to manipulate the data. You can use built-in functions or write user-defined functions for advanced data transformations.
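The join types and user-defined functions described above can be illustrated without any framework. The sketch below is a plain-Python hash join supporting inner and left outer joins, plus a helper that applies a user-defined function to derive a new column. The function names, sample rows, and the 1.1 tax factor are hypothetical; inside an actual Mage transformer you would more likely use pandas' `DataFrame.merge`, whose `how` parameter accepts `'inner'`, `'left'`, `'right'`, `'outer'`, and `'cross'`.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


def hash_join(left: List[Row], right: List[Row], key: str, how: str = "inner") -> List[Row]:
    """Join two lists of rows on a shared key column.

    how="inner" keeps only rows whose key appears on both sides;
    how="left" also keeps unmatched left rows (a left outer join),
    filling the right-hand columns with None.
    """
    if how not in ("inner", "left"):
        raise ValueError(f"unsupported join type: {how!r}")

    # Build an index of the right side so each lookup is a dict access.
    index: Dict[Any, List[Row]] = {}
    for row in right:
        index.setdefault(row[key], []).append(row)
    right_columns = {col for row in right for col in row if col != key}

    joined: List[Row] = []
    for lrow in left:
        matches = index.get(lrow[key], [])
        for rrow in matches:
            # On a column-name clash, the right-hand value wins.
            joined.append({**lrow, **rrow})
        if not matches and how == "left":
            joined.append({**lrow, **{col: None for col in right_columns}})
    return joined


def add_column(rows: List[Row], name: str, func: Callable[[Row], Any]) -> List[Row]:
    """Derive a new column by applying a user-defined function to each row."""
    return [{**row, name: func(row)} for row in rows]


# Hypothetical sample data.
trips = [
    {"trip_id": 1, "zone_id": 10, "fare": 9.0},
    {"trip_id": 2, "zone_id": 99, "fare": 11.5},
]
zones = [{"zone_id": 10, "zone_name": "Midtown"}]

inner = hash_join(trips, zones, key="zone_id")             # only trip 1 matches
left = hash_join(trips, zones, key="zone_id", how="left")  # trip 2 kept, zone_name=None
with_tax = add_column(inner, "fare_with_tax", lambda r: round(r["fare"] * 1.1, 2))
```

Indexing the right side first keeps the join linear in the total number of rows, which is the same idea a DataFrame library uses for equality joins.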

After loading the data, the transformation code performs all the necessary manipulations (in this example, converting a flat file into fact and dimension tables) and passes the result to the data exporter. After the data transformation block is executed, the resulting tree diagram is shown below.
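The flat-file-to-fact-and-dimension step can be sketched as a transformer block that assigns surrogate keys and splits the records into one fact table and two dimension tables. This is an illustration under stated assumptions rather than this pipeline's actual code: the column names (`trip_id`, `pickup_datetime`, `payment_type`, `fare_amount`), the choice of dimensions, and the sample rows are hypothetical, and the fallback `transformer` decorator only lets the sketch run outside Mage.

```python
from datetime import datetime

try:
    # Inside a Mage project, the decorator comes from Mage itself.
    from mage_ai.data_preparation.decorators import transformer
except ImportError:
    # Fallback no-op decorator (assumption) so the sketch runs outside Mage.
    def transformer(func):
        return func


@transformer
def transform(rows, *args, **kwargs):
    """Split flat trip records into one fact table and two dimension tables."""
    payment_ids = {}   # payment_type value -> surrogate key
    datetime_ids = {}  # pickup_datetime string -> surrogate key
    fact_trips = []

    for row in rows:
        # setdefault assigns the next integer key the first time a value is seen.
        payment_id = payment_ids.setdefault(row["payment_type"], len(payment_ids))
        datetime_id = datetime_ids.setdefault(row["pickup_datetime"], len(datetime_ids))
        fact_trips.append({
            "trip_id": row["trip_id"],
            "payment_type_id": payment_id,
            "datetime_id": datetime_id,
            "fare_amount": row["fare_amount"],
        })

    dim_payment_type = [
        {"payment_type_id": key, "payment_type": value}
        for value, key in payment_ids.items()
    ]

    dim_datetime = []
    for value, key in datetime_ids.items():
        ts = datetime.fromisoformat(value)
        dim_datetime.append({
            "datetime_id": key,
            "pickup_datetime": value,
            "pickup_hour": ts.hour,
            "pickup_weekday": ts.strftime("%A"),
        })

    return {
        "fact_trips": fact_trips,
        "dim_payment_type": dim_payment_type,
        "dim_datetime": dim_datetime,
    }


# Hypothetical flat records (duplicate pickup times share one dimension row).
flat = [
    {"trip_id": 1, "pickup_datetime": "2016-03-01 08:15:00", "payment_type": "card", "fare_amount": 9.0},
    {"trip_id": 2, "pickup_datetime": "2016-03-01 08:15:00", "payment_type": "cash", "fare_amount": 11.5},
    {"trip_id": 3, "pickup_datetime": "2016-03-02 17:40:00", "payment_type": "card", "fare_amount": 7.0},
]
tables = transform(flat)
```

Returning a dictionary of tables lets a downstream exporter write each one to its own destination table.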

III) Data Exporter

The “Data Exporter” block exports and delivers processed data to various destinations or systems for further consumption, analysis, or storage, ensuring seamless data transfer and integration with external systems. We can export the data to many destinations using the default templates provided for Python (API, Azure Blob Storage, BigQuery, GCS, MySQL, S3, Redshift, Snowflake, Delta Lake, etc.), SQL, and R.

Key Functionalities

- Schema Adaptation: It allows engineers to adapt the format and schema of the exported data to meet the requirements of the destination system.

- Batch Processing and Streaming: The Data Exporter block works in both batch and streaming modes. It supports batch processing by exporting data at predefined intervals or based on specific triggers, and it supports real-time streaming, enabling continuous, near-instantaneous data transfer to downstream systems.

- Compliance: It has features such as encryption, access control and data masking to protect sensitive information while exporting the data.
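Schema adaptation and batch export can be sketched in a few lines of plain Python. The mapping `TARGET_SCHEMA` (source column to destination name and type) and the helper names are hypothetical, and a real exporter block would hand each batch to the destination's client library rather than just measuring it as this sketch does.

```python
from typing import Any, Callable, Dict, Iterator, List, Tuple

Row = Dict[str, Any]
Schema = Dict[str, Tuple[str, Callable[[Any], Any]]]

# Hypothetical destination schema: source column -> (destination column, type cast).
TARGET_SCHEMA: Schema = {
    "trip_id": ("trip_id", int),
    "fare_amount": ("fare_usd", float),
    "pickup_datetime": ("pickup_ts", str),
}


def adapt_schema(rows: List[Row], schema: Schema) -> List[Row]:
    """Rename and cast columns to match the destination; unmapped columns are dropped."""
    return [
        {
            dest: (cast(row[src]) if row.get(src) is not None else None)
            for src, (dest, cast) in schema.items()
        }
        for row in rows
    ]


def batches(rows: List[Row], batch_size: int) -> Iterator[List[Row]]:
    """Yield consecutive chunks of at most batch_size rows."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(rows), batch_size):
        yield rows[start:start + batch_size]


# Hypothetical source rows: string-typed values plus a column the destination lacks.
source = [
    {"trip_id": str(i), "fare_amount": "9.5", "pickup_datetime": "2016-03-01 08:15:00", "extra": "x"}
    for i in range(5)
]
adapted = adapt_schema(source, TARGET_SCHEMA)
# A real exporter would write each batch to the destination inside this loop.
batch_sizes = [len(batch) for batch in batches(adapted, batch_size=2)]  # [2, 2, 1]
```

Keeping the schema as data rather than code makes it easy to maintain one mapping per destination system.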

Following the data transformation, we export the transformed/processed data to Google BigQuery using Data Exporter for advanced analytics. Once the data exporter block is executed, the tree diagram below illustrates the subsequent steps.

Step 3: Preview/Analytics

The “Preview” phase enables data engineers to inspect processed or intermediate data at a given point in the pipeline. It is a good opportunity to check the accuracy of the data transformations, judge the quality of the data, and learn more about it.

During this phase, each time we run the code, we receive feedback in the form of charts, tables, and graphs, which helps us gather valuable insights. With an interactive notebook UI, we can immediately see the output of our code. In the pipeline, every block of code produces data that we can version, partition, and catalog for later use.

Key Functionalities

- Data Visualization

- Data Sampling

- Data Quality Assessment

- Intermediate Results Validation

- Iterative Development

- Debugging and troubleshooting
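Mage renders these previews in its UI, so you rarely need to write this yourself, but a small standard-library sketch shows what data sampling and a basic quality assessment compute: row count, null and distinct counts per column, and a reproducible random sample. The `profile` function, its output keys, and the sample rows are hypothetical, not part of Mage.

```python
import random
from typing import Any, Dict, List

Row = Dict[str, Any]


def profile(rows: List[Row], sample_size: int = 3, seed: int = 42) -> Dict[str, Any]:
    """Summarize a dataset: row count, per-column null/distinct counts, and a sample."""
    columns = sorted({col for row in rows for col in row})
    null_counts = {col: sum(1 for row in rows if row.get(col) is None) for col in columns}
    # Distinct non-null values per column (values must be hashable).
    distinct_counts = {
        col: len({row[col] for row in rows if row.get(col) is not None}) for col in columns
    }
    # A seeded generator makes the sample reproducible between runs.
    rng = random.Random(seed)
    sample = rng.sample(rows, min(sample_size, len(rows)))
    return {
        "row_count": len(rows),
        "null_counts": null_counts,
        "distinct_counts": distinct_counts,
        "sample": sample,
    }


# Hypothetical rows with a couple of missing values.
rows = [
    {"zone": "Midtown", "fare": 9.0},
    {"zone": "Midtown", "fare": None},
    {"zone": None, "fare": 7.0},
    {"zone": "Harlem", "fare": 12.5},
]
report = profile(rows, sample_size=2)
```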

Step 4: Launching

In the data pipeline, the “Launch” phase represents the final step where we deploy the processed data into production or downstream systems for further analysis. This phase ensures that the data is directed to the appropriate destination and made accessible for the intended use cases.

Key Functionalities

- Data Deployment

- Automation and Scheduling

- Monitoring and Alerting

- Versioning and Rollback

- Performance Optimization

- Error Handling
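Error handling in a pipeline often comes down to retrying transient failures. Below is a generic sketch of retry with capped exponential backoff; it is not Mage's API, and the function name and parameters (`retries`, `base_delay`, `max_delay`, `retry_on`) are hypothetical. The `sleep` parameter is injectable so the logic can be tested without actually waiting.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def run_with_retries(
    task: Callable[[], T],
    retries: int = 3,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call task(); on a retryable error, wait and try again.

    The wait doubles after each failure (base_delay, 2 * base_delay, ...)
    and is capped at max_delay. After `retries` retries, the last error
    is re-raised so the caller (for example, an orchestrator) sees it.
    """
    attempt = 0
    while True:
        try:
            return task()
        except retry_on:
            if attempt >= retries:
                raise
            sleep(min(base_delay * (2 ** attempt), max_delay))
            attempt += 1


# Hypothetical flaky task: fails twice with a transient error, then succeeds.
calls = {"n": 0}


def flaky_export() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient network error")
    return "ok"


waits = []
result = run_with_retries(flaky_export, sleep=waits.append)  # waits == [1.0, 2.0]
```

Capping the delay keeps a long outage from producing ever-growing waits, and re-raising after the last attempt lets monitoring and alerting pick up the failure.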

You can deploy Mage to AWS, GCP, or Azure with only two commands using maintained Terraform templates. You can also transform very large datasets directly in your data warehouse or through native integration with Spark, and operationalize your pipelines with built-in monitoring, alerting, and observability.

The screenshots below show the total pipeline runs and their statuses (successful or failed), along with the logs of each block and their log levels.
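The run-status view described above boils down to a simple aggregation. A minimal sketch, assuming each run is a dictionary with `pipeline` and `status` keys and that successful runs carry the status `"completed"` (the record shape, status strings, and pipeline names are assumptions for illustration, not Mage's data model):

```python
from collections import Counter
from typing import Any, Dict, List


def summarize_runs(runs: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Count runs per status, compute the success rate, and list failed pipelines."""
    counts = Counter(run["status"] for run in runs)
    total = len(runs)
    return {
        "total": total,
        "by_status": dict(counts),
        "success_rate": counts["completed"] / total if total else 0.0,
        "failed_pipelines": sorted({run["pipeline"] for run in runs if run["status"] == "failed"}),
    }


# Hypothetical run history.
runs = [
    {"pipeline": "nyc_taxi_etl", "status": "completed"},
    {"pipeline": "nyc_taxi_etl", "status": "completed"},
    {"pipeline": "nyc_taxi_etl", "status": "failed"},
    {"pipeline": "daily_report", "status": "completed"},
]
summary = summarize_runs(runs)
```

A condition such as a success rate dropping below a chosen threshold is the kind of signal an alert would be wired to.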

Furthermore, Mage prioritizes data governance and security. It provides a secure environment for data engineering operations through built-in security mechanisms such as end-to-end encryption, access limits, and auditing capabilities. Mage’s architecture follows strict data protection rules and best practices to protect data integrity and confidentiality. You can also explore real-world use cases and success stories that highlight Mage’s potential in a variety of industries, including finance, e-commerce, and healthcare.

Miscellaneous Differences

| Mage | Other Tools |
| --- | --- |
| Mage is an engine for running data pipelines that can move and transform data. That data can then be stored anywhere (e.g., S3) and used to train models in SageMaker. | SageMaker: SageMaker is a fully managed ML service used to train machine learning models. |
| Mage is an open-source data pipeline tool for integrating and transforming data (ETL). | Fivetran: Fivetran is a closed-source SaaS (software-as-a-service) company providing a managed ETL service. |
| Mage is an open-source data pipeline tool for integrating and transforming data. Mage’s focus is to provide an easy developer experience. | Airbyte: Airbyte is one of the leading open-source ELT platforms; it replicates data from APIs, applications, and databases to data lakes, data warehouses, and other destinations. |

Conclusion

In conclusion, data engineers and analytics professionals can efficiently load, transform, export, preview, and deploy data by using the features of each phase in Mage and its framework for managing and processing data. This supports data-driven decision-making, helps extract valuable insights, and keeps data ready for production or downstream systems. With its cutting-edge capabilities, scalability, and strong focus on data governance, Mage has the potential to be a game-changer for data engineering.

Key Takeaways

- Mage provides a pipeline for comprehensive data engineering, which includes data ingestion, transformation, preview, and deployment. This end-to-end platform ensures quick data processing, effective data dissemination, and seamless connectivity.

- With Mage, data engineers can apply various operations during the data transformation phase to ensure that the data is cleansed, enriched, and prepared for subsequent processing. The preview stage allows for validation and quality assessment of the processed data, helping to ensure its accuracy and reliability.

- Throughout the data engineering pipeline, Mage gives efficiency and scalability top priority. To improve performance, it makes use of optimization techniques such as parallel processing, data partitioning, and caching.

- Mage’s launch stage enables the effortless transfer of processed data to downstream or production systems. It has tools for automation, versioning, resolving errors, and performance optimization, providing trustworthy and timely data transmission.

Frequently Asked Questions

A. Features that set Mage apart (some of the others might eventually have these features):

1. Easy UI/IDE for building and managing data pipelines. When you build a data pipeline, it runs exactly the same way in development as in production. Deploying the tool and managing its infrastructure in production are also straightforward, unlike with Airflow.

2. Extensible: We designed and built the tool with developers in mind, making sure it’s really easy to add new functionality to the source code or through plug-ins.

3. Modular: Every block/cell you write is a standalone, interoperable file, meaning it can be reused in other pipelines or code bases.

A. Mage currently supports Python, SQL, R, and PySpark, with Spark SQL planned for the future.

A. Databricks provides infrastructure to run Spark, as well as notebooks that can run your code on Spark. Mage can execute your code on a Spark cluster managed by AWS, GCP, or even Databricks.

A. Yes, Mage seamlessly integrates with existing data infrastructure and tools as part of its design. It supports various data storage platforms, databases, and APIs, allowing for smooth integration with your preferred systems.

A. Yes. Thanks to its scalability and performance optimization capabilities, Mage can accommodate fluctuating data volumes and processing needs, making it suitable for organizations of various sizes and levels of data processing complexity.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.