MIT’s AI Agents Pioneer Interpretability in AI Research

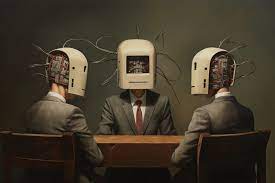

Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have introduced a novel method that uses artificial intelligence (AI) agents to automate the explanation of intricate neural networks. As neural networks grow in size and sophistication, explaining their behavior has become increasingly difficult. The MIT team aims to address this by employing AI models that experiment on other systems and articulate their inner workings.

The Challenge of Neural Network Interpretability

Understanding the behavior of trained neural networks poses a significant challenge, particularly given the increasing complexity of modern models. MIT researchers have taken a unique approach to this challenge: they introduce AI agents capable of conducting experiments on diverse computational systems, ranging from individual neurons to entire models.

Agents Built from Pretrained Language Models

At the core of the MIT team’s methodology are agents constructed from pretrained language models. These agents produce intuitive explanations of the computations inside trained networks. Unlike passive interpretability procedures that merely classify or summarize examples, the MIT-developed automated interpretability agents (AIAs) actively form hypotheses, test them experimentally, and learn iteratively. This active participation lets them refine their understanding of other systems in real time.
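To make the hypothesize, test, and refine cycle concrete, here is a minimal toy sketch in Python. It is not the MIT team’s implementation: the `black_box` function, the fixed `HYPOTHESES` list, and the `run_experiments` helper are all hypothetical names invented for illustration, and a plain elimination loop stands in for the language model that would propose hypotheses and choose experiments in a real agent.

```python
from typing import Callable, Dict, List

def black_box(x: float) -> float:
    """Hypothetical system under study; the agent sees only inputs and outputs."""
    return 3 * x + 1 if x >= 0 else 0.0

# Assumption: candidate hypotheses come from a fixed list here.
# A real agent would have a language model propose and revise them.
HYPOTHESES: Dict[str, Callable[[float], float]] = {
    "linear: 3x + 1": lambda x: 3 * x + 1,
    "relu-like: 3x + 1 for x >= 0, else 0": lambda x: 3 * x + 1 if x >= 0 else 0.0,
    "constant: 1": lambda x: 1.0,
}

def run_experiments(
    system: Callable[[float], float],
    hypotheses: Dict[str, Callable[[float], float]],
    probes: List[float],
    tol: float = 1e-9,
) -> List[str]:
    """Probe the system and discard hypotheses that contradict an observation."""
    surviving = dict(hypotheses)
    for x in probes:
        observed = system(x)  # run one experiment
        surviving = {
            name: h for name, h in surviving.items()
            if abs(h(x) - observed) <= tol  # keep only consistent hypotheses
        }
        if len(surviving) <= 1:  # nothing left to distinguish
            break
    return list(surviving)

survivors = run_experiments(black_box, HYPOTHESES, probes=[0.0, 2.0, -1.0])
print(survivors)
```

In this toy run the probe at 0 cannot separate the candidates, the probe at 2 rules out the constant hypothesis, and the probe at -1 rules out the purely linear one. The choice of probes matters as much as the hypotheses, which is why experiment design is part of the agent’s job.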

Autonomous Hypothesis Generation and Testing

Sarah Schwettmann, Ph.D. ’21, co-lead author of the paper and a research scientist at CSAIL, emphasizes the autonomy of AIAs in hypothesis generation and testing. Because the AIAs probe other systems autonomously, they can surface behaviors that might otherwise elude detection by scientists. Schwettmann highlights the capability of language models that are equipped with tools for probing other systems and for designing and executing experiments that enhance interpretability.

FIND: Function Interpretation and Description

The MIT team’s FIND (Function Interpretation and Description) benchmark evaluates interpretability agents that plan and execute tests on computational systems. These agents produce explanations in various forms, including language descriptions of a system’s functions and shortcomings and code that reproduces the system’s behavior. The approach marks a shift from traditional interpretability methods: the agents actively participate in understanding complex systems.
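One way to picture what a code explanation buys you is that it can be checked directly against the system it claims to describe. The sketch below is an illustration under stated assumptions, not the metric used by the MIT team: `system`, `explanation`, and `agreement` are hypothetical names, and the score is simply the fraction of sampled inputs on which the two agree.

```python
from typing import Callable, Iterable

def system(x: int) -> int:
    """Hypothetical black box: doubles its input, except on a corrupted range."""
    return 0 if 10 <= x < 20 else 2 * x

def explanation(x: int) -> int:
    """An agent's code explanation that missed the corrupted range."""
    return 2 * x

def agreement(
    target: Callable[[int], int],
    candidate: Callable[[int], int],
    inputs: Iterable[int],
) -> float:
    """Fraction of inputs on which the candidate reproduces the target's output."""
    inputs = list(inputs)
    matches = sum(1 for x in inputs if target(x) == candidate(x))
    return matches / len(inputs)

score = agreement(system, explanation, range(100))
print(f"agreement: {score:.2f}")
```

Here the explanation disagrees with the system only on the ten inputs from 10 to 19, so a score below 1.0 signals a shortcoming the agent has not yet described and points to where further experiments are needed.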

Real-Time Learning and Experimental Design

The dynamic nature of this approach enables real-time learning and experimental design. The AIAs refine their understanding of other systems through continuous hypothesis testing and experimentation, which improves interpretability and surfaces behaviors that might otherwise remain unnoticed.

Our Say

The MIT researchers envision FIND playing a pivotal role in interpretability research, much as clean benchmarks with ground-truth answers have driven advances in language models. The capacity of AIAs to autonomously generate hypotheses and perform experiments promises a new level of understanding of neural networks. By helping to uncover neural network behaviors, MIT’s FIND advances the quest for AI interpretability.