Overview

- A data-science-driven product consists of multiple aspects every leader needs to be aware of

- Machine learning algorithms are one part of a whole – we need to consider things like interpretability, the trade-off between computation cost and accuracy, among other things

- This article is written by a data science leader with over a decade of experience working in this field

Introduction

Practical data science is a multi-dimensional field. Machine learning algorithms are essentially one part of the entire end-to-end data-science-driven project. I often come across early-stage data science enthusiasts who don’t quite have the full picture in mind during their initial days.

There are multiple practical considerations we need to account for while building a data-science-driven solution for real-world situations. And here’s the curious part – it isn’t just limited to the data side of things!

Some of the more crucial cogs in a data-science-driven project include:

- A seamless user experience

- The trade-off between computational cost and our machine learning model’s accuracy

- How well we can interpret the model, among other things

So in this article, I draw on my own experience to talk about how a leader needs to think about a data-science-driven project. Getting to a data-driven solution isn’t a straight and pre-defined path. We need to be aware of the various facets of maintaining and deploying our data-driven solution.

This is the third article in a four-article series that shares insights on the various components involved in successfully implementing a data science project.

Table of Contents

- A Quick Recap of what we have Covered in this Data Science Leaders Series

- Data Science is a Part of the Whole

- Synergies with the User Interface (UI) module and overall User Experience (UX)

- The Trade-off between Compute Cost and System Accuracy

- Model Interpretability

A Quick Recap of what we have Covered in this Data Science Leaders Series

I want to quickly summarize the first two articles in this series in case you have not read them yet.

In the first article, we spoke about the three key constituents whose goals need to be aligned for the successful development and deployment of data-driven products. These constituents are:

- Customer-facing teams

- Executive teams

- Data science team

These are essentially the key stakeholders in a data science project. You can read the full article, with a detailed breakdown of each stakeholder, here.

In the second article, we discussed ways to bridge the gap between qualitative business requirements and quantitative inputs to machine learning models. In particular, we spoke about defining the success criteria of data-driven products in a way that progress can be measured in tangible quantitative terms.

The article also provided a framework to capture the appropriate granularity of data with consistent human labels to train accurate machine learning models. Finally, the article reflected on how the right team composition is critical for the end-to-end success.

You can read the second article here. And now it’s time to dive into the third article of this series!

Data Science is a Part of the Whole

Take a moment to think about some of the data-driven products that you use regularly. There’s a good chance you might have thought of one of the following:

- An online search engine that provides relevant responses to your search term but also helps you refine your search as you enter the query

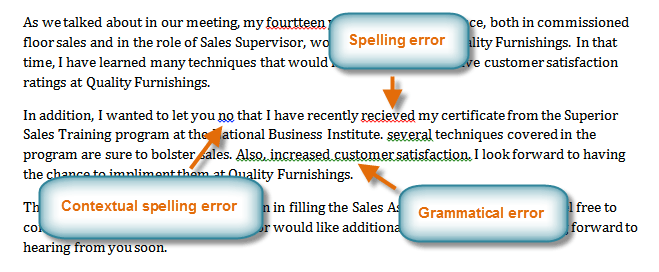

- A word processor that checks the spelling and grammar of your text and either auto-corrects errors or recommends corrections

- A social media platform that personalizes content or people you should connect with based on relevancy to your interactions on the platform

- An e-commerce portal that recommends what you should purchase based on your current shopping basket and/or your shopping history

Two more specialized examples would be:

- A financial lending institution has developed a data-driven solution that decides whether an applicant is worthy of a loan. If yes, what is the optimal loan amount?

- A huge data center with thousands of computer servers crunching numbers for a variety of critical business requirements has developed a data-driven solution that analyses the logs across the servers, databases, and network traffic to predict which servers to switch on (or switch off) and how to regulate the cooling units

A critical capability in the data science module of each of these data-driven products is content organization and information retrieval.

And yet, the Information Retrieval (IR) component will need to be significantly tailored based on the end-use case. Likewise, the flow of information will need to be modified based on the functioning of the IR component.

Let’s understand this using the examples we mentioned above:

- Let’s say a search engine is making real-time recommendations for refining the search query as the user is typing. The IR component is worthless if it can’t return a meaningful piece of information in a couple of seconds

- For the data center management solution, the IR component need not be as concerned with speed but must weigh its outputs based on their impact on the business continuity of the clients it caters to

- In the loan-decisioning solution mentioned above, the IR component’s priority is neither speed of execution nor ‘business expediency’. Above all, it must be ‘explainable and fair in the eyes of the regulating authorities’

As the data science delivery owner, you have to know the end-to-end use case, evaluate the various constraints that it may impose on your solution and also identify the various degrees of freedom it may give you. We will discuss some of the specifics below.

I like to think of the data science component as one piece of a jigsaw puzzle: it is significant in its own right, but it needs to fit in snugly with the rest of the puzzle!

Synergies with the User Interface Module and Overall User Experience

In all practical applications, the data science component is only an enabling technology and never the complete solution by itself.

Users interact with the end application through a User Interface (UI). The User Experience (UX) should be designed in a way that is synergistic with the powers of the underlying data science component while camouflaging its shortcomings.

Let me illustrate an optimal way of synergizing UI/UX with the data science component using two different examples:

- Search engine

- Word processor

Search Engine

A typical web search engine uses heavy data science machinery to rank and categorize web pages. It then returns the most relevant ones in response to the user’s query.

If the data science component can interpret the query with high confidence and extract the exact specific answer, the user interface can utilize this confidence to just display the answer as a ‘zero-click-result’. This will lead to a seamless UX.

Google applies this. For queries like ‘prime minister of India’, it returns the answer in a ‘knowledge panel’. On the other hand, when the data science component’s confidence in the exact answer is below a certain threshold, it is safer to let the user interact with the system through a few more clicks to get to the specific answer than to risk a bad user experience.

When we search for ‘where do MPs of India meet’, Google’s first link has the right answer, but because the confidence in the exact answer snippet is lower, it doesn’t show a ‘knowledge panel’.
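To make the idea concrete, here is a minimal sketch of how a UI layer might use the confidence score to pick a layout. This is not how Google implements it; the `SearchResponse` type, the `choose_layout` function, and the 0.9 threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical cut-off; in practice it would be tuned on evaluation data.
ANSWER_CONFIDENCE_THRESHOLD = 0.9


@dataclass
class SearchResponse:
    """What the data science component hands to the UI (illustrative only)."""
    ranked_links: List[str]
    direct_answer: Optional[str] = None
    answer_confidence: float = 0.0


def choose_layout(response: SearchResponse) -> dict:
    """Show a direct answer only when the model is confident enough."""
    is_confident = (
        response.direct_answer is not None
        and response.answer_confidence >= ANSWER_CONFIDENCE_THRESHOLD
    )
    if is_confident:
        # Zero-click result: the answer is displayed above the links.
        return {
            "layout": "knowledge_panel",
            "answer": response.direct_answer,
            "links": response.ranked_links,
        }
    # Low confidence: fall back to the ranked links and let the user click.
    return {"layout": "links_only", "answer": None, "links": response.ranked_links}
```

The point of the design is that the threshold lives at the boundary between the two teams: the data science team owns the confidence score, while the UI team decides what to do on either side of the cut-off.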

There is another way to exploit UI/UX synergy with the data science component. The users’ interactions with the system can be used both to generate indirect ‘labeled data’ and as a proxy for evaluating the system’s performance.

For example, imagine a scenario where a web search engine returns the top 10 results for a given query and the user almost always clicks on either the second or the third link. This implies that the underlying data science component needs to revisit its ranking algorithm so that the second and the third links are ranked higher than the first one.

This ‘wisdom of the crowd’ also provides labeled pairs of ‘query and relevant web page’. Admittedly, labeled pairs inferred in this way will include a variety of user biases. Hence, a nontrivial label normalization process is needed before these labels can be used for training the data science component.
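The click-based signals described above could be collected along the following lines. This is only a sketch: the log format of `(query, url, position)` tuples, the function names, and the `min_clicks` cut-off are assumptions of mine, and the code deliberately skips the label normalization step, so its output should be treated as noisy.

```python
from collections import Counter, defaultdict


def clicks_by_position(click_log):
    """Count clicks per result position (1 = top result).

    A distribution that peaks at position 2 or 3 hints that the
    ranking puts the wrong result first.
    """
    return Counter(position for _query, _url, position in click_log)


def top_clicked_url_per_query(click_log, min_clicks=2):
    """Derive noisy (query -> url) labels from the most-clicked URL.

    Queries whose top URL has fewer than `min_clicks` clicks are
    skipped, as a crude guard against one-off clicks.
    """
    per_query = defaultdict(Counter)
    for query, url, _position in click_log:
        per_query[query][url] += 1

    labels = {}
    for query, url_counts in per_query.items():
        url, count = url_counts.most_common(1)[0]
        if count >= min_clicks:
            labels[query] = url
    return labels
```

A real pipeline would also need to correct for the fact that users click higher-ranked links more often regardless of relevance, which is one of the biases mentioned above.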

Word Processor

Similarly, consider a typical spell-checker in a word processor. The underlying data science machinery is tasked with recognizing when a typed word is likely a spelling mistake, and if so, highlighting the misspelled word and suggesting likely correct words.

- When the data science machinery finds only a single likely correct spelling and that too with high confidence, it should auto-correct the spelling to provide a seamless user experience

- On the other hand, if there are multiple likely correct words for the misspelled word, each with a reasonably high confidence score, the UI should show them all and let the user choose the right one

- Similarly, if the multiple likely correct words have low confidence scores, the UI should camouflage this shortcoming by highlighting the spelling error without suggesting any corrections. This again makes for a pleasant user experience
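The three cases above map naturally onto a small decision function. The sketch below is one possible reading of them, not a description of any real word processor; the function name `spelling_action` and both threshold values are placeholders I have picked for illustration.

```python
def spelling_action(candidates, auto_threshold=0.9, suggest_threshold=0.5):
    """Decide what the UI should do for a suspected misspelling.

    candidates: list of (word, confidence) pairs from the spell-checker.
    Returns a tuple (action, words_to_show).
    """
    # Keep only the candidates the model considers reasonably likely.
    likely = [(word, conf) for word, conf in candidates if conf >= suggest_threshold]

    # Case 1: a single likely candidate with very high confidence.
    if len(likely) == 1 and likely[0][1] >= auto_threshold:
        return ("autocorrect", [likely[0][0]])

    # Case 2: one or more plausible candidates; let the user choose.
    if likely:
        ranked = sorted(likely, key=lambda pair: pair[1], reverse=True)
        return ("suggest", [word for word, _conf in ranked])

    # Case 3: nothing convincing; flag the word without suggestions.
    return ("highlight_only", [])
```

For instance, `spelling_action([("receive", 0.97)])` would auto-correct, while two candidates at 0.6 and 0.7 would be offered as suggestions with the higher-scoring word first.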

Thus, the data science team must understand all the transformations that the data science output will go through before reaching the end user’s hands. And the UI designers and engineers should understand the nature of the likely errors that the data science component will make.

The data science delivery leader has to drive this collaboration across the teams to provide an optimal end solution. Also, notice that I mentioned “high confidence” and “low confidence” above, whereas what the machines will need is “confidence above 83%”. This is the ‘qualitative to quantitative gap’ that we discussed in the previous article of this series.

The Trade-off between Compute Cost and System Accuracy

The next aspect that the teams have to build a common understanding of is the nature of the user’s interaction with the end-to-end system.

Let’s take the example of a speech-to-text system. Here, if the expected setup is such that the user uploads a set of speech files and expects an auto-email-alert when the speech-to-text outputs are available, the data science system can take a considerable amount of time to generate the best quality output.

On the other hand, what happens if the user interaction is such that the user speaks a word/phrase and waits for the system to respond? The data science system architecture will then have to accept a higher compute cost in order to generate near-instantaneous results with high accuracy.

Knowing the full context in which the data science system will be deployed can also help to make informed trade-offs between the data science system’s compute efficiency and its overall accuracy.

In the above example of speech-to-text, suppose we know that the end-to-end system restricts the user to speaking only the names of people in their phone book. Here, the data science component can restrict its search space to the names in the phone book rather than searching through millions of people’s names.
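A rough way to picture the restricted search space is shown below. A real speech-to-text system would constrain its decoder rather than post-process text, so treat this purely as an analogy: I am assuming the recognizer has already produced a raw text guess, and I use fuzzy string matching from Python's standard `difflib` module to snap that guess to the closest phone-book entry. The function name `match_contact` and the 0.6 cut-off are illustrative choices.

```python
import difflib


def match_contact(recognized_text, phone_book, cutoff=0.6):
    """Return the phone-book name closest to the raw recognizer output.

    Only names in `phone_book` are considered, so the search space is
    a few hundred entries rather than millions of possible names.
    Returns None when nothing is similar enough.
    """
    # Compare case-insensitively but return the name as stored.
    by_lowercase = {name.lower(): name for name in phone_book}
    matches = difflib.get_close_matches(
        recognized_text.lower(), list(by_lowercase), n=1, cutoff=cutoff
    )
    return by_lowercase[matches[0]] if matches else None
```

With a phone book of `["Anita Sharma", "Rahul Verma", "Priya Nair"]`, a slightly garbled guess such as `"anita sharmaa"` would resolve to `"Anita Sharma"`, while an unrelated string would return `None`.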

As a rule of thumb, the amount of computing power needed for training and executing the machine learning component grows roughly linearly at lower accuracy levels and then far more steeply, often close to exponentially, as we push for the last few points of accuracy.

For the machine learning solution to be monetarily viable, the cost of running and maintaining it should be much lower than the revenue attributed to it.

This can be achieved in a couple of ways:

- Holding discussions with the product team, the client team and the engineering teams to establish the sensitivity of the overall system to the accuracy of the data science solution. This can help establish what is a reasonable accuracy to aim for

- Reducing the number of times the most complex data science sub-component gets invoked. Once the most complex data science sub-component is identified, we need to identify the data samples on which this data science sub-component gets invoked repeatedly. Creating a lookup table of these common input-output pairs will increase the overall efficiency of the system

- As an example, when enriching financial transactions in my current setup, such optimizations led to a drop of about 70% in the compute cost at the expense of only a few GB of additional RAM for the lookup table

- A popular example where implementation and maintenance costs outweighed the gain in accuracy is Netflix’s decision not to use the million-dollar-award-winning solution, which would otherwise have led to about a 10% increase in its movie-recommendation accuracy
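The lookup-table idea from the list above can be sketched with Python's built-in `functools.lru_cache`. This is not the implementation from my setup; `expensive_enrichment` is a hypothetical stand-in for the costly sub-component, and the cache size is an arbitrary placeholder that would be chosen based on available RAM.

```python
from functools import lru_cache


def expensive_enrichment(description: str) -> str:
    """Stand-in for the most complex (and costly) model component."""
    # Placeholder logic; a real system would run a heavy model here.
    return description.strip().upper()


@lru_cache(maxsize=100_000)  # trades some RAM for fewer model invocations
def enrich(description: str) -> str:
    """Return the cached output for inputs that have been seen before."""
    return expensive_enrichment(description)
```

Repeated inputs are then served from memory, and `enrich.cache_info()` reports the hit and miss counts, which gives a quick estimate of how many expensive invocations the table is saving.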

Model Interpretability

Yet another practical consideration that should be high on our list is ‘model interpretability’.

Being able to interpret why a given data science model behaved in a particular way helps prioritize changes in the model, changes in the training samples, and/or changes to the architecture to improve the overall performance.

In several applications like the loan-eligibility prediction we discussed above, or precision medicine, or forensics, the data science models are, by regulation, required to be interpretable so that human experts can check for unethical biases.

Interpretable models also go a long way in building stakeholder trust in the data-driven paradigm of solving business problems. The flip side is that the most accurate models are often also the most abstract, and hence the least interpretable.

Thus, one fundamental issue that the data science delivery leader has to address is the compromise between accuracy and interpretability.

Deep learning-based models fall in the category of higher-abstraction-and-lower-interpretability models. There is a tremendous amount of active research on making such models interpretable (e.g., LIME and Layer-wise Relevance Propagation).
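To give a flavor of what such explanations look like, here is a deliberately simple perturbation-based sketch. It is neither LIME nor Layer-wise Relevance Propagation; it only illustrates the shared intuition of probing a black-box model by changing one input at a time. The toy scoring function, the feature names, and the all-zero baseline are assumptions made up for this example.

```python
def occlusion_attribution(predict, features, baseline):
    """Score each feature by how much the prediction drops when that
    feature alone is replaced with its baseline value."""
    original = predict(features)
    scores = {}
    for name in features:
        perturbed = dict(features)          # copy, so the input is untouched
        perturbed[name] = baseline[name]    # "switch off" a single feature
        scores[name] = original - predict(perturbed)
    return scores


def toy_loan_score(f):
    """Hypothetical black-box model: higher income helps, debt hurts."""
    return 2 * f["income"] - 3 * f["debt"]


applicant = {"income": 10, "debt": 4, "tenure": 7}
baseline = {"income": 0, "debt": 0, "tenure": 0}
attributions = occlusion_attribution(toy_loan_score, applicant, baseline)
```

A positive score means the feature pushed the prediction up and a negative score means it pushed the prediction down. A feature the model ignores, like `tenure` here, gets a score of zero, which is exactly the kind of evidence a human expert can use when checking for unwanted biases.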

End Notes

In summary, a high-accuracy data science component by itself may not mean much even if it solves a pressing business need. At one extreme, it could be that the data science solution achieves high accuracy at the cost of high compute power or high turnaround time, neither of which is acceptable to the business.

At the other extreme, it could be that the component that the end user interacts with has minimal sensitivity to the errors of the data science component, and thus a relatively simpler model would have sufficed for the business needs.

A good understanding of how the data science component fits into the overall end-to-end solution will undoubtedly help make the right design and implementation decisions. This, in turn, increases customer acceptance of the solution within a reasonable operational budget.

I hope you liked the article. Do post your comments and suggestions below. I will be back with the last article of this series soon.
