AI is advancing so quickly that it can now do most of the heavy lifting in marketing: creating ad copy and blog content, enabling data collection, optimizing campaigns, processing customer data to build detailed personas, and even automating your entire marketing workflow, from lead nurturing to conversion tracking.

However, non-compliant use of AI in marketing, such as pasting sensitive customer data into public LLMs without consent or failing to tell your audience how you process their data, can lead to fines, lawsuits, and reputational damage.

In this article, we'll cover the key regulations governing AI use in marketing, the ethical frameworks to consider, and tips for using AI responsibly while protecting your brand from legal risk.

Key regulations and laws for AI use in marketing

In marketing, AI is a bit like an intern: it can make mistakes on its own or be misused by the people directing it, and when consumer data is involved, those mistakes can lead to disastrous outcomes.

That's why laws such as the GDPR, CAN-SPAM, the CCPA, and the EU AI Act exist to guard against these errors. Let's look at each in turn.

1. General Data Protection Regulation

The GDPR is a European Union regulation that protects the personal data of individuals in the European Union and the European Economic Area. It applies to businesses established in the EU as well as to those elsewhere that offer goods or services to people in the EU/EEA or monitor their behavior, whether or not the company is physically located there.

If you use AI to process your customers' data, you must explain why and how you process it and who receives it, including the AI tools and vendors you rely on. Users also have the right to request erasure of their data, and you must honor valid requests without undue delay. Serious breaches can cost up to €20 million or 4% of your total worldwide annual turnover, whichever is higher.

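A comparable example in the US is the California Consumer Privacy Act (CCPA), which gives California residents the right to know what personal information businesses collect about them, to have it deleted, and to opt out of its sale or sharing.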
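To put the GDPR ceiling in concrete terms, the upper tier of fines under Article 83(5) is whichever is higher: €20 million or 4% of total worldwide annual turnover. The sketch below only computes that theoretical maximum; the function name is our own, and actual fines are set case by case by regulators.

```python
def gdpr_max_fine_eur(worldwide_annual_turnover_eur: int) -> float:
    """Return the theoretical upper-tier GDPR fine cap (Art. 83(5)).

    The cap is the higher of a fixed EUR 20 million or 4% of the
    undertaking's total worldwide annual turnover for the preceding year.
    """
    fixed_cap = 20_000_000
    turnover_cap = worldwide_annual_turnover_eur * 4 / 100
    return float(max(fixed_cap, turnover_cap))


# EUR 2 billion turnover: 4% (EUR 80M) exceeds the fixed EUR 20M.
print(gdpr_max_fine_eur(2_000_000_000))  # 80000000.0
# EUR 100 million turnover: 4% is only EUR 4M, so the fixed EUR 20M applies.
print(gdpr_max_fine_eur(100_000_000))  # 20000000.0
```

In other words, the 4% rule only matters once worldwide turnover passes €500 million; below that, the fixed €20 million figure is the ceiling.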

2. CAN-SPAM

The Controlling the Assault of Non-Solicited Pornography and Marketing (CAN-SPAM) Act regulates how businesses send commercial emails in the US. It requires truthful header information and non-deceptive subject lines, identifying the message as an ad, including a valid physical postal address, and giving recipients an easy way to opt out, with opt-out requests honored within 10 business days.

According to Litmus's State of Email Trends report, 34% of marketers use generative AI tools like ChatGPT to write email copy. That raises the risk of deceptive, clickbait-style subject lines or other non-compliant content slipping into your emails. Each separate email that violates CAN-SPAM can draw penalties of up to $53,088.
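Human review remains the real safeguard against deceptive subject lines, but some of CAN-SPAM's mechanical requirements can be checked automatically before an AI-drafted email goes out. The sketch below is a hypothetical pre-send check: the `DraftEmail` type, the opt-out set, the "unsubscribe" keyword test, and the "RE:" heuristic are our own simplifying assumptions, not an official compliance tool. It also multiplies the per-email maximum cited above to show how quickly exposure grows.

```python
from dataclasses import dataclass

# Maximum civil penalty per violating email, as cited in this article.
MAX_PENALTY_PER_EMAIL_USD = 53_088


@dataclass
class DraftEmail:
    recipient: str
    subject: str
    body: str


def pre_send_issues(email: DraftEmail, opted_out: set[str], postal_address: str) -> list[str]:
    """Return mechanical CAN-SPAM problems found in an AI-drafted email (hypothetical checks)."""
    issues = []
    # Never email someone who has opted out (case-insensitive match).
    if email.recipient.strip().lower() in {a.lower() for a in opted_out}:
        issues.append("recipient has opted out")
    # Crude proxy for a clear opt-out mechanism: look for unsubscribe wording.
    if "unsubscribe" not in email.body.lower():
        issues.append("no visible opt-out instructions")
    # The message must include a valid physical postal address.
    if postal_address not in email.body:
        issues.append("missing physical postal address")
    # "RE:"/"FWD:" on a first-contact email can falsely imply an existing conversation.
    if email.subject.strip().lower().startswith(("re:", "fwd:")):
        issues.append("subject may imply a prior conversation")
    return issues


def max_exposure_usd(violating_emails: int) -> int:
    """Worst-case penalty if every one of these emails violates the Act."""
    return violating_emails * MAX_PENALTY_PER_EMAIL_USD


draft = DraftEmail(
    recipient="sam@example.com",
    subject="RE: your exclusive offer",
    body="Save 20% this week. 123 Main St, Springfield. Click here to unsubscribe.",
)
print(pre_send_issues(draft, {"SAM@example.com"}, "123 Main St, Springfield"))
# ['recipient has opted out', 'subject may imply a prior conversation']
print(max_exposure_usd(1_000))  # 53088000
```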

3. EU AI Act

The European Union created the AI Act to guard against harmful uses of AI. The most important rule for marketers is transparency: people must be informed when they are interacting with an AI system, such as a chatbot, or viewing synthetic media on your channels.

Synthetic media includes AI-generated images, cloned or synthesized voices, deepfakes, and even virtual influencers who don't exist in real life. If you use any of these in your marketing content, disclose it clearly to your audience. Penalties are on a similar scale to GDPR's: breaching the transparency obligations can cost up to €15 million or 3% of worldwide annual turnover, whichever is higher.

Ethical considerations when using AI in marketing

AI regulations set the legal requirements you must meet to avoid penalties, while AI ethics covers the moral obligations you should follow to earn your consumers' trust. These include promoting inclusivity, eliminating bias, and being transparent about how you use AI in marketing.

1. Inclusivity

Because the internet contains biased and discriminatory patterns across gender, race, colour, and ability, AI models trained on this data can mirror those patterns. This can lead to biased outputs in ads, social media posts, and website content.

The result can be public backlash, broken consumer trust, damaged PR, and sometimes even fines. That's why you should configure your AI tools, through system prompts, content filters, or moderation settings, to flag or block non-inclusive content in their outputs.

If a tool's outputs consistently fail your inclusivity standards, you may need to retrain or fine-tune the model, adjust your prompts, or switch to a tool with stronger safeguards and better moderation controls.

2. Bias elimination

AI bias can come from data bias, algorithm bias, or human bias. Data bias occurs when an AI system is trained or prompted on incomplete or unbalanced data, so its outputs inherit that imbalance. For instance, if you feed ChatGPT ICP data that mostly describes male customers, it will likely keep producing campaign content aimed only at men.

Algorithm bias, on the other hand, arises when a system is designed or tuned to favour specific outcomes, even when the underlying data does not support them.

Human bias enters through the people who build, tune, or prompt a system. For example, Grok, xAI's chatbot, was reported to consult Elon Musk's views before answering some user questions.

Minimize these biases by training or prompting your AI with audited, representative customer data and by setting clear neutrality guidelines for data labeling and prompt design.
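Auditing customer data for balance can start with something as simple as checking how each group is represented before you use the data to train or prompt an AI tool. Here is a minimal sketch; the `min_share` threshold, the attribute name, and the sample data are hypothetical and should come from your own audit criteria.

```python
from collections import Counter


def underrepresented_groups(
    records: list[dict], attribute: str, expected_groups: list[str], min_share: float = 0.2
) -> dict[str, float]:
    """Return each expected group whose share of `records` falls below `min_share`.

    Groups missing from the data entirely are reported with a share of 0.0,
    which plain frequency counts would otherwise hide.
    """
    counts = Counter(record.get(attribute) for record in records)
    total = len(records)
    report = {}
    for group in expected_groups:
        share = counts[group] / total if total else 0.0
        if share < min_share:
            report[group] = share
    return report


# Hypothetical ICP sample skewed toward male customers (8 of 10 records).
icp = [{"gender": "male"}] * 8 + [{"gender": "female"}] * 2
print(underrepresented_groups(icp, "gender", ["male", "female"], min_share=0.3))
# {'female': 0.2}
```

A flagged group is a prompt for a human decision (collect more data, reweight, or adjust the campaign brief), not an automatic fix.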

3. Transparency

Be transparent about your AI use. Tell users why you use AI, what data it processes, how long you retain that data, and where in your workflow AI is involved. Also disclose when content is AI-generated, especially when AI played a major role in creating it.

This also applies to AI-powered ad targeting. Explain why someone is seeing a particular ad or recommendation: perhaps they visited your website, used one of your tools, or liked similar items, and your AI model picked that up.

The more transparent you are about AI involvement, the more confident consumers feel about trusting you with their data.
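A "why am I seeing this?" note can be generated from the same signals your targeting model used. The sketch below maps hypothetical signal names to plain-language reasons; the signal keys and wording are assumptions, and a real system would draw them from your ad platform's targeting data.

```python
# Hypothetical targeting signals mapped to plain-language reasons.
REASONS = {
    "site_visit": "you recently visited our website",
    "tool_use": "you used one of our tools",
    "similar_likes": "you liked similar items",
}


def explain_targeting(signals: list[str]) -> str:
    """Build a user-facing explanation from the signals that triggered an ad."""
    reasons = [REASONS[s] for s in signals if s in REASONS]
    if not reasons:
        return "You're seeing this ad because it matches a broad audience we're targeting."
    if len(reasons) == 1:
        joined = reasons[0]
    else:
        joined = ", ".join(reasons[:-1]) + " and " + reasons[-1]
    return f"You're seeing this ad because {joined}, and our AI model used that to personalize it."


print(explain_targeting(["site_visit", "similar_likes"]))
# You're seeing this ad because you recently visited our website and you liked similar items, and our AI model used that to personalize it.
```

Unknown signals are skipped rather than shown raw, so internal feature names never leak into the user-facing text.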

4 Practical tips for using AI responsibly and compliantly

AI is here to stay, but your business might not if you fail to use it responsibly. Here are four tips to avoid that and stay compliant.

1. Get explicit consent

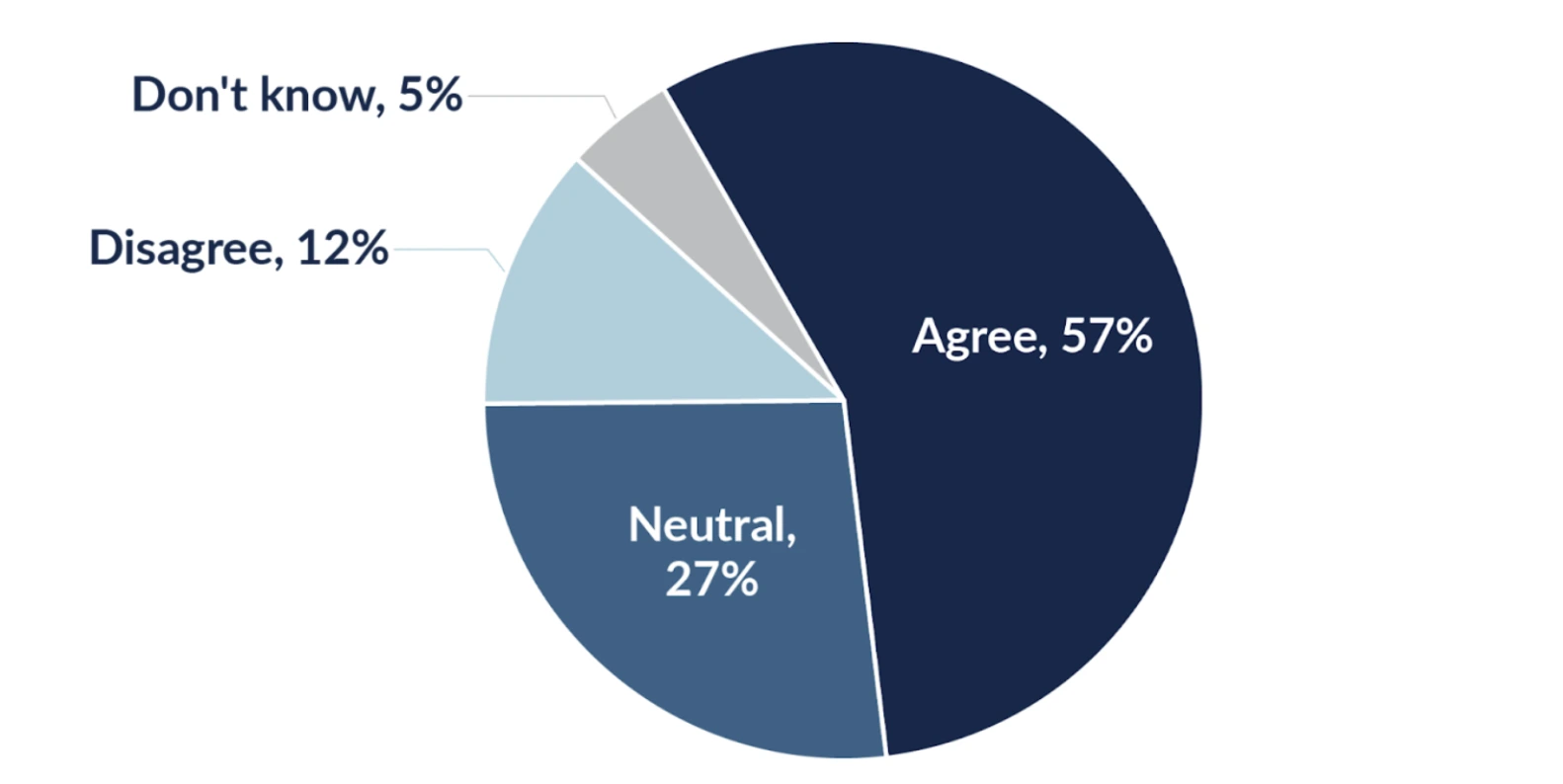

According to the IAPP's 2023 Privacy and Consumer Trust Report, 68% of consumers are concerned about their online privacy, and 57% agree that AI poses a significant threat to it.

Using AI to process data for marketing without proper consent or careful handling can further erode that already fragile trust. Moreover, using consumer data without consent or another valid legal basis, with or without AI, can violate the GDPR, the CCPA, and many other data protection laws.

To prevent that:

- Create a standard privacy policy page and notice detailing AI use

- Request proper consent before data collection and processing

- Use a double opt-in process, so users confirm their consent a second time before their data is used or processed with AI

- Periodically review consent and ask users to reconfirm it, giving them a clear chance to opt out

You should also make it easy for people to delete or request the deletion of their data.
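The consent steps above can be enforced in code by checking a consent record before any data reaches an AI tool. This is a minimal sketch under our own assumptions: the `ConsentRecord` fields, the purpose names, and the 365-day refresh interval are hypothetical, and a production system would also need to log consent events and propagate deletions to every vendor.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical policy: ask users to reconfirm consent at least once a year.
CONSENT_MAX_AGE = timedelta(days=365)


@dataclass
class ConsentRecord:
    user_id: str
    purposes: set[str] = field(default_factory=set)  # e.g. {"ai_personalization"}
    confirmed_at: Optional[datetime] = None  # set by the second (double opt-in) step
    withdrawn: bool = False


def confirm(record: ConsentRecord, now: datetime) -> None:
    """Second confirmation step of a double opt-in."""
    record.confirmed_at = now


def withdraw(record: ConsentRecord) -> None:
    """User opts out: stop processing for every purpose."""
    record.withdrawn = True
    record.purposes.clear()


def may_process_with_ai(record: ConsentRecord, purpose: str, now: datetime) -> bool:
    """True only if consent covers this purpose, was confirmed, and is still fresh."""
    if record.withdrawn or record.confirmed_at is None or purpose not in record.purposes:
        return False
    return now - record.confirmed_at <= CONSENT_MAX_AGE


def erase_user(store: dict, user_id: str) -> bool:
    """Handle a deletion request; returns True if data was found and removed."""
    return store.pop(user_id, None) is not None


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
rec = ConsentRecord("u1", {"ai_personalization"})
print(may_process_with_ai(rec, "ai_personalization", now))  # False: not yet confirmed
confirm(rec, now)
print(may_process_with_ai(rec, "ai_personalization", now))  # True
print(may_process_with_ai(rec, "ai_personalization", now + timedelta(days=400)))  # False: needs refresh
```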

2. Use secure AI platforms

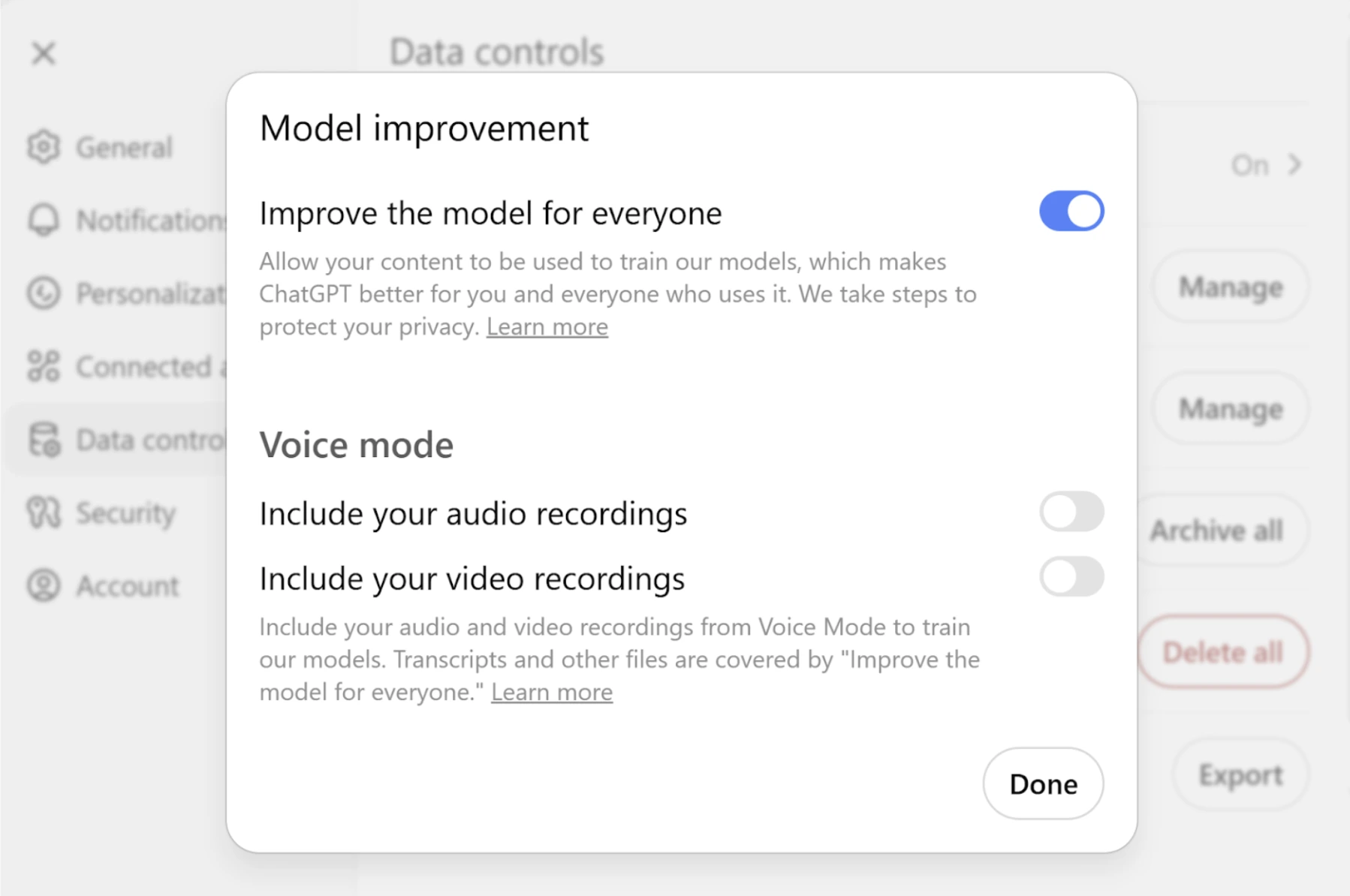

Public LLMs, such as the free versions of ChatGPT, Perplexity, and Gemini, let you process large amounts of marketing data and generate front-line content to attract leads. The problem is that many of these tools, free tiers especially, may use your conversations to train their models by default unless you manually turn that setting off.

When that setting is on, your inputs can be used to train and improve future versions of the model. The risk is hard to quantify, but fragments of your customer information, patterns, or behaviors could end up reflected in responses given to other users. Sharing customers' personal data with a third party this way, without a valid legal basis, can itself breach consumer privacy laws.

To avoid such outcomes:

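- Prefer business or enterprise plans (or API access) of credible LLMs whose terms exclude your data from model training; some paid consumer plans still train on your data by default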

- Always opt out of data sharing for model training before pasting in any data

- Use AI-powered data platforms like Snowflake to process your marketing data in a private environment
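Even with data sharing turned off, it's safer to strip obvious personal identifiers before text is pasted into or sent to an LLM. The regex-based sketch below is only illustrative: the patterns are our own rough assumptions, catch just email addresses and phone-like numbers, miss names and street addresses, and may over-redact things like dates, so treat it as a first filter rather than real anonymization.

```python
import re

# Rough, assumed patterns; not a complete PII detector.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(redact("Contact jane.doe@example.com or +1 415 555 0100 about the renewal."))
# Contact [EMAIL] or [PHONE] about the renewal.
```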

Most importantly, keep customer data out of free online chatbots and AI tools, even if they would save you thousands. Before you give any AI marketing tool access to your most sensitive customer data, do your due diligence and vet the company behind it: review its security whitepapers, privacy policy, and the online reputation it has built over the years.

You can also use a free rank-checking tool to see where a vendor ranks on Google for its brand name and core services. If it's buried on page ten of the search results rather than the first page, proceed with caution. A company with strong organic rankings is often, though not always, a more stable, lower-risk partner that has served its clients well. It has an established brand to protect and is less likely to take liberties with your data or its legal obligations.

3. Regularly audit AI outputs

AI is versatile and excels in many areas, but it is far from perfect and still needs human input and oversight. For instance, your AI tool might hallucinate, generating information that sounds correct but is false, misleading, or entirely fabricated, even when you give it a well-crafted prompt.

If you don't review outputs before publishing them on your channels, you risk going viral for the wrong reasons or drawing the ire of your audience. False marketing claims can also erode consumers' trust in your business.

Prevent this by:

- Setting up a content review team to check every AI output before it goes live

- Equipping your team with an SOP for AI use that specifies which words to avoid and which tone and brand voice AI-generated content must follow
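Part of such an SOP can be automated as a first pass before human review. The sketch below flags phrases from a hypothetical banned list; the phrases are placeholders for your own brand guidelines, and a clean result does not replace a reviewer checking facts and tone.

```python
import re

# Hypothetical SOP rules; replace with your own brand guidelines.
BANNED_PHRASES = ["guaranteed results", "risk-free", "100% safe"]


def sop_violations(text: str, banned: list[str] = BANNED_PHRASES) -> list[str]:
    """Return banned phrases found in the text (whole-phrase, case-insensitive)."""
    found = []
    for phrase in banned:
        pattern = r"\b" + re.escape(phrase) + r"\b"
        if re.search(pattern, text, re.IGNORECASE):
            found.append(phrase)
    return found


print(sop_violations("Try it risk-free today with guaranteed results!"))
# ['guaranteed results', 'risk-free']
```

Word boundaries keep a phrase from matching inside a longer word, and results come back in the SOP's own order so reviewers can map each flag to a rule.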

Your team should also periodically review past content and campaigns that were partly or wholly generated with AI to ensure brand consistency.

Consult your legal team before using new AI marketing tools. Treat compliance as the first filter when vetting vendors, because the risk is not worth a small performance lift.

4. Stay updated on laws

Treat AI compliance as a recurring task. Laws around data privacy and AI use evolve frequently, so review applicable regulations in your operating regions every quarter.

Broad regulations such as the GDPR and the EU AI Act should top your quarterly review if you operate in Europe. In the United States, many states have their own data privacy laws; the CCPA in California is one example.

Beyond federal and regional rules, keep track of the state and local laws that apply where you and your customers are located.

Conclusion

AI has steadily worked its way into the core of marketing, and that's not going to change anytime soon. However, the more you rely on it to run your marketing operations, the more it will come into contact with consumer data, and the easier it becomes to make mistakes or breach the legal guardrails around that data.

Stay safe by keeping everyone in your organization, from sales to marketing, informed about the relevant regulations and data privacy laws. Get explicit consent before collecting or processing any consumer's data with AI, and regularly audit every output generated partly or fully by AI to stay compliant.

Lastly, stay updated on the laws and be aware of new regulations as AI adoption in marketing continues to scale up.

Frequently Asked Questions

What are the biggest legal risks of using AI in marketing?

The biggest legal risks are feeding consumer data into unsecured AI tools, which can lead to leaks, and publishing unvetted or misleading AI-generated content on your marketing channels. Either can lead to fines, lawsuits, and eroded customer trust.

Is it safe to use public AI tools with customer data?

Not by default. Many public AI tools, especially free and consumer plans, may use your inputs to train their models unless you opt out. To be on the safe side, use private data analytics platforms like Snowflake, or business and enterprise plans of tools like ChatGPT, Gemini, and Perplexity whose terms exclude your data from model training.

How can I reduce bias in AI-powered marketing?

Start by training or prompting your AI with audited, representative data, set guardrails to keep it from making decisions that don't align with your brand goals, and establish a human review team to audit what it produces.

Can AI handle all of my marketing?

No. AI should not run all your marketing processes, and marketing decisions should remain with the people in your organization. You can, however, use credible AI tools to help analyze the data behind those decisions.