If you are searching for free LLM APIs, chances are you already want to build something with AI. A chatbot. A coding assistant. A data analysis workflow. Or a quick prototype without burning money on infrastructure. The good news is that you no longer need paid subscriptions or complex model hosting to get started. Many leading AI providers now offer free access to powerful LLMs through APIs, with generous rate limits and OpenAI-compatible interfaces. This guide brings together the best free LLM APIs available right now, including their model options, request limits, token caps, and real code examples.

Table of contents

- Understanding LLM APIs

- Free LLM APIs Resources

- OpenRouter

- Google AI Studio

- Mistral (La Plateforme)

- HuggingFace Serverless Inference

- Cerebras

- Groq

- Scaleway Generative Free API

- OVH AI Endpoints

- Together Free API

- GitHub Models Free API

- Fireworks AI Free API

- Cloudflare Workers AI

- NVIDIA NIM APIs / build.nvidia.com inference endpoints

- Cohere

- AI21 Labs

- Benefits of Using Free APIs

- Tips for Efficient Use of Free APIs

- Conclusion

Understanding LLM APIs

LLM APIs operate on a straightforward request-response model:

- Request Submission: Your application sends a request to the API, formatted in JSON, containing the model name, the prompt (usually a list of chat messages), and generation parameters such as temperature and max_tokens.

- Processing: The provider routes the request to the model, which reads the prompt as tokens and generates a completion token by token.

- Response Delivery: The API returns the generated text to your application, usually along with metadata such as token usage.
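To make the request-response cycle concrete, here is a minimal sketch of the JSON bodies involved, assuming the OpenAI-style chat-completions shape that many providers in this list accept. The model name "example-model" and the sample response are placeholders, not real API output, and nothing is sent over the network.

```python
import json


def build_chat_request(model: str, prompt: str, **params) -> str:
    """Serialize a chat-completions style request body to JSON."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        **params,  # generation parameters such as temperature or max_tokens
    }
    return json.dumps(body)


def extract_reply(response_json: str) -> str:
    """Pull the assistant's text out of an OpenAI-style response body."""
    data = json.loads(response_json)
    return data["choices"][0]["message"]["content"]


request_body = build_chat_request("example-model", "Hello!", temperature=0.7, max_tokens=50)
print(request_body)

# Hand-written sample in the same shape as a real response (placeholder data)
sample_response = '{"choices": [{"message": {"role": "assistant", "content": "Hi there!"}}]}'
print(extract_reply(sample_response))
```

In practice the SDKs shown below build and parse these bodies for you; the sketch only shows what travels over the wire.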

Pricing and Tokens

- Tokens: In the context of LLMs, tokens are the smallest units of text a model processes (whole words, word pieces, or punctuation). Pricing is typically based on the number of tokens used, usually quoted per million tokens, with separate rates for input and output tokens.

- Cost Management: Most providers offer pay-as-you-go pricing, allowing businesses to manage costs effectively based on their usage patterns.
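Because input and output tokens are billed at different rates, the cost of a request is a simple weighted sum. A minimal sketch, assuming prices quoted per million tokens; the $0.50 and $1.50 rates below are hypothetical, not any provider's actual pricing.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_m: float, output_price_per_m: float) -> float:
    """Estimate the dollar cost of one request from per-million-token prices."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


# Hypothetical rates: $0.50 per 1M input tokens, $1.50 per 1M output tokens
cost = estimate_cost(1_000, 500, 0.50, 1.50)
print(f"${cost:.5f}")  # $0.00125
```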

Free LLM APIs Resources

To help you get started without incurring costs, here's a list of free LLM API providers, with descriptions, advantages, pricing, and rate or token limits for each.
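Several providers below expose OpenAI-compatible endpoints, so switching between them mostly means changing the base URL and API key. A minimal sketch of a lookup table, assuming the base URLs used in this guide's own examples; the environment variable names OPENROUTER_API_KEY, SCW_API_KEY, and NVIDIA_API_KEY are hypothetical choices (GITHUB_TOKEN comes from the GitHub Models example).

```python
import os

# Base URLs from the examples in this guide; env var names are illustrative
OPENAI_COMPATIBLE_PROVIDERS = {
    "openrouter": {"base_url": "https://openrouter.ai/api/v1", "key_env": "OPENROUTER_API_KEY"},
    "scaleway": {"base_url": "https://api.scaleway.ai/v1", "key_env": "SCW_API_KEY"},
    "github": {"base_url": "https://models.inference.ai.azure.com", "key_env": "GITHUB_TOKEN"},
    "nvidia": {"base_url": "https://integrate.api.nvidia.com/v1", "key_env": "NVIDIA_API_KEY"},
}


def client_kwargs(provider: str) -> dict:
    """Return keyword arguments suitable for openai.OpenAI(...) for one provider."""
    cfg = OPENAI_COMPATIBLE_PROVIDERS[provider]  # KeyError for unknown providers
    return {"base_url": cfg["base_url"], "api_key": os.environ.get(cfg["key_env"], "")}


# Usage with the openai package and a real key set in the environment:
# from openai import OpenAI
# client = OpenAI(**client_kwargs("openrouter"))
print(client_kwargs("nvidia")["base_url"])
```

Model names still differ per provider, so the model argument has to change along with the client.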

1. OpenRouter

OpenRouter provides a variety of LLMs for different tasks, making it a versatile choice for developers. For its free model variants (model IDs ending in ":free"), the platform allows up to 20 requests per minute and 200 requests per day.
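With both a per-minute and a per-day cap, a request is only safe to send when both windows have room. A minimal client-side sketch, assuming the 20/minute and 200/day figures quoted above; SlidingWindowLimiter and try_acquire are hypothetical helpers (not part of any OpenRouter SDK), and they only count requests made by this process.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `limit` requests in any rolling window of `window` seconds."""

    def __init__(self, limit: int, window: float):
        self.limit = limit
        self.window = window
        self.timestamps = deque()

    def has_capacity(self, now: float) -> bool:
        # Drop timestamps that have fallen out of the rolling window
        while self.timestamps and now - self.timestamps[0] >= self.window:
            self.timestamps.popleft()
        return len(self.timestamps) < self.limit

    def record(self, now: float) -> None:
        self.timestamps.append(now)


def try_acquire(limiters, clock=time.monotonic) -> bool:
    """Record a request only if every limiter has room, so no window is over-counted."""
    now = clock()
    if all(limiter.has_capacity(now) for limiter in limiters):
        for limiter in limiters:
            limiter.record(now)
        return True
    return False


# Demo with a fake clock so the example runs instantly
now = [0.0]


def fake_clock() -> float:
    return now[0]


openrouter_limits = [SlidingWindowLimiter(20, 60), SlidingWindowLimiter(200, 86_400)]
sent = sum(try_acquire(openrouter_limits, fake_clock) for _ in range(25))
print(sent)  # 20: the per-minute cap blocks the last 5
now[0] = 61.0
print(try_acquire(openrouter_limits, fake_clock))  # True: a new minute has started
```

When try_acquire returns False, the caller can wait and retry instead of spending a request that the server would reject.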

Some of the notable models available include:

- DeepSeek R1

- Llama 3.3 70B Instruct

- Mistral 7B Instruct

All available models: Link

Documentation: Link

Advantages

- High request limits.

- A diverse range of models.

Pricing: Free tier available.

Example Code

```python
from openai import OpenAI

client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key="<OPENROUTER_API_KEY>",
)

completion = client.chat.completions.create(
    model="cognitivecomputations/dolphin3.0-r1-mistral-24b:free",
    messages=[
        {
            "role": "user",
            "content": "What is the meaning of life?"
        }
    ]
)

print(completion.choices[0].message.content)
```

Output

The meaning of life is a profound and multifaceted question explored through

diverse lenses of philosophy, religion, science, and personal experience.

Here's a synthesis of key perspectives:

1. **Existentialism**: Philosophers like Sartre argue life has no inherent

meaning. Instead, individuals create their own purpose through actions and

choices, embracing freedom and responsibility.

2. **Religion/Spirituality**: Many traditions offer frameworks where meaning

is found through faith, divine connection, or service to a higher cause. For

example, in Christianity, it might relate to fulfilling God's will.

3. **Psychology/Philosophy**: Viktor Frankl proposed finding meaning through

work, love, and overcoming suffering. Others suggest meaning derives from

personal growth, relationships, and contributing to something meaningful.

...

...

...

2. Google AI Studio

Google AI Studio is a powerful platform for AI model experimentation, offering generous limits for developers. On the free tier it allows up to 1,000,000 tokens per minute and 1,500 requests per day (limits vary by model).

Some models available include:

- Gemini 2.0 Flash

- Gemini 1.5 Flash

All available models: Link

Documentation: Link

Advantages

- Access to powerful models.

- High token limits.

Pricing: Free tier available.

Example Code

```python
from google import genai

client = genai.Client(api_key="YOUR_API_KEY")

response = client.models.generate_content(
    model="gemini-2.0-flash",
    contents="Explain how AI works",
)

print(response.text)
```

Output


Okay, let's break down how AI works, from the high-level concepts to some of

the core techniques. It's a vast field, so I'll try to provide a clear and

accessible overview.

**What is AI, Really?**

At its core, Artificial Intelligence (AI) aims to create machines or systems

that can perform tasks that typically require human intelligence. This

includes things like:

* **Learning:** Acquiring information and rules for using the information

* **Reasoning:** Using information to draw conclusions, make predictions,

and solve problems.

...

...

...

3. Mistral (La Plateforme)

Mistral offers a variety of models for different applications, focusing on high performance. The platform allows 1 request per second and 500,000 tokens per minute. Some models available include:

- mistral-large-2402

- ministral-8b-latest

All available models: Link

Documentation: Link

Advantages

- Generous token limits (500,000 tokens per minute).

- Focus on experimentation.

Pricing: Free tier available.

Example Code

```python
import os

from mistralai import Mistral

api_key = os.environ["MISTRAL_API_KEY"]
model = "mistral-large-latest"

client = Mistral(api_key=api_key)

chat_response = client.chat.complete(
    model=model,
    messages=[
        {
            "role": "user",
            "content": "What is the best French cheese?",  # message keys are lowercase
        },
    ],
)

print(chat_response.choices[0].message.content)
```

Output

The "best" French cheese can be subjective as it depends on personal taste

preferences. However, some of the most famous and highly regarded French

cheeses include:

1. Roquefort: A blue-veined sheep's milk cheese from the Massif Central

region, known for its strong, pungent flavor and creamy texture.

2. Brie de Meaux: A soft, creamy cow's milk cheese with a white rind,

originating from the Brie region near Paris. It is known for its mild,

buttery flavor and can be enjoyed at various stages of ripeness.

3. Camembert: Another soft, creamy cow's milk cheese with a white rind,

similar to Brie de Meaux, but often more pungent and runny. It comes from

the Normandy region.

...

...

...
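Chat endpoints expect every message to carry lowercase "role" and "content" keys, and a near-miss such as "Content" is easy to overlook. A minimal pre-flight check, assuming the common system/user/assistant/tool role set; validate_messages is a hypothetical helper, not part of the Mistral SDK.

```python
VALID_ROLES = {"system", "user", "assistant", "tool"}  # assumed role set


def validate_messages(messages: list) -> list:
    """Return a list of problems found in a chat `messages` list (empty if none)."""
    problems = []
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict):
            problems.append(f"message {i}: expected a dict, got {type(msg).__name__}")
            continue
        for key in ("role", "content"):
            if key not in msg:
                # Point out case-only near misses such as "Content" or "Role"
                near = [k for k in msg if k.lower() == key]
                hint = f" (found {near[0]!r}; keys are case-sensitive)" if near else ""
                problems.append(f"message {i}: missing {key!r}{hint}")
        if "role" in msg and msg["role"] not in VALID_ROLES:
            problems.append(f"message {i}: unknown role {msg['role']!r}")
    return problems


print(validate_messages([{"role": "user", "Content": "What is the best French cheese?"}]))
```

Running the check before each API call turns a confusing server-side validation error into a readable local message.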

4. HuggingFace Serverless Inference

HuggingFace provides a platform for deploying and using various open models. It is limited to models smaller than 10GB and offers variable credits per month.

Some models available include:

- Meta-Llama-3-8B-Instruct

- DistilBERT

All available models: Link

Documentation: Link

Advantages

- Wide range of models.

- Easy integration.

Pricing: Variable credits per month.

Example Code

```python
from huggingface_hub import InferenceClient

client = InferenceClient(
    provider="hf-inference",
    api_key="hf_xxxxxxxxxxxxxxxxxxxxxxxx",
)

messages = [
    {
        "role": "user",
        "content": "What is the capital of Germany?"
    }
]

completion = client.chat.completions.create(
    model="meta-llama/Meta-Llama-3-8B-Instruct",
    messages=messages,
    max_tokens=500,
)

# Prints the whole message object; use .content for just the text
print(completion.choices[0].message)
```

Output

ChatCompletionOutputMessage(role='assistant', content='The capital of Germany

is Berlin.', tool_calls=None)

5. Cerebras

Cerebras provides access to Llama models with a focus on high performance. The platform allows 30 requests per minute and 60,000 tokens per minute.
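A requests-per-minute cap and a tokens-per-minute cap interact: with large requests, the token cap binds first. A minimal sketch of that arithmetic, assuming the TPM cap counts prompt and completion tokens together; the token sizes below are illustrative.

```python
def effective_requests_per_minute(rpm_limit: int, tpm_limit: int, tokens_per_request: int) -> int:
    """Requests per minute you can sustain under both an RPM and a TPM cap."""
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    return min(rpm_limit, tpm_limit // tokens_per_request)


# Cerebras free-tier figures quoted above: 30 RPM, 60,000 TPM
for tokens in (1_000, 2_000, 4_000):
    print(tokens, effective_requests_per_minute(30, 60_000, tokens))
# 1000 30
# 2000 30
# 4000 15
```

Up to 2,000 tokens per request the 30 RPM limit is the ceiling; beyond that the token budget takes over.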

Some models available include:

- Llama 3.1 8B

- Llama 3.3 70B

All available models: Link

Documentation: Link

Advantages

- High request limits.

- Powerful models.

Pricing: Free tier available (join the waitlist).

Example Code

```python
import os

from cerebras.cloud.sdk import Cerebras

client = Cerebras(
    api_key=os.environ.get("CEREBRAS_API_KEY"),
)

chat_completion = client.chat.completions.create(
    messages=[
        {"role": "user", "content": "Why is fast inference important?"}
    ],
    model="llama3.1-8b",
)

# Print the generated answer
print(chat_completion.choices[0].message.content)
```

Output

Fast inference is crucial in various applications because it has several

benefits, including:

1. **Real-time decision making**: In applications where decisions need to be

made in real-time, such as autonomous vehicles, medical diagnosis, or online

recommendation systems, fast inference is essential to avoid delays and

ensure timely responses.

2. **Scalability**: Machine learning models can process a high volume of data

in real-time, which requires fast inference to keep up with the pace. This

ensures that the system can handle large numbers of users or events without

significant latency.

3. **Energy efficiency**: In deployment environments where power consumption

is limited, such as edge devices or mobile devices, fast inference can help

optimize energy usage by reducing the time spent on computations.

...

...

...

6. Groq

Groq offers various models for different applications, allowing 1,000 requests per day and 6,000 tokens per minute (exact limits vary by model).
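At 6,000 tokens per minute, a single long prompt plus a large max_tokens can use most of the budget. A minimal sketch for checking a request before sending it, assuming the rough rule of thumb of about 4 characters per token for English text; real counts depend on each model's tokenizer, and both helpers are hypothetical.

```python
def rough_token_count(text: str) -> int:
    """Heuristic token estimate: about 4 characters per token, rounded up."""
    return max(1, (len(text) + 3) // 4)


def fits_in_minute_budget(prompt: str, max_tokens: int, tpm_limit: int,
                          used_this_minute: int = 0) -> bool:
    """Check whether prompt + requested completion likely fits the remaining TPM budget."""
    needed = rough_token_count(prompt) + max_tokens
    return used_this_minute + needed <= tpm_limit


prompt = "Explain the importance of fast language models"
print(rough_token_count(prompt))
print(fits_in_minute_budget(prompt, max_tokens=1_024, tpm_limit=6_000))
print(fits_in_minute_budget(prompt, max_tokens=1_024, tpm_limit=6_000, used_this_minute=5_500))
```

Because the estimate is approximate, leave some headroom below the limit rather than planning to use it exactly.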

Some models available include:

- DeepSeek R1 Distill Llama 70B

- Gemma 2 9B Instruct

All available models: Link

Documentation: Link

Advantages

- High request limits.

- Diverse model options.

Pricing: Free tier available.

Example Code

```python
import os

from groq import Groq

client = Groq(
    api_key=os.environ.get("GROQ_API_KEY"),
)

chat_completion = client.chat.completions.create(
    messages=[
        {
            "role": "user",
            "content": "Explain the importance of fast language models",
        }
    ],
    model="llama-3.3-70b-versatile",
)

print(chat_completion.choices[0].message.content)
```

Output

Fast language models are crucial for various applications and industries, and

their importance can be highlighted in several ways:

1. **Real-Time Processing**: Fast language models enable real-time processing

of large volumes of text data, which is essential for applications such as:

* Chatbots and virtual assistants (e.g., Siri, Alexa, Google Assistant) that

need to respond quickly to user queries.

* Sentiment analysis and opinion mining in social media, customer feedback,

and review platforms.

* Text classification and filtering in email clients, spam detection, and content moderation.

2. **Improved User Experience**: Fast language models provide instant responses, which is vital for:

* Enhancing user experience in search engines, recommendation systems, and

content retrieval applications.

* Supporting real-time language translation, which is essential for global

communication and collaboration.

* Facilitating quick and accurate text summarization, which helps users to

quickly grasp the main points of a document or article.

3. **Efficient Resource Utilization**: Fast language models:

* Reduce the computational resources required for training and deployment,

making them more energy-efficient and cost-effective.

* Enable the processing of large volumes of text data on edge devices, such

as smartphones, smart home devices, and wearable devices.

...

...

...

7. Scaleway Generative Free API

Scaleway offers a variety of generative models for free, with 100 requests per minute and 200,000 tokens per minute.

Some models available include:

- BGE-Multilingual-Gemma2

- Llama 3.1 70B Instruct

All available models: Link

Documentation: Link

Advantages

- Generous request limits.

- Variety of models.

Pricing: Free beta until March 2025.

Example Code

```python
from openai import OpenAI

# Initialize the client with your base URL and API key
client = OpenAI(
    base_url="https://api.scaleway.ai/v1",
    api_key="<SCW_API_KEY>"
)

# Create a chat completion for Llama 3.1 8B Instruct
completion = client.chat.completions.create(
    model="llama-3.1-8b-instruct",
    messages=[{"role": "user", "content": "Describe a futuristic city with advanced technology and green energy solutions."}],
    temperature=0.7,
    max_tokens=100
)

# Output the result
print(completion.choices[0].message.content)
```

Output

**Luminaria City 2125: A Beacon of Sustainability**

Perched on a coastal cliff, Luminaria City is a marvel of futuristic

architecture and innovative green energy solutions. This self-sustaining

metropolis of the year 2125 is a testament to humanity's ability to engineer

a better future.

**Key Features:**

1. **Energy Harvesting Grid**: A network of piezoelectric tiles covering the

city's streets and buildings generates electricity from footsteps,

vibrations, and wind currents. This decentralized energy system reduces

reliance on fossil fuels and makes Luminaria City nearly carbon-neutral.

2. **Solar Skiescraper**: This 100-story skyscraper features a unique double-

glazed facade with energy-generating windows that amplify solar radiation,

providing up to 300% more illumination and 50% more energy for the city's

homes and businesses.

...

...

...

8. OVH AI Endpoints

OVH provides access to various AI models for free, allowing 12 requests per minute. Some models available include:

- CodeLlama 13B Instruct

- Llama 3.1 70B Instruct

Documentation and all available models: https://endpoints.ai.cloud.ovh.net/

Advantages

- Easy to use.

- Variety of models.

Pricing: Free beta available.

Example Code

```python
import os

from openai import OpenAI

client = OpenAI(
    base_url='https://llama-2-13b-chat-hf.endpoints.kepler.ai.cloud.ovh.net/api/openai_compat/v1',
    api_key=os.getenv("OVH_AI_ENDPOINTS_ACCESS_TOKEN")
)


def chat_completion(new_message: str) -> str:
    # Single-turn conversation in the OpenAI message format
    history_openai_format = [{"role": "user", "content": new_message}]
    return client.chat.completions.create(
        model="Llama-2-13b-chat-hf",
        messages=history_openai_format,
        temperature=0,
        max_tokens=1024
    ).choices[0].message.content


if __name__ == '__main__':
    print(chat_completion("Write a story in the style of James Joyce. The story should be about a trip to the Irish countryside in 2083, to see the beautiful scenery and robots."))
```

Output

Sure, I'd be happy to help! Here's a story in the style of James Joyce, set

in the Irish countryside in 2083: As I stepped off the pod-train and onto

the lush green grass of the countryside, the crisp air filled my lungs and

invigorated my senses. The year was 2083, and yet the rolling hills and

sparkling lakes of Ireland seemed unchanged by the passage of time. The only

difference was the presence of robots, their sleek metallic bodies and

glowing blue eyes a testament to the advancements of technology. I had come

to this place seeking solace and inspiration, to lose myself in the beauty

of nature and the wonder of machines. As I wandered through the hills, I

came across a group of robots tending to a field of crops, their delicate

movements and precise calculations ensuring a bountiful harvest. One of the

robots, a sleek and agile model with wings like a dragonfly, fluttered over

to me and offered a friendly greeting. "Good day, traveler," it said in a

melodic voice. "What brings you to our humble abode?" I explained my desire

to experience the beauty of the Irish countryside, and the robot nodded

sympathetically.

9. Together Free API

Together AI provides API access to a wide range of open LLMs; no specific rate limits are listed for its free tier. Some models available include:

- Llama 3.2 11B Vision Instruct

- DeepSeek R1 Distill Llama 70B

All available models: Link

Documentation: Link

Advantages

- Access to a range of models.

- Streaming responses supported in the Python SDK.

Pricing: Free tier available.

Example Code

```python
from together import Together

# Reads the API key from the TOGETHER_API_KEY environment variable
client = Together()

stream = client.chat.completions.create(
    model="meta-llama/Meta-Llama-3.1-8B-Instruct-Turbo",
    messages=[{"role": "user", "content": "What are the top 3 things to do in New York?"}],
    stream=True,
)

# Print each text fragment as soon as it arrives
for chunk in stream:
    print(chunk.choices[0].delta.content or "", end="", flush=True)
```

Output

The city that never sleeps - New York! There are countless things to see and

do in the Big Apple, but here are the top 3 things to do in New York:

1. **Visit the Statue of Liberty and Ellis Island**: Take a ferry to Liberty

Island to see the iconic Statue of Liberty up close. You can also visit the

Ellis Island Immigration Museum to learn about the history of immigration in

the United States. This is a must-do experience that offers breathtaking

views of the Manhattan skyline.

2. **Explore the Metropolitan Museum of Art**: The Met, as it's

affectionately known, is one of the world's largest and most famous museums.

With a collection that spans over 5,000 years of human history, you'll find

everything from ancient Egyptian artifacts to modern and contemporary art.

The museum's grand architecture and beautiful gardens are also worth

exploring.

3. **Walk across the Brooklyn Bridge**: This iconic bridge offers stunning

views of the Manhattan skyline, the East River, and Brooklyn. Take a

leisurely walk across the bridge and stop at the Brooklyn Bridge Park for

some great food and drink options. You can also visit the Brooklyn Bridge's

pedestrian walkway, which offers spectacular views of the city.

Of course, there are many more things to see and do in New York, but these

three experiences are a great starting point for any visitor.

...

...

...
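When streaming, you often want the complete reply as a single string as well, for logging or follow-up prompts. A minimal sketch that joins the deltas from an OpenAI-style stream, assuming chunks shaped like the ones in the Together example (chunk.choices[0].delta.content); the fake chunks built with SimpleNamespace are hypothetical stand-ins for offline testing.

```python
from types import SimpleNamespace


def collect_stream(chunks) -> str:
    """Join the text deltas from an OpenAI-style streaming response."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:  # some streams send chunks without choices
            continue
        delta_text = chunk.choices[0].delta.content
        if delta_text:  # content can be None on role-only or final chunks
            parts.append(delta_text)
    return "".join(parts)


def fake_chunk(text):
    # Hypothetical stand-in for one streamed chunk
    return SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content=text))])


fake_stream = [fake_chunk(None), fake_chunk("The city "), fake_chunk("that never sleeps"),
               SimpleNamespace(choices=[])]
print(collect_stream(fake_stream))  # The city that never sleeps
```

With a real client, pass the stream object returned by chat.completions.create(..., stream=True) instead of fake_stream.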

10. GitHub Models – Free API

GitHub offers a collection of various AI models, with rate limits dependent on the subscription tier.

Some models available include:

- AI21 Jamba 1.5 Large

- Cohere Command R

Documentation and all available models: Link

Advantages

- Access to a wide range of models.

- Integration with GitHub.

Pricing: Free with a GitHub account.

Example Code

```python
import os

from openai import OpenAI

token = os.environ["GITHUB_TOKEN"]
endpoint = "https://models.inference.ai.azure.com"
model_name = "gpt-4o"

client = OpenAI(
    base_url=endpoint,
    api_key=token,
)

response = client.chat.completions.create(
    messages=[
        {
            "role": "system",
            "content": "You are a helpful assistant.",
        },
        {
            "role": "user",
            "content": "What is the capital of France?",
        }
    ],
    temperature=1.0,
    top_p=1.0,
    max_tokens=1000,
    model=model_name
)

print(response.choices[0].message.content)
```

Output

The capital of France is **Paris**.

11. Fireworks AI – Free API

Fireworks offers a range of powerful AI models, with serverless inference of up to 6,000 requests per minute (RPM) and 2.5 billion tokens per day.

Some models available include:

- Llama-v3p1-405b-instruct

- deepseek-r1

All available models: Link

Documentation: Link

Advantages

- Cost-effective customization

- Fast Inferencing.

Pricing: $1 in free credits is available.

Example Code

```python
from fireworks.client import Fireworks

client = Fireworks(api_key="<FIREWORKS_API_KEY>")

response = client.chat.completions.create(
    model="accounts/fireworks/models/llama-v3p1-8b-instruct",
    messages=[{
        "role": "user",
        "content": "Say this is a test",
    }],
)

print(response.choices[0].message.content)
```

Output

I'm ready for the test! Please go ahead and provide the questions or prompt

and I'll do my best to respond.

12. Cloudflare Workers AI

Cloudflare Workers AI gives you serverless access to LLMs, embeddings, image, and audio models. It includes a free allocation of 10,000 Neurons per day (Neurons are Cloudflare’s unit for GPU compute), and limits reset daily at 00:00 UTC.
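Since the daily Neuron allocation resets at 00:00 UTC, a job that runs out of free usage can compute how long to wait before resuming. A minimal sketch using only the standard library; seconds_until_daily_reset is a hypothetical helper and expects a timezone-aware datetime.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def seconds_until_daily_reset(now: Optional[datetime] = None) -> int:
    """Seconds until the next 00:00 UTC (pass a timezone-aware datetime)."""
    now = (now or datetime.now(timezone.utc)).astimezone(timezone.utc)
    next_midnight = (now + timedelta(days=1)).replace(hour=0, minute=0, second=0, microsecond=0)
    return int((next_midnight - now).total_seconds())


print(seconds_until_daily_reset(datetime(2025, 1, 1, 23, 0, tzinfo=timezone.utc)))  # 3600
```

Converting to UTC first matters: 12:00 in a UTC+2 zone is 10:00 UTC, so the reset is 14 hours away, not 12.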

Some models available include:

- @cf/meta/llama-3.1-8b-instruct

- @cf/mistral/mistral-7b-instruct-v0.1

- @cf/baai/bge-m3 (embeddings)

- @cf/black-forest-labs/flux-1-schnell (image)

All available models: Link

Documentation: Link

Advantages

- Free daily usage for quick prototyping

- OpenAI-compatible endpoints for chat completions and embeddings

- Big model catalog across tasks (LLM, embeddings, image, audio)

Pricing: Free tier available (10,000 Neurons/day). Pay-as-you-go above that on Workers Paid.

Example Code

```python
import requests
from IPython.display import Markdown

ACCOUNT_ID = "YOUR_CLOUDFLARE_ACCOUNT_ID"
API_TOKEN = "YOUR_CLOUDFLARE_API_TOKEN"

response = requests.post(
    f"https://api.cloudflare.com/client/v4/accounts/{ACCOUNT_ID}/ai/v1/responses",
    headers={"Authorization": f"Bearer {API_TOKEN}"},
    json={
        "model": "@cf/openai/gpt-oss-120b",
        "input": "Tell me all about PEP-8"
    }
)
response.raise_for_status()  # fail loudly on authentication or quota errors

result = response.json()

# Render the answer as Markdown in a Jupyter notebook
Markdown(result["output"][1]["content"][0]["text"])
```

13. NVIDIA NIM APIs / build.nvidia.com inference endpoints

NVIDIA’s API Catalog (build.nvidia.com) provides access to many NIM-powered model endpoints. NVIDIA states that Developer Program members get free access to NIM API endpoints for prototyping, and the API Catalog is a trial experience with rate limits that vary per model (you can check limits in your build.nvidia.com account UI).

Some models available include:

- deepseek-ai/deepseek-r1

- ai21labs/jamba-1.5-mini-instruct

- google/gemma-2-9b-it

- nvidia/llama-3.1-nemotron-nano-vl-8b-v1

All available models: Link

Documentation: Link

Advantages

- OpenAI-compatible chat completions API

- Large catalog for evaluation and prototyping

- Clear note on prototyping vs production licensing (AI Enterprise for production use)

Pricing: Free prototyping access via NVIDIA Developer Program; production use requires appropriate licensing.

Example Code

from openai import OpenAI

client = OpenAI(

base_url = "https://integrate.api.nvidia.com/v1",

api_key="YOUR_NVIDIA_API_KEY"

)

completion = client.chat.completions.create(

model="deepseek-ai/deepseek-v3.2",

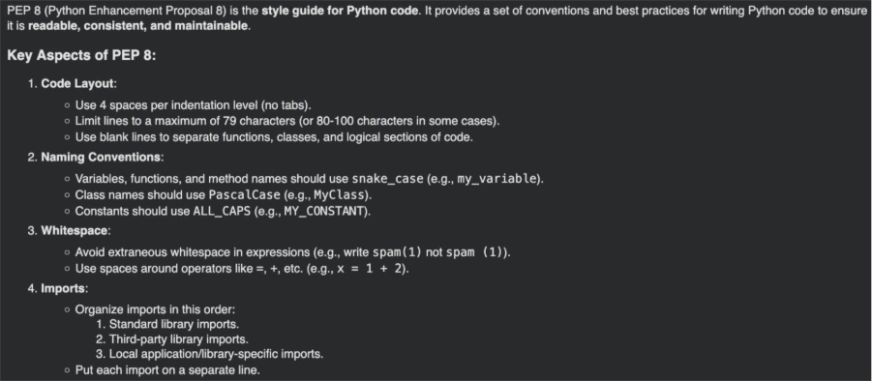

messages=[{"role":"user","content":"WHat is PEP-8"}],

temperature=1,

top_p=0.95,

max_tokens=8192,

extra_body={"chat_template_kwargs": {"thinking":True}},

stream=True

)

for chunk in completion:

if not getattr(chunk, "choices", None):

continue

reasoning = getattr(chunk.choices[0].delta, "reasoning_content", None)

if reasoning:

print(reasoning, end="")

if chunk.choices[0].delta.content is not None:

print(chunk.choices[0].delta.content, end="")Output

14. Cohere

Cohere provides a free evaluation/trial key experience, but trial keys are rate-limited. Cohere’s docs list trial limits like 1,000 API calls per month and per-endpoint request limits.
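With a cap such as 1,000 API calls per month, it helps to count calls locally so you notice before the trial key starts failing. A minimal in-memory sketch, assuming the limit resets on calendar-month boundaries (check Cohere's docs for the exact reset rules); MonthlyCallBudget is a hypothetical helper, and its counts are lost when the process exits.

```python
from collections import Counter
from datetime import date
from typing import Optional


class MonthlyCallBudget:
    """Track API calls per calendar month against a fixed monthly cap."""

    def __init__(self, monthly_limit: int):
        self.monthly_limit = monthly_limit
        self.calls = Counter()  # keys like "2025-03"

    def _key(self, day: Optional[date]) -> str:
        day = day or date.today()
        return f"{day.year:04d}-{day.month:02d}"

    def remaining(self, day: Optional[date] = None) -> int:
        return self.monthly_limit - self.calls[self._key(day)]

    def try_call(self, day: Optional[date] = None) -> bool:
        """Record one call if the month's budget allows it."""
        key = self._key(day)
        if self.calls[key] >= self.monthly_limit:
            return False
        self.calls[key] += 1
        return True


budget = MonthlyCallBudget(1_000)
budget.try_call(date(2025, 3, 10))
print(budget.remaining(date(2025, 3, 31)))  # 999
print(budget.remaining(date(2025, 4, 1)))   # 1000: a new month starts fresh
```

For longer-running projects, persist the counter (for example to a file or database) so it survives restarts.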

Some models available include:

- Command A

- Command R

- Command R+

- Embed v3 (embeddings)

- Rerank models

All available models: Link

Documentation: Link

Advantages

- Strong chat models (Command family) plus embeddings and rerank for RAG/search

- Simple Python SDK setup (ClientV2)

- Clear published trial limits for predictable testing

Pricing: Free trial/evaluation access available (rate-limited), paid plans for higher usage.

Example Code

```python
import cohere
from IPython.display import Markdown

co = cohere.ClientV2("YOUR_COHERE_API_KEY")

response = co.chat(
    model="command-a-03-2025",
    messages=[{"role": "user", "content": "Tell me about PEP8"}],
)

# Render the reply as Markdown in a Jupyter notebook
Markdown(response.message.content[0].text)
```

15. AI21 Labs

AI21 offers a free trial that includes $10 in credits for up to 3 months (no credit card required, per their pricing page). Their foundation models include Jamba variants, and their published rate limits for foundation models are 10 RPS and 200 RPM (Jamba Large and Jamba Mini).

Some models available include:

- jamba-mini

- jamba-large

All available models: Link

Documentation: Link

Advantages

- Clear free-trial credits to experiment without payment details

- Straightforward SDK + REST endpoint for chat completions

- Published per-model rate limits for predictable load testing

Pricing: Free trial credits available; paid usage after credits are consumed.

Example Code

```python
from ai21 import AI21Client
from ai21.models.chat import ChatMessage
from IPython.display import Markdown

messages = [
    ChatMessage(role="user", content="What is PEP8?"),
]

client = AI21Client(api_key="YOUR_API_KEY")

result = client.chat.completions.create(
    messages=messages,
    model="jamba-large",
    max_tokens=1024,
)

# Render the reply as Markdown in a Jupyter notebook
Markdown(result.choices[0].message.content)
```

Benefits of Using Free APIs

Here are some of the benefits of using free APIs:

- Accessibility: No need for deep AI expertise or infrastructure investment.

- Customization: Some platforms, such as Fireworks, support customizing models for specific tasks or domains.

- Scalability: When you outgrow a free tier, most providers let you move to paid plans with higher limits through the same API.

Tips for Efficient Use of Free APIs

Here are some tips for making efficient use of free APIs and working around their limitations:

- Choose the Right Model: Start with simpler models for basic tasks and scale up as needed.

- Monitor Usage: Use dashboards to track token consumption and set spending limits.

- Optimize Tokens: Craft concise prompts to minimize token usage while still achieving desired outcomes.
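Free tiers return rate-limit errors (HTTP 429) sooner or later, so it pays to retry with exponential backoff instead of failing immediately. A minimal sketch, assuming a hypothetical RateLimitError class; with the openai Python SDK you would catch openai.RateLimitError instead, and other SDKs define their own exception types.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for a provider's HTTP 429 error type."""


def with_backoff(call, max_retries=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Run `call()`, retrying on RateLimitError with exponential backoff and jitter."""
    for attempt in range(max_retries + 1):
        try:
            return call()
        except RateLimitError:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter spreads retries out so clients don't retry in lockstep
            sleep(random.uniform(0, delay))


attempts = {"n": 0}


def flaky_call():
    # Simulated API call that is rate-limited twice before succeeding
    attempts["n"] += 1
    if attempts["n"] < 3:
        raise RateLimitError("429 Too Many Requests")
    return "ok"


print(with_backoff(flaky_call, sleep=lambda s: None))  # ok
```

Injecting sleep keeps the demo instant; in real use, leave the default so the waits actually happen.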


Conclusion

With the availability of these free APIs, developers and businesses can easily integrate advanced AI capabilities into their applications without significant upfront costs. By leveraging these resources, you can enhance user experiences, automate tasks, and drive innovation in your projects. Start exploring these APIs today and unlock the potential of AI in your applications.

Frequently Asked Questions

Q. What is an LLM API?

A. An LLM API allows developers to access large language models via HTTP requests, enabling tasks like text generation, summarization, and reasoning without hosting the model themselves.

Q. Are free LLM APIs suitable for production?

A. Free LLM APIs are ideal for learning, prototyping, and small-scale applications. For production workloads, paid tiers usually offer higher reliability and limits.

Q. Which free LLM APIs are the most popular?

A. Popular options include OpenRouter, Google AI Studio, Hugging Face Inference, Groq, and Cloudflare Workers AI, depending on use case and rate limits.

Q. Can I build a chatbot with a free LLM API?

A. Yes. Many free LLM APIs support chat completions and are suitable for building chatbots, assistants, and internal tools.