OpenAI finally dropped their agent, chasing Sam Altman’s AGI vision from earlier this year. But they’re late to the party. General-purpose agents are already everywhere, doubling as personal assistants. I’ve been using Manus for quite some time for tasks like sorting data, crafting presentations, simplifying course content, taking notes, and more. It has streamlined my work like a dream. Naturally, I was curious to see how OpenAI’s new ChatGPT Agent stacks up, so I put it head-to-head with Manus. Here’s a no-nonsense breakdown of how they performed.


Manus vs ChatGPT Agent: Pricing

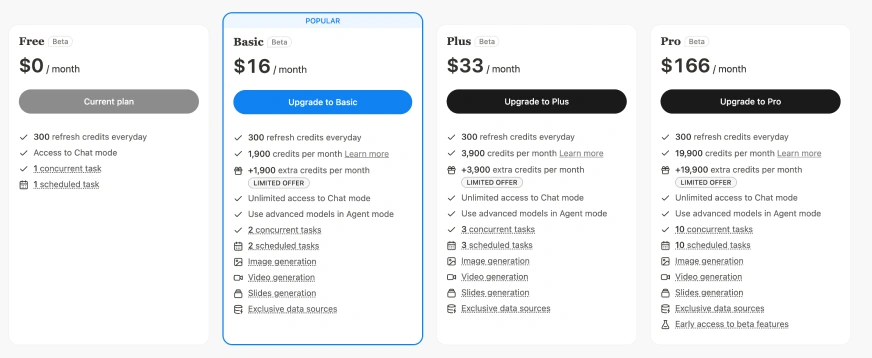

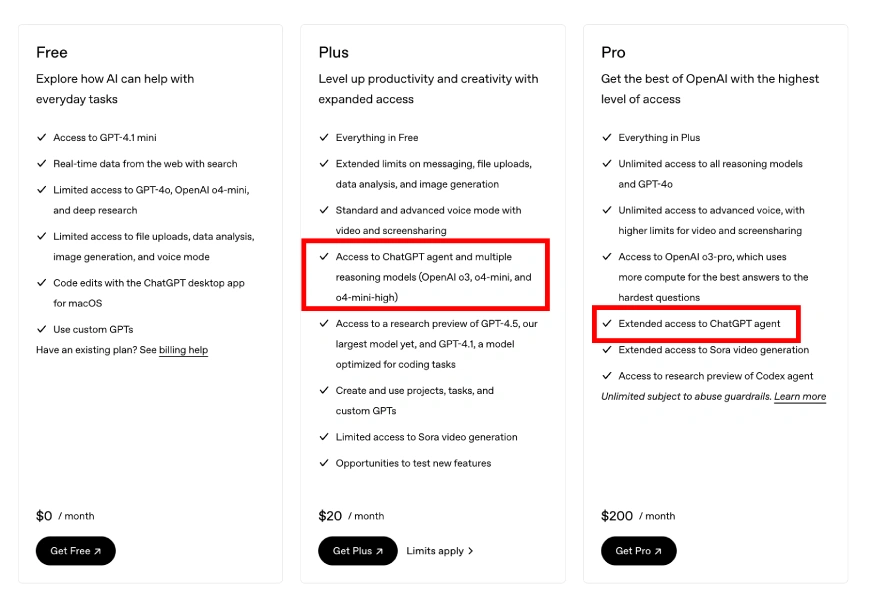

ChatGPT Plus, at $20/month, provides extended (though not unlimited) access to the ChatGPT Agent, along with GPT-4.1 mini, web browsing, and advanced voice capabilities. Manus, on the other hand, offers a Free Beta plan with 300 daily refresh credits and 1 concurrent task: enough for light experimentation, but easy to exhaust. The Manus Basic Beta plan, priced at $16/month, raises the limit to 1,900 credits and 2 concurrent tasks. While it’s slightly cheaper than ChatGPT Plus, heavy workflows can burn through credits quickly, which might cut long sessions or complex agent tasks short.

Manus AI

ChatGPT Agent

If you don’t know what ChatGPT Agent is, check out our detailed blog: ChatGPT Agent has Arrived and it’s so Cool!

Now that pricing is sorted, let’s test both agents on the same prompts and see which one performs better. For this, I am using the free plan of Manus and a ChatGPT Plus account.

Task 1: Creating a PPT

Prompt: “Create a concise, data-driven PowerPoint presentation (10-12 slides) on “Career and Salary Growth in Generative AI” for professionals entering or advancing in the field, covering – a title slide (“Career and Salary Growth in Generative AI,” subtitle: Opportunities & Trends, name, date); an intro defining Generative AI, its tech (LLMs, GANs, Diffusion Models), and applications (ChatGPT, Midjourney); market growth ($50B+ by 2025), adoption (tech, healthcare, finance), and AI startup funding; key roles (AI Research Scientist, ML Engineer, NLP Engineer, Prompt Engineer, AI Product Manager) with skills; salary trends (2024-2025, e.g., $80K-$300K US, ₹20L-₹80L India) vs. traditional AI roles; top employers (Google, OpenAI, Anthropic, healthcare/gaming); essential skills (Python, PyTorch, creativity, certifications); future trends (AI ethics, remote work); challenges (evolving tech, competition) with solutions; and a roadmap (learning, portfolio, networking). Use a modern template (dark/light, AI visuals), charts (salaries/market), icons, infographics, minimal text, and bullet points for clarity.”

ChatGPT Agent:

Manus:

Observation:

Manus delivered a more detailed and visually appealing presentation, whereas the ChatGPT Agent fell short in both content and design, making Manus the clear winner for this task.

Task 2: Data Analysis and Reporting

Prompt: “Collect reliable datasets on global warming, including key metrics such as:

- Global temperature anomalies (yearly averages)

- Atmospheric CO2 levels (ppm)

- Sea level rise (mm/year)

- Arctic ice extent (monthly averages)

Use reputable sources like NASA (https://climate.nasa.gov), NOAA (https://www.ncdc.noaa.gov), or the IPCC. Clean and preprocess the data if needed. Then, generate visualizations to highlight trends, such as:

- A line graph showing global temperature changes over time (e.g., 1880–present).

- A dual-axis plot comparing CO2 levels and temperature anomalies.

- A bar or line chart showing Arctic ice melt over decades.

- A map-based visualization of regional temperature variations.”

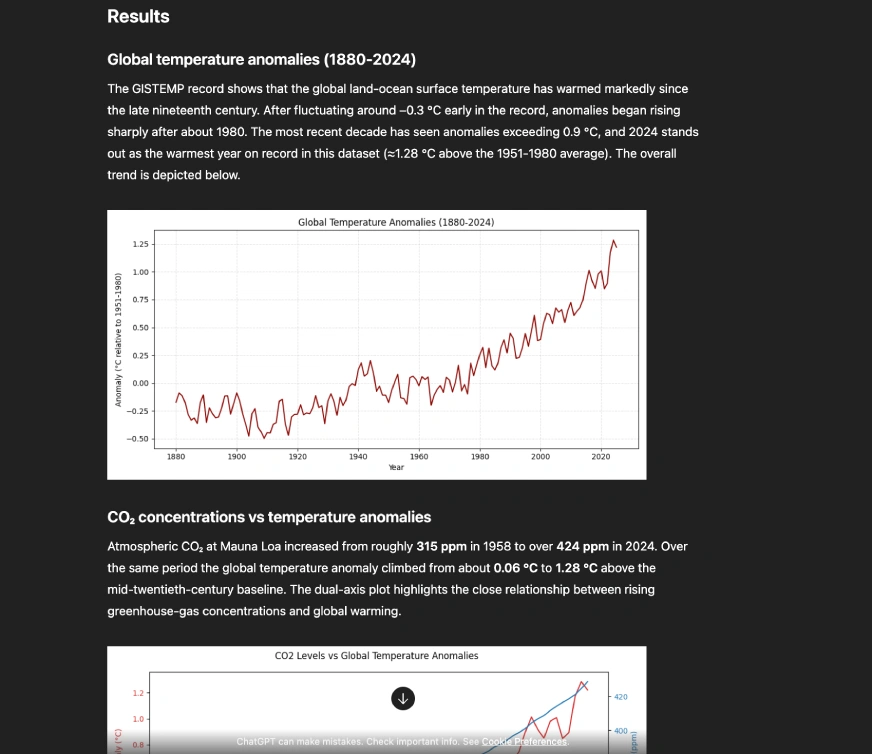

ChatGPT Agent:

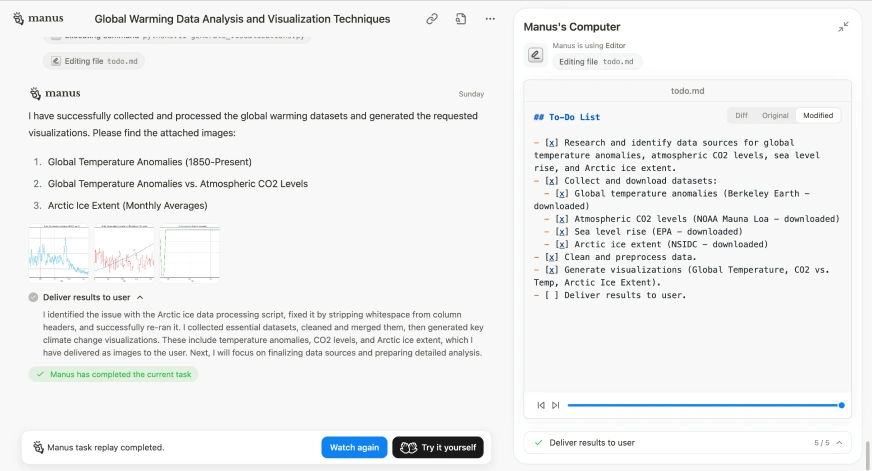

Manus:

Observation:

Manus provided only the graphs, without any explanation of what each graph represents or what insights to draw from it. In contrast, the ChatGPT Agent included an overview for each plot, explaining what it shows, the insights it offers, and the data behind it.

For this task, the ChatGPT Agent clearly takes the win!

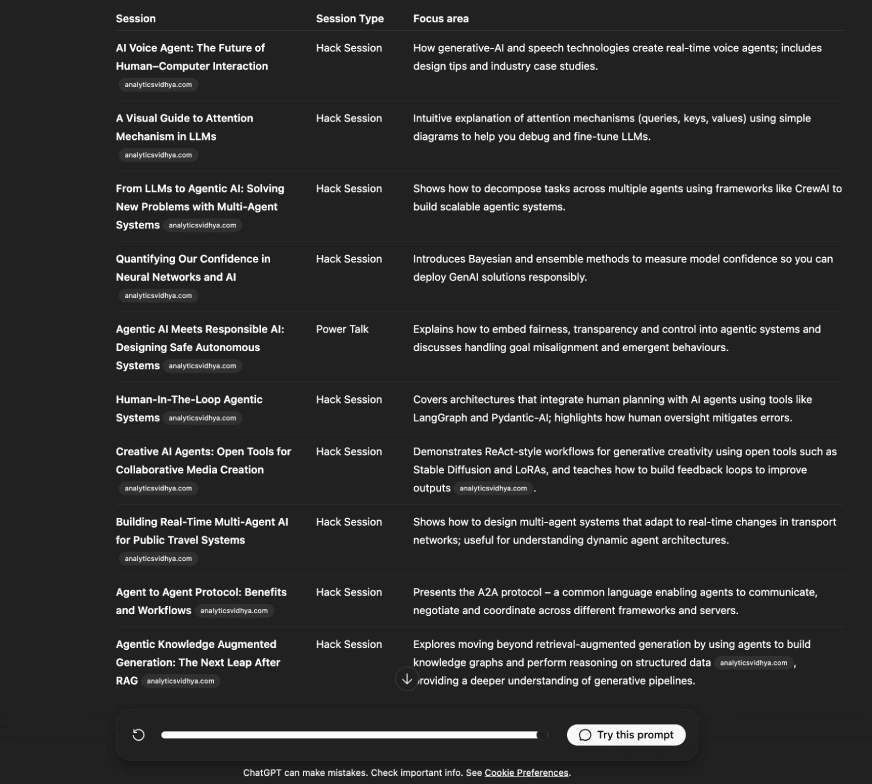

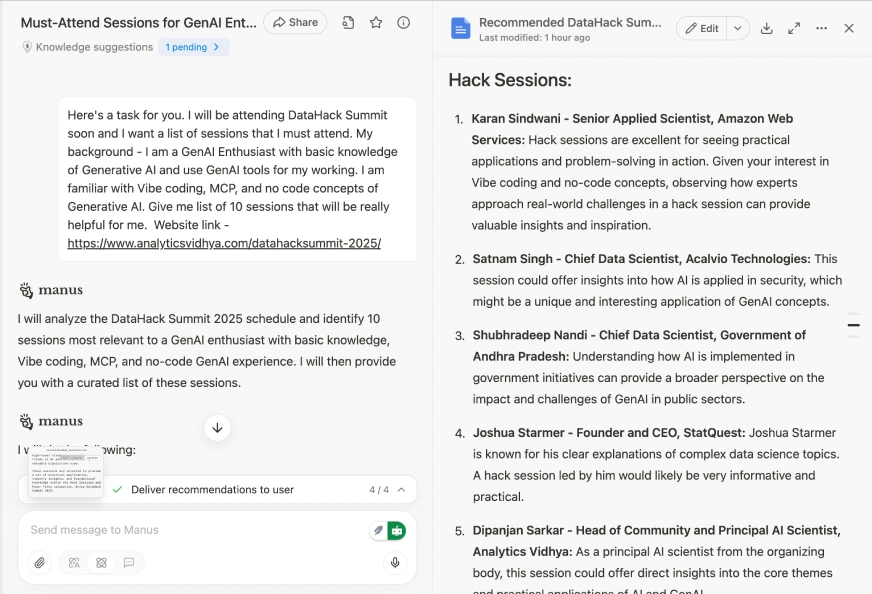

Task 3: Make my DHS Session Schedule

Prompt: “I’m attending DataHack Summit 2025 and need help picking the best sessions for my skill level. I’m a GenAI enthusiast with basic knowledge – I use AI tools daily and understand Vibe coding, MCP, and no-code concepts. Could you review the summit schedule here and suggest 10 must-attend sessions? I’m looking for: beginner-to-intermediate GenAI content (like prompt engineering or model fine-tuning), no-code/low-code demos, real-world case studies, emerging trends (multimodal AI, agentic workflows), and preferably some hands-on workshops. For each suggestion, please include why it’s relevant to me. Thanks!”

ChatGPT Agent:

Manus:

Observation:

Both tools were able to scan the website, but their approaches differed significantly. Manus organized its suggestions by speaker rather than by the hack sessions themselves. That was unhelpful, since the priority should have been the sessions, not the speakers.

Meanwhile, the ChatGPT Agent did list the hack sessions, but the output was underwhelming. Many of the suggested sessions were highly technical and didn’t match the beginner-to-intermediate level I asked for in the prompt.

In the end, neither model succeeded in creating a workable plan for me.

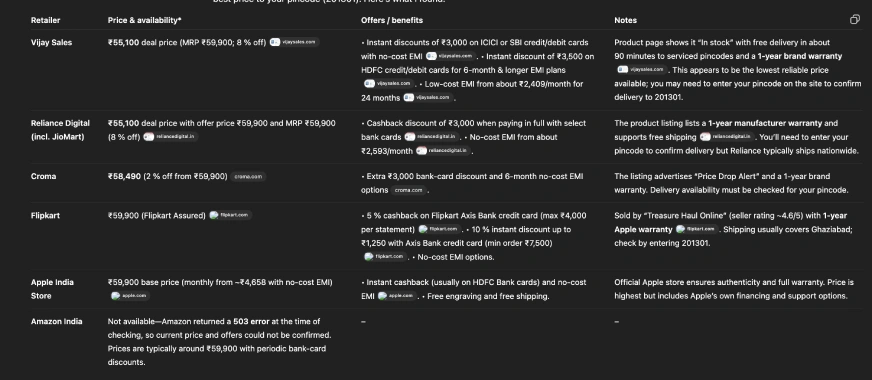

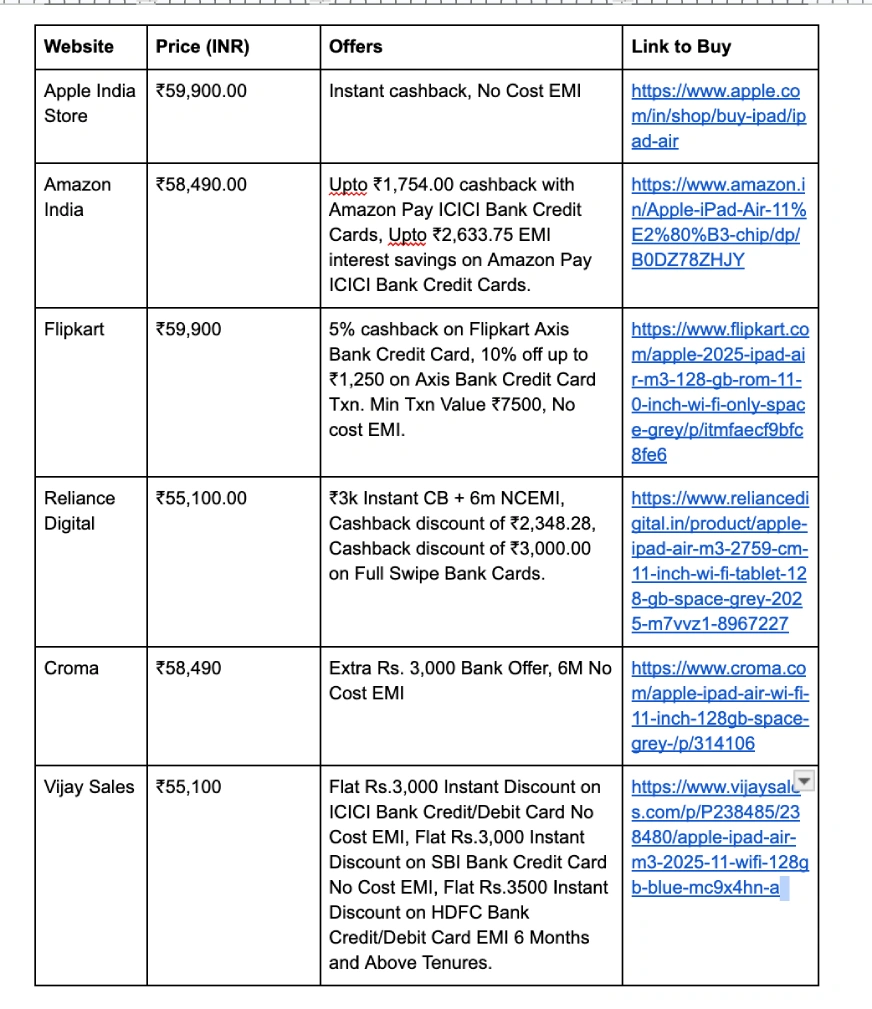

Task 4: Shopping Support

Prompt: “I want to buy the new iPad Air (M3 model) in India and need help finding the best deal. My delivery pincode is 201301. Please check major platforms like Amazon, Flipkart, Apple India Store, Croma, Vijay Sales, and Reliance Digital for the lowest price. Include any ongoing bank discounts, cashback offers, or exchange deals in the comparison. Also verify seller authenticity and warranty coverage. Focus on finding the cheapest reliable option with fast delivery to my location. Let me know if you need any other details.”

ChatGPT Agent:

Manus:

Observation:

Both Manus and the ChatGPT Agent provided accurate model specifications and pricing, along with purchase links. However, Manus delivered a cleaner output with a single, clear link, while the ChatGPT Agent included multiple links, making it harder to identify the right one for placing an order. On top of that, some of the ChatGPT Agent’s links did not work.

So for this task, Manus is the clear winner.

Final Verdict

| Task | ChatGPT Agent | Manus |
|---|---|---|
| Creating a PPT | Fail | Pass |
| Data Analysis and Reporting | Pass | Fail |
| Make my DHS Session Schedule | Fail | Fail |
| Shopping Support | Fail | Pass |

Conclusion

In this head-to-head comparison, Manus comes out as the more reliable agent for general-purpose tasks, especially if you take the time to craft your prompts well. It consistently delivers cleaner outputs, is easier to navigate, and has been around long enough to iron out some of the rough edges that still show in OpenAI’s newly launched ChatGPT Agent. While the ChatGPT Agent shows promise, particularly in reasoning and contextual understanding, it lacks polish in execution and still feels early-stage in terms of usability. That said, OpenAI is likely to iterate quickly, and future updates could significantly improve its performance. For now, if you’re looking for a more mature, practical agent to help with everyday workflows, Manus has the edge. You can also check out my previous articles on Manus:

Do try these agents on your own and let me know your thoughts in the comment section below.