The agentic AI sector is booming, valued at over $5.2 billion and projected to reach $200 billion by 2034. We’re entering an era where AI will be as commonplace as the internet, but there’s a critical flaw in its foundation: today’s AI agents rely almost entirely on massive, power-hungry large language models (LLMs). Their near-human capabilities are impressive, but for the narrow, repetitive tasks most agents perform they are often overkill, like using a sledgehammer to crack a nut. The result is sky-high costs, wasted energy, and stifled innovation, which are exactly the problems small language models (SLMs) are positioned to address.

But there’s a better way. NVIDIA’s research paper, “Small Language Models Are the Future of Agentic AI,” reveals how SLMs (Small Language Models) offer a smarter, more sustainable path forward. Let’s dive into why smaller is often better and how SLMs are reshaping AI’s future.

Why SLMs?

The future isn’t about brute-force scale, it’s about right-sized intelligence.

– NVIDIA Research Paper

Before we understand why SLMs are the right choice, let’s first understand what exactly an SLM is. The paper defines it as a language model that can fit on a common consumer electronic device and perform inference with a low enough latency to be practical for a single user’s agentic requests. As of 2025, this generally includes models with under 10 billion parameters.
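To make the "fits on a consumer device" criterion concrete, here is a rough back-of-the-envelope sketch. It assumes weight memory is simply parameter count times bytes per parameter, and it ignores the KV cache, activations, and runtime overhead, so real requirements will be higher. The function name and the precision table are illustrative and do not come from the paper.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Assumption: KV cache, activations, and runtime overhead are ignored.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory needed for model weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in [("7B model", 7e9), ("70B model", 70e9)]:
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: ~{gb:.1f} GB of weights")
```

Under these assumptions, a 7-billion-parameter model needs roughly 14 GB of weights at 16-bit precision and about 3.5 GB at 4-bit, which is within reach of a single consumer GPU, while a 70-billion-parameter model at 16-bit needs around 140 GB.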

The authors of the paper argue that SLMs are not just a viable alternative to LLMs; they are a superior one in many cases. They lay out a compelling case, based on three key pillars:

- SLMs are powerful enough

- SLMs are more economical

- SLMs are more flexible

Let’s break down each of these arguments.

The Surprising “Power” of SLMs

It’s easy to dismiss SLMs as less capable than their larger counterparts. After all, the “bigger is better” mantra has been a driving force in the AI world for years. But recent advances show that parameter count alone no longer determines capability.

Well-designed SLMs are now capable of meeting or even exceeding the performance of much larger models on a wide range of tasks. The paper highlights several examples of this, including:

- Microsoft’s Phi series: The Phi-2 model, with just 2.7 billion parameters, achieves commonsense reasoning and code generation scores on par with 30-billion-parameter models, while running about 15 times faster. The Phi-3 small model (7 billion parameters) is even more impressive, with language understanding, commonsense reasoning, and code generation scores that rival models up to 10 times its size.

- NVIDIA’s Nemotron-H family: These hybrid Mamba-Transformer models, with sizes ranging from 2 to 9 billion parameters, achieve instruction following and code-generation accuracy comparable to dense 30 billion parameter LLMs, at a fraction of the inference cost.

- Hugging Face’s SmolLM2 series: This family of compact language models, ranging from 125 million to 1.7 billion parameters, can match the performance of 14 billion parameter models of the same generation and even 70 billion parameter models from two years prior.

These are just a few examples, but the message is clear: when it comes to performance, size isn’t everything. With modern training techniques, prompting, and agentic augmentation, SLMs can pack a serious punch.

The “Economic” Case for Going Small

This is where the argument for SLMs gets really compelling. In a world where every dollar counts, the economic advantages of SLMs are simply too big to ignore.

- Inference Efficiency: Serving a 7 billion parameter SLM is 10 to 30 times cheaper than serving a 70 to 175 billion parameter LLM, in terms of latency, energy consumption, and FLOPs. This means you can get real-time agentic responses at scale, without breaking the bank.

- Fine-tuning Agility: Need to add a new behavior or fix a bug? With an SLM, you can do it in a matter of hours, not weeks. This allows for rapid iteration and adaptation, which is crucial in today’s fast-paced world.

- Edge Deployment: SLMs can run on consumer-grade GPUs, which means you can have real-time, offline agentic inference with lower latency and stronger data control. This opens up a whole new world of possibilities for on-device AI.

- Modular System Design: Instead of relying on a single, monolithic LLM, you can use a combination of smaller, specialized SLMs to handle different tasks. This “Lego-like” approach is cheaper, faster to debug, easier to deploy, and better aligned with the operational diversity of real-world agents.

When you add it all up, the economic case for SLMs is overwhelming. They’re cheaper, faster, and more efficient than their larger counterparts, making them the smart choice for any organization that wants to build cost-effective, modular, and sustainable AI agents.
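As a worked example of the inference-efficiency claim, the sketch below applies the 10 to 30 times ratio quoted above to a monthly LLM serving bill. The dollar figure is a hypothetical input chosen for round numbers, not a figure from the paper, and the helper name is illustrative.

```python
from typing import Tuple


def slm_cost_range(
    llm_cost: float, min_ratio: float = 10.0, max_ratio: float = 30.0
) -> Tuple[float, float]:
    """Return the (low, high) SLM cost implied by an LLM cost and a savings ratio range."""
    return llm_cost / max_ratio, llm_cost / min_ratio


# Hypothetical monthly LLM serving bill in dollars (assumption for illustration).
monthly_llm_cost = 30_000.0

low, high = slm_cost_range(monthly_llm_cost)
print(f"Estimated SLM serving cost: ${low:,.0f} to ${high:,.0f} per month")
```

With these inputs the estimate is $1,000 to $3,000 per month. This is only arithmetic on the quoted ratio; actual savings depend on hardware, batching, utilization, and how much of the workload an SLM can really take over.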

Why One “Size” Doesn’t Fit All

The world is not a one-size-fits-all place, and neither are the tasks we’re asking AI agents to perform. This is where the flexibility of SLMs really shines.

Because they’re smaller and cheaper to train, you can create multiple specialized expert models for different agentic routines. This allows you to:

- Adapt to evolving user needs: Need to support a new behavior or output format? No problem. Just fine-tune a new SLM.

- Comply with changing regulations: With SLMs, you can easily adapt to new regulations in different markets, without having to retrain a massive, monolithic model.

- Democratize AI: By lowering the barrier to entry, SLMs can help to democratize AI, allowing more people and organizations to participate in the development of language models. This will lead to a more diverse and innovative AI ecosystem.
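The idea of several specialized expert models can be sketched as a simple dispatch table. Everything here is hypothetical: the handler functions are stubs standing in for fine-tuned SLMs, the task labels are invented, and the fallback to a generalist LLM for unrecognized tasks is an assumption rather than something the article prescribes.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


# Stub handlers standing in for fine-tuned specialist SLMs (hypothetical).
def extraction_slm(prompt: str) -> str:
    return f"[extraction-slm] {prompt}"


def codegen_slm(prompt: str) -> str:
    return f"[codegen-slm] {prompt}"


# Stub for a larger generalist model used only as a fallback (assumption).
def generalist_llm(prompt: str) -> str:
    return f"[fallback-llm] {prompt}"


SPECIALISTS: Dict[str, Handler] = {
    "extract": extraction_slm,
    "code": codegen_slm,
}


def route(task_type: str, prompt: str) -> str:
    """Send the prompt to the specialist for task_type, or to the fallback model."""
    handler = SPECIALISTS.get(task_type, generalist_llm)
    return handler(prompt)


print(route("code", "Write a function that reverses a string"))
print(route("chat", "What should I cook tonight?"))
```

Supporting a new behavior or a market-specific variant then amounts to registering one more entry in `SPECIALISTS`, leaving the other models untouched.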

The Road Ahead: Overcoming the Barriers to Adoption

If the case for SLMs is so strong, why are we still so obsessed with LLMs? The paper identifies three main barriers to adoption:

- Upfront investment: The AI industry has already invested billions of dollars in centralized LLM inference infrastructure, and it’s not going to abandon that investment overnight.

- Generalist benchmarks: The AI community has historically focused on generalist benchmarks, which have led to a bias towards larger, more general-purpose models.

- Lack of awareness: SLMs simply don’t get the same level of marketing and press attention as LLMs, which means many people are simply unaware of their potential.

But these are not insurmountable obstacles. As the economic benefits of SLMs become more widely known, and as new tools and infrastructure are developed to support them, we can expect to see a gradual shift away from LLMs and towards a more SLM-centric approach.

The LLM-to-SLM Conversion Algorithm

The paper even provides a roadmap for making this transition: a six-step algorithm for converting agentic applications from LLMs to SLMs.

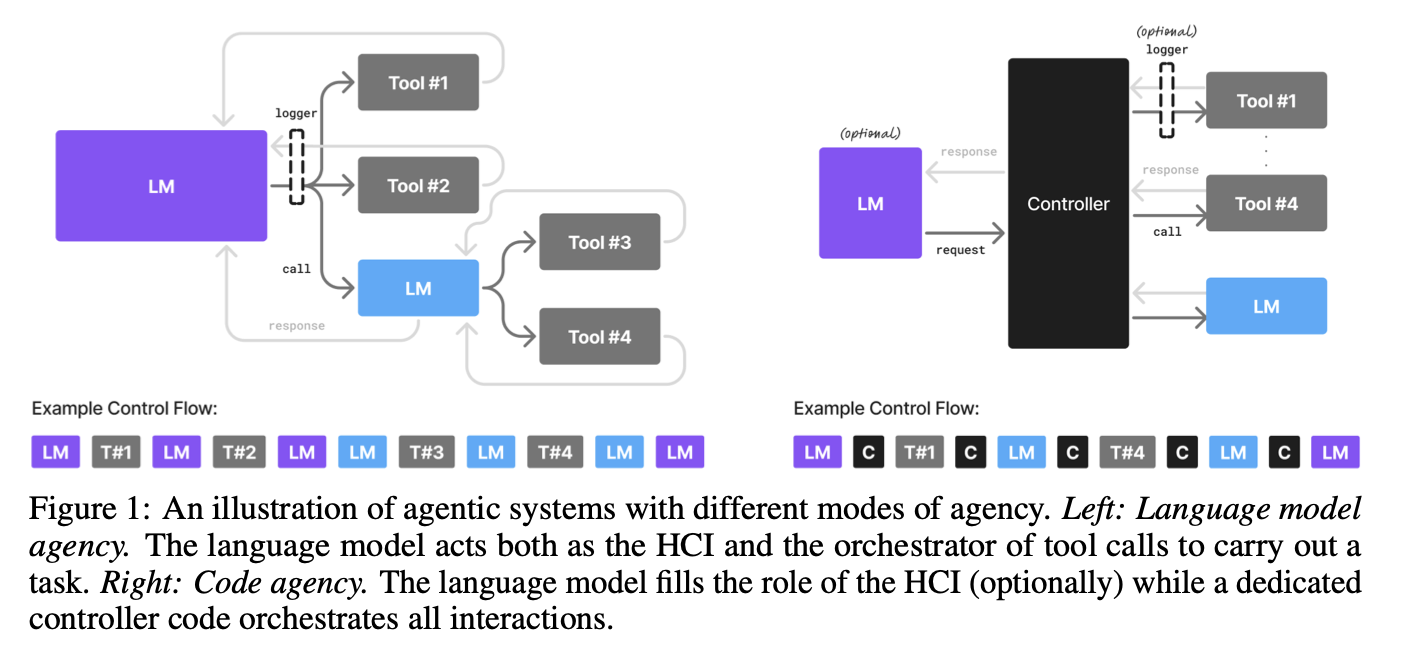

- Secure usage data collection: Log all non-HCI agent calls (calls that are not direct human-computer interaction) to capture input prompts, output responses, and other relevant data.

- Data curation and filtering: Remove any sensitive data and prepare the data for fine-tuning.

- Task clustering: Identify recurring patterns of requests or internal agent operations to define candidate tasks for SLM specialization.

- SLM selection: Choose the best SLM for each task, based on its capabilities, performance, licensing, and deployment footprint.

- Specialized SLM fine-tuning: Fine-tune the chosen SLMs on the task-specific datasets.

- Iteration and refinement: Continuously retrain the SLMs and the router model (the component that dispatches each request to the appropriate model) with new data to maintain performance and adapt to evolving usage patterns.

This is a practical, actionable plan that any organization can use to start reaping the benefits of SLMs today.
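The first three steps above can be sketched in a few lines of standard-library Python. This is a toy illustration under loud assumptions: the logged records are invented, redaction only covers email addresses via a simple regular expression, and "clustering" is just grouping by the first word of the prompt. A real pipeline would use much more thorough sensitive-data filtering and a proper clustering method.

```python
import re
from collections import defaultdict
from typing import Dict, List

Call = Dict[str, str]

# Simplistic email pattern used only for illustration (assumption).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text: str) -> str:
    """Replace email addresses with a placeholder token."""
    return EMAIL_RE.sub("<EMAIL>", text)


def curate(calls: List[Call]) -> List[Call]:
    """Step 2: drop empty records and redact sensitive strings."""
    return [
        {"prompt": redact(c["prompt"]), "response": redact(c["response"])}
        for c in calls
        if c["prompt"].strip() and c["response"].strip()
    ]


def cluster_by_first_word(calls: List[Call]) -> Dict[str, List[Call]]:
    """Step 3 (toy version): group calls by the first word of the prompt."""
    clusters: Dict[str, List[Call]] = defaultdict(list)
    for c in calls:
        clusters[c["prompt"].split()[0].lower()].append(c)
    return dict(clusters)


# Step 1: hypothetical logged agent calls.
logged_calls = [
    {"prompt": "Summarize the ticket from ana@example.com", "response": "Login failure."},
    {"prompt": "Extract the order ID from: order #123 shipped", "response": "123"},
    {"prompt": "Summarize this changelog", "response": "Two bug fixes."},
    {"prompt": "   ", "response": ""},
]

clusters = cluster_by_first_word(curate(logged_calls))
for task, examples in clusters.items():
    print(f"{task}: {len(examples)} example(s)")
```

Each resulting cluster is a candidate task with its own small fine-tuning dataset, which feeds the SLM selection and fine-tuning steps.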

Conclusion

The AI revolution is here, but it can’t be scaled sustainably on energy-intensive LLMs alone. The paper’s position is that the future of agentic AI will instead be built on SLMs: small, efficient, and flexible by design. NVIDIA’s research serves as both a wake-up call and a roadmap, challenging the industry’s LLM obsession while arguing that SLMs can deliver comparable performance on agentic tasks at a fraction of the cost. This isn’t just about technology; it’s about creating a more sustainable, equitable, and innovative AI ecosystem. The coming wave of SLMs may even drive hardware innovation, with NVIDIA reportedly developing specialized processing units optimized for these compact models.