Welcome to the showdown of the (AI) century. Two rockstars go head-to-head in this headliner battle, in search of the one true champion of AI models. On one side, you've got OpenAI's flagship brainchild, GPT-5: polished, powerful, and dripping with experience. On the other, Google's latest prodigy, Gemini 2.5 Pro: fast, flashy, and promising to rewrite the rules.

Both dropped onto the scene recently, and the crowd hasn't stopped buzzing since. But make no mistake. We are not here for polite introductions or fan favourites. We are here for "battle!" A no-nonsense, bloodthirsty battle, where both models prove their might across four high-stakes rounds. The winner takes home the glory!

So, honoured audience, gear up, for the battle is about to begin. GPT-5 or Gemini 2.5 Pro, who will it be?


Round 1: Content (text)

The very medium in which these models face us every day. Content was among the first areas of expertise these AI models brought to the human table. Years down the line, they are more elaborate, expressive, and nuanced than ever before.


But can they take on this monumental challenge set by a human expert (yours truly)? Time to find out.

Prompt

Hi,

Please give me a 3500-word article on how AI may replace human jobs by 2030.

Take into account, information from the following source – https://www.weforum.org/stories/2025/01/future-of-jobs-report-2025-jobs-of-the-future-and-the-skills-you-need-to-get-them/

Highlight the exact spots where you cite information from this source.

Make sure the article has optimum divisions into subheads. Start it with a super-catchy and funky introduction of 2 paragraphs (150 words max), followed by a section titled “Is AI-Threat Real?” give me support and counter arguments for the same in the section.

Include at least 1 section which mentions the use of AI in distant future (2050), and what we can envision in terms of work being done by humans vs work done by AI at the time.

Performance

GPT-5 output –

Gemini 2.5 Pro output –

Score: Round 1 goes to Gemini 2.5 Pro

The criteria both models were judged on:

| Metric | GPT-5 | Gemini 2.5 Pro |
|---|---|---|
| Words (3,500 requested) | 965 | 2163 |
| Funky Intro | Yes | A bit |
| Future | Yes | Yes |
| Highlight | Yes | Yes |
| Time | Instant | Around 30 seconds |

Headline: Sturdy Gemini 2.5 Pro beats Skillful GPT-5

Although it got off to a weak start, falling far short of the requested word count, GPT-5 bounced back quickly to win two of the criteria we judged these performances on. Its introduction was some of the best AI writing I have seen in a long time, and it was on-point with all the details within the article. All this in less than 5 seconds.

Gemini 2.5 Pro, on the other hand, did extremely well, showing a well-rounded performance by taking its sweet time and producing an article more than twice as long as GPT-5's. Yet even Google's darling AI fell short of the requested word count, and it took around 30 seconds to answer. It tried to be creative in the intro paragraph too, but was nowhere near what GPT-5 came up with.

Where GPT-5 stumbled was in inserting citations from the reference link by itself. Instead, it left brackets for me to fill in the citations as I saw fit. If I have to go through the source myself, it defeats the entire purpose of using an AI to summarise or write content based on it.

Gemini 2.5 Pro played it safe here and simply did what it was asked: highlight the information it picked from the source. 10/10!

Still, it had its own weak moments, so its lead may be short-lived if it is not careful going forward.

Will Gemini 2.5 Pro hold its ground? Or will GPT-5 bounce back? Stay tuned, as it is time for…

Round 2: Image Generation

Beauty is part of brains in AI land, so next up is a look at their image generation chops. Let us see how these models fare at turning prompts into pixels.

Prompt

Give me an image

Character – an Indian boy – around 25 years of age, standing on one edge of a busy road. The boy is dressed smartly in formals, a beige suit, but no tie and shirt unbuttoned at the top.

Background setting – the backdrop shows cars zooming on an expansive road, in front of a row of sky-rises made of glass and having neon boards. The tallest building reads “Stark Industries” at the very top.

Action – The boy is watching at his hand in amazement, as sparks fly out of his hands and all around his arm. He is discovering a strange and new superpower that he always had but never knew.”

Performance

GPT-5 output –

Gemini 2.5 Pro output –

Score: Round 2 goes to GPT-5

The judges scored the two models on:

| Metric | GPT-5 | Gemini 2.5 Pro |
|---|---|---|
| Time | 2 min 18 seconds | 43 seconds |
| Details | 10/10 | 10/10 |
| Quality | 9/10 | 8/10 |
| Accuracy | 10/10 | 8/10 |
| Expressions | 9/10 | 8/10 |
| Wow Factor | 7/10 | 7/10 |

Headline: Charismatic Gemini 2.5 Pro falls to Composed GPT-5

The OpenAI contender has skilfully fought its way to the top this time. In a surprising switch of fighting styles, the two models completely flipped their output times. GPT-5 took far longer this time, but came out with a more nuanced and visually appealing output.

As the images show, GPT-5 produced a slightly better-quality image than Gemini 2.5 Pro, which is a bit of a surprise, as the latter is renowned for its image generation capabilities. Gemini fell short on small technical details: the prompt specifically placed the boy "standing on one edge of a busy road," not in the middle of it, as the model showed him. Its image quality could also have been better, with the sparks flying out of the boy's hand looking underwhelming. Honestly, both models fell short of the "wow" factor one looks for in an AI image.

Yet, the battle is not over. Is GPT-5 already the clear winner? Or will Gemini 2.5 Pro come back with a bang? Let us see, after this break.

Interval

Love AI Fight Nights? Check out more AI warriors going head-to-head in their weight classes in the articles below:

- The best general purpose AI agent – Manus vs ChatGPT Agent: Which is Better?

- Battle of the AI coding assistants – Codex CLI vs Gemini CLI vs Claude Code

- Best open-source model – Kimi K2 vs Llama 4

- Which is Better – Perplexity vs ChatGPT

Round 3: Internet Research

We are back with one of the toughest challenges for the AI models yet: internet research. Arguably the most practical use case, internet research through an AI chatbot can give your writing or documentation a head start right from the beginning. Let us see which champion does it better.

Prompt

Give me 10 website article/ blog/ research sources (very high credibility) – that talk about the hollow Earth theory and how it might be a possibility

Performance

GPT-5 output –

Gemini 2.5 Pro output –

Score: Round 3 goes to GPT-5

Here are the criteria the 2 models were judged on:

| Metric | GPT-5 | Gemini 2.5 Pro |
|---|---|---|
| Time | <10 seconds | 32 seconds |
| Accuracy | Bang on! | Very good |
| Quality of Sources | Brilliant | Good |
| Blunder | None | Didn't give the links |

Headline: GPT-5 Takes Easy Lead

It seemed like GPT-5 had practised this move a thousand times over, delivering lightning-fast results with bang-on accuracy and brilliant-quality links.

Gemini 2.5 Pro put up a good fight, yielding good sources for articles on the topic and even sharing a detailed YouTube video about it. Yet it was too slow, taking more than three times as long as GPT-5. Plus, it made one big blunder that lost it the fight: Gemini 2.5 Pro didn't share clickable links for any of the sources. Lack of talent or lack of common sense? You be the judge.

GPT-5, on the other hand, shared clickable links to high-quality sources from reputed publications. It went a step further and summarised its findings in an easy-to-grasp table. It even added an overall conclusion: none of the literature "supports" the hollow Earth theory, though all of it explains the theory in detail. An on-point job, and those extra steps made it the clear winner here.

With that, we reach the final round of the night. With GPT-5 in the lead so far, there is little left for Gemini 2.5 Pro to do but give it its all. Will it? We find out in…

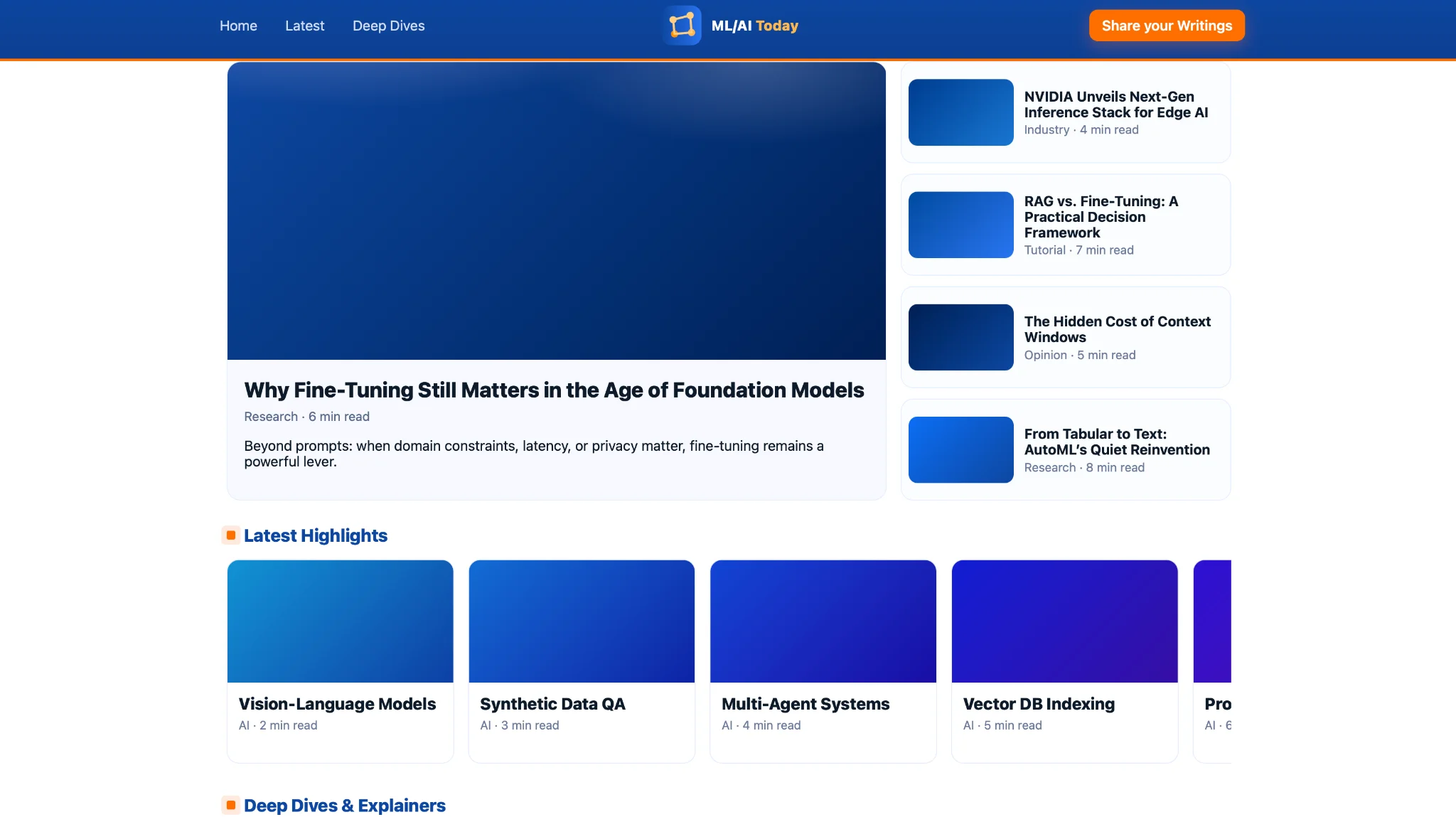

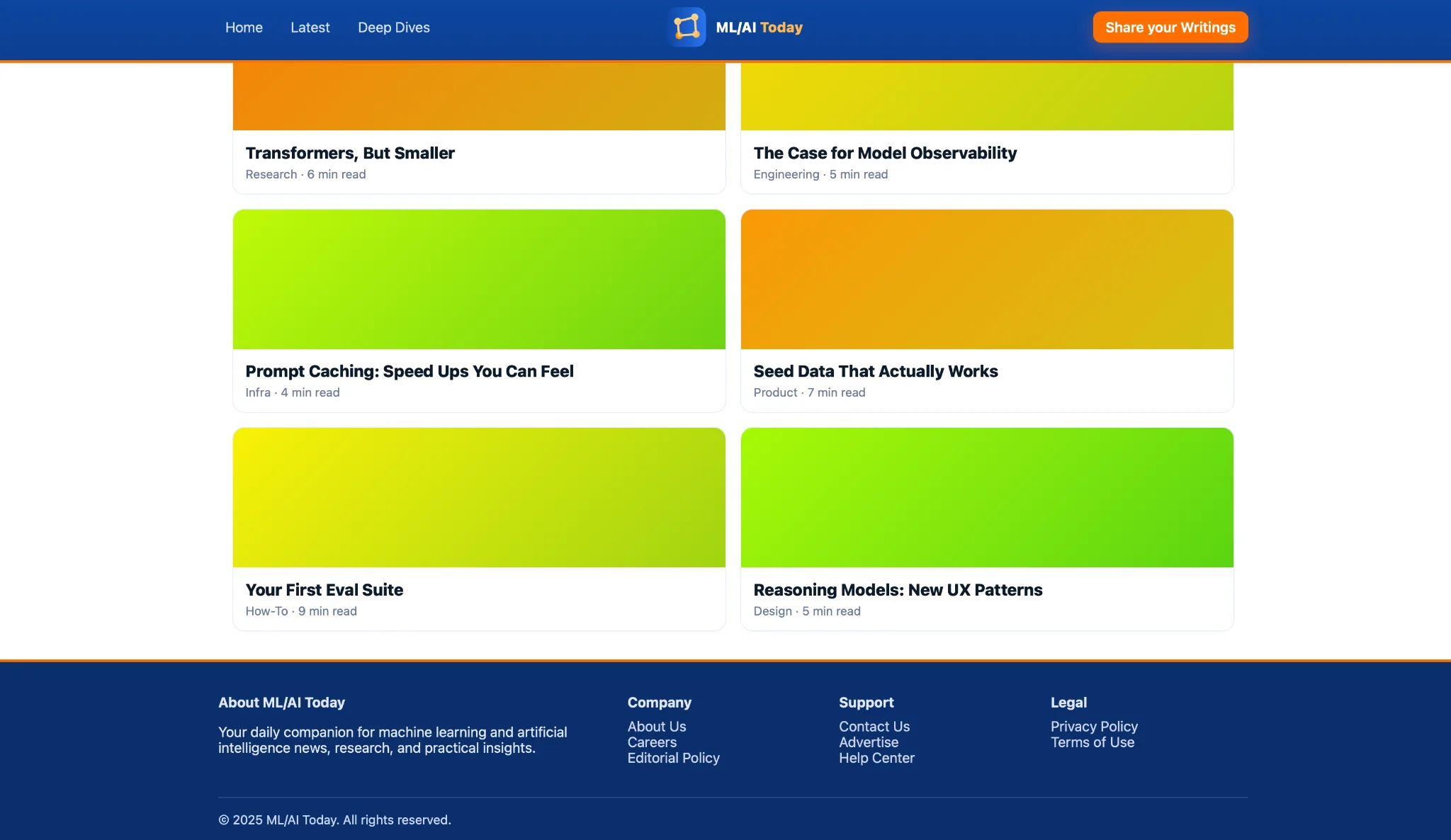

Round 4: Coding

In the ultimate test for AI models, their coding skills are up next. Note that both models come with better coding capabilities than their predecessors. But is that evolution enough to beat the competition? Here is the challenge:

Prompt

Write an html code for a blog website covering topics on machine learning and artificial intelligence. The first section of the home page should have a carousel with the Main news in a big container, adjacent to a list of small thumbnails of 4 other primary news. Whichever box you click on, expands into the big box of the Main news. The previous Main news story shifts to the side in place of that small thumbnail.

Make 2 more sections following this. One – a horizontal scrolling list with 10 news stories. Another, 6 news stories in 2 columns of 3 thumbnails each. End the page off with a footer showcasing the usual elements, About Us, Contact Us, etc.

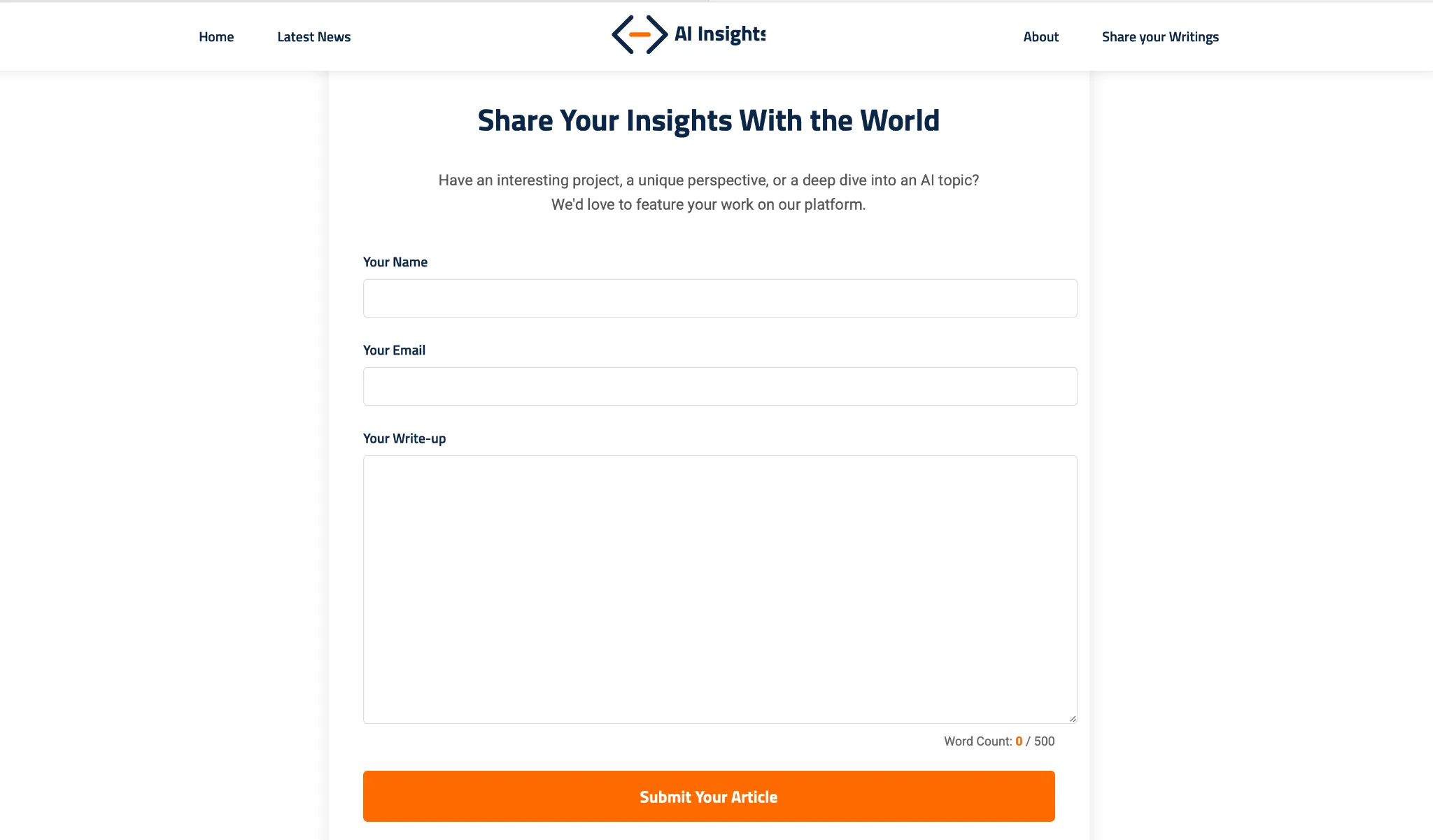

Make one more page titled “Share your Writings” The page should have a 2-line appeal to readers to share their write-ups with us. Following this, have a contact form with the fields – Name, Email, and Your Write-up. Keep a word limit of 500 words on the write-up entries.

The theme of the website should be a mix of blue, white, and orange. Make sure it is as appealing as possible. Mandatory – have a logo at the middle of the header space. make a logo of your own

Performance

GPT-5 output –

Gemini 2.5 Pro output –

Score: It’s a Draw!

First, a look at the scoreboard.

| Metric | GPT-5 | Gemini 2.5 Pro |
|---|---|---|
| Time | 3 min 30 seconds | Under 2 minutes |
| Accuracy | 10/10 | 10/10 |
| Visual Appeal | 7/10 | 9/10 |
| Functionality | 10/10 | 8/10 (no downloadable file) |
| Creativity | 9/10 | 9/10 |

Headline: Gemini 2.5 Pro Started Strong but Slipped

Both AI models did a fantastic job this time, producing accurate results with every detail the prompt asked for. The websites were neat, seemed user-friendly, and were fully functional, complete with basic details around each element. Every section was built and worked exactly as the prompt specified. Yet, in some areas, one AI champion beat the other, and vice versa.

Gemini 2.5 Pro, for instance, was much quicker, producing the entire code within 2 minutes of the prompt. It also generated what was, at least in my view, the better-looking website of the two, with the professional touch a blog on such topics should have. However, since design is subjective, I wouldn't differentiate too much between the two outputs in this regard.

While it started strong, Gemini 2.5 Pro yet again fell short of its counterpart in an important aspect. GPT-5 was able to share the entire code as a downloadable folder. I could easily download it and run the code to check the website. Gemini 2.5 Pro, meanwhile, couldn't provide any such folder, even when specifically asked. I had to manually create new text files within a folder, copy and paste the code, and then run it. Practicality compromised.

And with that, we wrap this fight night up with an obvious winner.

Conclusion: GPT-5 Wins!

In an epic exchange of intense AI action, both top models proved their might. They are fast, on-point, and versatile across use cases for all practical purposes. Between the two, though, GPT-5 still seems to have a slight edge. And why not? After all, it powers ChatGPT, the OG chatbot that introduced AI to the world as we know it today.

While many other names made a splash in the initial wave of AI tools and services (Midjourney, for instance), most of them are now lost in the crowd. Not ChatGPT, though. With timely updates to its underlying models (like GPT-5) and new services that shape the cutting edge of AI, like Codex and GPTs, OpenAI has made sure ChatGPT is still as relevant as it was when it arrived.

Here is what the winner had to say about its victory.