AI agents are being widely adopted across industries, but how many agents does an Agentic AI system actually need? The answer can be one or more. What really matters is picking the right number of agents for the task at hand. Here, we will look at the cases where Single-Agent and Multi-Agent systems fit best, and weigh the pros and cons of each. This blog assumes you already have a basic understanding of AI agents and are familiar with the LangGraph agentic framework. Without any further ado, let’s dive in.


Single-Agent vs Multi-Agent

If we are using a good LLM under the hood for the Agent, then a Single-Agent Agentic system is good enough for many tasks, provided a detailed step-by-step prompt and all the necessary tools are present.

Note: A Single-Agent system has one agent, but it can have any number of tools. Also, having a single agent does not mean there will be only one LLM call. There can be multiple calls.
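To make this note concrete, here is a framework-free sketch of a single agent that calls a model repeatedly and runs one of several tools per turn until the model returns a final answer. `fake_model` is a hypothetical, scripted stand-in for a real LLM call, and the two tools are toys; none of these names come from LangChain or LangGraph.

```python
from typing import Callable

# Toy tools available to the single agent (illustrative only).
TOOLS: dict[str, Callable[[str], str]] = {
    "word_count": lambda text: str(len(text.split())),
    "upper": lambda text: text.upper(),
}


def fake_model(history: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM call.

    Returns either a tool request {"tool": name, "input": text}
    or a final answer {"answer": text}, depending on the tool results so far.
    """
    tool_results = [m for m in history if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool": "word_count", "input": history[0]["content"]}
    if len(tool_results) == 1:
        return {"tool": "upper", "input": history[0]["content"]}
    return {"answer": f"{tool_results[0]['content']} words; shouted: {tool_results[1]['content']}"}


def run_single_agent(task: str, max_steps: int = 5) -> tuple[str, int]:
    """Run one agent in a loop; return (final answer, number of model calls)."""
    history = [{"role": "user", "content": task}]
    for step in range(1, max_steps + 1):
        decision = fake_model(history)  # one model call per iteration
        if "answer" in decision:
            return decision["answer"], step
        result = TOOLS[decision["tool"]](decision["input"])
        history.append({"role": "tool", "content": result})
    raise RuntimeError("Agent did not finish within max_steps")


answer, calls = run_single_agent("single agents can call tools")
print(answer)  # 5 words; shouted: SINGLE AGENTS CAN CALL TOOLS
print(calls)   # 3
```

One agent, two tools, three model calls: the number of LLM calls grows with the number of tool steps, not with the number of agents.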

We use a Multi-Agent Agentic system when the task at hand is complex, for instance, when a few of the steps could confuse a single agent and lead to hallucinated answers. The idea is to have multiple agents, each performing only a single task. We orchestrate the agents sequentially or hierarchically and use the response of each agent to produce the final output.

One might ask: why not use Multi-Agent systems for every use case? The answer is cost. It’s important to keep costs in check by using only the required number of agents and the right model for each. Now let’s look at use cases and examples of both Single-Agent and Multi-Agent agentic systems in the following sections.
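A back-of-the-envelope estimate shows why the number of agents matters: every agent adds LLM calls, and every call is billed for its input and output tokens. The token counts, call counts, and per-million-token prices below are hypothetical placeholders, not real pricing for any model; substitute your provider's current rates.

```python
from dataclasses import dataclass


@dataclass
class Call:
    input_tokens: int
    output_tokens: int


def estimate_cost(calls: list[Call], in_price_per_m: float, out_price_per_m: float) -> float:
    """Total cost in dollars, given prices per one million tokens."""
    total_in = sum(c.input_tokens for c in calls)
    total_out = sum(c.output_tokens for c in calls)
    return (total_in * in_price_per_m + total_out * out_price_per_m) / 1_000_000


# Hypothetical workloads: one agent making 3 calls vs. four agents making 2 calls each.
single_agent = [Call(2_000, 500) for _ in range(3)]
multi_agent = [Call(2_000, 500) for _ in range(8)]

# Hypothetical prices: $1.00 per 1M input tokens, $4.00 per 1M output tokens.
print(round(estimate_cost(single_agent, 1.0, 4.0), 4))  # 0.012
print(round(estimate_cost(multi_agent, 1.0, 4.0), 4))   # 0.032
```

Each call costs the same in both cases; the multi-agent bill is higher simply because there are more calls, which is why choosing fewer agents, or a cheaper model for simple sub-tasks, pays off.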

Overview of Single-Agent vs Multi-Agent System

| Aspect | Single-Agent System | Multi-Agent System |
|---|---|---|
| Number of Agents | One agent | Multiple specialized agents |
| Architecture Complexity | Simple and easy to manage | Complex, requires orchestration |
| Task Suitability | Simple to moderately complex tasks | Complex, multi-step tasks |
| Prompt Design | Highly detailed prompts required | Simpler prompts per agent |
| Tool Usage | Single agent uses multiple tools | Each agent can have dedicated tools |
| Latency | Low | Higher due to coordination |
| Cost | Lower | Higher |
| Error Handling | Limited for complex reasoning | Better via agent specialization |
| Scalability | Limited | Highly scalable and modular |
| Best Use Cases | Code generation, chatbots, summarization | Content pipelines, enterprise automation |

Single-Agent Agentic System

Single-Agent systems rely on only a single AI Agent to carry out tasks, often by invoking tools or APIs in a sequence. This simpler architecture is faster and also easier to manage. Let’s take a look at a few applications of Single-Agent workflows:

- Code Generation: An AI coding assistant can generate or refactor code using a single agent. For example, given a detailed description, a single agent (an LLM along with a code execution tool) can write the code and also run tests. However, one-shot generation can miss edge cases; few-shot prompting, i.e., including a few worked examples in the prompt, can help mitigate this.

- Customer Support Chatbots: A support chatbot can use a single agent that retrieves information from a knowledge base and answers user queries. For instance, a customer Q&A bot can use one LLM that calls a tool to fetch relevant information and then formulates the response. This is simpler than orchestrating multiple agents and is often good enough for direct FAQs or tasks like summarizing a document or composing an email reply based on provided data. Latency will also be much lower than with a Multi-Agent system.

- Research Assistants: Single-Agent systems can excel in guided research or writing tasks, provided the prompts are good. Let’s take an example of an AI researcher agent. It can use tools (web search, etc.) to gather facts and then summarize findings for the final answer. So, I recommend a Single-Agent system for tasks like research automation, where one agent with dynamic tool use can compile information into a report.
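For the code-generation case, few-shot prompting amounts to placing worked input/output examples in the conversation before the real request. The sketch below builds such a message list in the common role/content dictionary format; the helper name `build_few_shot_messages` and the example pair are our own, made up for illustration.

```python
def build_few_shot_messages(system: str, examples: list[tuple[str, str]], request: str) -> list[dict]:
    """Return chat messages: system prompt, example user/assistant pairs, then the real request."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in examples:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": request})
    return messages


# Hypothetical example pair showing the edge-case handling we want the model to imitate.
examples = [
    (
        "Write a function that returns the average of a list.",
        "def average(xs):\n    if not xs:\n        raise ValueError('empty list')\n    return sum(xs) / len(xs)",
    ),
]

msgs = build_few_shot_messages(
    "You are a careful Python coding assistant. Handle edge cases explicitly.",
    examples,
    "Write a function that returns the maximum of a list.",
)
print(len(msgs))         # 4
print(msgs[-1]["role"])  # user
```

The example answer explicitly handles an empty list, nudging the model to treat edge cases the same way in its own answer.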

Now, let’s walk through a code-generation agent built with LangChain’s create_agent, which runs on the LangGraph runtime. We will implement a single agent that uses GPT-5-mini and give it a code execution tool.

Prerequisites

To run it yourself, you need an OpenAI API key; Google Colab or a Jupyter Notebook will work. Just make sure the API key is available to the code (for example, through the OPENAI_API_KEY environment variable).

Python Code

Installations

```
!pip install langchain langchain_openai langchain_experimental
```

Imports

```python
from langchain.agents import create_agent
from langchain_openai import ChatOpenAI
from langchain.tools import tool
from langchain.messages import HumanMessage
from langchain_experimental.tools.python.tool import PythonREPLTool
```

Defining the tool, model, and agent

```python
# Python REPL that executes the generated code and returns what it prints
# (run untrusted code like this only inside a sandbox)
repl = PythonREPLTool()

# Define the tool
@tool
def run_code(code: str) -> str:
    """Execute python code and return output or error."""
    return repl.invoke(code)

# Create model and agent
model = ChatOpenAI(model="gpt-5-mini")

agent = create_agent(
    model=model,
    tools=[run_code],
    system_prompt="You are a helpful coding assistant that uses the run_code tool. If it fails, fix it and try again (max 3 attempts)."
)
```

Running the agent

```python
# Invoking the agent
result = agent.invoke({
    "messages": [
        HumanMessage(
            content="""Write python code to calculate fibonacci of 10.
- Return ONLY the final working code
"""
        )
    ]
})
```

# Displaying the output

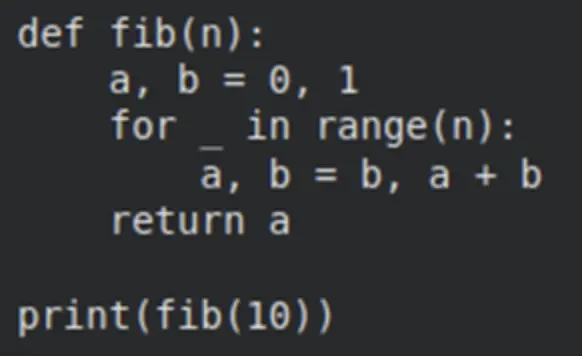

print(result["messages"][-1].content) Output:

We got the response. The agent’s reflection loop checks whether there is an error and tries to fix it on its own. The prompt can also be customized to enforce naming conventions in the code and the level of detail in the comments, and we can pass test cases along with the prompt.

Note: create_agent is the recommended way to build agents in LangChain v1. It runs on the LangGraph runtime and uses a ReAct-style loop (reason, call a tool, observe the result, repeat) by default.
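The fix-and-retry behaviour requested in the system prompt ("max 3 attempts") boils down to a generate, execute, feed-back-the-error loop. Below is a framework-free sketch of that loop: `generate_code` is a hypothetical, scripted stand-in for the LLM whose first draft contains a deliberate bug, and the code runs through Python's built-in `exec`, which, like a REPL tool, should only be used on trusted code or inside a sandbox.

```python
import traceback
from typing import Optional

BUGGY_DRAFT = """
def fib(n):
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + c  # bug: 'c' is undefined
    return a

result = fib(10)
"""

FIXED_DRAFT = """
def fib(n):
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a

result = fib(10)
"""


def generate_code(task: str, last_error: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM: the first draft is buggy, a retry with the error is fixed."""
    return BUGGY_DRAFT if last_error is None else FIXED_DRAFT


def solve_with_retries(task: str, max_attempts: int = 3):
    """Generate code, run it, and feed any error back into the next attempt."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        code = generate_code(task, last_error)
        namespace: dict = {}
        try:
            exec(code, namespace)  # run the candidate code in its own namespace
            return namespace["result"], attempt
        except Exception:
            last_error = traceback.format_exc()  # this text would go into the next prompt
    raise RuntimeError(f"Failed after {max_attempts} attempts:\n{last_error}")


value, attempts = solve_with_retries("Calculate fibonacci of 10")
print(value, attempts)  # 55 2
```

Capping the attempts keeps a stubborn bug from turning into an endless, and expensive, loop of model calls.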

Multi-Agent Agentic System

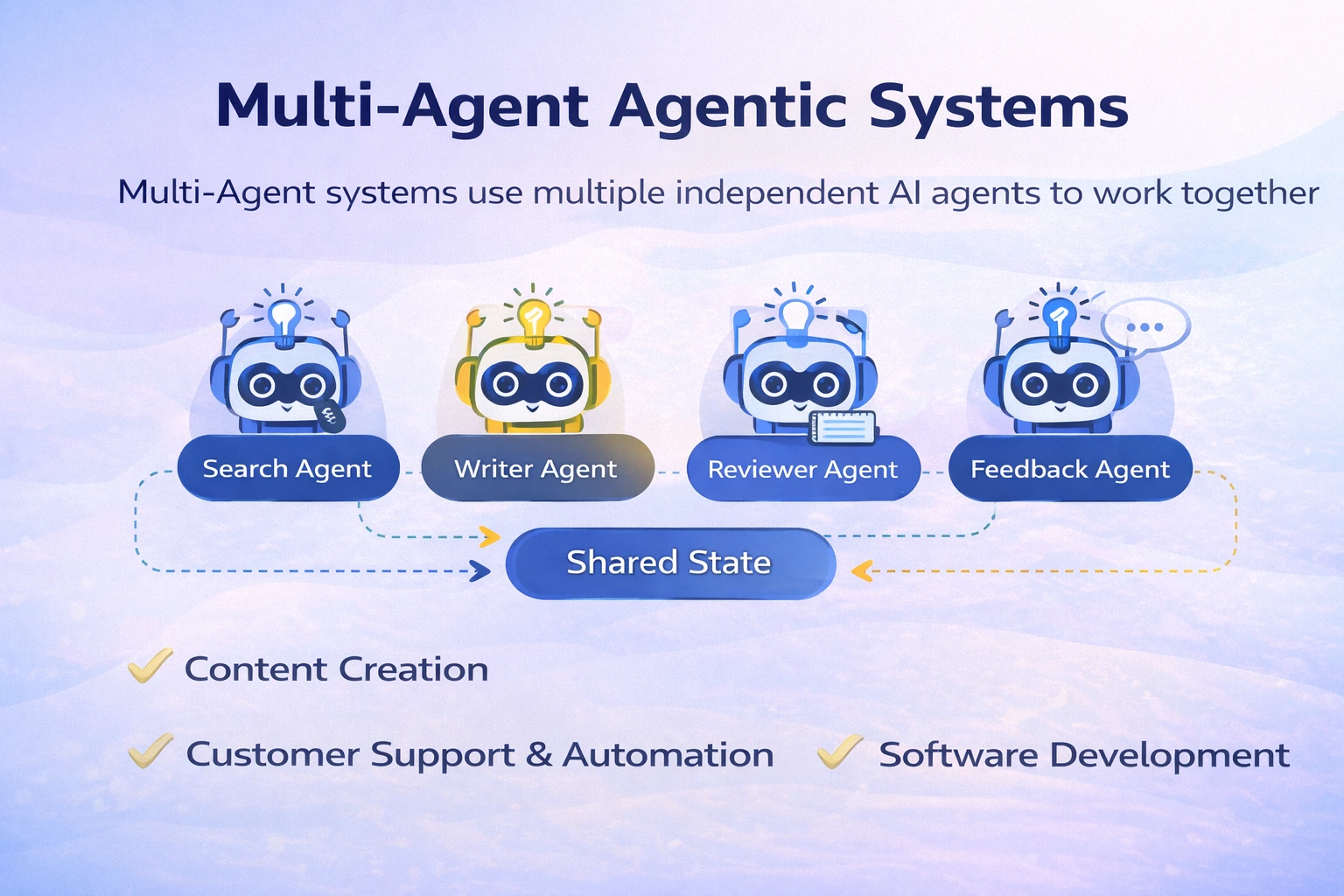

In contrast to Single-Agent systems, Multi-Agent systems have multiple independent AI agents, each with its own role, prompt, and possibly its own model, working together in a coordinated manner. In a multi-agent workflow, each agent specializes in a subtask; for example, one agent might focus on writing while another does fact-checking. These agents pass information to each other via a shared state. Here are some cases where we can use Multi-Agent systems:

- Content Creation: Consider a system that crafts news articles. It could have a Search Agent that fetches the latest information from the web, a Curator Agent that filters the findings by relevance, and a Writer Agent that drafts the article. A Feedback Agent then reviews each draft and provides feedback, and the Writer revises until the article passes quality checks. Agents can be added or removed as the content pipeline requires.

- Customer Support and Service Automation: Multi-Agent architectures can be used to build more robust support bots. For example, say we are building an insurance support system. If a user asks about billing, the query is automatically handed to the “Billing Agent”; if it’s about claims, it is routed to the “Claims Agent.” Many more specialized agents can be added to this workflow in the same way. The workflow can also send a prompt to multiple agents at once, in parallel, when quicker responses are needed.

- Software Development: Multi-Agent systems can assist with complex programming workflows that go beyond a single code generation or refactoring task. For example, suppose we need an entire pipeline, from creating test cases to writing the code and running the tests. We can use three agents for this: a ‘Test Case Generation Agent’, a ‘Code Generation Agent’, and a ‘Tester Agent’. If the tests fail, the Tester Agent can send the task back to the ‘Code Generation Agent’.

- Business Workflows & Automation: Multi-Agent systems can be used in enterprise workflows that involve multiple steps and decision points. One example is security incident response, which could use a Search Agent that scans the logs and threat intel, an Analyzer Agent that reviews the evidence and forms hypotheses about the incident, and a Reflection Agent that evaluates the draft report for quality or gaps. Together, they produce the final response for this use case.
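The routing and parallel fan-out described for the insurance support bot can be sketched without any framework. Here the intent classifier is a simple keyword match, standing in for what would usually be an LLM-based classifier, and the Billing, Claims, and General agents are hypothetical async stubs that simulate model latency with `asyncio.sleep`.

```python
import asyncio


async def billing_agent(query: str) -> str:
    await asyncio.sleep(0.1)  # simulated model latency
    return f"[billing] handled: {query}"


async def claims_agent(query: str) -> str:
    await asyncio.sleep(0.1)
    return f"[claims] handled: {query}"


async def general_agent(query: str) -> str:
    await asyncio.sleep(0.1)
    return f"[general] handled: {query}"


# Keyword routing table (an assumption; production systems usually use an LLM classifier).
ROUTES = {
    "bill": billing_agent,
    "invoice": billing_agent,
    "claim": claims_agent,
}


def route(query: str):
    """Pick the specialized agent for a query, falling back to the general agent."""
    lowered = query.lower()
    for keyword, agent in ROUTES.items():
        if keyword in lowered:
            return agent
    return general_agent


async def fan_out(query: str, agents) -> list[str]:
    """Send the same query to several agents concurrently and collect all replies in order."""
    return await asyncio.gather(*(agent(query) for agent in agents))


async def main():
    query = "Why is my bill higher this month?"
    print(await route(query)(query))  # [billing] handled: Why is my bill higher this month?
    # Fan-out: total wait is roughly one agent's latency, not the sum of all three.
    replies = await fan_out("Does my policy cover flood damage?", [billing_agent, claims_agent, general_agent])
    print(len(replies))  # 3


asyncio.run(main())
```

In a Jupyter or Colab notebook, which already runs an event loop, use `await main()` instead of `asyncio.run(main())`.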

Now let’s walk through the code for a multi-agent News Article Creator to get a better idea of agent orchestration and workflow creation. Again, we will use LangGraph, along with the Tavily API for web search.

Prerequisites

- You’ll need an OpenAI API Key

- Sign up and create a new Tavily API key if you don’t already have one: https://app.tavily.com/home

- If you are using Google Colab, I recommend adding the keys to Colab’s secrets as ‘OPENAI_API_KEY’ and ‘TAVILY_API_KEY’ and granting the notebook access to them. Alternatively, you can pass the API keys directly in the code.
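If you want the same notebook to run both on Colab and locally, a small helper can try Colab secrets first and fall back to an environment variable or an interactive prompt. This is a sketch that assumes `google.colab.userdata.get` raises an exception when a secret is missing or not shared with the notebook; the helper name `load_key` is our own.

```python
import getpass
import os


def load_key(name: str, prompt=getpass.getpass) -> str:
    """Return an API key from Colab secrets, the environment, or an interactive prompt."""
    try:
        from google.colab import userdata  # only importable inside Google Colab
        value = userdata.get(name)
        if value:
            os.environ[name] = value
            return value
    except Exception:
        pass  # not on Colab, or the secret is missing / not shared with this notebook
    if os.environ.get(name):
        return os.environ[name]
    value = prompt(f"Enter {name}: ")
    os.environ[name] = value
    return value
```

Calling `load_key("OPENAI_API_KEY")` and `load_key("TAVILY_API_KEY")` before creating the model and the search tool leaves both keys in `os.environ`, where ChatOpenAI and the Tavily tool look for them.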

Python Code

Installations

```
!pip install -U langgraph langchain langchain-openai langchain-community tavily-python
```

Imports

```python
from typing import TypedDict, List
from langgraph.graph import StateGraph, END
from langchain_openai import ChatOpenAI
from langchain_community.tools.tavily_search import TavilySearchResults
from langchain.messages import HumanMessage
from google.colab import userdata
import os
```

Loading the API keys into the environment

```python
os.environ["OPENAI_API_KEY"] = userdata.get('OPENAI_API_KEY')
os.environ["TAVILY_API_KEY"] = userdata.get('TAVILY_API_KEY')
```

Initialize the tool and the model

```python
llm = ChatOpenAI(
    model="gpt-4.1-mini"
)

search_tool = TavilySearchResults(max_results=5)
```

Define the state

```python
class ArticleState(TypedDict):
    topic: str
    search_results: List[dict]  # Tavily returns a list of result dicts
    curated_notes: str
    article: str
    feedback: str
    approved: bool
```

This is an important step: the state stores the intermediate results of the agents, which other agents can later read and modify.
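Conceptually, each node returns only the keys it changed, and LangGraph merges that partial update into the shared state; for plain TypedDict keys without a reducer, the new value simply overwrites the old one. The loop below is a simplified, framework-free illustration of that merge behaviour, not LangGraph's actual implementation, and `curator` and `writer` are hypothetical stubs.

```python
from typing import TypedDict


class DemoState(TypedDict, total=False):
    topic: str
    curated_notes: str
    article: str


def curator(state: DemoState) -> dict:
    return {"curated_notes": f"notes on {state['topic']}"}


def writer(state: DemoState) -> dict:
    return {"article": f"Article based on: {state['curated_notes']}"}


def run_pipeline(state: DemoState, nodes) -> DemoState:
    """Apply each node in order, merging its partial update into the shared state."""
    for node in nodes:
        update = node(state)
        state = {**state, **update}  # overwrite semantics: later values replace earlier ones
    return state


final = run_pipeline({"topic": "AI regulation"}, [curator, writer])
print(final["article"])  # Article based on: notes on AI regulation
```

Each stub reads what earlier nodes wrote and adds only its own key, which is exactly how the search, curator, writer, and feedback agents share work through ArticleState.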

Agent Nodes

Search Agent (Has access to the search tool):

```python
def search_agent(state: ArticleState):
    query = f"Latest news about {state['topic']}"
    results = search_tool.run(query)
    # Store the raw search results in the shared state
    return {
        "search_results": results
    }
```

Curator Agent (Processes the information received from the search agent):

```python
def curator_agent(state: ArticleState):
    prompt = f"""
    You are a curator.
    Filter and summarize the most relevant information
    from the following search results:
    {state['search_results']}
    """
    response = llm.invoke([HumanMessage(content=prompt)])
    return {
        "curated_notes": response.content
    }
```

Writer Agent (Drafts a version of the News Article):

```python
def writer_agent(state: ArticleState):
    # Include the reviewer's feedback so that each revision actually addresses it
    prompt = f"""
    Write a clear, engaging news article based on the notes below.

    Notes:
    {state['curated_notes']}

    Previous draft (if any):
    {state.get('article', '')}

    Reviewer feedback to address (if any):
    {state.get('feedback', '')}
    """
    response = llm.invoke([HumanMessage(content=prompt)])
    return {
        "article": response.content
    }
```

Feedback Agent (Reviews each draft of the article and writes feedback):

```python
def feedback_agent(state: ArticleState):
    prompt = f"""
    Review the article below.
    Check for:
    - factual clarity
    - coherence
    - readability
    - journalistic tone

    If the article is good, respond with:
    APPROVED

    Otherwise, provide concise feedback.

    Article:
    {state['article']}
    """
    response = llm.invoke([HumanMessage(content=prompt)])
    # Approve only if the reply starts with APPROVED; a plain substring check
    # would also match feedback such as "NOT APPROVED"
    approved = response.content.strip().upper().startswith("APPROVED")
    return {
        "feedback": response.content,
        "approved": approved
    }
```

Defining the Routing Function

```python
def feedback_router(state: ArticleState):
    return "end" if state["approved"] else "revise"
```

This lets us loop back to the Writer Agent if the article is not good enough; otherwise, it is approved as the final article.
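As written, the writer/feedback loop ends only when the reviewer replies APPROVED; if that never happens, LangGraph stops the run with a recursion-limit error (the default limit is 25 steps). A common safeguard is to count revisions in the state and stop after a fixed number. The sketch below assumes a hypothetical extra state key, `revisions`, which the writer node would increment on every draft, and a cap of 3 chosen arbitrarily.

```python
from typing import TypedDict

MAX_REVISIONS = 3  # arbitrary cap, chosen for illustration


class ReviewState(TypedDict):
    approved: bool
    revisions: int  # hypothetical key; the writer node would increment it on each draft


def capped_feedback_router(state: ReviewState) -> str:
    """Return "end" when approved or when the revision budget is used up, else "revise"."""
    if state["approved"] or state["revisions"] >= MAX_REVISIONS:
        return "end"
    return "revise"


print(capped_feedback_router({"approved": False, "revisions": 1}))  # revise
print(capped_feedback_router({"approved": False, "revisions": 3}))  # end
```

The function keeps the same "revise"/"end" labels, so it could replace `feedback_router` in `add_conditional_edges` once the state and the writer node are extended with the counter.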

LangGraph Workflow

```python
graph = StateGraph(ArticleState)

graph.add_node("search", search_agent)
graph.add_node("curator", curator_agent)
graph.add_node("writer", writer_agent)
graph.add_node("feedback", feedback_agent)

graph.set_entry_point("search")

graph.add_edge("search", "curator")
graph.add_edge("curator", "writer")
graph.add_edge("writer", "feedback")

graph.add_conditional_edges(
    "feedback",
    feedback_router,
    {
        "revise": "writer",
        "end": END
    }
)

content_creation_graph = graph.compile()
```

We defined the nodes and edges, added a conditional edge out of the feedback node, and that completes our Multi-Agent workflow.

Running the Agent

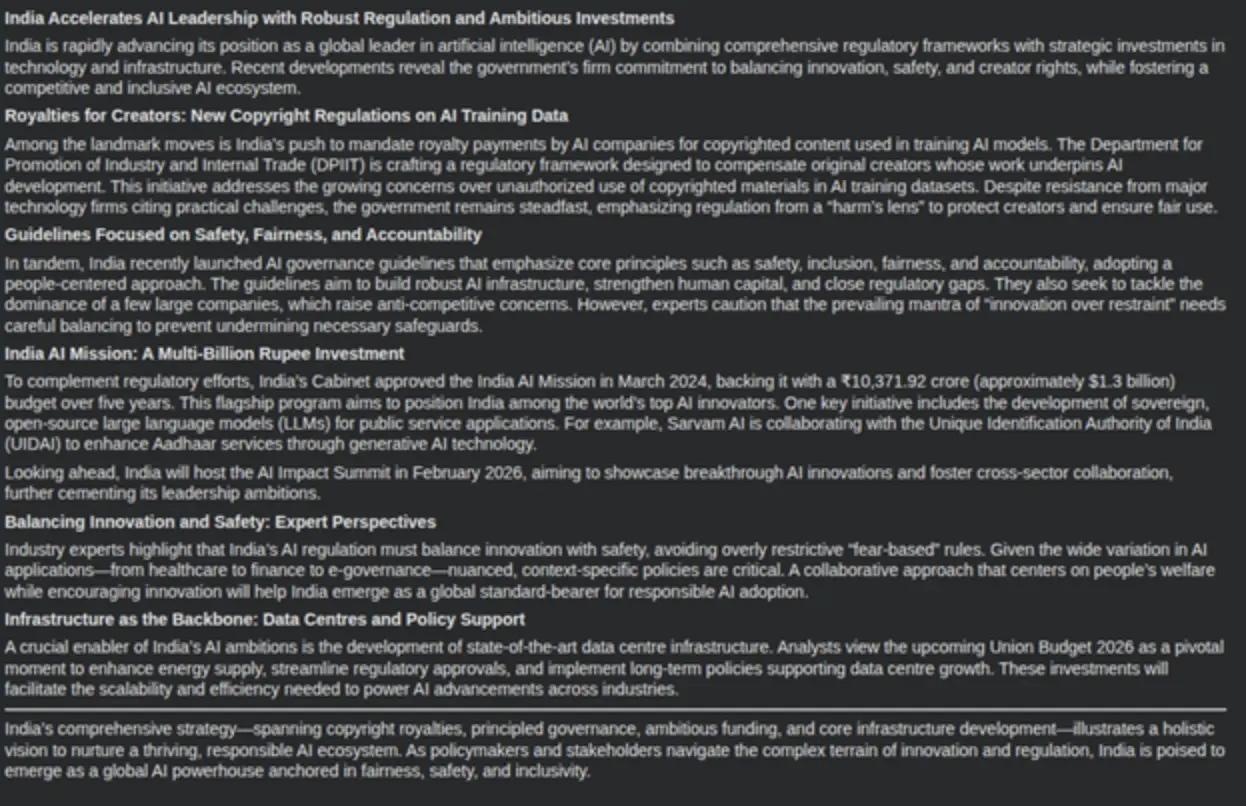

```python
result = content_creation_graph.invoke({
    "topic": "AI regulation in India"
})

from IPython.display import display, Markdown
display(Markdown(result["article"]))
```

Yes! We have the output from our Agentic System here, and the output looks good to me. You can add or remove agents from the workflow according to your needs. For instance, you can add an Agent for image generation as well to make the article look more appealing.

Advanced Multi-Agent Agentic System

Previously, we looked at a fairly simple, mostly sequential Multi-Agent Agentic system, but workflows can get much more complex. Advanced Multi-Agent systems can be dynamic: in intent-driven architectures, a manager agent decides at runtime which agent runs next, so the workflow is not fixed in advance.

In LangGraph, this can be implemented with the Supervisor pattern, where a lead node dynamically routes the state between specialized sub-agents or standard Python functions based on their outputs. Similarly, AutoGen achieves dynamic orchestration through its GroupChatManager, and CrewAI offers Process.hierarchical, which relies on a manager (configured via manager_llm or a custom manager_agent) to oversee delegation and validation.

Let’s create a workflow to understand manager agents and dynamic flows better. We will create a Researcher agent and a Writer agent, plus a Supervisor agent that delegates tasks to them until the process is complete.
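Stripped of any framework, the supervisor pattern is a loop in which a manager repeatedly picks the next worker, or decides to finish, based on the conversation so far, with a step limit as a safety net. The sketch below uses a scripted, hypothetical `decide` function in place of the manager LLM and plain functions with stub outputs as workers; it illustrates the control flow only and is not how LangGraph, AutoGen, or CrewAI implement it internally.

```python
def researcher(history: list[str]) -> str:
    return "research: (stub market data for AAPL)"


def writer(history: list[str]) -> str:
    return "summary: based on " + history[-1].removeprefix("research: ")


WORKERS = {"researcher": researcher, "writer": writer}


def decide(history: list[str]) -> str:
    """Hypothetical stand-in for the manager LLM: research first, then write, then finish."""
    if not any(h.startswith("research:") for h in history):
        return "researcher"
    if not any(h.startswith("summary:") for h in history):
        return "writer"
    return "FINISH"


def run_supervisor(task: str, max_steps: int = 10) -> tuple[list[str], list[str]]:
    """Return (message history, order in which the nodes ran)."""
    history, trace = [task], []
    for _ in range(max_steps):
        choice = decide(history)
        trace.append("manager")
        if choice == "FINISH":
            return history, trace
        history.append(WORKERS[choice](history))  # the worker reports back to the manager
        trace.append(choice)
    raise RuntimeError("Supervisor exceeded max_steps")


history, trace = run_supervisor("Summarize the market trend for AAPL.")
print(trace)        # ['manager', 'researcher', 'manager', 'writer', 'manager']
print(history[-1])  # summary: based on (stub market data for AAPL)
```

Control always returns to the manager after each worker, which is the same shape the LangGraph version achieves with Command(goto="manager").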

Python Code

Installations

```
!pip install -U langgraph langchain langchain-openai langchain-community tavily-python
```

Imports

```python
import os
from typing import Literal
from typing_extensions import TypedDict
from langchain_openai import ChatOpenAI
from langgraph.graph import StateGraph, MessagesState, START, END
from langgraph.types import Command
from langchain.agents import create_agent
from langchain_community.tools.tavily_search import TavilySearchResults
from google.colab import userdata
```

Loading the API Keys to the Environment

```python
os.environ["OPENAI_API_KEY"] = userdata.get('OPENAI_API_KEY')
os.environ["TAVILY_API_KEY"] = userdata.get('TAVILY_API_KEY')
```

Initializing the model and tools

```python
manager_llm = ChatOpenAI(model="gpt-5-mini")
llm = ChatOpenAI(model="gpt-4.1-mini")
tavily_search = TavilySearchResults(max_results=5)
```

Note: We use one model (gpt-5-mini) for the manager and a different one (gpt-4.1-mini) for the worker agents.

Defining the tool and agent functions

```python
from langchain.tools import tool

# 1. Define the search tool used by the research agent
@tool
def search_tool(query: str):
    """Fetches market data."""
    query = f"Fetch market data on {query}"
    results = tavily_search.invoke(query)
    return results

# 2. Define Sub-Agents (Workers)
research_agent = create_agent(
    llm,
    tools=[search_tool],
    system_prompt="You are a research agent that finds up-to-date, factual information."
)

writer_agent = create_agent(
    llm,
    tools=[],
    system_prompt="You are a professional news writer."
)
```

```python
# 3. Supervisor Logic (Dynamic Routing)
def supervisor_node(state: MessagesState) -> Command[Literal["researcher", "writer", "__end__"]]:
    system_prompt = (
        "You are a supervisor. Decide if we need 'researcher' (for data), "
        "'writer' (to format), or 'FINISH' to stop. Respond ONLY with the node name."
    )
    # The supervisor analyzes history and returns a Command to route
    response = manager_llm.invoke([{"role": "system", "content": system_prompt}] + state["messages"])
    decision = response.content.strip().upper()
    if "FINISH" in decision:
        return Command(goto=END)
    goto_node = "researcher" if "RESEARCHER" in decision else "writer"
    return Command(goto=goto_node)
```

Worker Nodes (Wrapping agents to return control to the supervisor)

```python
def researcher_node(state: MessagesState) -> Command[Literal["manager"]]:
    # Run the research agent on the conversation so far, then hand control back to the manager
    result = research_agent.invoke(state)
    return Command(update={"messages": result["messages"]}, goto="manager")

def writer_node(state: MessagesState) -> Command[Literal["manager"]]:
    result = writer_agent.invoke(state)
    return Command(update={"messages": result["messages"]}, goto="manager")
```

Defining the workflow

```python
builder = StateGraph(MessagesState)
builder.add_node("manager", supervisor_node)
builder.add_node("researcher", researcher_node)
builder.add_node("writer", writer_node)
builder.add_edge(START, "manager")
graph = builder.compile()
```

As you can see, we have only added the edge from START to “manager”; the other transitions are decided at runtime by the Command objects that the nodes return.

Running the system

```python
inputs = {"messages": [("user", "Summarize the market trend for AAPL.")]}

for chunk in graph.stream(inputs):
    print(chunk)
```

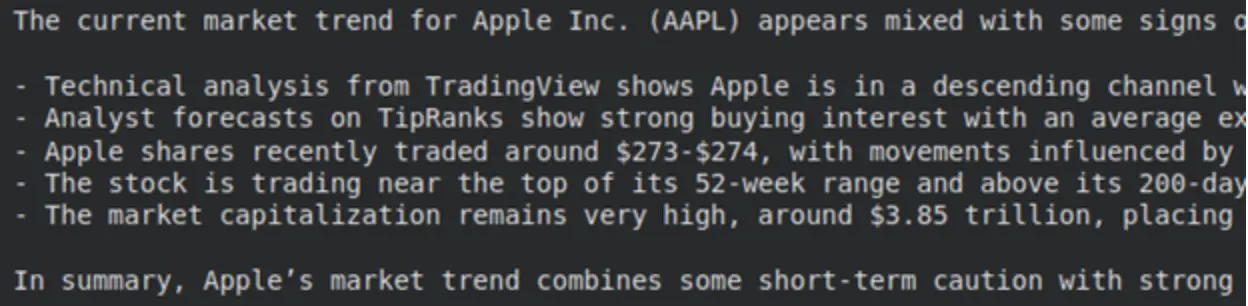

As you can see, the manager node executed first, then the researcher, then again the manager, and finally the graph completed execution.

Note: The manager node doesn’t add any messages to the state; it returns a Command() that decides whether to route the conversation to another agent or end the execution.

Output:

```python
inputs = {"messages": [("user", "Summarize the market trend for AAPL.")]}
result = graph.invoke(inputs)

# Print final response
print(result["messages"][-1].content)
```

Great! We have an output for our prompt, and we have successfully created a Multi-Agent Agentic System with a dynamic workflow.

Note: The output can be improved by using a stock market tool instead of a search tool.

Conclusion

Finally, there’s no universal system for every task. Whether to choose a Single-Agent or a Multi-Agent Agentic system depends on the use case: mainly the task complexity, the required accuracy, and the cost constraints. If you do use a Multi-Agent system, make sure to orchestrate your agents well. And remember that picking the right LLM for each agent is equally important.

Frequently Asked Questions

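Are there alternatives to LangGraph for building agentic systems?

Yes. Alternatives include CrewAI, AutoGen, and many more.

Can I build a Multi-Agent system without a framework?

Yes. You can build custom orchestration in plain Python, but it requires more engineering effort.

How does the choice of LLM affect the number of agents?

Stronger models can reduce the need for multiple agents, while lighter models can be used as specialized agents.

Are Multi-Agent systems suitable for real-time applications?

They can be, but latency increases with every additional agent and LLM call, so real-time use cases require careful optimization and lightweight orchestration.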