My favourite open-source AI model just got a major upgrade: Kimi K2.5 is here!

LLMs excel at answering questions and writing code, but real work spans messy documents, images, incomplete data, and long decision chains. Most AI systems still struggle in these environments. Moonshot AI built Kimi K2.5 to close this gap by bringing multimodal, agentic intelligence to the open-source ecosystem. More than a model upgrade, Kimi K2.5 actively reasons, acts, and coordinates entire workflows using parallel agent swarms.

In this article, we examine what sets Kimi K2.5 apart, how to get started, real-world demonstrations, benchmark performance, and why it matters for the future of agentic AI.

What is Kimi K2.5?

Kimi K2.5 is a next-generation open-source multimodal model for agentic reasoning, vision, and large-scale execution. Built on architectural and training upgrades over Kimi K2, it significantly improves how the model processes and integrates text, images, videos, and tools.

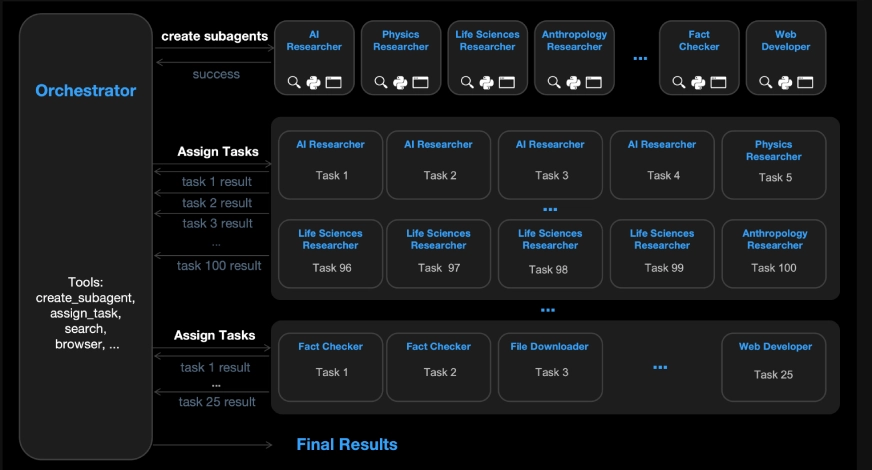

A defining feature of Kimi K2.5 is its self-directed agent swarm paradigm. Instead of relying on predefined workflows, the system can autonomously spawn and coordinate up to 100 sub-agents, enabling thousands of synchronized operations to run in parallel. This allows Kimi K2.5 to operate independently across complex, multi-step tasks without requiring manual orchestration.

Key Features of Kimi K2.5

Native Multimodal Architecture

Kimi K2.5 is trained at scale on text, images, and videos, allowing it to reason seamlessly across screenshots, diagrams, documents, and video inputs. It can convert visual inputs directly into working code and debug UI issues by inspecting rendered outputs, without sacrificing language reasoning performance. Unlike earlier models, Kimi K2.5 improves both visual and text reasoning simultaneously.

Coding with Vision

One of Kimi K2.5’s standout capabilities is vision-based coding. The model can transform images or videos into functional front-end interfaces with animations and interactivity. This includes reconstructing websites from screen recordings, generating UI layouts from design images, debugging visual components, and solving visual puzzles using algorithmic reasoning. This makes it especially valuable for front-end developers, designers, and engineers working between design and code.

Video Source: Kimi K2.5

Agent Swarm Intelligence

Kimi K2.5 introduces Agent Swarm as a research preview, enabling concurrent task execution through Parallel-Agent Reinforcement Learning (PARL). The system autonomously decomposes complex tasks, spawns specialized sub-agents, and coordinates parallel execution without reverting to sequential workflows. This results in up to 4.5× faster execution, improved long-term planning, and higher reliability on complex, multi-step tasks.
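
To make the fan-out idea concrete, here is a minimal sketch of running independent sub-tasks concurrently with Python's `asyncio`. It is not Moonshot's PARL implementation or the Kimi API: `run_subagent`, the task names, and the sleep durations are hypothetical stand-ins. The only point is that waiting in parallel takes roughly as long as the slowest sub-task rather than the sum of all of them.

```python
import asyncio
import time


async def run_subagent(name: str, seconds: float) -> str:
    """Hypothetical sub-agent; the sleep stands in for tool calls or model latency."""
    await asyncio.sleep(seconds)
    return f"{name}: done"


async def run_sequential(tasks: dict[str, float]) -> list[str]:
    # Each sub-task starts only after the previous one has finished.
    return [await run_subagent(name, secs) for name, secs in tasks.items()]


async def run_parallel(tasks: dict[str, float]) -> list[str]:
    # All sub-tasks are started together; gather returns results in input order.
    return await asyncio.gather(
        *(run_subagent(name, secs) for name, secs in tasks.items())
    )


def timed(coro_fn, tasks: dict[str, float]):
    """Run one of the strategies above and return (results, elapsed_seconds)."""
    start = time.perf_counter()
    results = asyncio.run(coro_fn(tasks))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    demo_tasks = {"search": 0.2, "summarize": 0.2, "format": 0.2}
    _, sequential_time = timed(run_sequential, demo_tasks)
    _, parallel_time = timed(run_parallel, demo_tasks)
    print(f"sequential: {sequential_time:.2f}s, parallel: {parallel_time:.2f}s")
```

The speed-up in this toy depends entirely on the sub-tasks being independent; tasks that must wait on each other's output gain nothing from being launched together.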

Real-World Office Productivity

Beyond benchmarks, Kimi K2.5 excels at real-world knowledge work. It can create and edit Word documents, spreadsheets with formulas and Pivot Tables, PDFs with LaTeX equations, and presentation slides with long-form content. The system comfortably handles large files, including 100-page documents and 10,000-word texts.

Tool-Augmented Reasoning

Kimi K2.5 is built to work natively with tools. It can browse the web, execute code, manage files, and verify results while maintaining long-context reasoning up to 256k tokens, making it a strong autonomous assistant for research, engineering, and analytical workflows.
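
As a rough illustration of what working with tools involves, the sketch below dispatches a list of requested tool calls through a small registry and records whether each one succeeded. The tool names (`word_count`, `to_upper`) and the transcript format are invented for this example and are not part of Kimi K2.5 or its API.

```python
from typing import Callable

ToolFn = Callable[[str], str]


def word_count(text: str) -> str:
    """Toy tool: count whitespace-separated words."""
    return str(len(text.split()))


def to_upper(text: str) -> str:
    """Toy tool: upper-case the input."""
    return text.upper()


# Hypothetical registry mapping tool names to plain Python callables.
TOOLS: dict[str, ToolFn] = {"word_count": word_count, "to_upper": to_upper}


def run_tool_calls(calls: list[tuple[str, str]]) -> list[dict]:
    """Execute (tool_name, argument) pairs and record each result or error."""
    transcript = []
    for name, argument in calls:
        tool = TOOLS.get(name)
        if tool is None:
            # Unknown tools are reported instead of raising, so the caller can retry.
            transcript.append({"tool": name, "ok": False, "output": "unknown tool"})
            continue
        transcript.append({"tool": name, "ok": True, "output": tool(argument)})
    return transcript


if __name__ == "__main__":
    for entry in run_tool_calls([("word_count", "agents call tools"), ("search", "x")]):
        print(entry)
```

In a real agent loop, the transcript would be fed back to the model so it can verify results and decide on the next call.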

How to Access Kimi K2.5?

Getting started with Kimi K2.5 is straightforward, even for beginners with no prior experience of agentic AI.

Access Options

- Kimi.com and the Kimi App provide interactive access to the model.

- The API lets developers integrate Kimi K2.5 into their own applications.
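
For readers planning an API integration, the sketch below builds a request body that pairs a text prompt with an image. It assumes the API accepts OpenAI-style chat messages with `image_url` content parts, which is an assumption rather than something this article confirms. `MODEL_ID` is a placeholder, and no network call is made. Check the official API documentation for the real endpoint, model name, and field names.

```python
import base64
import json

# Placeholder only; the real model identifier must come from the provider's docs.
MODEL_ID = "your-model-id"


def build_vision_request(prompt: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build a chat-style payload with one text part and one inline image part.

    Assumes an OpenAI-style message schema (an assumption, not a confirmed API).
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL_ID,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    payload = build_vision_request("Describe this screenshot.", b"fake-image-bytes")
    print(json.dumps(payload, indent=2))
```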

Available Modes

- K2.5 Instant delivers immediate answers to common questions.

- K2.5 Thinking provides deeper reasoning for problems that need extended thought.

- K2.5 Agent runs autonomous workflows that use multiple tools.

- K2.5 Agent Swarm (Beta) runs multiple agents in parallel for advanced tasks.

Pairing Kimi K2.5 with Kimi Code gives developers the most benefit, since the combination supports both software development and multimodal workflows.

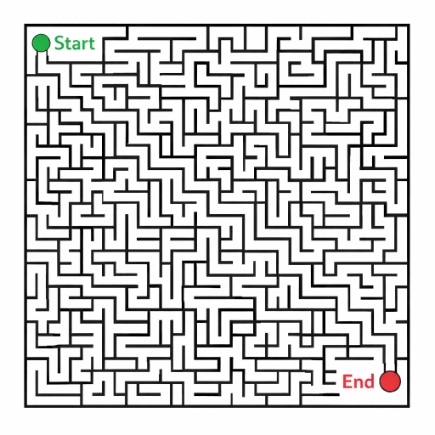

Task 1: Solving a Maze using Vision and Code

The task is to find the shortest path through a maze with a green start point and a red end point, following the instructions given in the prompt.

How Kimi K2.5 Approaches It

I give the model the prompt along with the maze image and observe the steps it follows:

- It analyzes the image to identify the start and end points.

- It converts the maze into a binary grid representation.

- It applies a BFS algorithm to compute the shortest path.

- It overlays the computed path on the maze for visual verification.

- Finally, it validates and stores the output.
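
The grid conversion and BFS steps above can be sketched as follows. This is a minimal illustration, not the code Kimi K2.5 generated: it assumes the maze is already available as rows of grayscale values, that dark pixels are walls, and that movement is limited to four directions. The function names and the threshold of 128 are choices made for this example.

```python
from collections import deque


def to_binary_grid(pixels, threshold=128):
    """Map grayscale values to 0 (open) or 1 (wall), assuming dark pixels are walls."""
    return [[1 if value < threshold else 0 for value in row] for row in pixels]


def shortest_path(grid, start, goal):
    """Return the shortest list of (row, col) cells from start to goal, or None.

    BFS explores cells in order of distance from start, so the first time the
    goal is dequeued the recorded parent chain is a shortest path.
    """
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}  # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk the parent chain back to start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal is unreachable


if __name__ == "__main__":
    toy_pixels = [
        [255, 0, 255],
        [255, 0, 255],
        [255, 255, 255],
    ]
    path = shortest_path(to_binary_grid(toy_pixels), (0, 0), (0, 2))
    print(path, "steps:", len(path) - 1)
```

The number of steps is the number of cells on the path minus one, since the start cell itself is not a move.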

Output Review

- The shortest path length is 1,645 steps.

- BFS ensures optimal results for an unweighted graph.

- Gradient-based visualization improves clarity and interpretability.

- The solution is generated end to end without manual intervention.
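
One plausible reading of "gradient-based visualization" is that each cell on the path gets a color that shifts gradually from the start color to the end color, so the direction of travel is visible at a glance. The sketch below shows that idea with plain linear interpolation. The green-to-red default and the function name are assumptions for this example, not details taken from the model's output.

```python
def gradient_colors(n, start_rgb=(0, 255, 0), end_rgb=(255, 0, 0)):
    """Return n RGB tuples linearly interpolated from start_rgb to end_rgb."""
    if n <= 0:
        return []
    if n == 1:
        return [start_rgb]
    colors = []
    for i in range(n):
        t = i / (n - 1)  # 0.0 at the first cell, 1.0 at the last
        colors.append(
            tuple(round(s + (e - s) * t) for s, e in zip(start_rgb, end_rgb))
        )
    return colors


if __name__ == "__main__":
    # One color per path cell; a drawing library would paint each cell with its color.
    for index, color in enumerate(gradient_colors(5)):
        print(index, color)
```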

This example highlights how Kimi K2.5 seamlessly combines visual understanding, algorithmic reasoning, and code execution to solve problems autonomously.

Task 2: Agent Swarm for Large-Scale Research

The task requires generating slide decks, research-style PDF documents, and structured spreadsheets that capture key insights. It reflects real-world research workflows where teams deliver the same findings in multiple formats for different audiences.

How Kimi K2.5 Agent Approaches It

- The agent first understands the research objective and expected outputs.

- It designs an end-to-end workflow covering research, synthesis, and document formatting.

- Relevant and trustworthy sources are identified and analyzed.

- Large volumes of information are processed while maintaining full contextual awareness.

- Insights are organized into a clear, structured framework.

- Using its tools, the agent generates multiple output formats:

  - Presentation-ready slides with a clear narrative

  - A structured research PDF suitable for formal documentation

  - A spreadsheet for analysis, reporting, and sharing

Output Review

- The slide deck follows a coherent storyline and is ready for presentation.

- The PDF serves as a concise yet comprehensive research document.

- The spreadsheet presents insights in a structured, analysis-friendly format.

- All outputs maintain consistent tone, accuracy, and structure across formats.
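
Consistency across formats is easiest to reason about when every output is rendered from one structured source. The sketch below shows that pattern on a toy scale, turning the same list of findings into CSV text and a plain-text slide outline. The findings are placeholders and the function names are hypothetical; this is not how the Kimi K2.5 agent produces its files.

```python
import csv
import io

# Placeholder findings; in practice these would come from the research step.
FINDINGS = [
    {"topic": "Example topic A", "insight": "Placeholder insight one"},
    {"topic": "Example topic B", "insight": "Placeholder insight two"},
]


def to_csv(findings):
    """Render the findings as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=["topic", "insight"], lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(findings)
    return buffer.getvalue()


def to_slide_outline(findings):
    """Render the same findings as a one-slide-per-topic text outline."""
    return "\n".join(
        f"Slide {number}: {item['topic']}\n  - {item['insight']}"
        for number, item in enumerate(findings, start=1)
    )


if __name__ == "__main__":
    print(to_csv(FINDINGS))
    print(to_slide_outline(FINDINGS))
```

Because both renderers read the same records, a correction made once in the source shows up in every format.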

This demonstration highlights Kimi K2.5’s ability to deliver complete knowledge assets, rather than isolated text responses.

Kimi K2.5 vs Other Models

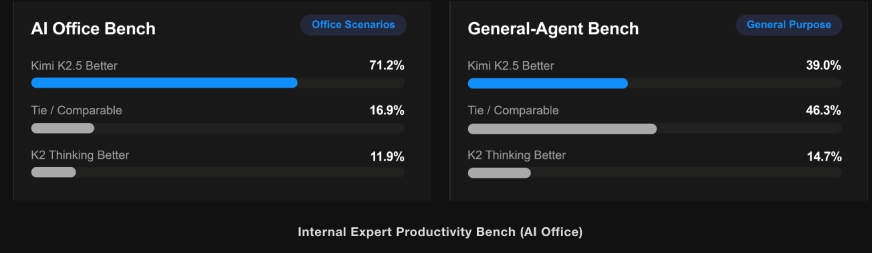

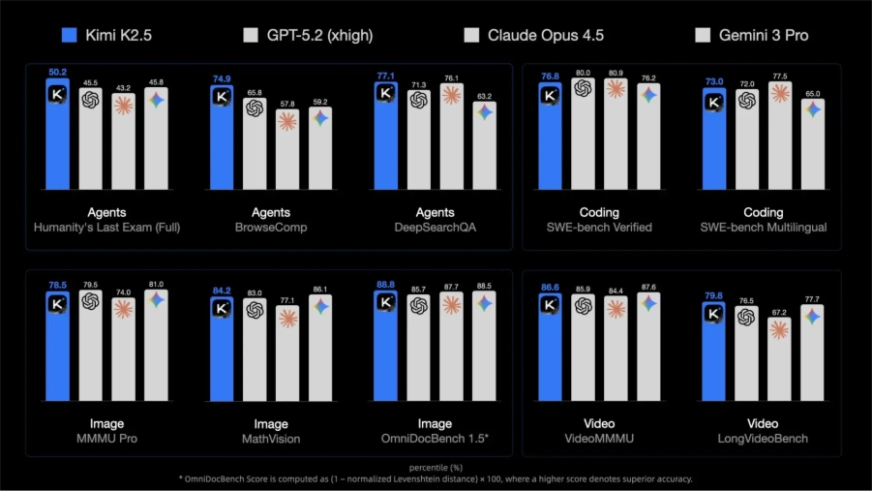

Kimi K2.5 delivers strong, reliable performance across benchmarks. Key results include:

- HLE-Full, AIME 2025, and GPQA-Diamond show competitive scores, with noticeable gains when tool-augmented reasoning is enabled.

- MMMU-Pro, OmniDocBench 1.5, OCRBench, and VideoMMMU highlight robust image, document, and video understanding.

- SWE-Bench Verified and SWE-Bench Multilingual confirm dependable performance on debugging, refactoring, and end-to-end development tasks.

- BrowseComp and DeepSearchQA show significant improvements due to Agent Swarm’s parallel execution, reducing latency on complex search tasks.

Overall, Kimi K2.5 performs competitively against GPT-5.2, Claude Opus 4.5, Gemini 3 Pro, and DeepSeek V3.2, while standing out in multimodal reasoning and scalable agentic workflows.

Conclusion

Kimi K2.5 represents a meaningful shift in open-source AI. By treating agentic intelligence, parallel execution, and multimodal reasoning as first-class capabilities, it moves beyond static model behavior toward real-world execution. Its design enables vision-based coding and large-scale, coordinated agent workflows in practical settings.

More than a routine model release, Kimi K2.5 offers developers, researchers, and organizations a clear view of what autonomous AI systems can become: machines that reason, act, and collaborate with humans across complex, large-scale workflows.