The artificial intelligence revolution is no longer on the horizon; it is here, disrupting industries and creating new career opportunities. AI job postings increased by 56.1% in 2025, and workers with AI skills command a 56% wage premium, so there has never been a better time to learn AI. This guide lays out a detailed seven-step process to take you from complete novice to AI-fluent practitioner in 2025.

Why AI Skills Are Essential in 2025

Demand for AI professionals has grown sharply. As per “The 2025 AI Index Report” by Stanford University, AI fluency is becoming a baseline requirement across job roles; in 2024, 78% of organizations reported using AI in their activities, up from 55% in 2023. AI technologies increase productivity, and productivity growth in AI-exposed sectors has nearly quadrupled since 2022. AI skills are now a basic requirement, not just a nice-to-have, for a successful career.

Key market signals point to rapid growth in AI:

- Mentions of AI in US job postings rose 56.1% in 2025
- Productivity growth in AI-exposed industries has nearly quadrupled
- Revenue per worker is 3x higher in AI-exposed industries than in industries without AI
- AI skill penetration is highest in India, which also has the world's second-largest AI talent pool

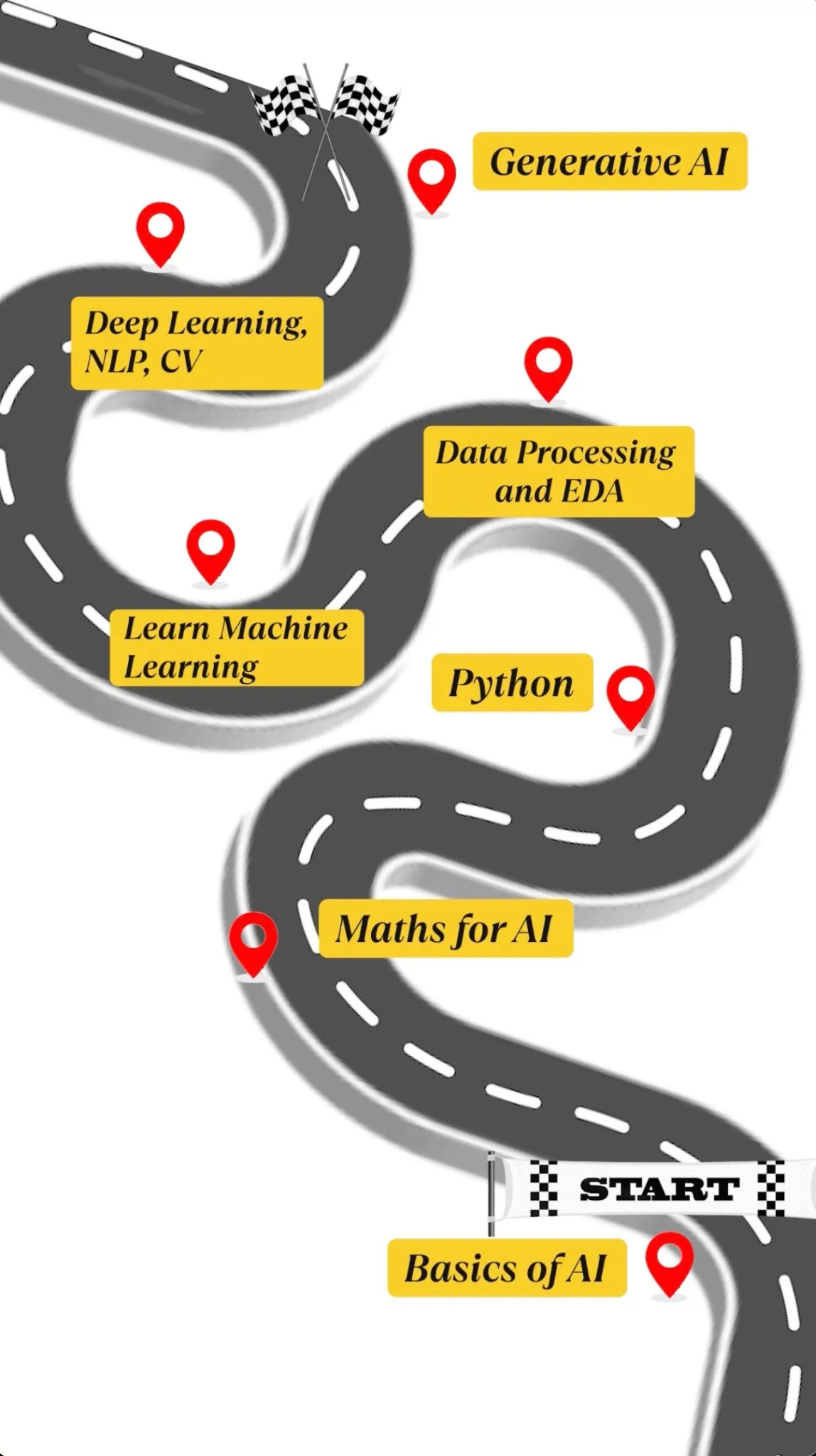

7 Step Roadmap to Master AI

The seven steps below build your fundamentals and take you from novice to AI master.

Step 1: Understanding AI fundamentals

AI is conceptually demanding, so it is essential to build a strong conceptual foundation first. Artificial Intelligence is much more than algorithms; it is about understanding intelligent systems in terms of information processing, learning from data, and decision-making that affects the real world. Some of the concepts to learn are:

Artificial Intelligence, Machine Learning, & Deep Learning

It’s essential to understand how Artificial Intelligence (AI) encompasses the broader goal of building intelligent systems, whereas Machine Learning (ML) is a subset focused on data-driven learning methods, and Deep Learning (DL) is a further subfield of ML using multi-layered neural networks. Grasping this hierarchy clarifies the technology stack and research directions.

The Three Main Learning Paradigms

A solid foundation in machine learning rests on three core paradigms. Supervised learning maps inputs to known outputs using labeled data. Unsupervised learning uncovers hidden patterns or clusters in unlabeled data. Reinforcement learning teaches agents by trial and error, rewarding good actions and penalizing poor ones. Understanding these distinctions is key to seeing how each method tackles different real-world problems.
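
To make trial-and-error learning concrete, here is a minimal sketch of an epsilon-greedy agent on a two-armed bandit. The reward values, the function name `run_bandit`, and its parameters are illustrative assumptions, and rewards are kept deterministic so the behaviour is easy to follow.

```python
import random

# Hypothetical environment: pulling arm i always pays REWARDS[i].
REWARDS = [0.2, 1.0]

def run_bandit(steps=200, epsilon=0.1, seed=0):
    """Epsilon-greedy agent: mostly exploit the best-known arm, sometimes explore."""
    rng = random.Random(seed)
    counts = [0] * len(REWARDS)       # how often each arm was pulled
    estimates = [0.0] * len(REWARDS)  # running average reward per arm

    for step in range(steps):
        if step < len(REWARDS):
            arm = step                                # try every arm once first
        elif rng.random() < epsilon:
            arm = rng.randrange(len(REWARDS))         # explore a random arm
        else:
            arm = max(range(len(REWARDS)), key=lambda a: estimates[a])  # exploit
        reward = REWARDS[arm]
        counts[arm] += 1
        # Incremental mean update of the value estimate.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts

estimates, counts = run_bandit()
best_arm = max(range(len(estimates)), key=lambda a: estimates[a])
print(best_arm, estimates)
```

The agent is never told which arm is better; it discovers that from the rewards it collects.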

Real-World Applications and Impact

Learn how AI impacts diverse fields such as healthcare (medical diagnostics), finance (fraud detection), transportation (autonomous vehicles), entertainment (recommendation systems), and more. This shows the scope and transformative power of AI in modern industries.

Ethical AI Principles

Study bias, fairness, transparency, privacy, and responsible development practices. Ethical frameworks are critical as AI becomes embedded in decision-making that directly impacts individuals, requiring careful attention to these principles.

Step 2: Mathematical Foundations

Mathematics forms the backbone of AI, and while it might seem intimidating, you only need to focus on a few core areas of mathematics to master AI. These are:

Linear Algebra

Linear algebra is essential for many machine learning algorithms, providing the tools to manipulate and process data represented as vectors and matrices. Key concepts include vectors and matrices, matrix operations, linear transformations, and dimensionality reduction.
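
As a small illustration of matrix operations and linear transformations, the sketch below implements them in plain Python. The helper names (`mat_vec`, `mat_mul`, `rotation`) are made up for this example; in practice you would likely reach for NumPy.

```python
import math

def mat_vec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

def mat_mul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]

def rotation(theta):
    """2x2 matrix that rotates the plane counter-clockwise by theta radians."""
    return [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta),  math.cos(theta)]]

quarter_turn = rotation(math.pi / 2)
rotated = mat_vec(quarter_turn, [1.0, 0.0])       # approximately [0, 1]
half_turn = mat_mul(quarter_turn, quarter_turn)   # composing two transformations
print(rotated, mat_vec(half_turn, [1.0, 0.0]))    # second is approximately [-1, 0]
```

Multiplying the two rotation matrices gives the matrix of the combined rotation, which is the sense in which matrix multiplication composes linear transformations.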

Probability and Statistics

Understanding uncertainty and data analysis is crucial for evaluating AI model performance and making decisions. Key concepts include probability distributions, Bayesian thinking, hypothesis testing, and statistical inference.
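
Bayesian thinking is easiest to see in a worked example. The sketch below applies Bayes' theorem to a hypothetical diagnostic test; the prevalence, sensitivity, and false-positive rate are invented numbers chosen only for illustration.

```python
def posterior(prior, sensitivity, false_positive_rate):
    """P(condition | positive test) via Bayes' theorem."""
    true_pos = sensitivity * prior
    false_pos = false_positive_rate * (1 - prior)
    return true_pos / (true_pos + false_pos)

# Hypothetical numbers: 1% prevalence, 95% sensitivity, 5% false positives.
p = posterior(prior=0.01, sensitivity=0.95, false_positive_rate=0.05)
print(round(p, 3))  # 0.161
```

Even with a fairly accurate test, a positive result here implies only about a 16% chance of having the condition, because the condition is rare to begin with.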

Step 3: Python Programming Excellence

Python has emerged as the primary language for AI development, and mastering it is non-negotiable in your journey to AI mastery. The language’s simplicity, combined with powerful libraries, makes Python ideal for AI applications.

Fundamental Python Skills

Start with the basics of Python: variables, data types, and control structures. Then progress to object-oriented programming with classes, inheritance, and encapsulation. Next, focus on error handling through debugging and exception management. Finally, master file I/O by reading, writing, and processing data files. These are the foundational Python skills for AI applications.
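
The short sketch below touches each of these skills at once: a class, exception handling, and file I/O. The `Dataset` class, the `value` column, and the sample file are hypothetical and exist only for this example.

```python
import csv
import os
import tempfile

class Dataset:
    """Tiny container that loads numeric rows from a CSV file."""

    def __init__(self, rows):
        self.rows = rows

    @classmethod
    def from_csv(cls, path):
        rows = []
        with open(path, newline="") as handle:
            for record in csv.DictReader(handle):
                try:
                    rows.append(float(record["value"]))
                except ValueError:
                    # Skip cells that are not valid numbers instead of crashing.
                    continue
        return cls(rows)

    def mean(self):
        if not self.rows:
            raise ValueError("dataset is empty")
        return sum(self.rows) / len(self.rows)

# Write a small file, then read it back.
path = os.path.join(tempfile.mkdtemp(), "sample.csv")
with open(path, "w", newline="") as handle:
    handle.write("value\n1.5\noops\n2.5\n")

data = Dataset.from_csv(path)
print(data.rows, data.mean())  # [1.5, 2.5] 2.0
```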

Essential AI Libraries

The real power of Python for AI development is in its ecosystem of specialized libraries: NumPy, Pandas, Matplotlib/Seaborn, Scikit-learn, TensorFlow/PyTorch.

Step 4: Data Processing and Exploratory Data Analysis

Data fuels every intelligent system, but raw data is messy and needs careful pre-processing. This step focuses on mastering the core skills of data cleaning, visualization, and analysis to make data usable.

Cleaning and Preparing Data

The process begins with data cleaning and preparation, including handling missing values to avoid gaps in information. Outlier treatment follows, where anomalous points are identified and corrected. Finally, data normalization ensures features are scaled properly for better model performance.
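
The three steps above can be sketched on a single numeric column. This version uses only the standard library, with median imputation, clipping to the 1.5 × IQR fences, and min-max scaling as assumed choices; other strategies are equally valid, and in practice you would probably use Pandas or Scikit-learn.

```python
import statistics

raw = [12.0, None, 15.0, 14.0, 13.0, 200.0, None, 16.0]

# 1. Impute missing values with the median of the observed values.
observed = [x for x in raw if x is not None]
median = statistics.median(observed)
filled = [median if x is None else x for x in raw]

# 2. Clip outliers to the 1.5 * IQR fences.
q1, _, q3 = statistics.quantiles(filled, n=4)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
clipped = [min(max(x, low), high) for x in filled]

# 3. Min-max normalize to the range [0, 1].
lo, hi = min(clipped), max(clipped)
normalized = [(x - lo) / (hi - lo) for x in clipped]
print(normalized)
```

Clipping is only one way to treat outliers; depending on the data, removing them or investigating their cause may be more appropriate.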

Feature Engineering

Here, raw inputs are transformed into meaningful variables that help models capture deeper relationships. Strong feature engineering often makes the difference between an average and a high-performing model.
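
As a small example, the sketch below derives three features from a raw order record. The record fields (`placed_on`, `total`, `items`) and the `engineer` function are hypothetical.

```python
from datetime import date

def engineer(order):
    """Turn a raw order record into model-friendly features."""
    placed = date.fromisoformat(order["placed_on"])
    return {
        "day_of_week": placed.weekday(),            # 0 = Monday
        "is_weekend": int(placed.weekday() >= 5),
        "price_per_item": order["total"] / order["items"],
    }

features = engineer({"placed_on": "2025-03-08", "total": 60.0, "items": 4})
print(features)
```

A model cannot easily learn "weekend" from a date string, but it can use an explicit `is_weekend` flag directly.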

Exploratory Data Analysis (EDA)

EDA is about understanding data through visualization and statistics. It starts with univariate analysis of single variables, moves to bivariate analysis of relationships, and expands into correlation analysis to uncover linear and non-linear associations. This step reveals patterns and hidden insights within the dataset.
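
For the correlation step, a minimal sketch of the Pearson coefficient is shown below. The `hours` and `score` values are invented sample data. Note that Pearson's coefficient measures linear association only, so non-linear relationships need other tools such as scatter plots or rank correlation.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

hours = [1, 2, 3, 4, 5]
score = [52, 55, 61, 64, 70]
print(round(pearson(hours, score), 3))
```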

Applying Knowledge in Real Projects

Platforms like Kaggle offer hands-on experience. You can practice on diverse datasets, from business to scientific research, engage with the community through shared notebooks, and participate in competitions to test your skills. A strong Kaggle portfolio demonstrates your ability to process and analyze real-world data effectively.

Step 5: Machine Learning Mastery

Machine learning is at the heart of modern AI applications. In this step, you will learn the theoretical underpinnings as well as practical implementations of machine learning algorithms.

Supervised Learning

Supervised algorithms learn from labeled data. Begin with linear and logistic regression to understand relationships and probabilities, then explore decision trees for straightforward classification and regression tasks. Move on to random forests, which use ensemble methods to enhance prediction accuracy, and finally, study support vector machines, powerful approaches for tackling complex classification problems.
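
To show what learning from labeled data means in the simplest case, here is a sketch of one-variable linear regression fitted by ordinary least squares. The `fit_line` helper and the toy data are assumptions for illustration; Scikit-learn provides production-ready equivalents.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Labeled training data generated from y = 2x + 1.
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)          # 2.0 1.0
print(slope * 10 + intercept)    # prediction for an unseen input: 21.0
```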

You will then progress to advanced techniques such as bagging and boosting, which improve accuracy by combining multiple models. Cross-validation helps detect overfitting and checks that your models generalize well. Hyperparameter tuning optimizes model performance, while variable selection focuses on choosing the most relevant features for prediction.
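
Cross-validation is mostly bookkeeping: split the data into k folds and let each fold serve once as the validation set. The sketch below shows just that splitting logic; `k_fold_indices` is a hypothetical helper, and it assumes the data has already been shuffled.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size

for train, validation in k_fold_indices(n_samples=7, k=3):
    print(train, validation)
```

You would train a model on each `train` split, score it on the matching `validation` split, and average the k scores.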

Unsupervised Learning

With unsupervised learning, you’ll work with methods designed to process unlabeled data, which makes up most real-world information. Techniques include clustering with algorithms like k-means, hierarchical clustering, and DBSCAN; dimensionality reduction using PCA, t-SNE, and UMAP; and association rule mining for market basket analysis and recommendations. You’ll also use anomaly detection to find unusual patterns in your data. Scikit-learn serves as the key library for efficiently implementing these approaches.
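
To show what a clustering algorithm actually does, here is a from-scratch sketch of k-means (Lloyd's algorithm) on one-dimensional points. The function name, the sample points, and the fixed starting centroids are assumptions for this example; Scikit-learn's implementation handles initialisation and higher dimensions for you.

```python
def k_means_1d(points, centroids, iterations=10):
    """Lloyd's algorithm on 1-D points, starting from the given centroids."""
    centroids = list(centroids)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

points = [1.0, 1.5, 2.0, 9.0, 10.0, 11.0]
centroids, clusters = k_means_1d(points, centroids=[0.0, 5.0])
print(centroids)  # [1.5, 10.0]
```

No labels are involved: the two groups emerge purely from the distances between points.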

Step 6: Deep Learning and Neural Networks

Deep learning is the more advanced branch of machine learning, powering applications from image recognition to natural language processing. This step covers neural network architectures and their specific uses.

Neural Network Basics

At its core, deep learning begins with perceptrons, the simplest units of a neural network. Stack them up, and you get multi-layered networks powered by activation functions like ReLU or sigmoid that add flexibility. Learning happens through backpropagation, where errors are traced backwards and weights updated using gradient descent. Loss functions guide the process by measuring how far predictions stray from reality, keeping the model on track.
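
The loop of forward pass, error, gradient, and weight update can be seen in a single sigmoid neuron. The sketch below trains one on the logical OR function using full-batch gradient descent; the cross-entropy loss, learning rate, and step count are assumptions chosen for this toy problem. A real network repeats the same idea across many layers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Training data for logical OR: inputs and target outputs.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
Y = [0, 1, 1, 1]

w1 = w2 = b = 0.0
learning_rate = 1.0

for _ in range(5000):
    grad_w1 = grad_w2 = grad_b = 0.0
    for (x1, x2), y in zip(X, Y):
        pred = sigmoid(w1 * x1 + w2 * x2 + b)   # forward pass
        error = pred - y                         # gradient of cross-entropy loss w.r.t. pre-activation
        grad_w1 += error * x1                    # chain rule back to each weight
        grad_w2 += error * x2
        grad_b += error
    # Gradient descent step on the averaged gradients.
    w1 -= learning_rate * grad_w1 / len(X)
    w2 -= learning_rate * grad_w2 / len(X)
    b -= learning_rate * grad_b / len(X)

predictions = [round(sigmoid(w1 * x1 + w2 * x2 + b)) for x1, x2 in X]
print(predictions)  # [0, 1, 1, 1]
```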

Specialised Neural Networks

Different problems demand different architectures. CNNs dominate image tasks and computer vision. RNNs handle sequential data like time series, with LSTMs extending their memory to capture long-term dependencies. But the real leap forward is Transformers, now the backbone of advanced AI. Their ability to process data in parallel makes them unmatched for translation, text generation, and even complex financial predictions.

Natural Language Processing (NLP)

For machines to understand human language, text first goes through pre-processing like tokenisation, stemming, and lemmatisation. Words are then embedded as vectors, letting models capture their meaning and relationships. Sequence-to-sequence models power translation and text generation, while attention mechanisms help models focus on the most important parts of the input. Tools like TensorFlow and PyTorch make all this practical, with TensorFlow often preferred for deployment and PyTorch favoured in research.
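
The attention idea can be sketched in a few lines. The example below computes scaled dot-product attention for a single query in plain Python; the vectors are made-up two-dimensional toy values, and real models run the same computation over large batches of learned embeddings.

```python
import math

def softmax(scores):
    peak = max(scores)                       # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values))
              for i in range(len(values[0]))]
    return weights, output

keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention([4.0, 0.0], keys, values)
print([round(w, 3) for w in weights])
```

The query points in the same direction as the first key, so the first value receives the largest weight.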

Step 7: Generative AI and Advanced Applications

This final step brings you into the frontier of AI – the technologies that are rewriting what machines can do. We’re talking about large language models (LLMs), retrieval-augmented generation (RAG), and AI agents: the engines behind the current AI revolution. These aren’t just academic experiments anymore; they’re tools reshaping industries, businesses, and workflows in real time.

Large Language Models (LLMs)

LLMs like GPT stand at the centre of today’s AI boom. Based on transformer architectures, they learn language through massive pre-training and then adapt to specific tasks with fine-tuning. The hottest skill here is prompt engineering – knowing how to craft instructions that unlock the model’s potential. But with great power comes responsibility, so careful evaluation and safety checks are vital to ensure outputs remain trustworthy.

Retrieval-Augmented Generation (RAG)

RAG takes LLMs further by linking them to external knowledge bases. Instead of answering purely from memory, the system fetches information in real time from vector databases and embeddings, then blends it with generated text. The result is a model that not only reasons but also grounds its responses in facts – crucial for accuracy in professional use cases.
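
The retrieval half of RAG can be illustrated without any external services. In the sketch below, word counts stand in for learned embeddings and a Python list stands in for a vector database; the documents, function names, and prompt wording are all hypothetical, and the final LLM call is left out.

```python
import math
from collections import Counter

DOCUMENTS = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
    "Premium plans include priority support.",
]

def embed(text):
    """Toy embedding: word counts. Real systems use learned dense vectors."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())

def cosine(a, b):
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(question, k=1):
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(DOCUMENTS, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(question):
    """Prepend retrieved context so the model can ground its answer."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

print(build_prompt("How long are refunds processed?"))
```

The resulting prompt would then be sent to an LLM, which answers from the supplied context rather than from memory alone.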

AI Agents

If LLMs answer, agents act. These systems bring planning, reasoning, and execution together, often tying into APIs or coordinating with multiple agents to complete complex workflows. Their value lies in autonomy – running tasks end-to-end – but their risk lies in unpredictability, which is why safety measures and oversight are essential. Frameworks like LangChain, LangGraph, and CrewAI are already shaping this new frontier.
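
The plan-then-act loop behind an agent can be sketched with a toy example. Everything below is hypothetical: `plan` is a hard-coded stand-in for an LLM planner, `TOOLS` stands in for real API wrappers, and the step cap is one very basic form of oversight. It does not reflect how LangChain, LangGraph, or CrewAI are implemented.

```python
# Hypothetical tools the agent may call; real agents would wrap APIs here.
TOOLS = {
    "add": lambda a, b: a + b,
    "multiply": lambda a, b: a * b,
}

def plan(goal):
    """Stand-in for an LLM planner: returns a fixed list of tool calls.

    Each step names a tool and its arguments; "$prev" refers to the
    previous step's result.
    """
    if goal == "compute (2 + 3) * 4":
        return [("add", (2, 3)), ("multiply", ("$prev", 4))]
    return []

def run_agent(goal, max_steps=5):
    """Execute the plan step by step, with a step cap as a basic safety limit."""
    result, log = None, []
    for step, (tool, args) in enumerate(plan(goal)):
        if step >= max_steps:
            log.append("stopped: step limit reached")
            break
        if tool not in TOOLS:
            log.append(f"stopped: unknown tool {tool!r}")
            break
        resolved = [result if a == "$prev" else a for a in args]
        result = TOOLS[tool](*resolved)
        log.append(f"{tool}{tuple(resolved)} -> {result}")
    return result, log

result, log = run_agent("compute (2 + 3) * 4")
print(result, log)
```

Keeping a log of every tool call and refusing unknown tools are simple examples of the oversight that autonomous systems need.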

The Road Ahead

The rise of agentic AI signals a shift toward systems that don’t just respond but anticipate, strategise, and execute. With trends like inference-time compute, where models can “pause to think” before giving an answer, 2025 is pushing AI closer to machines that reason like us, but at machine speed.

Conclusion

The AI revolution is generating millions of new jobs while reshaping current roles across virtually every industry. If you follow this roadmap and stay focused on lifelong learning, you will be in the right place to exploit these opportunities and build a successful career in artificial intelligence.

AI enhances, but does not replace, human capability. The professionals who will thrive are those who combine technical AI skills with the human attributes of creativity, critical thinking, and domain expertise. Your journey to master AI begins with step one: pick your starting point based on your current knowledge and start learning today.