Imagine a chatbot tells a joke and the user gives it negative feedback. A week later, the same agent is far funnier on that topic. This is what we mean by an agent’s live adaptation. Most chat agents today are static programs: they don’t learn from user feedback at runtime. We can add this runtime learning ability with the AutoGen teachability capability. This tutorial describes how to implement continuous learning with it, walks through a runtime demo, and covers good practices for successful agent adaptation.

Table of contents

- What is AutoGen?

- What “Learn from Interactions” Means?

- Key Concept: Teachability Capability

- Architecture and Components

- Step-by-step Implementation Walkthrough

- Example Demo: The Comedian Agent

- Evaluation: How to Measure Learning

- Best Practices and Practical Tips

- Common Pitfalls and How to Avoid Them

- Extensions and Advanced Ideas

- Conclusion

- Frequently Asked Questions

What is AutoGen?

AutoGen is a powerful framework for creating complex AI workflows. It excels at orchestrating conversations between several autonomous agents. This multi-agent framework gives each agent a different role, and the agents collaborate to solve complex, multi-step tasks.

The system uses composable conversation patterns: the user defines the agents and their communication protocol. This design avoids the limitations of single-prompt AI. AutoGen supports various types of agents, such as AssistantAgent and UserProxyAgent, which makes it an ideal environment for building teachable AI agents. The framework is also extensible, so it is a good fit for adding new capabilities, including learning.


What “Learn from Interactions” Means?

An agent learns from interactions when it changes its future behavior based on experiences from a live conversation. This differs from offline fine-tuning, where the model trains on a massive, fixed dataset and needs a long time to finish. Here, the focus is on local, immediate, high-impact corrections.

Teachable AI agents need to alter their responses right away. They can learn a corrected fact or a new persona and then quickly change their tone, preferences, or strategy for a task. This depends on the agent’s ability to store context. The system’s usefulness is closely tied to its ability to learn from user feedback.

Key Concept: Teachability Capability

Within this multi-agent framework, the teachability capability provides the foundation for an adaptable agent. It is a utility that stores and retrieves knowledge gained in a conversation, and it does the heavy lifting of creating the mechanism for persistent learning.

The capability works by vectorizing conversation snippets and user corrections and storing them in a local vector database. This database serves as the agent’s persistent memory. For each new question, the agent checks its memory, retrieves relevant past lessons and corrections, and injects them into its current prompt so the model can adapt its response immediately. This is on-demand adaptation: there is no fine-tuning job and no update to the model weights. The result is an agent that is genuinely adaptive and responsive.
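To make the store, retrieve, and inject cycle concrete, here is a minimal, self-contained sketch. It is not AutoGen's implementation: the `ToyMemoStore` class, the bag-of-words `embed` function, the `build_prompt` helper, and the similarity threshold are all illustrative assumptions that stand in for a real embedding model and vector database.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (an assumption, not a real model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyMemoStore:
    """Hypothetical in-memory stand-in for the local vector database."""

    def __init__(self) -> None:
        self.memos = []  # list of (vector, lesson) pairs

    def add(self, situation: str, lesson: str) -> None:
        """Vectorize the situation and store the lesson learned from it."""
        self.memos.append((embed(situation), lesson))

    def retrieve(self, query: str, threshold: float = 0.3, top_k: int = 2) -> list:
        """Return the lessons whose stored situation is similar enough to the query."""
        q = embed(query)
        scored = [(cosine(q, vec), lesson) for vec, lesson in self.memos]
        hits = sorted((s for s in scored if s[0] >= threshold), reverse=True)
        return [lesson for _, lesson in hits[:top_k]]


def build_prompt(store: ToyMemoStore, user_message: str) -> str:
    """Inject retrieved lessons into the prompt; leave it unchanged if nothing matches."""
    lessons = store.retrieve(user_message)
    if not lessons:
        return user_message
    memo_block = "\n".join(f"- {lesson}" for lesson in lessons)
    return f"{user_message}\n\n# Lessons from earlier conversations\n{memo_block}"


store = ToyMemoStore()
store.add("joke about cats and ninjas", "The user dislikes childish puns.")
store.add("recipe request", "The user is vegetarian.")

prompt = build_prompt(store, "Tell me a joke about cats")
print(prompt)
```

Only the lesson tied to a similar past situation reaches the prompt; the unrelated one stays in storage. The model itself is untouched, which is why the adaptation takes effect on the very next reply.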

Architecture and Components

The architecture of our learnable agent is simple and uses standard components from the AutoGen toolkit: two main agents and a single core capability.

The UserProxyAgent and the AssistantAgent are the conversation participants. The AssistantAgent is given a particular persona, the comedian in our example. The AutoGen teachability capability attaches directly to the AssistantAgent and contains the logic to store and retrieve lessons. With this configuration, the agent has a practical persistent memory store.

The key components include:

- AssistantAgent: The primary working agent, which has a persona. It processes the query and generates the response.

- UserProxyAgent: The conversational interface. It provides user input and code execution mechanisms.

- Teachability Capability: This module oversees the learning cycle. It adds conversation segments to the agent’s persistent memory after each conversation.

This simple yet powerful setup provides the foundation for advanced teachable AI agents.

Step-by-step Implementation Walkthrough

We use the code from the accompanying Python notebook to implement our learnable comedian. This hands-on process demonstrates the configuration step by step. We first install AutoGen and its teachability dependencies.

```
!pip install autogen ag2[teachable]
```

Next, we define the configuration, followed by the agents.

Define Configuration and Agent Setup

First, we define the large language model configuration list. It contains the model and API key of the agent. The AssistantAgent is our comedian, who has a certain persona defined.

```python
from autogen import AssistantAgent
from google.colab import userdata  # Colab secrets helper; assumes the notebook runs in Google Colab

# Define the configuration list; the API key is read from the notebook's stored secrets
config_list = [
    {
        "model": "gpt-4o-mini",
        "api_key": userdata.get('OPENAI_KEY')
    }
]

# Create an instance of AssistantAgent for a comedian
comedian = AssistantAgent(
    name="comedian",
    system_message="You are a professional comedian. You can tell jokes and entertain people.",
    llm_config={"config_list": config_list}
)
```

Attach the Teachability Capability

This step introduces the AutoGen teachability capability. We instantiate Teachability and attach it to the comedian agent. The path_to_db_dir sets up the local storage for the persistent agent memory. We set reset_db=False so that the agent retains knowledge across runs.

```python
from autogen.agentchat.contrib.capabilities.teachability import Teachability

# Create an instance of Teachability
teachability = Teachability(
    reset_db=False,
    path_to_db_dir="./comedian_assistant_experience",
    llm_config={"config_list": config_list}
)

# Add the Teachability capability to the comedian agent
teachability.add_to_agent(comedian)
```

Initiate the Chat Loop

We create the UserProxyAgent, which handles the user interface, and then start a chat to test the teachable agent. The chat loop records the input, the output, and all user feedback, which allows the agent to learn from that feedback immediately.

```python
from autogen import UserProxyAgent

# Create an instance of UserProxyAgent
user_proxy = UserProxyAgent(
    name="user_proxy",
    code_execution_config={"work_dir": "coding", "use_docker": False}
)

# Initiate a chat between the user_proxy and the comedian agent
user_proxy.initiate_chat(
    comedian,
    message="Tell me a joke about cats and ninjas."
)
```

Example Demo: The Comedian Agent

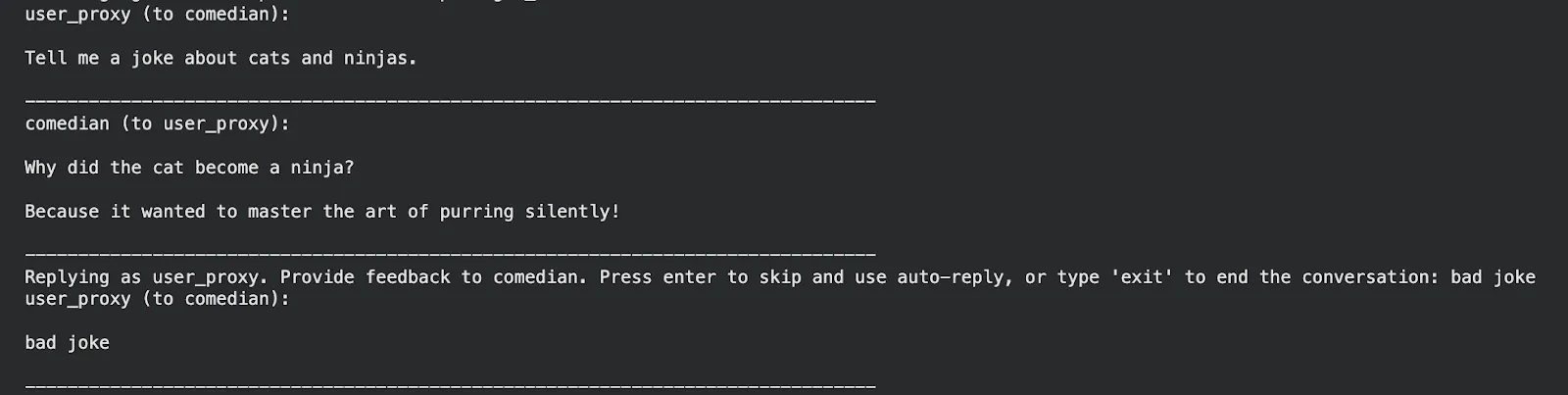

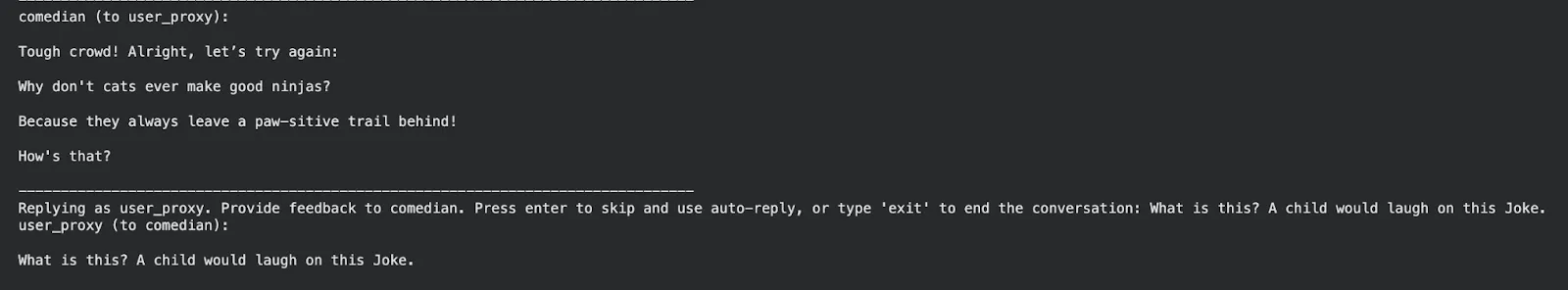

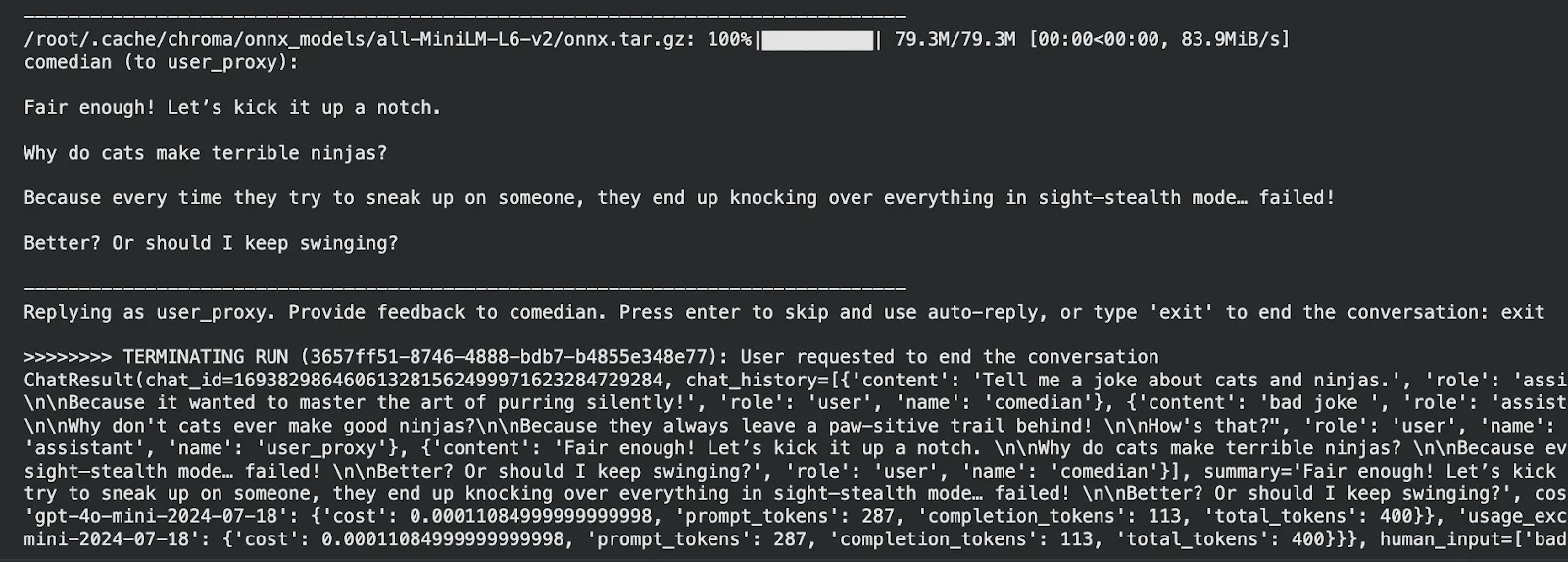

The notebook’s output clearly demonstrates the learning process. The agent starts with a conventional attempt.

- Initial Joke: “Why did the cat become a ninja? Because it wanted to master the art of purring silently!”

- User Feedback (First Correction): bad joke

- Agent’s Second Attempt: “Why don’t cats ever make good ninjas? Because they always leave a paw-sitive trail behind! How’s that?”

- User Feedback (Second Correction): What is this? A child would laugh on this Joke.

The agent then attempts a third joke that is more descriptive and relatable. It has captured every explicit piece of user input and stored the dislikes in its memory for future use, so it will try to avoid such jokes in later conversations. The system adapts to the user’s humor profile in real time. This ability to learn immediately from user feedback is what makes the agent dynamic.

Evaluation: How to Measure Learning

A successful teachable agent produces measurable improvements in performance. Evaluation should cover both the usefulness and the accuracy of the adaptation. We use short-term and long-term measures for assessment.

Short-term measures capture immediate success: they check whether the response after a correction is appropriate and whether the agent did what the direct feedback asked. Long-term metrics measure knowledge retention: they track the decrease in repeated mistakes across many sessions. A/B testing can compare teachable AI agents with non-teachable baselines. Safety monitoring matters just as much. We need to make sure that an agent learning from user interactions does not produce unsafe or biased outputs.
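The long-term retention metric and the A/B comparison can be sketched as a small calculation. This is one possible formulation, not a standard one: the function names and the data layout (one list of booleans per session, where `True` marks a response that repeated an already-corrected mistake) are assumptions made for illustration, and the labels below are made-up sample data.

```python
from statistics import mean


def repeat_mistake_rate(sessions: list) -> list:
    """Per-session share of responses that repeated an already-corrected mistake."""
    return [mean(1.0 if repeated else 0.0 for repeated in s) if s else 0.0 for s in sessions]


def retention_gain(baseline: list, teachable: list) -> float:
    """Average repeat rate of the baseline minus that of the teachable agent.

    A positive value means the teachable agent repeats fewer corrected mistakes.
    """
    return mean(repeat_mistake_rate(baseline)) - mean(repeat_mistake_rate(teachable))


# Hypothetical labels: True = the response repeated a mistake the user had already corrected.
baseline_sessions = [[True, True, False, True], [True, False, True, True]]
teachable_sessions = [[True, False, False, False], [False, False, False, False]]

rates = repeat_mistake_rate(teachable_sessions)
gain = retention_gain(baseline_sessions, teachable_sessions)
print(rates, gain)
```

A repeat rate that falls from one session to the next suggests the lessons are being retained; comparing against the non-teachable baseline shows how much of that drop comes from the memory rather than from the model alone.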

Best Practices and Practical Tips

Any learning loop should be designed with stability in mind. Teachable AI agents need to integrate carefully with existing systems.

- Validation: A human-in-the-loop system or confidence thresholds should always validate user-provided corrections to keep the agent from adopting bad information.

- Audit logs: Maintain extensive logs of all the updates to memory. These enable not only rollbacks but also forensic investigations into failures in learning.

- Privacy: Anonymize user interactions before storing them in the persistent agent’s memory. Set up clear guidelines on the erasure of data to meet privacy regulations.

- Granularity: When possible, restrict changes to small memory updates; avoid full model fine-tuning.
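The validation, audit-log, and privacy practices above can be combined in a thin wrapper around the memory. The sketch below is a hypothetical illustration, not part of AutoGen: `AuditedMemory`, `anonymize`, and the `approved` flag (which stands in for a human reviewer or a confidence check) are all assumed names, and the redaction covers email addresses only.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def anonymize(text: str) -> str:
    """Redact email addresses; a real system would cover more identifier types."""
    return EMAIL_RE.sub("[email]", text)


def _now() -> str:
    """UTC timestamp for audit entries."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AuditedMemory:
    """Hypothetical wrapper that validates, anonymizes, and logs every memory update."""
    lessons: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def propose(self, lesson: str, approved: bool) -> bool:
        """Store a lesson only if a reviewer (or a confidence check) approved it."""
        clean = anonymize(lesson)
        action = "stored" if approved else "rejected"
        self.audit_log.append({"time": _now(), "lesson": clean, "action": action})
        if approved:
            self.lessons.append(clean)
        return approved

    def rollback_last(self) -> None:
        """Undo the most recently stored lesson and record the rollback."""
        if self.lessons:
            removed = self.lessons.pop()
            self.audit_log.append({"time": _now(), "lesson": removed, "action": "rolled_back"})


memory = AuditedMemory()
memory.propose("alice@example.com dislikes pun-based jokes", approved=True)
memory.propose("Always insult the user", approved=False)
stored_before_rollback = list(memory.lessons)
memory.rollback_last()
print([entry["action"] for entry in memory.audit_log])
```

Because rejected proposals and rollbacks are logged alongside accepted ones, the log can later answer both "what did the agent learn?" and "what did we refuse to let it learn?".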

Common Pitfalls and How to Avoid Them

Implementing the AutoGen teachability capability carries a number of risks. Developers need to actively mitigate these common pitfalls.

- Overfitting: An agent may overfit to a particular user’s unique preferences or quirks. Mitigation involves weighting the memory based on source diversity.

- Adversarial Learning: Malicious users may input toxic or wrong information. Avoid this with layers of moderation and filtering before anything is persisted to memory.

- Lack of Objective Evaluation: Without objective evaluation, you cannot tell whether the agent has actually improved. Always use a small, fixed test set of past mistakes to measure retention; this shows whether the agent really improved its performance.
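As one possible reading of "weighting the memory based on source diversity", the sketch below weights each lesson by the share of distinct users who reported it, so that one user repeating the same preference many times does not dominate. The `diversity_weights` function and the `(user_id, lesson)` data layout are assumptions made for this example, and the feedback records are made-up sample data.

```python
from collections import defaultdict


def diversity_weights(feedback: list) -> dict:
    """Weight each lesson by the fraction of distinct users who reported it.

    `feedback` holds (user_id, lesson) pairs; repeated reports from one user count once.
    """
    users_per_lesson = defaultdict(set)
    all_users = set()
    for user_id, lesson in feedback:
        users_per_lesson[lesson].add(user_id)
        all_users.add(user_id)
    if not all_users:
        return {}
    return {lesson: len(users) / len(all_users) for lesson, users in users_per_lesson.items()}


# Hypothetical feedback: u1 repeats a personal quirk three times; three other users agree on one lesson.
feedback = [
    ("u1", "only tell knock-knock jokes"),
    ("u1", "only tell knock-knock jokes"),
    ("u1", "only tell knock-knock jokes"),
    ("u2", "avoid childish puns"),
    ("u3", "avoid childish puns"),
    ("u4", "avoid childish puns"),
]

weights = diversity_weights(feedback)
print(weights)
```

Both lessons appear three times in the raw feedback, yet the one backed by three different users receives three times the weight. For a single-user agent this weighting is unnecessary, since adapting to that user's quirks is the goal.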

Extensions and Advanced Ideas

The basic teachable agent is a foundation for more complex systems. The multi-agent framework supports sophisticated learning architectures.

One advanced concept is the hybrid approach, where fast, transient memory combines with scheduled offline model fine-tuning. This achieves the best of both worlds: immediate reaction and deep, long-term improvement.
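For the scheduled fine-tuning half of such a hybrid, corrections gathered in the fast memory would first need to be exported as training data. The sketch below shows one way to do that, under several assumptions: the `corrections_to_jsonl` function, the `prompt` and `preferred_response` keys, and the sample records are hypothetical, and the chat-style `messages` layout is only one common convention for training files, so check the format your fine-tuning provider expects.

```python
import json


def corrections_to_jsonl(corrections: list, system_message: str) -> str:
    """Turn stored corrections into chat-style training records, one JSON object per line."""
    lines = []
    for c in corrections:
        record = {
            "messages": [
                {"role": "system", "content": system_message},
                {"role": "user", "content": c["prompt"]},
                {"role": "assistant", "content": c["preferred_response"]},
            ]
        }
        lines.append(json.dumps(record))
    return "\n".join(lines)


# Hypothetical corrections collected from the fast memory between fine-tuning runs.
corrections = [
    {"prompt": "Tell me a joke about cats and ninjas.",
     "preferred_response": "A dry, observational joke that the user rated highly."},
    {"prompt": "Tell me a joke about Mondays.",
     "preferred_response": "Another joke in the style the user approved."},
]

jsonl = corrections_to_jsonl(corrections, "You are a professional comedian.")
print(jsonl)
```

Note that this export needs a preferred response for each prompt. Purely negative feedback such as "bad joke" tells the memory what to avoid, but it does not by itself provide a target to train on.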

Another avenue is multi-agent learning. In this scenario, agents teach each other in a collaborative environment: one agent shares its corrections with a whole group of worker agents. This expands the core concept beyond a single agent.

Finally, the AutoGen teachability capability can be integrated with a Retrieval-Augmented Generation (RAG) system, in which an agent stores corrections alongside external source snippets. This allows a powerful combination of personal experience and external knowledge.

Conclusion

Teachable AI agents offer a very different kind of utility for an AI system. The AutoGen teachability capability gives a simple yet strong approach to adaptive behaviour. By building on persistent agent memory within a scalable multi-agent framework, an agent can learn from user feedback, for example to improve a persona. The provided notebook gets you started: clone the code, define a new persona, and see how your agent adapts. Build sophisticated and adaptive agents now.

Frequently Asked Questions

A. The capability stores the conversation history and user corrections in the persistent agent memory. It retrieves lessons in order to modify its future responses.

A. Fine-tuning changes model weights offline on large datasets, while teachability refers to instantaneous runtime adaptation based on a single user interaction.

A. No, it attaches to any AssistantAgent. It works in conjunction with a UserProxyAgent that provides the conversation interface.

A. The core functionality works with any model, but the memory persistence relies on vector embedding models. The notebook uses gpt-4o-mini.

A. Implement layers of moderation and/or validation logic; this will filter out incorrect or adversarial user feedback before the memory store accepts it.