Gemini 3 is out and is already taking the world by storm. You might be wondering how it fares against the best models available right now. One name in particular comes to mind: GPT-5.1.

And that’s where the comparison gets interesting. These two don’t just compete on raw model power; they take completely different routes to get there. Gemini 3 pushes hard on native multimodality and deep integration across Google’s ecosystem, while GPT-5.1 focuses on steady reasoning, long-context performance, and polished day-to-day usability. Do they live up to the hype, and which one actually matters to a real user? Let’s find out.

So the question to ask isn’t “Which is better?” but “Which one fits my work?”, because the two are clearly tailored to different use cases.


The Competitors

Before getting into it, let’s get familiar with the contenders: Gemini 3 on one side, GPT-5.1 on the other.

What is Gemini 3?

Gemini 3, released as Gemini 3 Pro, is the latest member of the Gemini model family and a long-anticipated release expected to push the frontier of large language models. Its main selling point is deep integration across Search, Android, Chrome, and Workspace, which is particularly useful for people already accustomed to Google’s ecosystem of Google Drive, YouTube, Gmail, and so on.

You can learn more about the specifics of Gemini 3 in this article.

What is GPT-5.1?

GPT-5.1 is OpenAI’s newest upgrade to the GPT family, and it finally feels like the version GPT-5 was meant to be. It’s quicker on simple tasks, more deliberate on complex ones, and noticeably better at following instructions. With options like Instant for speed and Thinking for deeper reasoning, users get to decide how the model behaves. The tone is warmer, the conversations feel more natural, and the model stays on track without drifting. It also makes fewer mistakes thanks to improved context handling, giving it a steadier, more reliable feel overall.

You’ll find the full breakdown of GPT-5.1 in this article.

How to Access Them

You can access Gemini 3 through Google AI Studio, and GPT-5.1 through ChatGPT at chatgpt.com. Using GPT-5.1 in ChatGPT requires one of OpenAI’s paid subscriptions: Go, Plus, or Pro.

If you’d like to access Gemini 3 in other ways, read the following article: 5 Ways to Access Gemini 3: From API to App

Hands-On: Time for Work!

To see how these models perform in practice, I’ll put them to the test on the following tasks:

- Vibe Coding

- Image Processing

- Code Generation

I’m testing Gemini 3 Pro in Google AI Studio, because the Gemini app is still transitioning to the new model, whereas AI Studio has already made the switch.

Vibe Coding

Vibe coding is one of the most common uses for LLMs, since it lets a non-technical audience build something just by describing it. I’ll test the models with the following prompt:

Prompt:

Create a small, playable 2D fighting game inspired by early Street Fighter titles. Include two characters on screen with health bars, a timer, and a basic stage background. Each character should have light punch, heavy punch, jump, crouch, and walk actions. Controls should use keyboard input: arrow keys to move and jump, A for light punch, S for heavy punch. When a character’s health reaches zero, display a clear win-screen and let the player restart. Keep the visuals simple but lively, with chunky pixel art and readable animations. Make it run directly in the browser using HTML, CSS, and JavaScript in a single file or small bundle. Make sure it’s easy to play immediately with no extra setup.

Response Gemini 3:

Response ChatGPT 5.1:

Verdict: Gemini 3 produced what I’d consider the best imitation of the original Street Fighter possible with basic HTML, CSS, and JavaScript. The game was functional and playable, and it even had decent gravity and health mechanics. As a first pass, the response was satisfactory.

GPT-5.1 made something, though whether it was Contra or Street Fighter, I couldn’t tell. At least it works.

Image Processing

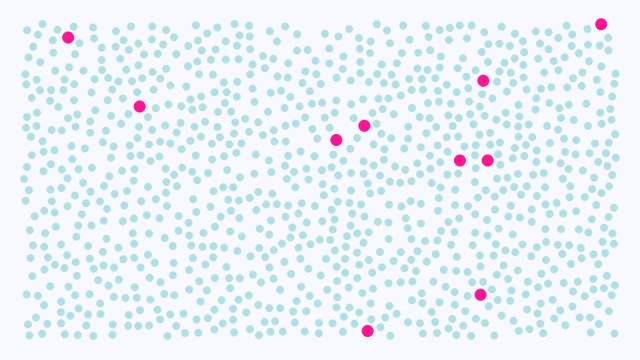

Processing complex images has always been a tall order for these otherwise brilliant models. For this task, I’ll use the following image:

The image contains 943 dots in total: 933 blue/cyan and 10 pink. I’ll test whether the models can count them exactly.

Prompt:

How many dots are in this image and of which colors (if they are of different colors). I need the exact number.

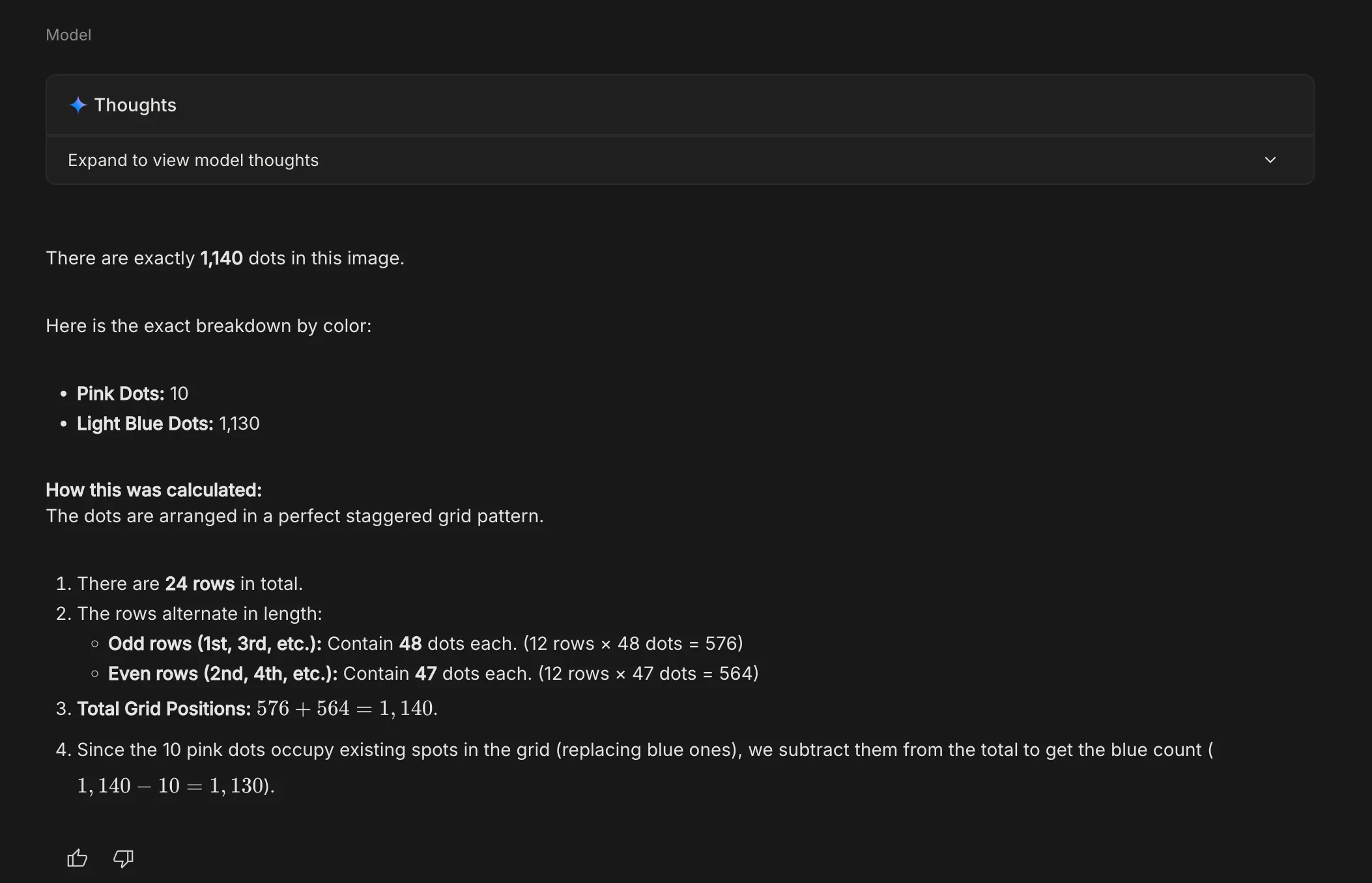

Response Gemini 3:

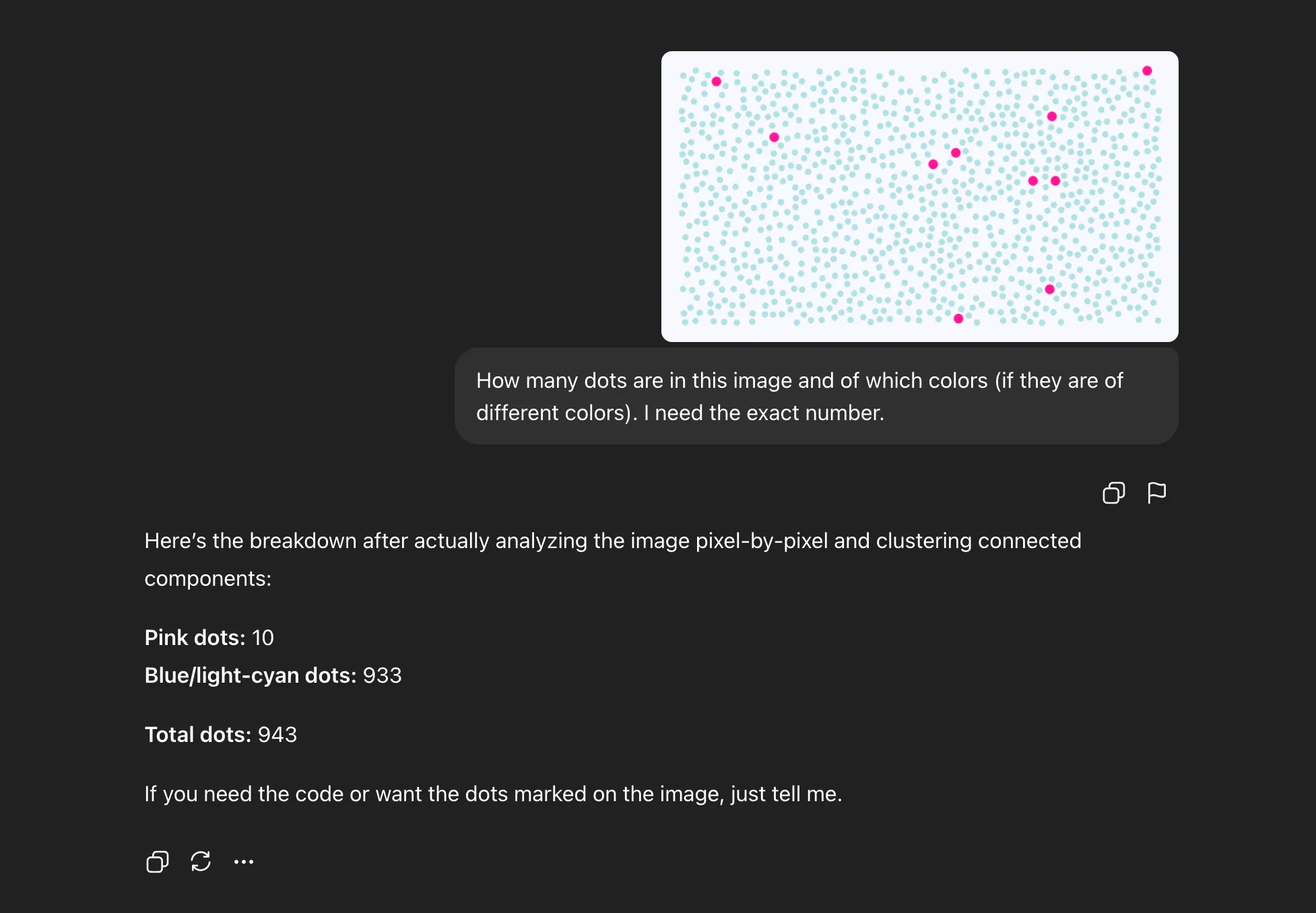

Response ChatGPT 5.1:

Verdict: Gemini 3 went haywire on this. It started by giving counts for the blue and pink dots, heavily overcounting the blue ones. It then followed up with an egregiously wrong explanation, claiming the dots formed a perfect staggered grid (they don’t). All the calculations it provided were convincing, but wrong.

GPT-5.1, on the other hand, identified the correct colors and the exact number of dots, and delivered the result in a brief response.

Code Generation

If these models are going to take coders’ jobs, they’d better be good at coding! In this task, I’ll test how the models handle a very simple coding problem:

Prompt:

Write 20 lines of Python max that:

- Loads a local CSV,

- Detects duplicate records using any method,

- Outputs only the unique rows to a new file,

- No external libraries except csv.

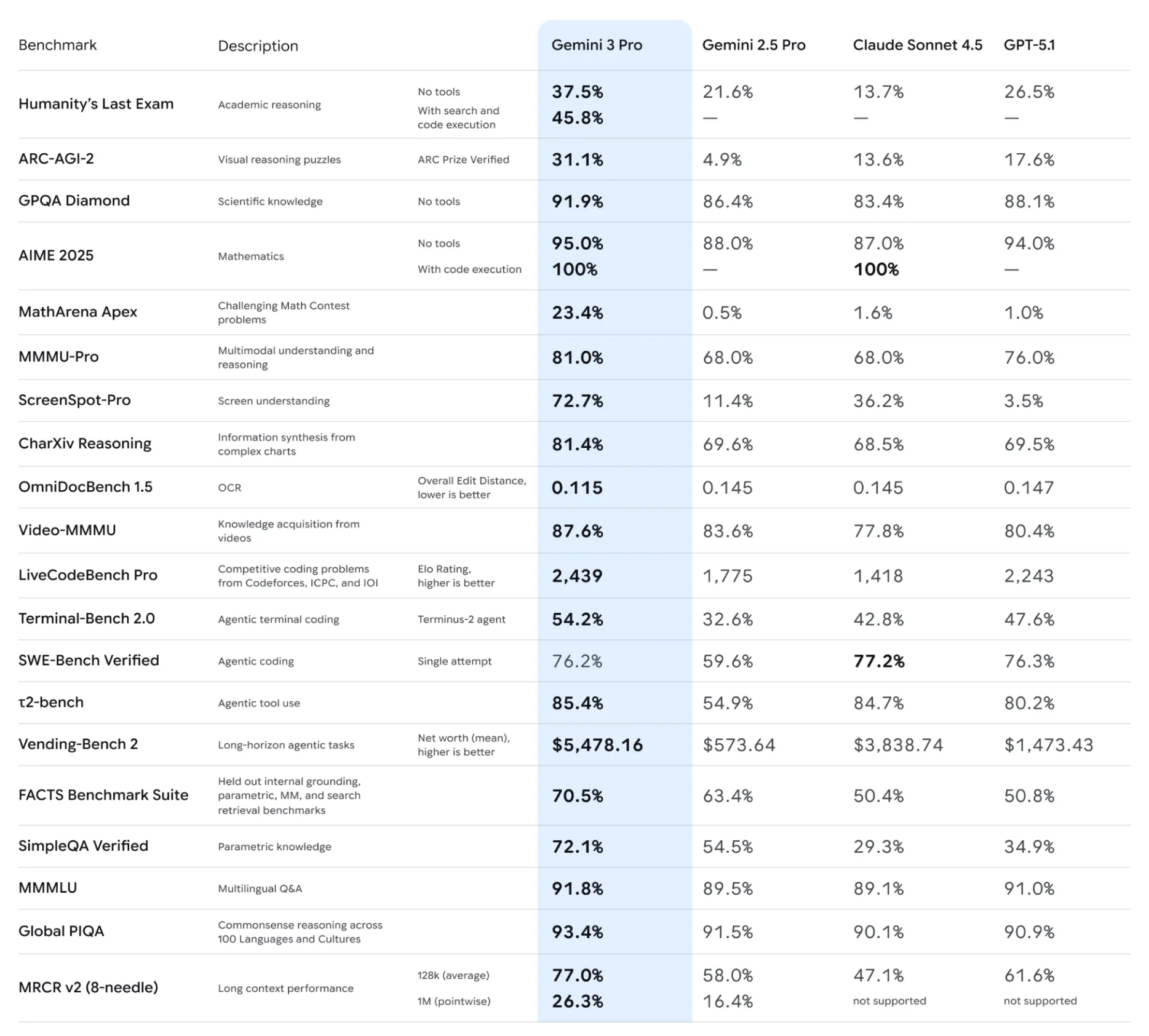

Response Gemini 3:

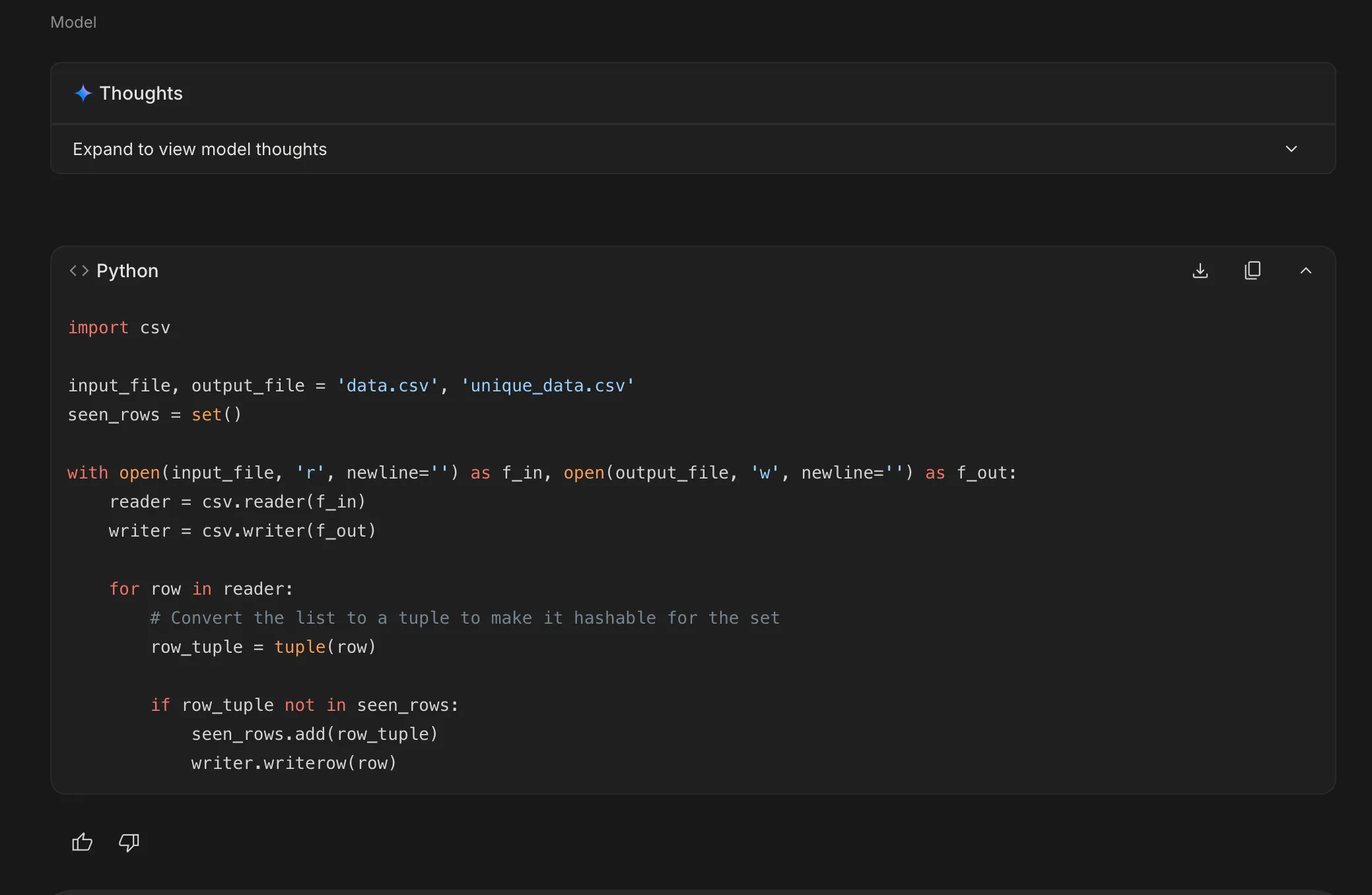

Response ChatGPT 5.1:

Verdict: Gemini 3 gave the better code overall. It satisfied all four criteria, and its thinking trace (when expanded) even included a succinct explanation of how Python’s built-in set provides the deduplication. And all this in just 11 lines of code! A seasoned programmer would appreciate code this concise.

GPT-5.1 just gets the job done. The response is lackluster, consisting of nothing but the code, and even that is written in a very basic style. At 17 lines, it is also noticeably longer than Gemini’s solution and leaves something to be desired.
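For reference, here is a minimal sketch of one way to meet all four criteria. It is not either model’s output. It assumes that a “duplicate” means an exact repeat of an entire row and that the first occurrence should be kept; the function name `dedupe_csv` is hypothetical. Like Gemini’s answer, it relies on a set to remember rows it has already seen.

```python
import csv


def dedupe_csv(src_path: str, dst_path: str) -> int:
    """Write the unique rows of src_path to dst_path, keeping first occurrences.

    Hypothetical helper. Returns the number of rows written (a header counts as a row).
    """
    seen = set()  # rows already written, stored as tuples
    with open(src_path, newline="") as src, open(dst_path, "w", newline="") as dst:
        writer = csv.writer(dst)
        for row in csv.reader(src):
            key = tuple(row)  # lists are unhashable, so convert before using the set
            if key not in seen:
                seen.add(key)
                writer.writerow(row)
    return len(seen)
```

Calling `dedupe_csv("input.csv", "unique.csv")` (placeholder file names) would write the unique rows to `unique.csv` and return how many were kept. Converting each row to a tuple is the key step: lists can’t go into a set, but tuples of strings can, which keeps each duplicate check fast on average.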

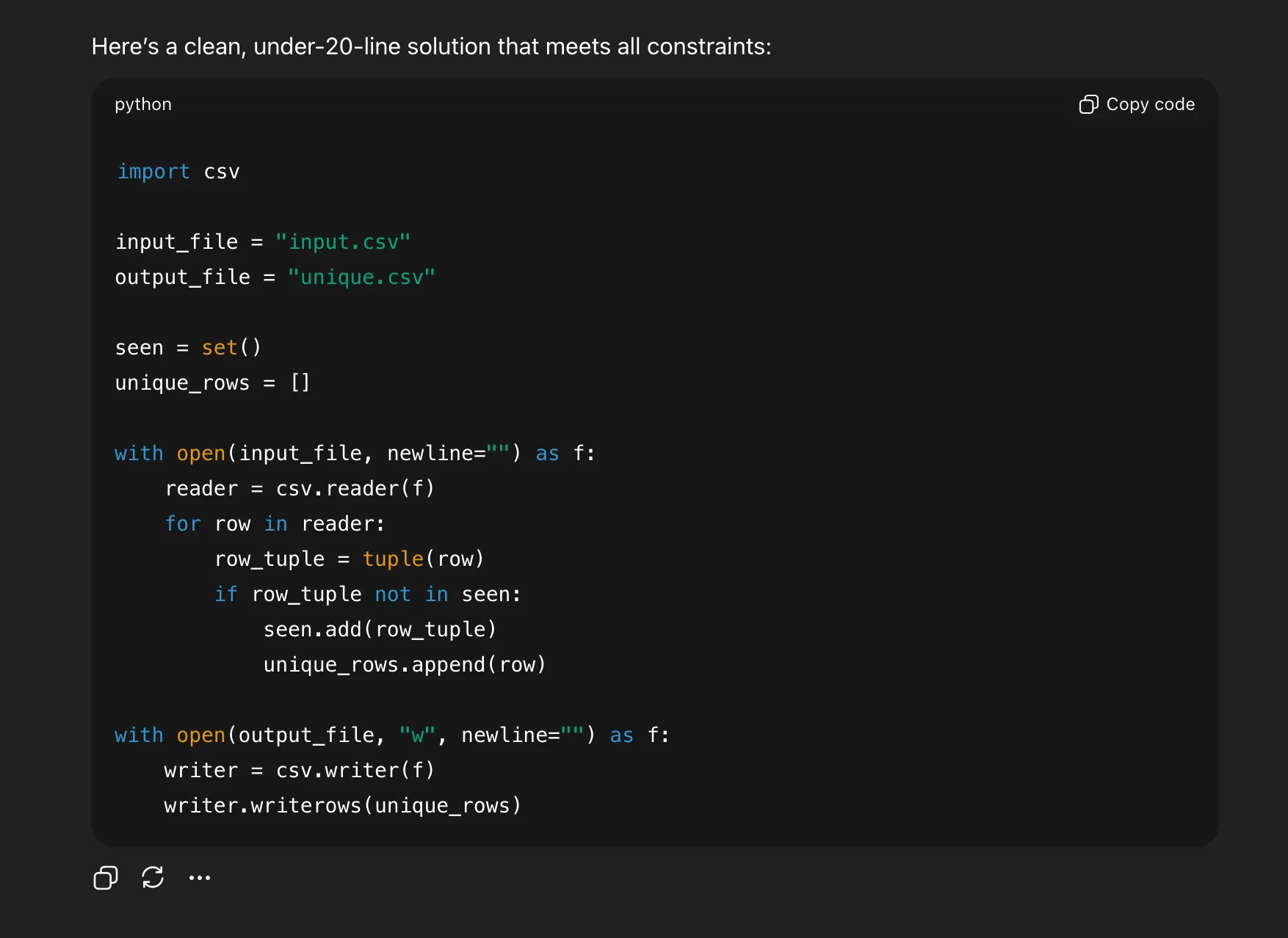

Benchmarks

My tests were limited in scope, so it’s worth turning to benchmark results to see how the models compare across a wider variety of workloads.

Gemini 3 came out as the clear winner on nearly every benchmark! That such a model is available for free, while GPT-5.1 has yet to roll out to non-subscribers, says a lot about the direction Gemini is headed in.

Conclusion

In the end, this isn’t a story with a clear loser. Gemini 3 feels like the stronger all-rounder today: it’s tightly woven into the Google ecosystem, great at everyday tasks, and ahead on most public benchmarks, all while being far more accessible. GPT-5.1 still holds its own with steady reasoning, flexible modes, and a very natural conversational style, especially for users already deep in the OpenAI stack.

So the smart move isn’t to pick a “champion,” but to pick a teammate. Try both, map them to your workflows, and let the right model make you ten times more productive.

Frequently Asked Questions

Q. What is the main difference between Gemini 3 and GPT-5.1?

A. Gemini 3 leans on deep Google integration and stronger benchmark performance, while GPT-5.1 focuses on stable reasoning, natural conversations, and flexible modes like Instant and Thinking.

Q. Which model wrote better code in the code generation test?

A. Gemini 3. Its response was shorter, cleaner, and more optimized, while GPT-5.1 delivered a more basic, more verbose solution.

Q. Is one model simply better than the other?

A. Not necessarily. Both excel in different areas, so test them against your own workflow and pick the one that boosts your productivity the most.