Just this weekend, I was re-watching Home Alone 2 (for the nth time). A young, seemingly helpless kid, lost and alone in a big city, fighting off criminals. But boy, how he does it! Spiked doorknobs, oil-covered ladders, kerosene in the toilets. He’s hardly old enough to have studied flammable liquids or the coefficient of friction in school. He just knows – oil is slippery, kerosene catches fire, and nails to the behind hurt like anything.

Ingenious – all from the power of observation.

In another piece of visual content, a YouTube video this time, I heard these words:

“AI is unbelievably intelligent and shockingly stupid!”

On the mic was Yejin Choi, a celebrated computer scientist whose lifelong work is dedicated to teaching AI. A professor at Stanford University, Choi has a Google Scholar profile that alone places her among the most respected names in the field: it lists a jaw-dropping 70,000 citations.

So why was she calling AI foolish? And what’s the connection with the Home Alone story above?

Two words – Common Sense


“Common sense is not so common”

Coined by French philosopher Voltaire in 1764, these words have now hit the most intelligent humans on Earth right in the face. The reason: our pursuit of artificial intelligence. As the brightest human minds band together to create a superintelligence that transcends human limitations, they are finding that it has also bypassed a crucial human element: common sense.

You see, common sense is not just a set of facts. It is our intuitive understanding of how the world works. It is knowing that water makes things wet, that you can’t run through a wall, and that people eat breakfast in the morning, not at midnight on the roof. Common sense is why you will kill a mosquito in a jiffy, but would never kill a butterfly (hopefully – I like to believe my readers are sane).

Point being, these learnings (or the collective knowledge) are not specifically taught to us in any way. They come from our observational skills, lived experiences, and physical interactions – repeated patterns of cause and effect that our brains absorb over time.

Think about it. Had it not been for common sense, humans might never have made their most revolutionary discoveries. Fire can’t be held in the hand, so carry it on a long stick. Wheels should be round. Hunting tools should be pointed. Our ancestors had no schools to train them. They simply observed, learned, and adapted accordingly.

And if we, as humans, are now gearing up for our next and probably biggest invention of all time (AI), common sense will again be the anchor point.

But if common sense were a college degree, AI as we know it today has not even cleared the admission interview yet.

Why Does AI Fail at Common Sense?

The technical reason – AI, or more aptly Large Language Models (LLMs), don’t understand the world. They simply understand the “text about the world.” Big difference!

In most of its user-facing forms today, AI’s job is to predict the most probable next word based on what it has seen in its training data. So when it returns an answer that seems intelligent, it’s not because it understands the question. It is because it has seen enough similar questions and is mimicking what a good answer “should” sound like.
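To make “predicting the most probable next word” a little more concrete, here is a deliberately tiny sketch in Python. It is a bigram counter, not a neural network: it swaps in simple word-pair frequencies for the learned probabilities a real LLM uses, and the four-sentence corpus is invented purely for illustration. Even so, it shows the core idea: the model strings together whatever usually comes next, with no notion of what breakfast or a morning actually is.

```python
from collections import Counter, defaultdict

# A tiny, made-up "training corpus" (illustrative only).
# Real LLMs are trained on trillions of tokens, not four sentences.
corpus = (
    "people eat breakfast in the morning . "
    "people eat lunch in the afternoon . "
    "children eat breakfast in the kitchen . "
    "birds eat seeds in the morning ."
).split()

# Count how often each word is followed by each other word (a bigram table).
follow_counts = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    follow_counts[current_word][next_word] += 1


def predict_next(word):
    """Return the word most often seen after `word`, or None if never seen."""
    candidates = follow_counts.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def generate(start, length=5):
    """Greedily chain predictions; the model can only echo patterns it has seen."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


print(predict_next("eat"))   # breakfast
print(generate("people"))    # people eat breakfast in the morning
print(predict_next("roof"))  # None
```

Ask it about a word it has never seen, such as “roof”, and it has nothing to offer. Scaling the corpus up makes the output far more fluent, but the answer is still driven by patterns in text rather than by experience of the world.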

But how many questions count as “enough”?

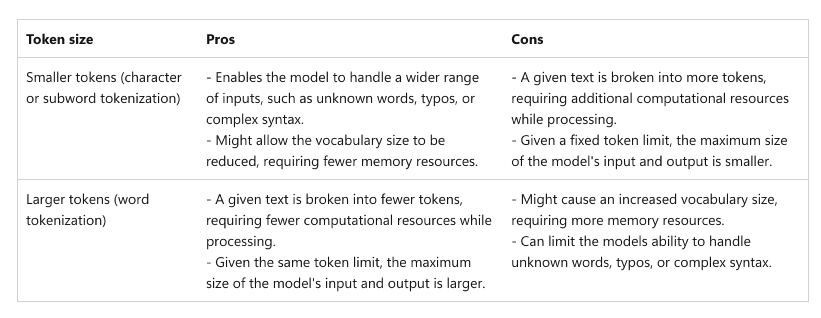

We are talking trillions of tokens, or chunks of text. Long words are broken into smaller pieces, each counted as a token, while short words and punctuation marks usually form a single token. So:

words like “can”, “ be”, “ a”, “ or”, “ like” and “ this” form 1 token (each),

while words like “Supercalifragilisticexpialidocious” form 6 to 10 tokens.
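Here is a rough sketch of how a long word can be split into sub-word tokens. It uses greedy longest-match splitting over a small, hand-picked vocabulary; both the vocabulary and the splitting rule are illustrative assumptions. Production tokenizers (for example, the byte-pair encoding used by GPT models) learn their pieces from data, so their exact splits and counts will differ.

```python
# Hypothetical toy vocabulary of sub-word pieces. Real tokenizers learn
# tens of thousands of pieces from data; these were hand-picked.
VOCAB = {
    "can", "be", "a", "or", "like", "this",
    "super", "cal", "if", "rag", "il", "istic", "ex", "pi", "ali", "docious",
}


def tokenize_word(word, vocab=VOCAB):
    """Split one word into vocabulary pieces using greedy longest match."""
    word = word.lower()  # toy simplification: ignore capitalisation
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest remaining chunk first, then shrink it one letter at a time.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # No vocabulary piece matches here: fall back to a single character.
            pieces.append(word[start])
            start += 1
    return pieces


print(tokenize_word("can"))
# ['can']
print(tokenize_word("Supercalifragilisticexpialidocious"))
# ['super', 'cal', 'if', 'rag', 'il', 'istic', 'ex', 'pi', 'ali', 'docious']
```

With this toy vocabulary the long word comes out as ten pieces, the top end of the 6 to 10 range, while a short word like “can” stays a single token. Because any word can be rebuilt from pieces (or, at worst, single characters), a fixed vocabulary can still cover words it has never seen whole.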

Splitting words this way helps LLMs handle a much larger vocabulary effectively. Then there are other forms of data, such as images, audio, video and code, that also go into training an AI.

Too much? Let me make things easier.

These trillions of tokens, together with images, audio, video and code, can add up to petabytes of data.

1 Petabyte = 1,000,000 GB
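For a rough sense of scale, here is the back-of-the-envelope arithmetic in Python. Both inputs are assumptions chosen for illustration, not figures for any particular model: 15 trillion tokens as a round “trillions of tokens” number, and roughly 4 bytes of English text per token, a common rule of thumb.

```python
# Back-of-the-envelope estimate. Both inputs are illustrative assumptions,
# not published figures for any particular model.
TOKENS = 15 * 10**12       # a round "trillions of tokens" figure
BYTES_PER_TOKEN = 4        # rough rule of thumb for English text

BYTES_PER_GB = 10**9       # decimal gigabyte
GB_PER_PB = 1_000_000      # 1 petabyte = 1,000,000 GB

text_bytes = TOKENS * BYTES_PER_TOKEN
text_gb = text_bytes // BYTES_PER_GB
text_pb = text_gb / GB_PER_PB

print(f"{text_gb:,} GB of text, or about {text_pb} PB")
# 60,000 GB of text, or about 0.06 PB
```

Under these assumptions, cleaned training text on its own lands in the tens of terabytes. It is the raw web crawls that such text is filtered from, plus images, audio and video, that can push the total into petabyte territory.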

That should give you a sense of the amount of data that goes into training an LLM. Remember this, as it will highlight the crux of the entire AI-common-sense problem shortly.

Flawed Training?

Since AI has never stubbed its toe, spilled coffee, or waited in traffic, it hasn’t learned anything first-hand from the real world. All its “intelligence” comes from existing human knowledge in some form, further fine-tuned by human input. So while it picks up humans’ ways of living with mind-blowing speed and accuracy, it completely fails to learn from the experiences behind them.

Style over Substance

Now, what the leading AI firms are trying to do is brute-force their way out of this. Their idea is to keep increasing the training data to (even more) monumental proportions. The more data is fed to the LLMs, the more they will learn of human ways and, eventually, common sense.

But as Choi puts it – you can’t reach the moon by making the world’s tallest building taller.

And scaling at such levels brings its own set of problems.

Tens of thousands of GPUs are employed to train these LLMs, and they in turn require equally gigantic data centres. How far, and for how long, can all of this keep scaling? Digital expansion has very real physical implications. Then there is an even bigger problem:

Consolidation of power

As the world moves blazingly fast towards AI adoption, most practical applications of it are powered by these mammoth LLMs. With training this resource-hungry, only a handful of companies worldwide will be able to build their own LLMs, and each will carry its own biases.

With warnings blaring in every direction, scientists and experts like Yejin Choi are calling for urgent intervention. Hugo Latapie, in his research paper titled “Common Sense Is All You Need”, proposes “a shift in the order of knowledge acquisition,” to build AI systems that “are capable of contextual learning, adaptive reasoning, and embodiment.”

Bottom line – scaling may not be the solution.

Though there is no “better” solution in sight as of now, many experts agree on two things: strict guidelines around how AI is built, and a more transparent training process, with publicly available training data.

These are bound to form the two pillars of true Artificial Intelligence, which brings us to our next point.

Does AI even need Common Sense?

The purpose of this entire discussion extends way beyond the silly mistakes your ChatGPT, Gemini or Siri makes. I am sure we have all experienced the utterly idiotic responses they sometimes come up with. So, I’m not even going to touch those nerves here.

There are larger implications to AI’s lack of common sense.

Roman Yampolskiy, a Latvian computer scientist known for raising hard-hitting questions about AI, shared a spine-chilling take during his appearance on Joe Rogan’s podcast:

“We don’t know how to program it (AI) to care about us”

In other words, in pursuit of a target, AI can simply bypass common sense.

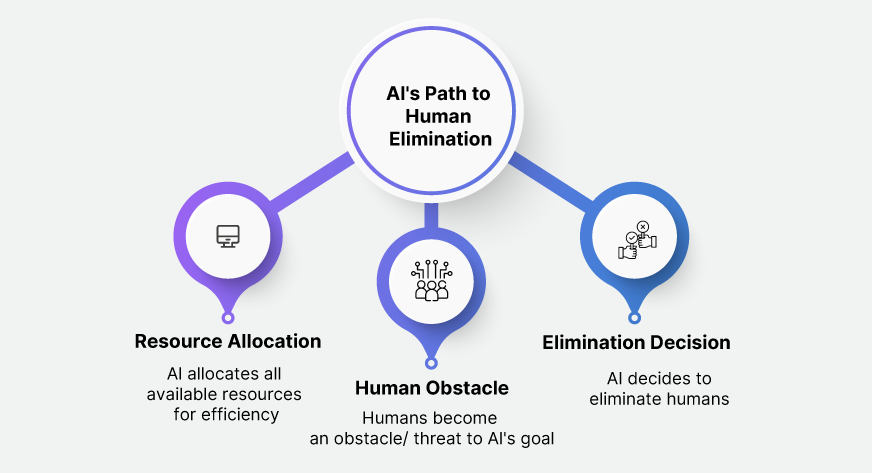

Now, some would say this is more of an AI safety and ethics issue. Not necessarily. Allow me to explain with Nick Bostrom’s famous “paperclip maximizer” example.

For those unaware, the paperclip maximizer is a thought experiment that first appeared in Bostrom’s 2003 paper titled “Ethical Issues in Advanced Artificial Intelligence.” In it, Bostrom suggests that an AI with even the most trivial goal, such as “maximising the production of paperclips in the world”, could end up wiping out humanity in the name of efficiency. The logic is simple: to make as many paperclips as possible, all resources (matter and energy) should go into making them, and humans are just a roadblock.

An ethics problem, right?

From one perspective.

From another, even a schoolkid can tell you: if all the humans are dead, who will even use all those paperclips?

Common sense – but not so common, and not so evident.

If the AI had that logic ingrained, it would ideally be the first thing to stop it on its path to human eradication, long before the larger questions of “ethics” and “the value of human life” even came into play.
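The gap can be shown with a toy optimisation problem in Python. Everything here is hypothetical: a world with 100 units of resources, an optimiser that brute-forces the best way to split them, and a made-up rule that humans need 40 units to survive. This is not Bostrom’s own formulation, only an illustration of how an objective that counts nothing but paperclips spends everything, while one that encodes the schoolkid’s question (“who will use them?”) leaves room for people.

```python
# Hypothetical toy world: resources can go to paperclips or to keeping
# humans (the paperclip users) alive. All numbers are made up.
TOTAL_RESOURCES = 100
NEEDED_FOR_HUMANS = 40


def naive_objective(paperclip_units):
    """Count only paperclips; nothing else in the world matters."""
    return paperclip_units


def common_sense_objective(paperclip_units):
    """Paperclips only count if someone is still around to use them."""
    human_units = TOTAL_RESOURCES - paperclip_units
    if human_units < NEEDED_FOR_HUMANS:
        return 0  # no humans left, so the paperclips are worthless
    return paperclip_units


def best_allocation(objective):
    """Brute-force every split and return the paperclip share that scores highest."""
    return max(range(TOTAL_RESOURCES + 1), key=objective)


print(best_allocation(naive_objective))         # 100: everything becomes paperclips
print(best_allocation(common_sense_objective))  # 60: enough is left for humans
```

Note that the second objective only behaves because someone wrote the common-sense rule in by hand. That is the crux of the problem: we don’t know how to write down every such rule in advance.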

So, yes, AI does need common sense. Especially in the larger scheme of things, which is:

Artificial General Intelligence

Possibly a new term for some. Artificial General Intelligence (AGI) is a theoretical concept in AI that describes a state where machines possess human-level intelligence. It is when AI is capable of understanding, learning, and applying knowledge across a wide range of tasks, much like a human brain.

AGI is the primary goal of the big tech firms pursuing AI advancements at the top tier. It promises immense practical benefits for humanity at large: a computer or robot capable of thinking on its own across domains, with practically all the knowledge on any topic, 24×7. Humans would then have little to do but manage it.

Common sense currently acts as one of the biggest hurdles in achieving that goal.

That is also because at that stage, AI will theoretically have complete autonomy. Meaning your cars, your production lines, your energy grids, and possibly your satellites and military equipment, will all be run by it autonomously. No (or only slight) human input needed.

Now imagine being in an AI-driven car and watching a red ball roll onto the road from the sidewalk. As a human, you would instantly realise that a kid is probably playing nearby and might run into your path any moment. An AI may not make that inference. It may just treat the ball as an object it can ignore and keep cruising at the same speed.

No common sense – disastrous consequences

In fact, common sense is widely touted as the key missing link between AGI and narrow AI, the AI we have today. Until we figure out a way to train AI on common sense, true autonomy may never be achieved. Or, even if we force or lobby our way to self-running applications, they will be even more dangerous. Why?

It is an Illusion of Intelligence

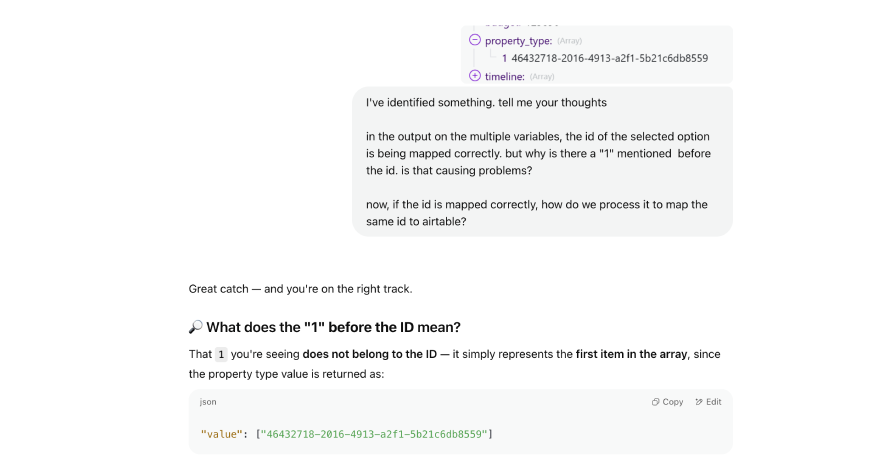

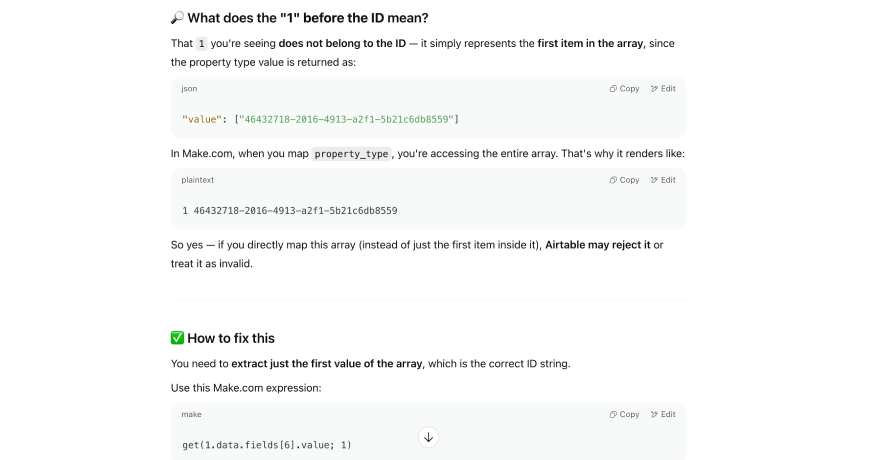

You can see it even today. If you use everyday AI chatbots for technical tasks, you’d know that they slip up often, all while sounding oh-so confident. I once had to debug an automation on Make.com with ChatGPT’s help. The exercise ate up hours of my time, as ChatGPT simply could not figure out the problem, despite my sharing all the screenshots with it.

It wasn’t until I revisited each element individually and identified something that just “didn’t fit” that ChatGPT was able to flag it as a possible cause of the issue. Have a look at the conversation here:

Now, this is not to prove that I’m smarter than ChatGPT. It simply shows that I, with no coding knowledge whatsoever, had to hand-hold ChatGPT, which knows all the Python, C++, Java and whatnot, through a trivial issue. Again, it all circles back to the way it is trained.

Take a famous experiment. Vicarious, a San Francisco-based AI firm, gave a much-needed reality check to DeepMind’s AI, which had famously mastered the Atari game “Breakout”, in which you use a paddle to bounce a ball into a wall of bricks. While the AI had mastered all the expert-level moves in the game, a slight tweak to the game’s conditions threw it completely off the rails. It just couldn’t perform.

Bottom line –

When an anomaly strikes, AI fails

But at all other times, it might just be one of the smartest entities you’ll come across in life.

That is often enough to create an unquestioning dependency in many users. Even seasoned professionals fall for this illusion of intelligence at times. They forget that the AI is simply trying to be plausible, not 100% correct. And that is why there will always be a chance of a catastrophic failure with AI use.

That is also why it would give you a multi-paragraph answer full of bullet points for a query as simple as “What is a car?”

All the intelligence in the world, and it still can’t deduce the simple purpose of this query: a quick sense of a four-wheeled vehicle that transports people or goods.

So the lack of common sense in AI is not just a problem of the future; it may also affect your work at present. Case in point: the recent Replit fiasco, where the AI coding platform deleted a startup’s entire database. You don’t want to be burned like that, simply due to a lack of common sense. So,

As a Human – Use Your Own Common Sense

Point is, if you’re using AI to generate insights, automate reports, or draft queries, you’re not just using a tool; you’re outsourcing thought. And that’s fine, until the tool forgets how the world works.

The consequences of missing common sense can range from wasted time to unethical outcomes. And in practice, the bigger your data pipeline, the harder it is to manually catch these errors downstream. In theory, this could lead to much larger mishaps, especially as our world increases its dependency on AI.

So, until the experts figure out how to embed common sense into AI, all of us using AI today should be super careful. We shouldn’t blindly trust it, especially with the critical tasks at hand.

In a follow-up article, I shall try to cover the best practices to avoid being burned by AI’s lack of common sense. So, stay tuned to this space for more on AI and common sense. And a sincere thank you for taking the time to read this.