How do machines find the most relevant information among millions of records? They use embeddings: vectors that represent the meaning of text, images, or audio. Embeddings let computers compare complex data by measuring how close items are in a mathematical space. But how do we make sure embeddings lead to relevant search results? The answer is optimization. Choosing the right model, curating the data, fine-tuning the embeddings, and picking an appropriate similarity measure all matter. This article introduces some simple and effective techniques for optimizing embeddings to improve retrieval accuracy.

Before we look at how to optimize embeddings, let’s understand what embeddings are and how embedding-based retrieval works.

Table of contents

- What are Embeddings?

- Optimizing Embeddings for Better Retrieval

- Choose the Right Embedding Model

- Pretrained vs Custom Models

- Domain-Specific vs General Models

- Text, Image, and Multimodal Embeddings

- Clean and Prepare Your Data

- Fine-Tune Embeddings for Your Specific Task

- Select Appropriate Similarity Measures

- Manage Embedding Dimensionality

- Use Efficient Indexing and Search Algorithms

- Evaluate and Iterate

- Advanced Optimization Strategies

- Conclusion

- Frequently Asked Questions

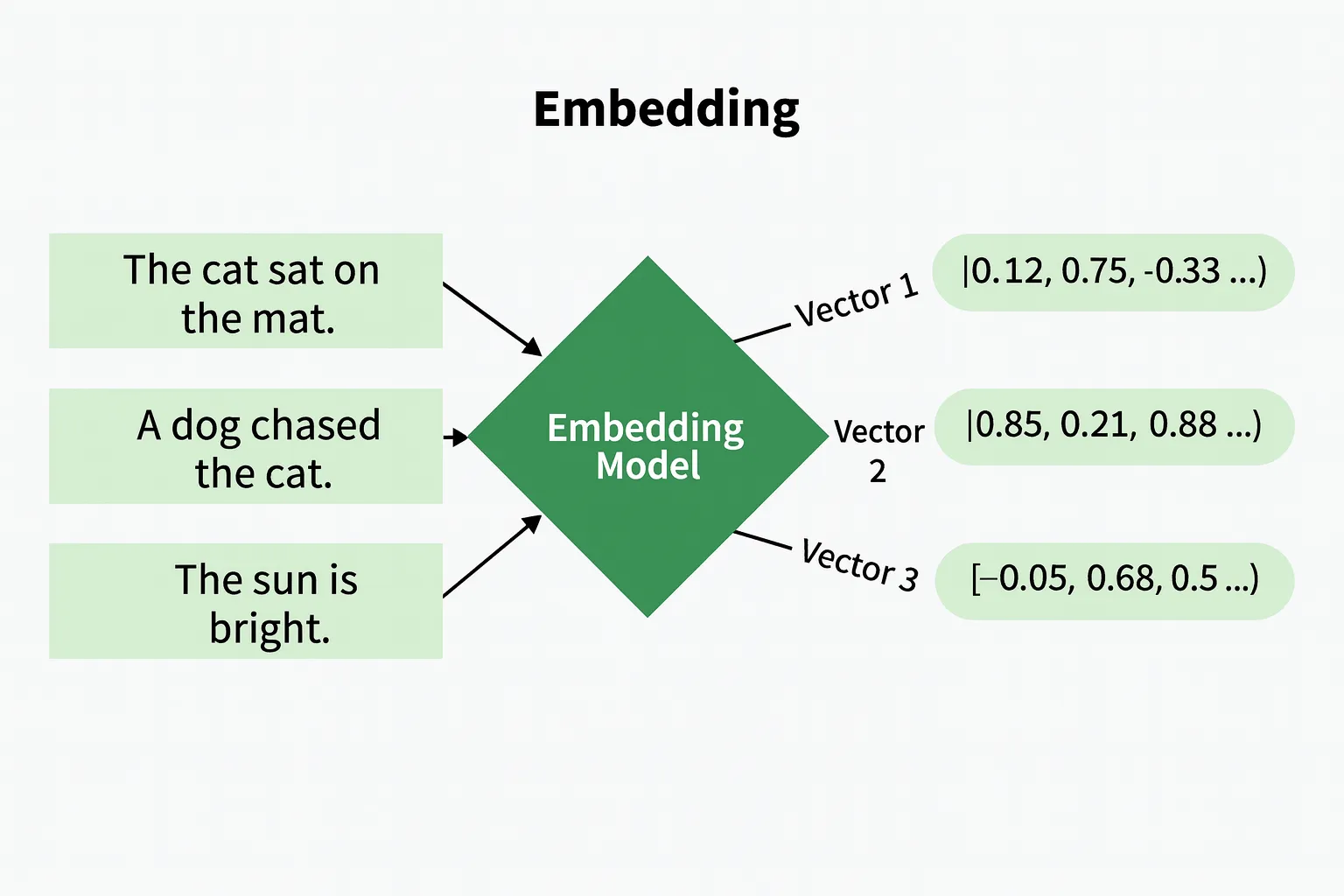

What are Embeddings?

Embeddings are dense, fixed-size vectors that represent information. Instead of working with raw text or pixels, a model maps the data into a vector space. This mapping preserves semantic relationships, placing similar objects close together. New text is embedded into the same space, so it can be compared with what is already there. Vectors are compared with measures like cosine similarity or Euclidean distance, which quantify similarity and reveal meaning beyond keyword matching.


Retrieval Using Embeddings

Embeddings matter in retrieval because both the query and database items are represented as vectors. The system calculates similarity between the query embedding and each candidate item, then ranks candidates by similarity score. Higher scores mean stronger relevance to the query. This is important because embeddings let the system find semantically related results. They can surface relevant results even when words or features don’t perfectly match. This flexible approach retrieves items based on conceptual similarity, not just symbolic matches.
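The ranking step described above can be sketched in a few lines. This is a minimal illustration, assuming tiny hand-written 3-dimensional vectors in place of real model output; the item names and the `rank` helper are hypothetical and only serve to show the flow.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank(query_vec, item_vecs, top_k=3):
    """Return (item_id, score) pairs sorted by descending similarity."""
    scored = [(item_id, cosine(query_vec, vec)) for item_id, vec in item_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# Hypothetical 3-dimensional embeddings; real ones have hundreds of dimensions.
items = {
    "doc_ai": [0.9, 0.1, 0.0],
    "doc_ml": [0.8, 0.3, 0.1],
    "doc_cats": [0.0, 0.2, 0.9],
}
query = [1.0, 0.2, 0.0]

results = rank(query, items, top_k=2)
for item_id, score in results:
    print(f"{score:.3f}  {item_id}")
```

In a real system the vectors would come from an embedding model, and the linear scan over all items would usually be replaced by an index.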

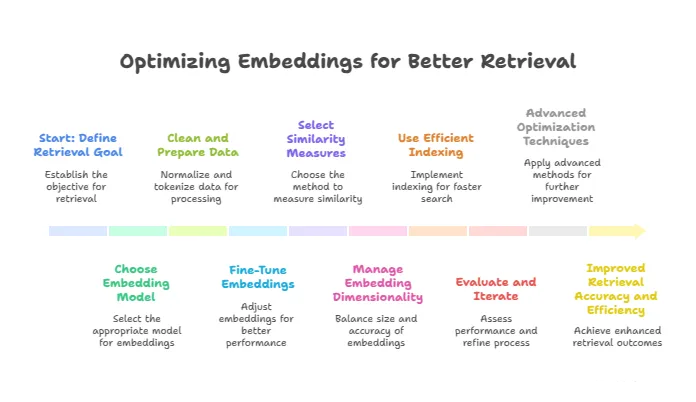

Optimizing Embeddings for Better Retrieval

Optimizing the embeddings is the key to improving how accurately and efficiently the system will find relevant results:

Choose the Right Embedding Model

Selecting an embedding model is an important first step toward accurate retrieval. Embedding models take raw data and convert it into vectors. However, not every embedding model suits every purpose.

Pretrained vs Custom Models

Pre-trained models are trained on large general datasets and usually provide a good baseline embedding. Examples include BERT for text and ResNet for images. Pre-trained models save time and resources, but how well they fit your data varies from case to case. Custom models are ones that you have trained or fine-tuned on your own data. They produce embeddings tailored to your needs, such as specialized language, jargon, or recurring patterns in your use case, and can therefore yield better retrieval quality.

Domain-Specific vs General Models

General models work well on general tasks but often miss context-dependent meaning that matters in specialized fields such as medicine, law, or finance. Domain-specific models, which are trained or fine-tuned on relevant corpora, capture the subtle semantic differences and terminology of those fields, resulting in more accurate embeddings for niche retrieval tasks.

Text, Image, and Multimodal Embeddings

Choose a model built for your type of data. Text embedding models (e.g., Sentence-BERT) capture the semantic meaning of language. Image embeddings are typically produced by CNN-based models and capture the visual features of images. Multimodal models (e.g., CLIP) align text and image embeddings in a common space so that cross-modal retrieval is possible. Selecting an embedding model that matches your data type is therefore necessary for effective retrieval.

Clean and Prepare Your Data

The quality of your input data has a direct effect on the quality of your embeddings and, thus, retrievals.

- Importance of High-Quality Data: The quality of the input data is really important because embedding models learn from the data that they see. Noisy input data and/or inconsistent data will cause the embeddings to reflect the noise and inconsistency, which will likely affect retrieval performance.

- Text Normalization and Preprocessing: Normalization and preprocessing can be as simple as removing HTML tags, lowercasing the text, expanding contractions, and removing special characters. Simple tokenization and lemmatization then standardize the data further, reducing vocabulary size and making the embeddings more consistent.

- Handling Noise and Outliers: Outliers or bad data that are not relevant to the intended retrieval can distort the embedding space. Filtering out erroneous or off-topic data allows the model to focus on relevant patterns. For images, filtering out broken files or wrong labels leads to better embeddings.

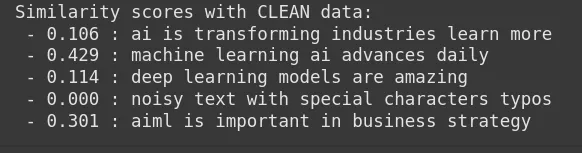

Now, let’s compare retrieval similarity scores from a sample query to documents in two scenarios:

- Using Raw, Noisy Documents: The text contains HTML tags and special characters.

- Using Cleaned and Normalized Documents: The HTML tags and special characters have been removed with a simple function to reduce noise and standardize formatting.

```python
import re

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

# Example documents (some with noise)
raw_docs = [
    "AI is transforming industries. <html> Learn more! </html>",
    "Machine learning & AI advances daily!",
    "Deep Learning models are amazing!!!",
    "Noisy text with #@! special characters & typos!!",
    "AI/ML is important in business strategy.",
]


def clean_text(doc):
    """Clean and normalize a single document."""
    # Remove HTML tags
    doc = re.sub(r"<.*?>", "", doc)
    # Lowercase
    doc = doc.lower()
    # Expand contractions (simple example); this must run before the
    # apostrophe is stripped by the special-character step below
    doc = doc.replace("isn't", "is not")
    # Replace special characters with a space so "ai/ml" becomes "ai ml"
    doc = re.sub(r"[^a-z0-9\s]", " ", doc)
    # Collapse extra whitespace
    doc = re.sub(r"\s+", " ", doc).strip()
    return doc


# Cleaned documents
clean_docs = [clean_text(d) for d in raw_docs]

# Query
query_raw = "AI and machine learning in business"
query_clean = clean_text(query_raw)

# Vectorize raw and cleaned docs (TF-IDF stands in for an embedding model)
vectorizer_raw = TfidfVectorizer().fit(raw_docs + [query_raw])
vectors_raw = vectorizer_raw.transform(raw_docs + [query_raw])

vectorizer_clean = TfidfVectorizer().fit(clean_docs + [query_clean])
vectors_clean = vectorizer_clean.transform(clean_docs + [query_clean])

# Compute similarity between the query (last row) and every document
sim_raw = cosine_similarity(vectors_raw[-1], vectors_raw[:-1]).flatten()
sim_clean = cosine_similarity(vectors_clean[-1], vectors_clean[:-1]).flatten()

print("Similarity scores with RAW data:")
for doc, score in zip(raw_docs, sim_raw):
    print(f" - {score:.3f} : {doc}")

print("\nSimilarity scores with CLEAN data:")
for doc, score in zip(clean_docs, sim_clean):
    print(f" - {score:.3f} : {doc}")
```

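Running the code prints both sets of scores so they can be compared. In the raw version, the leftover html tokens dilute the first document’s vector and lower its similarity to the query; cleaning removes that noise so the vector reflects only meaningful terms. TF-IDF is used here as a lightweight stand-in for an embedding model, but the same principle applies: cleaner input lets the representation focus on meaningful patterns.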

Fine-Tune Embeddings for Your Specific Task

The pre-trained embeddings can be fine-tuned to better suit your retrieval task.

- Supervised Fine-Tuning Approaches: Models are trained on labelled pairs (query, relevant item) or triplets (query, relevant item, irrelevant item) to move relevant items closer together in the embedding space, and move irrelevant items further apart in the embedding space. This performance-oriented fine-tuning approach is useful for improving relevance on your retrieval task.

- Contrastive Learning and Triplet Loss: Contrastive loss pulls similar pairs close together in the embedding space while pushing dissimilar pairs apart. Triplet loss is a closely related formulation that uses an anchor, a positive, and a negative sample to make the embedding space more discriminative for your specific task.

- Hard Negative Mining: Hard negatives are samples that sit very close to the positives in the embedding space but are irrelevant. Training on them pushes the model to learn finer distinctions and increases retrieval accuracy.

- Domain Adaptation and Data Augmentation: Fine-tuning on task- or domain-specific data exposes the model to the vocabulary and contexts of that domain, so the embeddings adjust to reflect them. Data augmentation techniques, such as paraphrasing, translating item descriptions, or generating synthetic samples, add variety to the training data and make the model more robust.

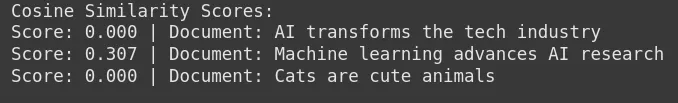
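To make the triplet objective and the role of hard negatives concrete, here is a minimal sketch of the standard triplet loss, max(0, d(anchor, positive) - d(anchor, negative) + margin), computed on hand-written 2-D vectors. The vectors and the margin value of 0.5 are illustrative assumptions, not values from any particular model or training recipe.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_loss(anchor, positive, negative, margin=0.5):
    """max(0, d(anchor, positive) - d(anchor, negative) + margin)."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)

# Hypothetical 2-D embeddings for one query (anchor) and three items.
anchor = [1.0, 0.0]
positive = [0.9, 0.1]        # relevant item, already close to the anchor
easy_negative = [-1.0, 0.0]  # irrelevant and far away: contributes no loss
hard_negative = [0.8, 0.3]   # irrelevant but close: still violates the margin

easy = triplet_loss(anchor, positive, easy_negative)
hard = triplet_loss(anchor, positive, hard_negative)
print(f"easy negative loss: {easy:.3f}")
print(f"hard negative loss: {hard:.3f}")
```

The easy negative already satisfies the margin and produces zero loss, so it gives the model nothing to learn from. The hard negative produces a positive loss, which is why mining such examples tends to be the more useful training signal.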

Select Appropriate Similarity Measures

The measure used to compare embeddings determines how retrieval candidates are ranked.

- Cosine Similarity vs. Euclidean Distance: Cosine similarity measures the cosine of the angle between vectors and, as such, focuses solely on direction, ignoring magnitude. As a result, it is the most frequently used measure for text embeddings, where direction carries the semantic signal. Euclidean distance, on the other hand, measures straight-line distance in vector space and is useful when differences in magnitude are relevant.

- When to Use Learned Similarity Metrics: In some cases a fixed metric is not enough, and it can be worth training a neural network to learn a similarity function suited to your data and task. Learned metrics can capture more complex relationships than a fixed formula and may improve retrieval performance, at the cost of extra training and inference work.

Let’s see a code example of Cosine Similarity vs Euclidean Distance:

import numpy as np

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.metrics.pairwise import cosine_similarity, euclidean_distances

# Sample documents

docs = [

"AI transforms the tech industry",

"Machine learning advances AI research",

"Cats are cute animals",

]

# Query

query = "Artificial intelligence and machine learning"

# Vectorize documents and query using TF-IDF

vectorizer = TfidfVectorizer().fit(docs + [query])

doc_vectors = vectorizer.transform(docs)

query_vector = vectorizer.transform([query])

# Compute Cosine Similarity

cos_sim = cosine_similarity(query_vector, doc_vectors).flatten()

# Compute Euclidean Distance

euc_dist = euclidean_distances(query_vector, doc_vectors).flatten()

# Display results

print("Cosine Similarity Scores:")

for doc, score in zip(docs, cos_sim):

print(f"Score: {score:.3f} | Document: {doc}")

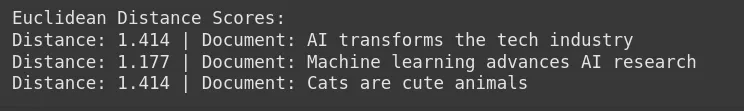

print("\nEuclidean Distance Scores:")

for doc, dist in zip(docs, euc_dist):

print(f"Distance: {dist:.3f} | Document: {doc}")

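In this example, only the second document shares terms with the query, so it is the only one with a non-zero cosine score and it also has the smallest Euclidean distance. Because TfidfVectorizer L2-normalizes its vectors by default, the two measures produce the same ranking here. They diverge only when vectors are not normalized: cosine similarity ignores magnitude, which usually suits semantic comparison, whereas Euclidean distance is useful if the absolute difference in magnitude matters.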
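The effect of magnitude is easier to see on vectors that are not unit length. The sketch below uses two hand-picked 2-D vectors (an assumption made purely for illustration) to show cosine similarity and raw Euclidean distance disagreeing, and then agreeing once every vector is scaled to unit length.

```python
import math

def normalize(v):
    """Scale v to unit length."""
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]

def cosine(a, b):
    """Cosine similarity, computed as the dot product of unit vectors."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))

def euclidean(a, b):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical 2-D vectors chosen to make the contrast visible.
query = [1.0, 1.0]
docs = {
    "same_direction_long": [10.0, 10.0],   # same direction as the query, large magnitude
    "nearby_other_direction": [1.0, 0.0],  # different direction, small magnitude
}

by_cosine = sorted(docs, key=lambda name: cosine(query, docs[name]), reverse=True)
by_euclid_raw = sorted(docs, key=lambda name: euclidean(query, docs[name]))
by_euclid_unit = sorted(
    docs, key=lambda name: euclidean(normalize(query), normalize(docs[name]))
)

print("cosine ranking:               ", by_cosine)
print("euclidean ranking (raw):      ", by_euclid_raw)
print("euclidean ranking (unit norm):", by_euclid_unit)
```

For unit-length vectors the squared Euclidean distance equals 2 - 2 × cosine similarity, so the two rankings always match once embeddings are normalized.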

Manage Embedding Dimensionality

Embedding size affects both retrieval quality and computational cost.

- Balancing Size vs. Performance: Larger embeddings have more representational capacity but need more storage, memory, and processing time. Smaller embeddings are faster and cheaper to work with but can lose some nuance. Depending on your application’s accuracy and speed requirements, you may need to find a middle ground.

- Dimensionality Reduction Techniques and Risks: Methods like PCA or UMAP can shrink embeddings while preserving much of their structure. Too much reduction, however, removes semantic information and can seriously degrade retrieval. Always evaluate the effect on retrieval quality before deploying reduced embeddings.
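One way to "evaluate the effect" is to check how many of each item's original nearest neighbors survive the reduction. The sketch below implements PCA with NumPy's SVD and measures that overlap; the random Gaussian vectors are a stand-in for real embeddings, and the helper names and sizes are assumptions chosen for illustration.

```python
import numpy as np

def pca_reduce(X, n_components):
    """Project rows of X onto the top principal components (PCA via SVD)."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by explained variance.
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:n_components].T

def top_k_neighbors(X, query_idx, k):
    """Indices of the k rows nearest to X[query_idx] by Euclidean distance."""
    dists = np.linalg.norm(X - X[query_idx], axis=1)
    dists[query_idx] = np.inf  # exclude the query itself
    return set(np.argsort(dists)[:k].tolist())

def neighbor_overlap(X_full, X_small, k=10, n_queries=20):
    """Average fraction of the original top-k neighbors that survive reduction."""
    overlaps = [
        len(top_k_neighbors(X_full, i, k) & top_k_neighbors(X_small, i, k)) / k
        for i in range(n_queries)
    ]
    return sum(overlaps) / len(overlaps)

rng = np.random.default_rng(0)
# Synthetic stand-in for embeddings: 200 vectors with 64 dimensions.
X = rng.normal(size=(200, 64))

for dims in (32, 8, 2):
    score = neighbor_overlap(X, pca_reduce(X, dims))
    print(f"{dims:>2} dims: top-10 neighbor overlap = {score:.2f}")
```

Real embeddings usually have more low-dimensional structure than random noise, so they may tolerate reduction better than this synthetic data; the point of the sketch is the measurement, not the specific numbers.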

Use Efficient Indexing and Search Algorithms

If you need to scale your retrieval to millions or billions of items, efficient search algorithms are required.

- ANN (Approximate Nearest Neighbor) Methods: Exact nearest neighbor search is costly at scale. ANN algorithms provide fast approximate search with only a small loss of accuracy, which makes large datasets practical to work with.

- FAISS, Annoy, HNSW Overview:

  - FAISS (Facebook AI Similarity Search) is a library offering several index types for high-throughput similarity search, with optional GPU acceleration.

  - Annoy (Approximate Nearest Neighbors Oh Yeah) is lightweight, builds static tree-based indexes, and is optimized for read-heavy systems.

  - HNSW (Hierarchical Navigable Small World) traverses layered small-world graphs and offers a strong balance of recall and search time.

- Trade-offs Between Speed and Accuracy: Adjust parameters such as search depth or number of probes to balance retrieval speed against accuracy, based on the requirements of your application.
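The "number of probes" trade-off can be illustrated with a toy inverted-file (IVF) index written in NumPy. This is a teaching sketch and not the API of FAISS or any other library: it clusters the vectors with a few k-means steps, then searches only the `nprobe` clusters closest to the query. All function names, sizes, and the random data are assumptions.

```python
import numpy as np

def sq_dists(A, b):
    """Squared Euclidean distance from each row of A to vector b."""
    return ((A - b) ** 2).sum(axis=1)

def build_ivf(X, n_clusters, n_iters=10, seed=0):
    """Cluster X with a few k-means steps; return (centroids, inverted lists)."""
    rng = np.random.default_rng(seed)
    centroids = X[rng.choice(len(X), size=n_clusters, replace=False)].copy()
    for _ in range(n_iters):
        assign = np.array([np.argmin(sq_dists(centroids, x)) for x in X])
        for c in range(n_clusters):
            members = X[assign == c]
            if len(members):
                centroids[c] = members.mean(axis=0)
    # Final assignment against the final centroids: each point lands in one list.
    assign = np.array([np.argmin(sq_dists(centroids, x)) for x in X])
    lists = [np.flatnonzero(assign == c) for c in range(n_clusters)]
    return centroids, lists

def ivf_search(X, centroids, lists, query, k, nprobe):
    """Search only the nprobe clusters whose centroids are closest to the query."""
    probed = np.argsort(sq_dists(centroids, query))[:nprobe]
    candidates = np.concatenate([lists[c] for c in probed])
    order = np.argsort(sq_dists(X[candidates], query))[:k]
    return candidates[order]

def exact_search(X, query, k):
    """Brute-force baseline: compare the query with every vector."""
    return np.argsort(sq_dists(X, query))[:k]

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 16))       # synthetic stand-in for embeddings
queries = rng.normal(size=(20, 16))
centroids, lists = build_ivf(X, n_clusters=16)

for nprobe in (1, 4, 16):
    recalls = []
    for q in queries:
        truth = set(exact_search(X, q, 10).tolist())
        found = set(ivf_search(X, centroids, lists, q, 10, nprobe).tolist())
        recalls.append(len(truth & found) / 10)
    print(f"nprobe={nprobe:>2}: recall@10 = {sum(recalls) / len(recalls):.2f}")
```

Probing fewer clusters means fewer distance computations but a higher chance of missing true neighbors; probing all clusters reproduces the exact result at brute-force cost.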

Evaluate and Iterate

Evaluation and iteration are important for continuously optimizing retrieval.

- Benchmarking with Standard Metrics: Use standard metrics such as Precision@k, Recall@k, and Mean Reciprocal Rank (MRR) to evaluate the retrieval performance quantitatively on validation datasets.

- Error Analysis: Examine the failure cases to identify patterns such as miscategorization, recurring errors, or ambiguous queries. These patterns guide data clean-up, model tuning, and improvements to training.

- Continuous Improvement Strategies: Create a plan for continuous improvement that incorporates user feedback and data updates, gathers new training data, retrains models on it, and tests different architectures and hyperparameter settings.
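The metrics named above are simple to compute once you have ranked results and a set of known-relevant items per query. A minimal sketch follows; the document IDs and relevance labels are invented for illustration.

```python
def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k results that are relevant."""
    return sum(1 for item in ranked[:k] if item in relevant) / k

def recall_at_k(ranked, relevant, k):
    """Fraction of all relevant items that appear in the top-k results."""
    return sum(1 for item in ranked[:k] if item in relevant) / len(relevant)

def reciprocal_rank(ranked, relevant):
    """1 / position of the first relevant result, or 0 if none is found."""
    for position, item in enumerate(ranked, start=1):
        if item in relevant:
            return 1.0 / position
    return 0.0

def mean_reciprocal_rank(runs):
    """Average reciprocal rank over (ranked, relevant) pairs, one per query."""
    return sum(reciprocal_rank(ranked, relevant) for ranked, relevant in runs) / len(runs)

# Hypothetical evaluation data: ranked results and relevant IDs for two queries.
runs = [
    (["d3", "d1", "d7", "d2"], {"d1", "d2"}),
    (["d5", "d4", "d9", "d8"], {"d5"}),
]

ranked, relevant = runs[0]
print("Precision@2:", precision_at_k(ranked, relevant, 2))
print("Recall@2:   ", recall_at_k(ranked, relevant, 2))
print("MRR:        ", mean_reciprocal_rank(runs))
```

Tracking these numbers on a fixed validation set before and after each change makes it possible to tell whether an optimization actually helped.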

Advanced Optimization Strategies

There are several advanced strategies to further increase retrieval accuracy.

- Contextualized Embeddings: Instead of embedding single words, consider sentence or paragraph embeddings, which capture richer meaning and context. Models such as Sentence-BERT are designed for this.

- Ensemble and Hybrid Embeddings: Combine embeddings from multiple models or even multiple data types, for example by mixing text and image embeddings or merging the outputs of several models. The combined representation carries more information for retrieval.

- Cross-Encoder Re-ranking: Use embedding retrieval to fetch initial candidates, then use a cross-encoder to re-rank them against the query. The cross-encoder encodes the query and each candidate item together as a single joint input, so the model runs once per candidate. This gives a much more precise ranking, but at the cost of longer retrieval time.

- Knowledge Distillation: Large models perform well but are slow at retrieval. Once you have a large model, distill its knowledge into a smaller one. The smaller model can deliver similar retrieval results much faster, with only a small loss of accuracy, which makes it well suited to production.
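The two-stage flow behind cross-encoder re-ranking can be sketched without any model at all. In the code below, stage 1 ranks every item by cosine similarity of precomputed vectors, and stage 2 re-scores only the shortlist with a function that sees the query and the candidate together. The `placeholder_joint_score` function is a token-overlap stand-in, not a real cross-encoder, and the vectors and texts are made up.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def retrieve(query_vec, index, top_n):
    """Stage 1: cheap vector comparison against every item in the index."""
    ranked = sorted(index, key=lambda item: cosine(query_vec, item["vec"]), reverse=True)
    return ranked[:top_n]

def rerank(query_text, candidates, joint_score, top_k):
    """Stage 2: score each (query, candidate) pair jointly, shortlist only."""
    ranked = sorted(
        candidates, key=lambda item: joint_score(query_text, item["text"]), reverse=True
    )
    return ranked[:top_k]

def placeholder_joint_score(query_text, doc_text):
    """Stand-in for a cross-encoder: Jaccard overlap of lowercase tokens.

    A real cross-encoder would be a trained model that reads both texts together.
    """
    q, d = set(query_text.lower().split()), set(doc_text.lower().split())
    return len(q & d) / len(q | d)

# Hypothetical index with precomputed 2-D embeddings.
index = [
    {"id": "a", "text": "machine learning for search ranking", "vec": [0.9, 0.2]},
    {"id": "b", "text": "search ranking with machine learning models", "vec": [0.95, 0.1]},
    {"id": "c", "text": "cute cats and dogs", "vec": [0.1, 0.9]},
]
query_text = "machine learning for search ranking"
query_vec = [1.0, 0.1]

shortlist = retrieve(query_vec, index, top_n=2)
final = rerank(query_text, shortlist, placeholder_joint_score, top_k=2)
print("stage 1:", [item["id"] for item in shortlist])
print("stage 2:", [item["id"] for item in final])
```

Because the expensive scorer only runs on the shortlist, its cost grows with `top_n` rather than with the size of the whole collection.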

Conclusion

Optimizing embeddings improves both retrieval accuracy and speed. First, select the most suitable embedding model, and then clean your data. Next, fine-tune the embeddings for your task. Then, choose a similarity measure and the best search index for your scale. You can also apply advanced methods to improve retrieval further, including contextual embeddings, ensemble approaches, re-ranking, and distillation.

Remember, optimization never stops. Keep testing, learning, and improving your system. This ensures your retrieval stays relevant and effective over time.

Frequently Asked Questions

Q1. What are embeddings, and why do they matter for retrieval?

A. Embeddings are numerical vectors that represent data (e.g., text, images, or audio) in a way that retains semantics. They let machines measure the distance between items and quickly find the information most relevant to a query, which improves retrieval.

Q2. Should I use pretrained embeddings or train my own?

A. Pretrained embeddings work for most general tasks, and they are a time saver. However, training or fine-tuning embeddings on your own data often improves accuracy, especially if the subject matter is a niche domain.

Q3. What does fine-tuning an embedding model mean?

A. Fine-tuning means adjusting a pretrained embedding model using a set of task-specific, labeled data. This teaches the model the nuances of that domain and improves retrieval relevance.