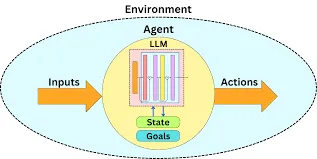

Agentic AI systems act as autonomous digital workers that perform complex tasks with minimal supervision. Their adoption is growing rapidly: one estimate suggests that by 2025, 35% of firms will implement AI agents. However, autonomy raises concerns in high-stakes domains, where even subtle errors can have serious consequences. This is why human feedback is widely seen as key to keeping agentic AI safe, accountable, and trustworthy.

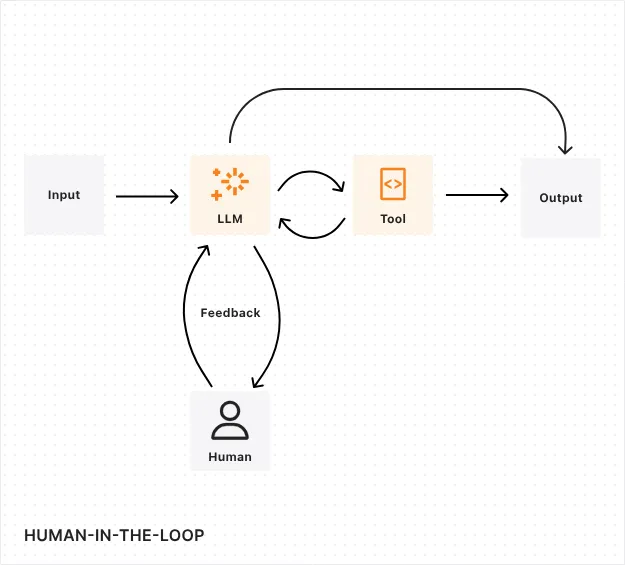

Human-in-the-loop (HITL) validation is a collaborative design in which humans validate or influence an AI’s outputs. Human checkpoints catch errors early and keep the system aligned with human values, which in turn improves compliance and trust in agentic AI. In effect, HITL acts as a safety net for complex tasks. In this article, we’ll compare workflows with and without HITL to illustrate these trade-offs.


Human-in-the-Loop: Concept and Benefits

Human-in-the-Loop (HITL) is a design pattern where an AI workflow explicitly includes human judgment at key points. The AI may generate a provisional output and pause to let the human review, approve, or edit this output. In such a workflow, the human review step is interposed between the AI component and the final output.
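The pattern can be sketched in a few lines of plain Python. This is a minimal illustration, not LangGraph code: the names `Verdict`, `Review`, and `hitl_checkpoint` are hypothetical, and the reviewer is passed in as a function so the same checkpoint could be driven by a console prompt, a web form, or a test.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Verdict(Enum):
    APPROVE = "approve"
    EDIT = "edit"
    REJECT = "reject"


@dataclass
class Review:
    verdict: Verdict
    edited_text: Optional[str] = None  # only used when the verdict is EDIT


def hitl_checkpoint(draft: str, reviewer: Callable[[str], Review]) -> Optional[str]:
    """Pause on a provisional AI output and let a human decide what happens to it.

    Returns the text to release, or None if the human rejected it.
    """
    review = reviewer(draft)
    if review.verdict is Verdict.APPROVE:
        return draft
    if review.verdict is Verdict.EDIT:
        if review.edited_text is None:
            raise ValueError("an EDIT verdict requires edited_text")
        return review.edited_text
    return None  # REJECT: nothing reaches the final output


def scripted_reviewer(text: str) -> Review:
    # Stands in for a person who fixes one phrase; a real reviewer would be prompted.
    return Review(Verdict.EDIT, text.replace("AI agents", "AI systems"))


if __name__ == "__main__":
    print(hitl_checkpoint("Agentic AI agents act with minimal supervision.", scripted_reviewer))
```

The key design point is that the AI’s output is only provisional: whatever the reviewer returns, not the raw draft, is what reaches the final output.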

Benefits of Human Validation

- Error reduction and accuracy: A human reviewer catches mistakes in the AI’s outputs and refines them before they are finalized.

- Trust and accountability: Human validation makes the system’s decisions easier to understand and ensures that a person is accountable for them.

- Compliance and safety: Humans interpret laws and ethical norms, helping ensure that AI actions conform to regulations and safety requirements.
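One concrete way to make human validation accountable is to record every decision together with who made it and when. The sketch below assumes a simple in-memory log; `ApprovalRecord` and `AuditLog` are hypothetical names, and a production system would persist these records rather than keep them in a list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class ApprovalRecord:
    reviewer: str
    action: str        # e.g. "publish_article"
    approved: bool
    comment: str
    timestamp: datetime


@dataclass
class AuditLog:
    records: List[ApprovalRecord] = field(default_factory=list)

    def record(self, reviewer: str, action: str, approved: bool, comment: str = "") -> ApprovalRecord:
        # Records are frozen, and this class only ever appends new ones.
        entry = ApprovalRecord(reviewer, action, approved, comment, datetime.now(timezone.utc))
        self.records.append(entry)
        return entry

    def decisions_by(self, reviewer: str) -> List[ApprovalRecord]:
        return [r for r in self.records if r.reviewer == reviewer]


log = AuditLog()
log.record("alice", "publish_article", approved=False, comment="Too technical")
log.record("alice", "publish_article", approved=True)
print(len(log.decisions_by("alice")))
```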

When NOT to Use Human-in-the-Loop

- Routine or high-volume tasks: Humans become a bottleneck when speed matters. In such conditions, full automation may be more effective.

- Time-critical systems: Real-time responses cannot wait for human input. In cases such as rapid content filtering or live alerts, HITL can hold the system back.
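These criteria can be turned into an explicit routing rule that decides, per action, whether to pause for a human. The following is a hypothetical policy rather than a standard: the `Action` fields, the "low"/"medium"/"high" risk labels, and the rule ordering are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    risk: str            # "low", "medium", or "high" (assumed labels)
    time_critical: bool  # must complete faster than a human can respond
    routine: bool        # high-volume, repetitive task


def requires_human_review(action: Action) -> bool:
    """Decide whether an agent action should pause at a human checkpoint."""
    if action.time_critical:
        # Real-time paths cannot wait for a person; rely on automated safeguards.
        return False
    if action.risk == "high":
        # High-stakes actions always get a human checkpoint.
        return True
    # Routine, low-risk work runs fully automated; everything else is reviewed.
    return not (action.routine and action.risk == "low")


print(requires_human_review(Action("filter_spam", "low", time_critical=True, routine=True)))
print(requires_human_review(Action("publish_article", "high", time_critical=False, routine=False)))
```

Note the ordering: a time-critical action skips review even when it is high-risk, mirroring the point that real-time paths cannot wait. A stricter policy might instead block such actions or review them after the fact.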

What Makes the Difference: Comparing Two Scenarios

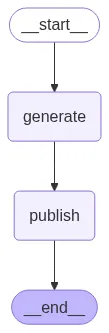

Without Human-in-the-Loop

In the fully automated scenario, the agentic workflow proceeds on its own. As soon as input is provided, the agent generates content and takes action. For example, an AI assistant could submit a user’s time-off request without asking for confirmation. This approach offers the greatest speed and scalability. The downside, of course, is that no human checks anything. An AI agent’s errors also differ from a person’s: an agent might misinterpret instructions or perform an undesired action, and with nobody reviewing its output, such a mistake can go straight through to a harmful outcome.

With Human-in-the-Loop

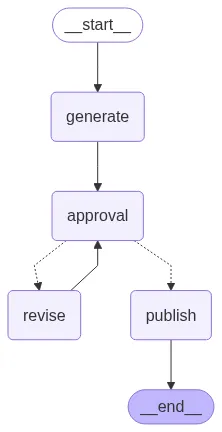

In the HITL (human-in-the-loop) scenario, we insert a human review step. After generating a draft, the agent stops and asks a person to approve it or request changes. If the draft is approved, the agent publishes the content. If not, the agent revises the draft based on the feedback and returns it for another review. This scenario offers greater accuracy and trust, since humans can catch mistakes before anything is finalized. For example, adding a confirmation step reduces accidental actions and confirms that the agent did not misunderstand the input. The downside, of course, is that it requires more time and human effort.
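The draft, approve, revise, repeat cycle can be sketched independently of any framework. In this sketch the `generate`, `revise`, and `review` callables are stand-ins (assumptions) for the LLM calls and the human reviewer, and `max_rounds` is a hypothetical safeguard so a reviewer who never approves cannot keep the loop running forever.

```python
from typing import Callable, Optional, Tuple

# review(draft) returns (approved, feedback); feedback is ignored when approved.
Reviewer = Callable[[str], Tuple[bool, str]]


def hitl_loop(
    generate: Callable[[], str],
    revise: Callable[[str, str], str],
    review: Reviewer,
    max_rounds: int = 5,
) -> Optional[str]:
    """Return the approved draft, or None if no draft was approved within max_rounds."""
    draft = generate()
    for _ in range(max_rounds):
        approved, feedback = review(draft)
        if approved:
            return draft  # only human-approved content is published
        draft = revise(draft, feedback)
    return None  # escalate instead of publishing unreviewed content


def fake_generate() -> str:
    return "Agentic AI acts autonomously."


def fake_revise(draft: str, feedback: str) -> str:
    return f"{draft} {feedback}"


def fake_review(draft: str) -> Tuple[bool, str]:
    # Approves once the draft addresses its audience; otherwise asks for that change.
    return ("for everyone" in draft, "Written for everyone.")


print(hitl_loop(fake_generate, fake_revise, fake_review))
```

Returning `None` rather than the last draft is a deliberate choice: if the human never signs off, nothing is published.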

Example Implementation in LangGraph

Below is an example using LangGraph and GPT-4o-mini. We define two workflows: one fully automated and one with a human approval step.

Scenario 1: Without Human-in-the-Loop

In the first scenario, we’ll create an agent with a simple workflow: it generates an article and publishes it immediately. The topic (Agentic AI) is fixed in the prompt, which the agent sends to gpt-4o-mini to generate the content.

```python
from langgraph.graph import StateGraph, END
from typing import TypedDict
from openai import OpenAI
from dotenv import load_dotenv
import os

load_dotenv()
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")

# --- OpenAI client ---
client = OpenAI(api_key=OPENAI_API_KEY)  # key is read from .env / the environment

# --- State Definition ---
class ArticleState(TypedDict):
    draft: str

# --- Nodes ---
def generate_article(state: ArticleState):
    prompt = "Write a professional yet engaging 150-word article about Agentic AI."
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}]
    )
    state["draft"] = response.choices[0].message.content
    print(f"\n[Agent] Generated Article:\n{state['draft']}\n")
    return state

def publish_article(state: ArticleState):
    print(f"[System] Publishing Article:\n{state['draft']}\n")
    return state

# --- Autonomous Workflow ---
def autonomous_workflow():
    print("\n=== Autonomous Publishing ===")
    builder = StateGraph(ArticleState)
    builder.add_node("generate", generate_article)
    builder.add_node("publish", publish_article)
    builder.set_entry_point("generate")
    builder.add_edge("generate", "publish")
    builder.add_edge("publish", END)
    graph = builder.compile()

    # Save diagram (rendering may need network access, so don't let it stop the run)
    try:
        with open("autonomous_workflow.png", "wb") as f:
            f.write(graph.get_graph().draw_mermaid_png())
    except Exception as e:
        print(f"Could not save diagram: {e}")

    graph.invoke({"draft": ""})

if __name__ == "__main__":
    autonomous_workflow()
```

Code Implementation: This code sets up a workflow with two nodes, generate_article and publish_article, linked sequentially. When run, the agent prints its draft and then publishes it immediately, with no review step in between.

Agent Workflow Diagram

Agent Response

```
Agentic AI refers to advanced artificial intelligence systems that possess the ability to make autonomous decisions based on their environment and objectives. Unlike traditional AI, which relies heavily on predefined algorithms and human input, agentic AI can analyze complex data, learn from experiences, and adapt its behavior accordingly. This technology harnesses machine learning, natural language processing, and cognitive computing to perform tasks ranging from managing supply chains to personalizing user experiences.

The potential applications of agentic AI are vast, transforming industries such as healthcare, finance, and customer service. For instance, in healthcare, agentic AI can analyze patient data to provide tailored treatment recommendations, leading to improved outcomes. As businesses increasingly adopt these autonomous systems, ethical considerations surrounding transparency, accountability, and job displacement become paramount. Embracing agentic AI offers opportunities to enhance efficiency and innovation, but it also calls for careful contemplation of its societal impact. The future of AI is not just about automation; it's about intelligent collaboration.
```
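To make the control flow of this first workflow concrete, here is a toy model of what the compiled graph does: start at the entry node, merge each node’s returned dict into the shared state, and follow edges until the end. This is a simplified mental model in plain Python, not LangGraph’s implementation (which also handles conditional edges, reducers, checkpointing, and recursion limits); `run_linear_graph` and the stub nodes are hypothetical.

```python
from typing import Callable, Dict

END = "__end__"  # sentinel marking the end of the graph in this toy model
Node = Callable[[dict], dict]


def run_linear_graph(nodes: Dict[str, Node], edges: Dict[str, str],
                     entry: str, state: dict) -> dict:
    """Run nodes along fixed edges, merging each node's returned update into the state."""
    current = entry
    while current != END:
        update = nodes[current](dict(state))  # each node works on a copy of the state
        state = {**state, **update}           # returned keys overwrite existing ones
        current = edges[current]              # follow the single outgoing edge
    return state


published = []


def generate(state: dict) -> dict:
    # Stands in for the gpt-4o-mini call in generate_article.
    return {"draft": "Stub article about Agentic AI."}


def publish(state: dict) -> dict:
    # Nothing inspects the draft before it is "published".
    published.append(state["draft"])
    return {}


final = run_linear_graph(
    nodes={"generate": generate, "publish": publish},
    edges={"generate": "publish", "publish": END},
    entry="generate",
    state={"draft": ""},
)
print(final["draft"])
print(published)
```

Replacing the fixed `publish` edge with a function that picks the next node from the current state is, conceptually, what a conditional edge adds.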

Scenario 2: With Human-in-the-Loop

In this scenario, we create two tools: revise_article_tool and publish_article_tool. After the agent generates a draft, a human chooses the next action. revise_article_tool rewrites the article according to the human’s feedback, after which the workflow returns to the review step. Once the human is satisfied with the draft, typing publish runs publish_article_tool, which prints the final article.
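The approval node in the listing below mixes console I/O with decision logic. Pulling the decision logic into a pure function makes it testable without a terminal. A minimal sketch, where `Decision` and `parse_decision` are hypothetical helpers that mirror the listing’s behavior: "publish" selects the publish tool, while "revise", "feedback", and any unrecognized input fall back to revision.

```python
from typing import NamedTuple


class Decision(NamedTuple):
    selected_tool: str  # "publish" or "revise"
    approved: bool
    recognized: bool    # False when the input matched no menu option


REVISE_ALIASES = {"revise", "feedback"}


def parse_decision(raw: str) -> Decision:
    """Map the reviewer's typed command onto the tool the graph should run."""
    choice = raw.strip().lower()
    if choice == "publish":
        return Decision("publish", approved=True, recognized=True)
    if choice in REVISE_ALIASES:
        return Decision("revise", approved=False, recognized=True)
    # Unknown input defaults to revision, as the approval node does.
    return Decision("revise", approved=False, recognized=False)


print(parse_decision("  Publish "))
print(parse_decision("ship it"))
```

The node would then only handle I/O: read the input, call `parse_decision`, warn if `recognized` is False, and ask for feedback when the tool is "revise".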

```python
from langgraph.graph import StateGraph, END
from typing import TypedDict, Literal
from openai import OpenAI
from dotenv import load_dotenv
import os

load_dotenv()

# --- OpenAI client ---
OPENAI_API_KEY = os.getenv("OPENAI_API_KEY")
client = OpenAI(api_key=OPENAI_API_KEY)

# --- State Definition ---
class ArticleState(TypedDict):
    draft: str
    approved: bool
    feedback: str
    selected_tool: str

# --- Tools ---
def revise_article_tool(state: ArticleState):
    """Tool to revise article based on feedback"""
    prompt = f"Revise the following article based on this feedback: '{state['feedback']}'\n\nArticle:\n{state['draft']}"
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}]
    )
    revised_content = response.choices[0].message.content
    print(f"\n[Tool: Revise] Revised Article:\n{revised_content}\n")
    return revised_content

def publish_article_tool(state: ArticleState):
    """Tool to publish the article"""
    print(f"[Tool: Publish] Publishing Article:\n{state['draft']}\n")
    print("Article successfully published!")
    return state['draft']

# --- Available Tools Registry ---
AVAILABLE_TOOLS = {
    "revise": revise_article_tool,
    "publish": publish_article_tool
}

# --- Nodes ---
def generate_article(state: ArticleState):
    prompt = "Write a professional yet engaging 150-word article about Agentic AI."
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}]
    )
    state["draft"] = response.choices[0].message.content
    print(f"\n[Agent] Generated Article:\n{state['draft']}\n")
    return state

def human_approval_and_tool_selection(state: ArticleState):
    """Human validates and selects which tool to use"""
    print("Available actions:")
    print("1. Publish the article (type 'publish')")
    print("2. Revise the article (type 'revise')")
    print("3. Reject and provide feedback (type 'feedback')")
    decision = input("\nWhat would you like to do? ").strip().lower()

    if decision == "publish":
        state["approved"] = True
        state["selected_tool"] = "publish"
        print("Human validated: PUBLISH tool selected")
    elif decision in ("revise", "feedback"):
        # Both options route to the revise tool with the human's feedback
        state["approved"] = False
        state["selected_tool"] = "revise"
        state["feedback"] = input("Please provide feedback for revision: ").strip()
        print("Human validated: REVISE tool selected with feedback")
    else:
        print("Invalid input. Defaulting to revision...")
        state["approved"] = False
        state["selected_tool"] = "revise"
        state["feedback"] = input("Please provide feedback for revision: ").strip()
    return state

def execute_validated_tool(state: ArticleState):
    """Execute the human-validated tool"""
    tool_name = state["selected_tool"]
    if tool_name in AVAILABLE_TOOLS:
        print(f"\nExecuting validated tool: {tool_name.upper()}")
        tool_function = AVAILABLE_TOOLS[tool_name]
        if tool_name == "revise":
            # Update the draft with revised content
            state["draft"] = tool_function(state)
            # Reset approval status so the loop returns to human review
            state["approved"] = False
            state["selected_tool"] = ""
        elif tool_name == "publish":
            # Execute publish tool
            tool_function(state)
            state["approved"] = True
    else:
        print(f"Error: Tool '{tool_name}' not found in available tools")
    return state

# --- Workflow Routing Logic ---
def route_after_tool_execution(state: ArticleState) -> Literal["approval", "end"]:
    """Route based on whether the article was published or needs more approval"""
    if state["selected_tool"] == "publish":
        return "end"
    return "approval"

# --- HITL Workflow ---
def hitl_workflow():
    print("\n=== Human-in-the-Loop Publishing with Tool Validation ===")
    builder = StateGraph(ArticleState)

    # Add nodes
    builder.add_node("generate", generate_article)
    builder.add_node("approval", human_approval_and_tool_selection)
    builder.add_node("execute_tool", execute_validated_tool)

    # Set entry point
    builder.set_entry_point("generate")

    # Add edges
    builder.add_edge("generate", "approval")
    builder.add_edge("approval", "execute_tool")

    # Add conditional edges after tool execution
    builder.add_conditional_edges(
        "execute_tool",
        route_after_tool_execution,
        {"approval": "approval", "end": END}
    )

    # Compile graph
    graph = builder.compile()

    # Save diagram
    try:
        with open("hitl_workflow_with_tools.png", "wb") as f:
            f.write(graph.get_graph().draw_mermaid_png())
        print("Workflow diagram saved as 'hitl_workflow_with_tools.png'")
    except Exception as e:
        print(f"Could not save diagram: {e}")

    # Execute workflow
    initial_state = {
        "draft": "",
        "approved": False,
        "feedback": "",
        "selected_tool": ""
    }
    # Each revision round runs two nodes (approval + execute_tool), so LangGraph's
    # default recursion limit caps the number of rounds; pass
    # config={"recursion_limit": ...} to invoke() if reviewers need more.
    graph.invoke(initial_state)

if __name__ == "__main__":
    hitl_workflow()
```

Human Feedback:

Keep the discussion big and simple so that both tech and non-tech people can understand

Agent Workflow Diagram

Agent Response

```
Understanding Agentic AI: The Future of Intelligent Assistance

Agentic AI represents a groundbreaking advancement in the field of artificial intelligence, characterized by its ability to operate independently while exhibiting goal-directed behavior. Unlike traditional AI systems that require constant human intervention, Agentic AI can analyze data, make decisions, and execute tasks autonomously. This revolutionary technology has the potential to transform various sectors, including healthcare, finance, and customer service, by streamlining processes and enhancing efficiency. One of the most notable features of Agentic AI is its adaptability; it learns from interactions and outcomes, continuously improving its performance. As more businesses adopt this technology, the opportunities for personalized user experiences and advanced predictive analytics expand significantly. However, the rise of Agentic AI also raises important discussions about ethics, accountability, and security. Striking the right balance between leveraging its capabilities and ensuring responsible usage will be crucial as we navigate this new era of intelligent automation. Embracing Agentic AI could fundamentally change our interactions with technology, ultimately enriching our daily lives and reshaping industries.

Article successfully published!
```

Observations

This demonstration reflected common HITL outcomes. With human review, the final article was clearer and more accurate, consistent with findings that HITL improves AI output quality. Human feedback removed errors and refined phrasing, confirming these benefits. Meanwhile, each review cycle added latency and workload. The automated run finished nearly instantly, whereas the HITL workflow paused twice for feedback. In practice, this trade-off is expected: machines provide speed, but humans provide precision.

Conclusion

In conclusion, human feedback can significantly improve agentic AI output. It acts as a safety net for errors and keeps outputs aligned with human intent. In this article, we saw that even a simple review step made the generated text more reliable. Whether to use HITL should ultimately depend on context: use human review where the stakes are high, and skip it in routine situations.

As the use of agentic AI grows, deciding when to rely on automation and when to add human oversight becomes more important. Regulations and best practices increasingly require some level of human review in high-risk AI deployments. The overall idea is to use automation for its efficiency while keeping humans in charge of key decisions. Flexible human checkpoints will help us use agentic AI safely and responsibly.


Frequently Asked Questions

Q. What is Human-in-the-Loop (HITL) in agentic AI?

A. HITL is a design in which humans validate AI outputs at key points. It ensures accuracy, safety, and alignment with human values by adding review steps before final actions.

Q. When should HITL not be used?

A. HITL is unsuitable for routine, high-volume, or time-critical tasks where human intervention slows performance, such as live alerts or real-time content filtering.

Q. What are the benefits of human feedback in agentic AI?

A. Human feedback reduces errors, ensures compliance with laws and ethics, and builds trust and accountability in AI decision-making.

Q. How do workflows with and without HITL differ?

A. Without HITL, AI acts autonomously with speed but risks unchecked errors. With HITL, humans review drafts, improving reliability but adding time and effort.

Q. Why is human oversight important in agentic AI?

A. Oversight ensures that AI actions remain safe, ethical, and aligned with human intent, especially in high-stakes applications where errors have serious consequences.