Tired of the endless debate about which AI coding assistant is “the best”? What if the secret isn’t finding one perfect tool, but mastering a symphony of them? Forget the one-size-fits-all approach. The future of coding isn’t about loyalty; it’s about leveraging a diverse toolbox, each instrument tuned for a specific task.

This is the code post-scarcity era, where lines of code are no longer precious but a disposable commodity. In a world where you can generate 1,000 lines of code just to find a single bug and then delete it, the rules of the game have fundamentally changed.

“We are not just writing code anymore; we are orchestrating intelligence.”

In a recent post on X, Andrej Karpathy, a leading voice in the AI world, offered a detailed look at his evolving relationship with large language models (LLMs) in his coding workflow. His perspective reflects a broader shift: developers are moving toward building an LLM workflow, a layered system of tools and practices that adapts to context, task, and personal style.

What follows is a deep dive into Karpathy’s multi-layered approach to LLM-assisted coding, an exploration of the real-world implications for developers at all levels, and a set of actionable insights to help you craft your own optimal AI coding stack.

AI-Powered Coding Stack: A Multi-Layered Approach to Productivity

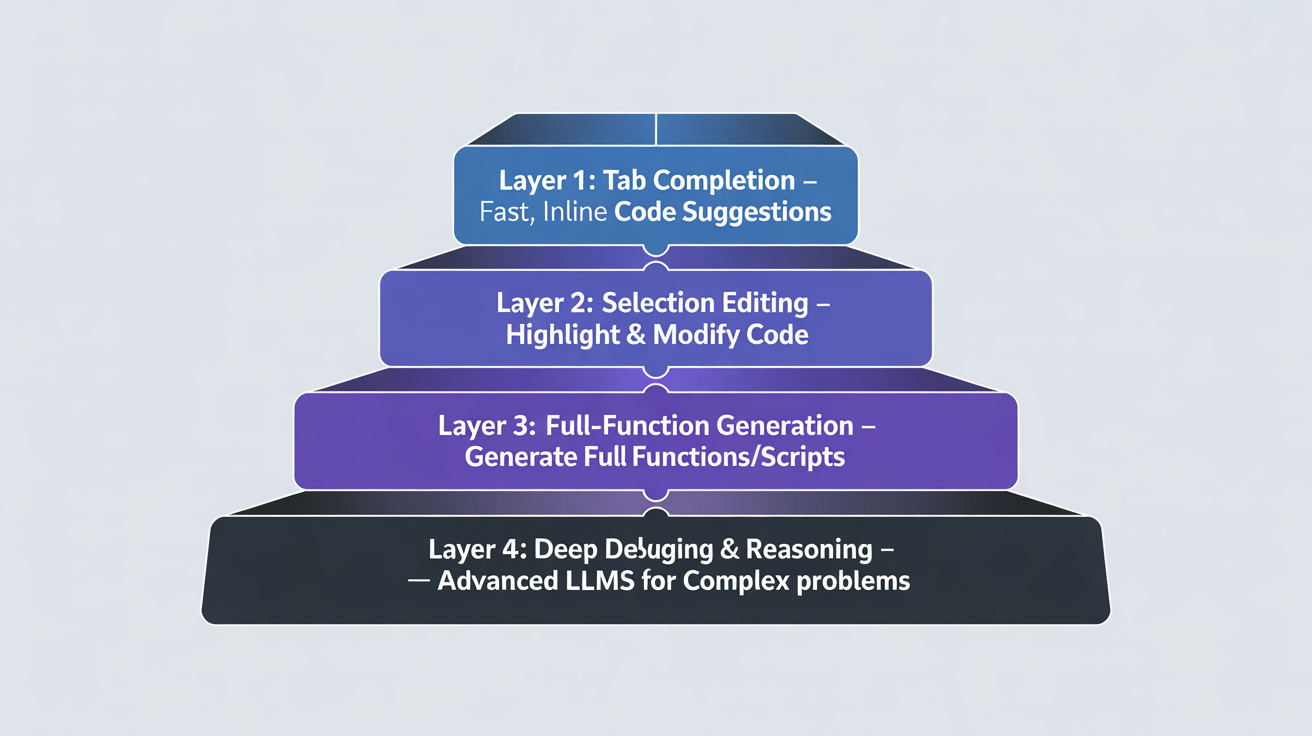

The landscape of AI-assisted coding is vast and ever-evolving. Andrej Karpathy recently shared his personal workflow, revealing a nuanced, multi-layered approach to leveraging different AI tools. Instead of relying on a single perfect solution, he expertly navigates a stack of tools, each with its own strengths and weaknesses. This isn’t just about using AI; it’s about understanding its different forms and applying them strategically.

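Karpathy’s LLM workflow for developers involves the following layers:

- Tab complete

- Highlighting and modifying

- Side-by-side assistants (e.g., Claude Code, Codex)

- A state-of-the-art model (e.g., GPT-5 Pro) for the hardest problems
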
Let’s break down this powerful, unconventional workflow, layer by layer, and see how you can adopt a similar mindset to supercharge your own productivity.

Layer 1: Tab Complete

At the core of Karpathy’s workflow is one simple task – tab completion. He estimates it makes up about 75% of his AI assistance. Why? Because it’s the most efficient way to communicate with the model. Writing a few lines of code or comments is a high-bandwidth way of specifying a task. You’re not trying to describe a complex function in a text prompt; you’re simply demonstrating what you want, in the right place, at the right time.

This isn’t about AI writing the whole function for you. It’s about a collaborative, back-and-forth process. You provide the intent, and the AI provides the rapid, context-aware suggestions. This workflow is fast, low-latency, and keeps you in the driver’s seat. It’s the equivalent of a co-pilot that finishes your sentences, not one that takes over the controls. For this, Karpathy relies on Cursor, an AI-powered code editor.

- Why it Works: It minimizes the “communication overhead.” Text prompts can be verbose and suffer from ambiguity. Code is direct.

- Example: You type def calculate_ and the AI instantly suggests calculate_total_price(items, tax_rate):. You’re guiding the process with minimal effort.
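To make “specifying by demonstration” concrete, here is a minimal Python sketch: a one-line comment and a function signature act as the prompt, and the body is the kind of completion a tab-complete model might propose. The function name comes from the example above; treating items as hypothetical (unit_price, quantity) pairs is an assumption for illustration, and real suggestions will vary by tool and context.

```python
# Comment + signature = the "prompt". Assumption: items is a list of
# (unit_price, quantity) pairs and tax_rate is a fraction such as 0.1.
def calculate_total_price(items, tax_rate):
    # Everything below is the kind of body a completion model might suggest;
    # review it before accepting.
    subtotal = sum(unit_price * quantity for unit_price, quantity in items)
    return round(subtotal * (1 + tax_rate), 2)


print(calculate_total_price([(10.0, 2), (5.0, 1)], 0.1))  # 27.5
```

The two lines you typed carry most of the specification; the model only has to fill in the obvious.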

Because even the best completions can get in the way, Karpathy toggles the feature on and off as needed. This keeps him in control and avoids unwanted suggestions.

Layer 2: Highlighting and Modifying

The next step up in complexity is a targeted approach: highlighting a specific chunk of code and asking for a modification. This is a significant jump from simple tab completion. You’re not just asking for a new line; you’re asking the AI to understand the logic of an existing block and transform it.

This is a powerful technique for refactoring and optimization: an essential part of an LLM workflow for developers. Need to convert a multi-line for loop into a concise list comprehension? Highlight the code and prompt the AI. Want to add error handling to a function? Highlight and ask. This workflow is perfect for micro-optimizations and focused improvements without rewriting the entire function from scratch.
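As a small illustration of the loop-to-comprehension case, here is a Python before/after pair. Both functions are hypothetical examples written for this article, not output from any specific tool; the final check confirms that the rewrite preserves behavior, which is worth doing with any AI-suggested refactor.

```python
# Before: the multi-line loop you might highlight.
def squares_of_evens_loop(numbers):
    result = []
    for n in numbers:
        if n % 2 == 0:
            result.append(n * n)
    return result


# After: the kind of list comprehension a "convert this" prompt might return.
def squares_of_evens(numbers):
    return [n * n for n in numbers if n % 2 == 0]


# Same input, same output: the refactor changed form, not behavior.
assert squares_of_evens_loop(range(7)) == squares_of_evens(range(7)) == [0, 4, 16, 36]
```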

- Why it Works: It provides a clear, bounded context. The AI isn’t guessing what you want from a broad prompt; it’s working within the explicit boundaries you’ve provided.

- Example: You highlight a block of nested if-then-else statements and prompt: “Convert this to a cleaner, more readable format.” The AI might suggest a switch statement or a series of logical and/or operations.
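Here is a sketch of that kind of rewrite in Python. Python has no classic switch statement (version 3.10 added match), so this version flattens the nesting into a lookup table instead, which is one of several reasonable outcomes. The shipping-cost domain, function names, and prices are invented for illustration.

```python
# Before: nested if/else of the kind you might highlight.
def shipping_cost_nested(region, express):
    if region == "domestic":
        if express:
            return 15
        else:
            return 5
    else:
        if region == "international":
            if express:
                return 40
            else:
                return 20
        else:
            raise ValueError(f"unknown region: {region}")


# After: one flat lookup table keyed by (region, express).
SHIPPING_COSTS = {
    ("domestic", False): 5,
    ("domestic", True): 15,
    ("international", False): 20,
    ("international", True): 40,
}


def shipping_cost(region, express):
    try:
        return SHIPPING_COSTS[(region, bool(express))]
    except KeyError:
        raise ValueError(f"unknown region: {region}") from None
```

The table makes every case visible at a glance, and adding a region becomes a one-line change rather than another level of nesting.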

Layer 3: Side-by-Side Assistants (e.g., Claude Code, Codex)

When the task at hand is too large for a simple highlight-and-modify, the next layer of Karpathy’s stack comes into play: running a more powerful assistant like Claude Code or Codex on the side. These tools are for generating larger, more substantial chunks of code that are still relatively easy to specify in a prompt.

This is where “YOLO mode” (you only live once), letting the agent edit files and run commands without asking for approval, becomes tempting but risky. Karpathy notes that these models can go off track, introducing unwanted complexity or simply doing dumb things, so he avoids YOLO mode and stays ready to hit ESC frequently. The point is to use the AI as a fast-drafting tool, not a hands-off solution: you are still the editor and the final authority.

Using these side-by-side assistants brings its own set of pros and cons.

The Pros:

- Speed: Generates large amounts of code quickly. This is a massive time-saver for boilerplate, repetitive tasks, or code you’d never write otherwise.

- “Vibe-Coding”: Invaluable when you’re working in an unfamiliar language or domain (e.g., Rust or SQL). The AI can bridge the gap in your knowledge, allowing you to focus on the logic.

- Ephemeral Code: This is the heart of the “code post-scarcity era.” Need a 1,000-line visualization to debug a single issue? The AI can create it in minutes. You use it, you find the bug, you delete it. No time lost.
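A 1,000-line visualization won’t fit here, but the same spirit applies at a smaller scale. The helper below is a hypothetical example of disposable debugging code: it compares the output of a known-good run with a buggy one and reports where they first diverge. The name first_divergence and the tolerance default are assumptions; the point is that code like this is cheap to generate, use once, and delete.

```python
import math


def first_divergence(expected, actual, tol=1e-9):
    """Return (index, expected_value, actual_value) at the first mismatch, or None.

    Throwaway helper: values are compared with math.isclose so tiny float noise
    is ignored. If one sequence is shorter, the missing side is reported as None.
    """
    for i, (e, a) in enumerate(zip(expected, actual)):
        if not math.isclose(e, a, rel_tol=tol, abs_tol=tol):
            return (i, e, a)
    if len(expected) != len(actual):
        n = min(len(expected), len(actual))
        e = expected[n] if n < len(expected) else None
        a = actual[n] if n < len(actual) else None
        return (n, e, a)
    return None


# Example: a reference run vs. a run with a suspected bug.
reference = [0.0, 0.5, 1.0, 1.5, 2.0]
buggy = [0.0, 0.5, 1.0, 1.75, 2.25]
print(first_divergence(reference, buggy))  # (3, 1.5, 1.75)
```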

The Cons:

- “Bad Taste”: Karpathy observes that these models often lack a sense of “code taste.” They can be overly defensive (too many try/catch), overcomplicate abstractions, or duplicate code instead of creating helper functions. A final “cleanup” pass is almost always necessary.

- Poor Teachers: Trying to get them to explain code as they write it is often a frustrating experience. They’re built for generation, not pedagogy.

- Over-bloating: They tend to build verbose, nested if-then-else constructs when a one-liner would suffice. This is plausibly a byproduct of their training data and their lack of a human sense of taste.
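The following Python sketch shows what a cleanup pass on that kind of output might look like. The “before” function is a hand-written caricature of the over-defensive, duplicated style described above, not actual model output, and the config-parsing task and default values are hypothetical. The “after” version keeps defaults in one place and catches only the exceptions that int() can actually raise here.

```python
# Before: over-defensive, duplicated, nested.
def parse_config_bloated(raw):
    result = {}
    try:
        if "host" in raw:
            try:
                result["host"] = str(raw["host"]).strip()
            except Exception:
                result["host"] = "localhost"
        else:
            result["host"] = "localhost"
    except Exception:
        result["host"] = "localhost"
    try:
        if "port" in raw:
            try:
                result["port"] = int(raw["port"])
            except Exception:
                result["port"] = 8080
        else:
            result["port"] = 8080
    except Exception:
        result["port"] = 8080
    return result


# After a cleanup pass: defaults in one place, one narrowly scoped try.
DEFAULTS = {"host": "localhost", "port": 8080}


def parse_config(raw):
    host = str(raw.get("host", DEFAULTS["host"])).strip()
    try:
        port = int(raw.get("port", DEFAULTS["port"]))
    except (TypeError, ValueError):  # e.g. None or "abc"
        port = DEFAULTS["port"]
    return {"host": host, "port": port}
```

For ordinary dictionary inputs the two functions return the same result; the cleaned-up one is simply a third of the length and says what it means.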

Layer 4: The Final Frontier

When all other tools fail, Karpathy turns to his final, most powerful layer: a state-of-the-art model like GPT-5 Pro. This is the nuclear option, reserved for the hardest, most intractable problems. This tool isn’t for writing code snippets or boilerplate; it’s for deep, complex problem-solving.

In the context of an LLM workflow for developers, this layer represents the top of the stack: the point where raw model power is applied strategically, not routinely. It’s where subtle bug hunting, deep research, and complex reasoning come into play. Karpathy describes instances where he, his tab-complete, and his side-by-side assistant are all stuck on a bug for ten minutes, but GPT-5 Pro, given the entire context, can dig for ten minutes and find a truly subtle, hidden issue.

Why Is It the Last Resort?

GPT-5 Pro and models in its league are powerful, but a few trade-offs determine when it makes sense to reach for them:

- Latency: It’s slower. You can’t use it for rapid, real-time coding. You have to wait for its deep, comprehensive analysis.

- Scope: Its power is in its ability to understand a massive context. It can “dig up all kinds of esoteric docs and papers,” providing a level of insight that simpler models can’t match.

- Strategic Use: This is not a daily driver. It’s a strategic weapon for otherwise impossible tasks, like complex architectural cleanup suggestions or a full literature review on a specific coding approach.
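“Given the entire context” is the operative phrase. The source doesn’t say how that context is assembled, so the following is only a minimal Python sketch under the assumption that you paste a single text blob into the model: it walks a project directory and concatenates matching source files, each preceded by a path header. The function name pack_context, the header format, and the character budget are hypothetical choices, and no model API is called.

```python
from pathlib import Path


def pack_context(root, suffixes=(".py",), max_chars=200_000):
    """Concatenate source files under root into one prompt-ready string.

    Hypothetical helper: each file is preceded by a header with its relative
    path so the model can refer to locations. Files are visited in sorted
    order, and packing stops once the next file would exceed max_chars.
    """
    root = Path(root)
    parts, total = [], 0
    for path in sorted(root.rglob("*")):
        if not path.is_file() or path.suffix not in suffixes:
            continue
        text = path.read_text(encoding="utf-8", errors="replace")
        chunk = f"===== {path.relative_to(root).as_posix()} =====\n{text}\n"
        if total + len(chunk) > max_chars:
            break
        parts.append(chunk)
        total += len(chunk)
    return "".join(parts)
```

You would then hand the resulting string to the model together with a description of the bug and what you have already ruled out.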

AI Coding Tools: Not About Which But How

Karpathy’s workflow is a masterclass in strategic tool usage. It’s not about finding the perfect tool but about building a cohesive, multi-layered system. Each tool has a specific role, a specific time, and a specific purpose.

- Tab complete for high-bandwidth, in-the-moment tasks.

- Highlighting for focused refactoring and quick modifications.

- Side-by-side assistants for generating larger chunks of code and exploring unfamiliar territory.

- A powerful, state-of-the-art model for debugging the truly intractable problems and performing deep research.
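One way to internalize the ladder is to write it down as a rule of thumb. The Python sketch below is purely illustrative: the Layer names mirror the four layers above, the ten-minute threshold echoes Karpathy’s anecdote, and the 50-line cutoff is an arbitrary assumption. It is not a rule Karpathy states.

```python
from enum import Enum


class Layer(Enum):
    TAB_COMPLETE = 1
    HIGHLIGHT_AND_MODIFY = 2
    SIDE_BY_SIDE_ASSISTANT = 3
    FRONTIER_MODEL = 4


def pick_layer(lines_affected, existing_code_selected, stuck_minutes):
    """Hypothetical heuristic: start at the cheapest layer, escalate when stuck."""
    if stuck_minutes >= 10:  # everything else has failed: bring in the big model
        return Layer.FRONTIER_MODEL
    if lines_affected > 50:  # arbitrary cutoff for "too big to highlight"
        return Layer.SIDE_BY_SIDE_ASSISTANT
    if existing_code_selected:  # a bounded change to code you can point at
        return Layer.HIGHLIGHT_AND_MODIFY
    return Layer.TAB_COMPLETE
```

In practice the inputs are judgment calls, not measurements; the value of the exercise is making your own escalation order explicit.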

This is the future of work for leaders, students, and professionals alike. The era of the single-tool hero is over. Karpathy also names a new kind of “anxiety” that many developers are grappling with: the fear of not being at the frontier.

It is real, but the solution isn’t to chase the next big thing. The solution is to understand the ecosystem, learn to “stitch up” the pros and cons of different tools, and become an orchestra conductor, not just a single musician.

Conclusion

Your ability to thrive in this new era won’t be measured by the lines of code you write, but by the complexity of the problems you solve and the speed at which you solve them. AI is a tool; your human intuition, taste, and strategic thinking are the secret weapons. It’s not about finding one perfect tool for everything; it’s about understanding the requirements of each task. For developers, this means building an LLM workflow that complements your skills: recognizing which tools you are comfortable with and creating a system that works for you. Karpathy shared his own workflow, but you need to test, adapt, and create one that aligns with your unique style and goals.

Are you ready to stop chasing the perfect tool and start building the perfect workflow?