“China is going to win the AI race.” This recent statement by Nvidia CEO Jensen Huang has created a lot of buzz. Chinese models have outgrown their early reputation as “GPT knock-offs”. Recent releases have pushed the boundary of what was possible while staying economical and open-source, and they’ve garnered a lot of attention. Some newer models even rival, and on certain benchmarks surpass, AI colossi like ChatGPT, Claude and Gemini at a fraction of the cost.

This article explains how Chinese AI models advanced so tremendously in such a short span, and what countries playing catch-up in this race can learn from them.


Why are Chinese Models Different?

“The best optimization is often subtraction.” This motto lies at the crux of the AI models that China produces. Chinese models are winning not because they have more resources, but because they’ve learned to use fewer resources better.

Western labs like OpenAI, Anthropic, and Google have built enormous infrastructure by scaling up compute and data. Chinese labs, on the other hand, were forced by export bans, cost ceilings, and limited access to Nvidia chips to do the opposite: make models that are smaller, faster, cheaper, and still competitive. The optimization route wasn’t a choice; it was a necessity.

This played out as follows:

- Mixture-of-Experts (MoE) architectures, as used in DeepSeek V3 and Kimi K2, activate only a small subset of the model’s parameters for each token, which cuts compute costs drastically.

- Instead of brute-forcing training with thousands of Nvidia H100s (which export controls kept out of reach), teams optimized parallelization, compression, and inference.

- The result: models trained at a fraction of GPT-4’s cost that perform close to it.
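To make the MoE point concrete, here is a minimal sketch of top-k expert routing in plain Python. It assumes a generic design (a linear router that scores every expert, softmax gates renormalized over the k winners, and toy experts that simply scale their input); the names `moe_forward`, `make_expert` and `softmax` are illustrative, and the real routers in DeepSeek V3 and Kimi K2 differ in detail. What it shows is the core idea: only k of the N experts are evaluated per token, so per-token compute scales with k rather than with the total parameter count.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Vector, router: List[Vector], experts: List[Expert],
                k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token through only the top-k experts (generic top-k gating sketch)."""
    # The router scores every expert with a cheap dot product.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    # Keep the k highest-scoring experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Gate weights are renormalized over the chosen experts only.
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # the expensive part: runs k times, not len(experts) times
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen


# Toy demo: 8 hypothetical experts, each of which scales its input by (index + 1).
call_log: List[int] = []


def make_expert(idx: int) -> Expert:
    def expert(v: Vector) -> Vector:
        call_log.append(idx)  # record which experts actually ran
        return [(idx + 1) * vi for vi in v]
    return expert


experts = [make_expert(i) for i in range(8)]
router = [[float(i), 0.0] for i in range(8)]  # expert i scores i on this token
output, chosen = moe_forward([1.0, 0.0], router, experts, k=2)
print("experts chosen:", chosen)            # experts chosen: [7, 6]
print("experts evaluated:", len(call_log))  # experts evaluated: 2
```

The same principle operates at production scale: DeepSeek V3 has 671B total parameters but activates roughly 37B of them per token.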

State Backing

Unlike in the West, where AI progress is driven largely by companies and startups, China’s government heavily incentivises AI development. It does so by offering:

- Subsidies and infrastructure support: The government supports the whole AI stack, from chips to data centres to energy. Smaller companies can receive computing-power vouchers that give them access to AI training infrastructure at reduced cost.

- Funding: China launched a national venture-capital guidance fund of around 1 trillion yuan (~US$138 billion) to invest in “hard-technology” fields including AI, semiconductors and renewable energy.

- Industrial strategy & policy alignment: AI is designated a “frontier industry” in China’s national plans. The state issues guiding documents, aligns corporate goals with national goals, and fosters “AI+” integration across sectors.

Together, these measures ensure that AI isn’t treated as a standalone sector but as an enabler across the whole economy.

The US approach, by contrast, creates problems for its own AI development: stringent policies on foreign imports and exorbitant tariffs make it hard for American tech hubs to do business with other countries. U.S. restrictions on the sale of advanced AI chips to China also backfired by pushing China to build its own stack.

Tightly Integrated Systems

China took the Apple route to AI: tightly integrated systems in which the specialized hardware, the software built to match it, and the supply chain are all domestic. Instead of GPUs designed for a multitude of tasks such as gaming, video processing, and general programming, the hardware is optimized specifically for training models.

Many Western AI operations still lean heavily on general-purpose GPUs (originally built for graphics or broad compute workloads) rather than chips custom-designed for specific AI workloads. General-purpose hardware is optimized for a broad range of uses, which means less tailoring for AI-specific operations such as massive matrix multiplication, sparse models, and low-precision inference. This puts Western labs at an inherent disadvantage: they end up using only a fraction of the compute capacity the hardware offers as a whole.

The shift to specialized hardware is under way in the West, but slowly. Where models in China are already being trained on tailored hardware, such cases in the West are still few and far between.
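Low-precision inference, one of the AI-specific operations mentioned above, comes down to storing weights in fewer bits and doing most of the arithmetic in integers. The sketch below shows generic symmetric per-tensor int8 quantization in plain Python; the function names `quantize_int8`, `dequantize_int8` and `int8_dot` are illustrative, and real inference stacks typically use per-channel scales, fused kernels, and hardware-specific formats. Each int8 value takes one byte instead of the four bytes of a float32, and the integer multiply-accumulate in `int8_dot` is the kind of operation AI-specific hardware is designed to run fast.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each value to the nearest step and clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Recover approximate float values from int8 codes."""
    return [qi * scale for qi in q]


def int8_dot(qa: List[int], sa: float, qb: List[int], sb: float) -> float:
    """Dot product using integer multiply-accumulate and one float rescale at the end."""
    acc = sum(a * b for a, b in zip(qa, qb))  # pure integer arithmetic
    return acc * sa * sb


a = [0.5, -1.0, 0.25]
b = [1.0, 0.5, -0.75]
qa, sa = quantize_int8(a)
qb, sb = quantize_int8(b)
exact = sum(x * y for x, y in zip(a, b))
approx = int8_dot(qa, sa, qb, sb)
print(f"exact={exact:.4f} int8={approx:.4f}")
```

The quantized result differs from the exact one by a small rounding error, which is the trade-off low-precision inference accepts in exchange for less memory traffic and cheaper arithmetic.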

Talent and Workforce

Much of the AI talent working in the US isn’t from the US. For a long time, Western countries benefited from the best minds around the world by offering them higher pay and a better standard of living than was available in their home countries. With tariffs and other strict policies in place, this has become harder.

China, on the other hand, boasts one of the largest skilled workforces in the world. Jensen Huang captured this perfectly: “They (China) have many AI researchers, in fact 50% of the world’s AI researchers are in China. And they develop very good AI technology.” China benefits not only from the centralized system its government has established, but also from its massive, skilled population.

Open vs Closed

One area of AI where China is the undisputed leader is open source. To be clear, China didn’t take the open-source route out of altruism. It did so because necessity, strategy, and competition all collided at once.

- Necessity: Because of the US export ban on leading Nvidia chips, Chinese labs couldn’t rely on brute-force training, so they focused on building optimized systems. Verifying that those systems worked as expected required a lot of feedback, and open-sourcing the models supplied it.

- Strategy: Given the lead that Western models had, building the most powerful models was out of the question for China. Instead, it carved out a niche for itself: open source. As the leading models became ever more proprietary, the shift to open source helped Chinese labs win mindshare and become the go-to option for indie developers.

- Competition: With government funding in place, all that was left was to be the best. Unlike Silicon Valley rivalries, which play out behind closed doors, China took an inclusive approach to growth: startups, established companies, and individual contributors worked transparently toward a single goal, improving the models.

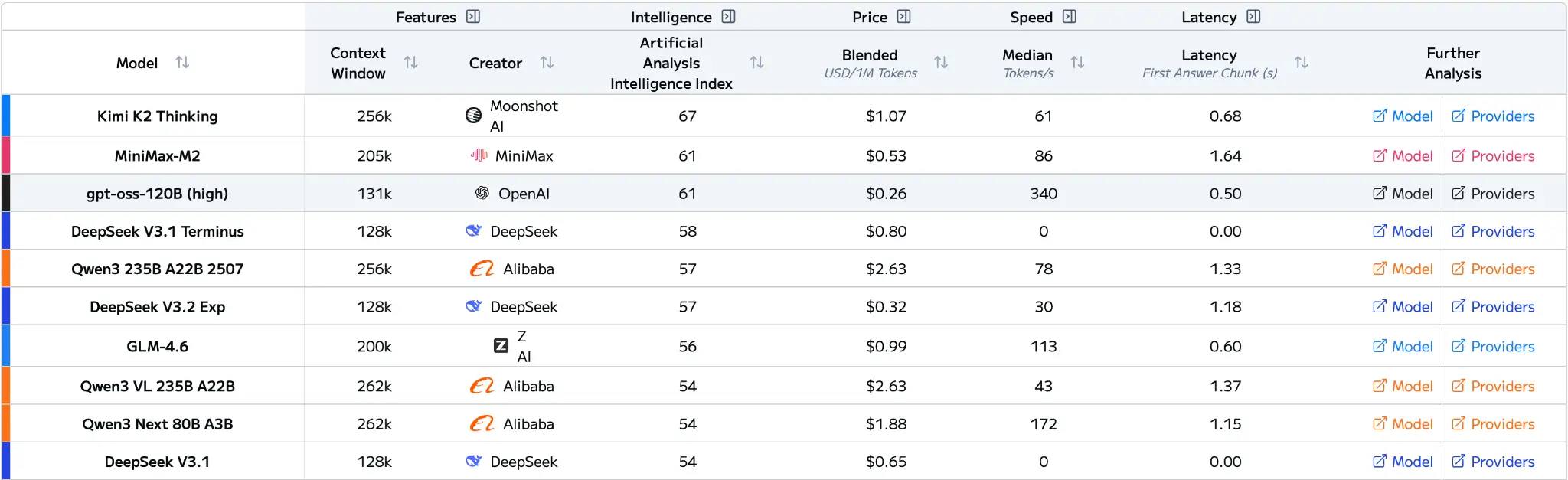

The open approach of China’s LLM labs clearly paid off: most of the top open-source models are now developed in China.

Who Won?

There isn’t a single winner. The West leads in frontier intelligence and raw performance; China leads in efficiency, scale, and accessible open-source models. While Western labs kept their models closed, China filled the gap and effectively took over the innovation loop for affordable, modifiable models.

And the race is shifting from “who can build the biggest model?” to “who can deploy AI everywhere?”

Frequently Asked Questions

A. They were pushed into extreme optimization. Limited access to top Nvidia chips forced them to build smaller, cheaper, highly efficient models instead of brute-forcing scale.

A. Open-sourcing wasn’t charity. It gave them global feedback, boosted adoption, and created an edge where Western labs stayed closed.

A. It depends on the metric. The West still leads in raw capability, but China dominates efficiency, cost, scale, and open-source momentum.