Introduction

As a bibliophile who enjoys extracting knowledge from books and articles, I’ve observed how modern reading habits have shifted towards skimming for relevant information amidst information overload. Recently, while reading an article on India’s upcoming tour of Australia, I found myself quickly skimming through the text, focusing mainly on headlines and details about Virat Kohli. This experience prompted me to explore the possibilities in Natural Language Processing (NLP), particularly in relation extraction and summarization.

I realized the potential of building an information extraction model using machine learning techniques such as BERT and Naive Bayes for automating data extraction tasks from diverse sources, including medical records. This project not only enhanced my NLP skills but also deepened my understanding of data science and the power of machine learning models in automating complex tasks.

Learning Outcomes

- Evaluate the performance of natural language processing (NLP) models across various tasks and datasets using standard metrics such as precision, recall, F1-score, and accuracy.

- Design and develop advanced NLP pipelines using techniques such as named entity recognition (NER), sentiment analysis, and text summarization.

- Develop custom NLP solutions using NLTK’s extensive collection of corpora, lexical resources, and algorithms.

- Apply regular expressions for pattern matching and text search in large datasets and documents.

- Design and implement information retrieval systems using techniques such as vector space models, term frequency-inverse document frequency (TF-IDF), and document similarity metrics.

- Develop knowledge base-driven applications for tasks such as question answering, entity recognition, and recommendation systems.

Table of contents

- Introduction

- What is Information Extraction?

- How Does Information Extraction Work?

- Where Do We Go from Here?

- Getting Familiar with the Text Dataset

- Speech Text Pre-Processing

- Split the Speech into Different Sentences

- Information Extraction using SpaCy

- Information Extraction #1 – Finding Mentions of Prime Minister in the Speech

- Information Extraction #2 – Finding Initiatives

- Finding Patterns in the Speeches

- Information Extraction #3 – Rule on Noun-Verb-Noun Phrases

- Information Extraction #4 – Rule on Adjective Noun Structure

- Information Extraction #5 – Rule on Prepositions

- Conclusion

- Frequently Asked Questions

What is Information Extraction?

Text data contains a plethora of information, but not all of it is relevant to your needs. You may, for example, want to extract specific relationships between entities. What you extract depends on your individual requirements and objectives.

Imagine having to go through all the legal documents to find legal precedents to validate your current case, or through all the research papers to find relevant information to cure a disease. There are many more examples, like resume harvesting, media analysis, and email scanning.

Now imagine having to manually go through all of that text and extract the most relevant information. Clearly, it is an uphill battle, and you might even end up missing some important information.

For anyone analyzing textual data, the difficult part is not finding the right documents but finding the right information within them. Understanding the relationships between entities, understanding how events unfolded, or simply finding hidden gems of information is what anyone is looking for when they go through a piece of text.

Therefore, an automated way of extracting information from textual data and presenting it in a structured manner brings many benefits and tremendously reduces the time we spend skimming through text documents. This is precisely what information extraction strives to achieve.

Using information extraction in NLP, we can retrieve predefined information, such as the name of a person or the location of an organization, or identify a relation between entities, and save this information in a structured format such as a database.

Let me show you another example I’ve taken from a cricket news article:

We can extract the following information from the text:

- Country – India, Captain – Virat Kohli

- Batsman – Virat Kohli, Runs – 2

- Bowler – Kyle Jamieson

- Match venue – Wellington

- Match series – New Zealand

- Series highlight – single fifty, 8 innings, 3 formats

This enables us to reap the benefits of powerful query tools like SQL for further analysis. Creating such structured data using information extraction will not only help us in analyzing the documents better but also help us in understanding the hidden relationships in the text.
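To make the SQL point concrete, here is a minimal sketch using Python's built-in sqlite3 module. It stores some of the facts listed above as attribute/value rows; the table name and schema are illustrative assumptions, not part of the original project.

```python
import sqlite3

# Facts listed above, stored as (attribute, value) rows.
# The table name and schema are illustrative assumptions.
facts = [
    ("Country", "India"),
    ("Captain", "Virat Kohli"),
    ("Batsman", "Virat Kohli"),
    ("Runs", "2"),
    ("Bowler", "Kyle Jamieson"),
    ("Match venue", "Wellington"),
    ("Match series", "New Zealand"),
]

conn = sqlite3.connect(":memory:")  # in-memory database, nothing is written to disk
conn.execute("CREATE TABLE facts (attribute TEXT, value TEXT)")
conn.executemany("INSERT INTO facts VALUES (?, ?)", facts)

# With the facts in a table, ordinary SQL answers questions about the article,
# e.g. "in which roles does Virat Kohli appear?"
rows = conn.execute(
    "SELECT attribute FROM facts WHERE value = ? ORDER BY attribute",
    ("Virat Kohli",),
).fetchall()
roles = [attribute for (attribute,) in rows]
print(roles)  # ['Batsman', 'Captain']
```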

How Does Information Extraction Work?

Given the capricious nature of text data that changes depending on the author or the context, Information Extraction seems like a daunting task. But it doesn’t have to be that way!

We all know that sentences are made up of words belonging to different parts of speech (POS). Traditional English grammar recognizes eight of them: noun, pronoun, verb, adjective, adverb, preposition, conjunction, and interjection.

The POS describes the role a word plays in a given sentence. For example, take the word “right”. In the sentence “The boy was awarded chocolate for giving the right answer”, “right” is used as an adjective, whereas in the sentence “You have the right to say whatever you want”, “right” is a noun.

This goes to show that the POS tag of a word carries a lot of significance when it comes to understanding the meaning of a sentence. And we can leverage it to extract meaningful information from our text.

Let’s take an example to understand this. We’ll be using the popular spaCy library here.

Python Code:

```python
import spacy

# load the small English model; NER and text categorization are not needed here
nlp = spacy.load('en_core_web_sm', disable=['ner', 'textcat'])

text = "This is a sample sentence."

# create a spaCy Doc object
doc = nlp(text)

# print each token with its coarse-grained POS tag
for token in doc:
    print(token.text, '->', token.pos_)
```

We were easily able to determine the POS tags of all the words in the sentence. But how does it help in Information Extraction?

Well, if we wanted to extract nouns from the sentences, we could take a look at POS tags of the words/tokens in the sentence, using the attribute .pos_, and extract them accordingly.
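Here is a minimal sketch of that filter. So that it runs without downloading a model, the tokens are hand-annotated stand-ins shaped like spaCy tokens (a `text` and a `pos_` field). The tags are my assumption of what the tagger would produce, not captured output. With a real spaCy `Doc`, the same list comprehension works unchanged.

```python
from typing import NamedTuple

class Token(NamedTuple):
    text: str
    pos_: str  # named after spaCy's Token.pos_ attribute

# Hand-annotated stand-in for nlp("This is a sample sentence.");
# the tags are assumptions, not real model output
doc = [
    Token("This", "PRON"),
    Token("is", "AUX"),
    Token("a", "DET"),
    Token("sample", "NOUN"),
    Token("sentence", "NOUN"),
    Token(".", "PUNCT"),
]

# Keep only the tokens whose coarse-grained POS tag is NOUN
nouns = [token.text for token in doc if token.pos_ == "NOUN"]
print(nouns)  # ['sample', 'sentence']
```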

It was that easy to extract words based on their POS tags. But sometimes extracting information purely based on the POS tags is not enough. Have a look at the sentence below:

If I wanted to extract the subject and the object from a sentence, I couldn’t do that based on POS tags alone. For that, I need to look at how the words are related to each other. These relationships are called dependencies.

We can use spaCy’s displaCy visualizer to display the word dependencies graphically:

Pretty cool! This directed graph is known as a dependency graph. It represents the relations between different words of a sentence.

Each word is a node in the Dependency graph. The relationship between words is denoted by the edges. For example, “The” is a determiner here, “children” is the subject of the sentence, “biscuits” is the object of the sentence, and “cream” is a compound word that gives us more information about the object.

The arrows carry a lot of significance here:

- The arrowhead points to the word that depends on the word at the origin of the arrow

- The dependent word is called the child node of the word it depends on. For example, “children” is the child node of “love”

- The word which has no incoming arrow is called the root node of the sentence

Let’s see how we can extract the subject and the object from the sentence. Just as spaCy tokens have the .pos_ attribute for POS tags, they have the .dep_ attribute for the dependency label of a token:

Voila! We have the subject and object of our sentence.
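The sketch below mirrors this on a hand-annotated parse of the sentence described above, “The children love cream biscuits”. The labels follow spaCy's English scheme (nsubj, dobj, compound, ROOT), and, as in spaCy, the root token is its own head. The annotations are assumed rather than produced by a model. The code finds the root, its children, and the subject and object.

```python
from typing import NamedTuple

class Token(NamedTuple):
    i: int      # position in the sentence
    text: str
    dep_: str   # dependency label, named after spaCy's Token.dep_
    head: int   # index of the head token; the root points to itself

# Hand-annotated parse of "The children love cream biscuits" (an assumption)
sent = [
    Token(0, "The", "det", 1),
    Token(1, "children", "nsubj", 2),
    Token(2, "love", "ROOT", 2),
    Token(3, "cream", "compound", 4),
    Token(4, "biscuits", "dobj", 2),
]

# The root is the only node with no incoming arc, i.e. it is its own head
root = next(t for t in sent if t.head == t.i)
children = [t.text for t in sent if t.head == root.i and t.i != root.i]
subject = [t.text for t in sent if t.dep_ == "nsubj"]
obj = [t.text for t in sent if t.dep_ == "dobj"]

print(root.text, children)  # love ['children', 'biscuits']
print(subject, obj)         # ['children'] ['biscuits']
```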

Using POS tags and Dependency tags, we can look for relationships between different entities in a sentence. For example, in the sentence “The cat perches on the window sill”, we have the subject, “cat”, the object “window sill”, related by the preposition “on”. We can look for such relationships and much more to extract meaningful information from our text data.

I suggest going through this amazing tutorial which explains Information Extraction in detail with tons of examples.

Where Do We Go from Here?

We have briefly spoken about the theory regarding Information Extraction which I believe is important to understand before jumping into the crux of this article.

“An ounce of practice is generally worth more than a ton of theory.” –E.F. Schumacher

In the following sections, I am going to explore a text dataset and apply the information extraction technique to retrieve some important information, understand the structure of the sentences, and the relationship between entities.

So, without further ado, let’s get cracking on the code!

Getting Familiar with the Text Dataset

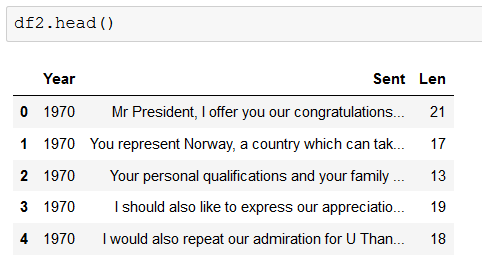

The dataset we are going to be working with is the United Nations General Debate Corpus. It contains speeches made by representatives of all the member countries from the year 1970 to 2018 at the General Debate of the annual session of the United Nations General Assembly.

But we will take a subset of this dataset and work only with the speeches made by India at these debates. This keeps the task manageable and lets us focus on understanding Information Extraction. It leaves us with 49 speeches made by India over the years, each ranging from about 2,000 to more than 6,000 words.

Having said that, let’s have a look at our dataset:

I will print a snapshot of one of the speeches to give you a feel of what the data looks like:

Now let’s start working with our dataset!

Speech Text Pre-Processing

First, we need to clean our text data. When I went over a few speeches, I found that each paragraph was numbered to identify it distinctly. As in any other text dataset, there were also unwanted characters such as newlines, hyphens, and apostrophes, as well as salutations.

Another unwanted element, specific to this dataset, was the references each speech made to other documents. We obviously don’t want those either.

I have written a simple function to clean the speeches. An important point here is that I haven’t used lemmatization or changed the words to lowercase as it has the potential to change the POS tag of the word. We certainly don’t want to do that as you will see in the upcoming subsections.
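The original cleaning function isn't reproduced here, so the following is only a hypothetical sketch of the steps described above. The regular expressions encode my assumptions about how paragraph numbers, document references (taken to look like “(A/37/PV.5)”), and salutations appear in the raw text; adjust them after inspecting real speeches.

```python
import re

def clean_speech(text: str) -> str:
    """Hypothetical minimal cleaner; each pattern is an assumption about the raw speeches."""
    # Paragraph numbers such as "12. " at the start of a line
    text = re.sub(r"(?m)^\s*\d+\.\s+", "", text)
    # References to other documents, assumed to look like "(A/37/PV.5)"
    text = re.sub(r"\(A/[^)]*\)", "", text)
    # Salutations, assumed to look like "Mr. President," or "Madam President,"
    text = re.sub(r"\bM(?:r|adam)\.?\s+President,?\s*", "", text)
    # Newlines and hyphens become spaces; straight and curly apostrophes are dropped
    text = re.sub(r"[\n\-]", " ", text)
    text = re.sub(r"['\u2019]", "", text)
    # Collapse whitespace and remove spaces left before punctuation.
    # No lowercasing or lemmatization, since both can change POS tags.
    text = re.sub(r"\s+", " ", text).strip()
    return re.sub(r"\s+([.,;:])", r"\1", text)

raw = ("1. Mr. President, India welcomes the\nnon-aligned movement (A/37/PV.5).\n"
       "2. We thank the Secretary-General.")
print(clean_speech(raw))
# prints: India welcomes the non aligned movement. We thank the Secretary General.
```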

Right, now that we have our minimally cleaned speeches, we can split them up into separate sentences.

Split the Speech into Different Sentences

Splitting our speeches into separate sentences will allow us to extract information from each sentence. Later, we can combine it to get cumulative information for any specific year.
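One simple way to do the split is sketched below, under the assumption that a sentence ends with '.', '!' or '?' followed by whitespace and a capital letter. spaCy's `doc.sents` is the more robust option, since it relies on the parser; the regex version just keeps the example self-contained. The speech text is a toy placeholder.

```python
import re

def split_sentences(speech: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' when followed by whitespace
    and a capital letter. Abbreviations like "Mr. President" will be split
    wrongly, which is one reason to prefer spaCy's doc.sents."""
    parts = re.split(r"(?<=[.!?])\s+(?=[A-Z])", speech.strip())
    return [p for p in parts if p]

# Toy placeholder speeches keyed by year
speeches = {1989: "India welcomes the agreement. We support disarmament! Is peace possible?"}

# One (year, sentence) row per sentence, ready to load into a dataframe
rows = [(year, s) for year, text in speeches.items() for s in split_sentences(text)]
for row in rows:
    print(row)
```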

Finally, we can create a dataframe containing the sentences from different years:

After performing this operation, we end up with 7150 sentences. Going over them and extracting information manually will be a difficult task. That’s why we are looking at Information Extraction using NLP techniques!

Information Extraction using SpaCy

Now, we can start working on the task of Information Extraction. We will be using the spaCy library for working with the text data. It has all the necessary tools that we can exploit for all the tasks we need for information extraction.

Let me import the relevant spaCy modules that we will require for the task ahead:

We will need the spaCy Matcher class to create a pattern to match phrases in the text. We’ll also require the displaCy module for visualizing the dependency graph of sentences.

The visualise_spacy_tree library will be needed for creating a tree-like structure out of the dependency graph, which makes the graph easier to read. Finally, IPython’s Image class and display function are required to output the tree.

But you don’t need to worry about these too much. It will become clear as you look at the code.

Information Extraction #1 – Finding Mentions of Prime Minister in the Speech

When working on information extraction tasks, it is important to manually go over a subset of the dataset to understand what the text is like and determine if anything catches your attention at first glance. When I first went over the speeches, I found many of them referred to what the Prime Minister had said, thought, or achieved in the past.

We know that a country is nothing without its leader. The destination a country ends up in is by and large the result of the able guidance of its leader. Therefore, I believe it is important to extract those sentences from the speeches that referred to Prime Ministers of India, and try and understand what their thinking and perspective were, and also try to unravel any common or differing beliefs over the years.

To achieve this task, I used spaCy’s Matcher class. It allows us to match a sequence of words based on certain patterns. For the current task, we can expect that whenever a Prime Minister is referred to in a speech, it will be in one of the following ways:

- Prime Minister of [Country] …

- Prime Minister [Name] …

Using this general understanding, we can come up with a pattern:

Let me walk you through this pattern:

- Here, each dictionary in the list matches a unique word

- The first and second dictionaries match the keyword “Prime Minister” irrespective of whether it is in uppercase or not, which is why I have included the key “LOWER”

- The third dictionary matches a word that is a preposition. What I am looking for here is the word “of”. As discussed before, it may or may not be present in the phrase, so the additional key “OP” with the value “?” marks this token as optional

- Finally, the last dictionary in the pattern should be a proper noun. This can either be the name of the country or the name of the prime minister

- The matched tokens must appear consecutively; otherwise, the pattern will not match the phrase
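The pattern described in these bullets can be written in spaCy's token-pattern format; with spaCy v3 it would be registered via `Matcher(nlp.vocab)` and `matcher.add(...)`. To keep the example runnable without a model, the sketch adds a tiny pure-Python matcher that understands only the LOWER, POS, and OP "?" keys used here, applied to a hand-tagged toy sentence. It illustrates the matching logic; it is not spaCy's implementation, and the label "PRIME_MINISTER" is hypothetical.

```python
from typing import NamedTuple

class Token(NamedTuple):
    text: str
    pos_: str  # coarse POS tag, named after spaCy's Token.pos_

# The pattern in spaCy's token-pattern format. With spaCy v3:
#   matcher = Matcher(nlp.vocab); matcher.add("PRIME_MINISTER", [pattern])
pattern = [
    {"LOWER": "prime"},
    {"LOWER": "minister"},
    {"POS": "ADP", "OP": "?"},
    {"POS": "PROPN"},
]

def token_ok(tok, spec):
    """Check one token against one pattern dictionary (LOWER and POS only)."""
    if "LOWER" in spec and tok.text.lower() != spec["LOWER"]:
        return False
    if "POS" in spec and tok.pos_ != spec["POS"]:
        return False
    return True

def match_at(tokens, start, specs):
    """Return the end index of a match beginning at `start`, or None.
    Supports only the optional operator "?", which is all this pattern needs."""
    if not specs:
        return start
    spec, rest = specs[0], specs[1:]
    if start < len(tokens) and token_ok(tokens[start], spec):
        end = match_at(tokens, start + 1, rest)
        if end is not None:
            return end
    if spec.get("OP") == "?":
        return match_at(tokens, start, rest)  # retry without the optional token
    return None

def find_matches(tokens, specs):
    spans = []
    for start in range(len(tokens)):
        end = match_at(tokens, start, specs)
        if end is not None:
            spans.append(" ".join(t.text for t in tokens[start:end]))
    return spans

# Hand-tagged toy sentence (tags are assumptions, not model output)
sent = [Token(w, p) for w, p in [
    ("The", "DET"), ("Prime", "PROPN"), ("Minister", "PROPN"), ("of", "ADP"),
    ("India", "PROPN"), ("met", "VERB"), ("Prime", "PROPN"), ("Minister", "PROPN"),
    ("Gandhi", "PROPN"),
]]
print(find_matches(sent, pattern))  # ['Prime Minister of India', 'Prime Minister Gandhi']
```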

Here are some sample sentences from the year 1989 that matched our pattern:

Now, since only 58 sentences out of 7150 total sentences gave an output that matched our pattern, I have summarised the relevant information from these outputs here:

- PM Indira Gandhi and PM Jawaharlal Nehru believed in working together in unity and with the principles of the UN

- PM Indira Gandhi believed in striking a balance between global production and consumption. She set out policies dedicated to national reconstruction and the consolidation of a secular and pluralistic political system

- PM Indira Gandhi emphasized that India does not intervene in the internal affairs of other countries. However, this stand on foreign policy took a U-turn under PM Rajiv Gandhi when he signed an agreement with the Sri Lankan Prime Minister which brought peace to Sri Lanka

- Both PM Indira Gandhi and PM Rajiv Gandhi believed in the link between economic development and protection of the environment

- PM Rajiv Gandhi advocated for the disarmament of nuclear weapons, a belief that was upheld by India over the years

- India, under different PMs, has always extended a hand of peace towards Pakistan over the years

- PM Narendra Modi believes that economic empowerment and upliftment of any nation involves the empowerment of its women

- PM Narendra Modi has launched several schemes that will help India achieve its Sustainable Development Goals (SDGs)

Using information extraction, we were able to isolate just the few sentences we needed while still capturing the most relevant information.

Information Extraction #2 – Finding Initiatives

The second interesting thing I noticed while going through the speeches is that there were a lot of initiatives, schemes, agreements, conferences, programs, etc. that were mentioned in the speeches. For example, ‘Paris agreement’, ‘Simla Agreement’, ‘Conference on Security Council’, ‘Conference of Non Aligned Countries’, ‘International Solar Alliance’, ‘Skill India initiative’, etc.

Extracting these would give us an idea of India’s priorities and whether there is a pattern in why they are mentioned so often in the speeches.

I am going to refer to all the schemes, initiatives, conferences, programmes, etc. keywords as initiatives.

To extract initiatives from the text, the first thing I am going to do is identify the sentences that talk about initiatives. For that, I will use a simple regex to select only those sentences that contain keywords such as ‘initiative’, ‘scheme’, or ‘agreement’. This narrows down the search for the initiative pattern we are looking for:
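A minimal sketch of the keyword filter follows. The keyword list and toy sentences are assumptions. The fourth sentence shows why this step alone is not enough: it mentions initiatives without naming one.

```python
import re

# Keyword list is an assumption based on the examples mentioned in the text
keywords = ["initiative", "scheme", "agreement", "conference", "programme", "program", "alliance"]
# Whole-word, case-insensitive match; the optional "s" also catches plurals
initiative_re = re.compile(r"\b(?:" + "|".join(keywords) + r")s?\b", re.IGNORECASE)

# Toy sentences standing in for the corpus
sentences = [
    "We launched the Skill India initiative last year.",
    "Terrorism remains a grave threat.",
    "India ratified the Paris Agreement.",
    "Such initiatives must be supported by all.",
]
candidates = [s for s in sentences if initiative_re.search(s)]
for s in candidates:
    print(s)
```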

Now, you might be thinking that our task is done here as we have already identified the sentences. We can easily look these up and determine what is being talked about in these sentences. But, think about it, not all of these will contain the initiative name. Some of these might be generally talking about initiatives but no initiative name might be present in them.

Therefore, we need to come up with a better solution that extracts only those sentences that contain the initiative names. For that, I am going to use the spaCy Matcher, once again, to come up with a pattern that matches these initiatives.

Have a look at the following example sentences and see if you can come up with a pattern to extract these initiatives:

As you might have noticed, the initiative name is a proper noun phrase that starts with a determiner and ends with a word such as ‘initiative’, ‘programme’, or ‘agreement’, with an occasional preposition in the middle. I also noticed that most of the initiative names were between two and five words long. Keeping this in mind, I came up with the following pattern to match the initiative names:
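The sketch below is one way to express such a pattern in plain Python over hand-tagged tokens: find an initiative keyword, walk left over proper nouns and prepositions, and require a determiner before the name and a length of two to five words. The keyword set, the length bounds, and the toy tags are my assumptions, not the exact pattern used in the article.

```python
from typing import NamedTuple

class Token(NamedTuple):
    text: str
    pos_: str

# Words assumed to end an initiative name
KEYWORDS = {"initiative", "programme", "agreement", "alliance", "conference", "scheme"}

def find_initiatives(tokens, min_len=2, max_len=5):
    """DET + proper nouns (occasionally with a preposition) + keyword."""
    names = []
    for end, tok in enumerate(tokens):
        if tok.text.lower() not in KEYWORDS:
            continue
        # Walk left over proper nouns and prepositions
        start = end
        while start > 0 and tokens[start - 1].pos_ in ("PROPN", "ADP"):
            start -= 1
        has_det = start > 0 and tokens[start - 1].pos_ == "DET"
        has_propn = any(t.pos_ == "PROPN" for t in tokens[start:end])
        length = end - start + 1  # words in the name, determiner excluded
        if has_det and has_propn and min_len <= length <= max_len:
            names.append(" ".join(t.text for t in tokens[start:end + 1]))
    return names

# Hand-tagged toy sentences (tags are assumptions)
sent = [Token(w, p) for w, p in [
    ("India", "PROPN"), ("supports", "VERB"), ("the", "DET"), ("International", "PROPN"),
    ("Solar", "PROPN"), ("Alliance", "PROPN"), ("and", "CCONJ"), ("the", "DET"),
    ("Skill", "PROPN"), ("India", "PROPN"), ("initiative", "NOUN"), (".", "PUNCT"),
]]
generic = [Token(w, p) for w, p in [
    ("We", "PRON"), ("welcome", "VERB"), ("this", "DET"), ("initiative", "NOUN"), (".", "PUNCT"),
]]
print(find_initiatives(sent))     # ['International Solar Alliance', 'Skill India initiative']
print(find_initiatives(generic))  # []
```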

We got 62 sentences that match our pattern – not bad. Have a look at the output from the year 2018:

But I must point out that there were many more initiatives in the speeches that did not match our pattern. For example, in 2018 there were other initiatives, such as “MUDRA”, “Ujjwala”, and “Paris Agreement”. So is there a better way to extract them?

Remember how we looked at dependencies at the beginning of the article? Well, we are going to use those to make some rules to match the initiative name. But before making a rule, you need to understand how a sentence is structured, only then can you come up with a general rule to extract relevant information.

To understand the structure of a sentence, I am going to print the dependency graph of a sample sentence as a tree, which gives a better intuition of the structure. Have a look below:

See how ‘Ujjwala’ is a child node of ‘programme’. Have a look at another example:

Notice how the ‘International Solar Alliance’ is structured.

You must have got the idea by now that the initiative names are usually children of nodes that contain words like ‘initiative’, ‘programme’, etc. Based on this knowledge we can develop our own rule.

The rule I am suggesting is pretty simple. Let me walk you through it:

- I am going to look for tokens in sentences that contain my initiative keywords

- Then I am going to look at its subtree (or words dependent on it) using token.subtree and extract only those nodes/words that are proper nouns, since they are most likely going to contain the name of the initiative
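Here is a minimal sketch of this rule over a hand-annotated parse (token index, text, POS, head index), so that it runs without a spaCy model. The helper `subtree` imitates spaCy's `Token.subtree` (the token plus all its descendants, in sentence order); the keyword set and the parse of the toy sentence are assumptions.

```python
from typing import NamedTuple

class Token(NamedTuple):
    i: int       # position in the sentence
    text: str
    pos_: str
    head: int    # index of the head token; the root is its own head

KEYWORDS = {"initiative", "programme", "scheme", "agreement", "alliance"}

def children(sent, tok):
    return [t for t in sent if t.head == tok.i and t.i != tok.i]

def subtree(sent, tok):
    """The token and all of its descendants in sentence order, like spaCy's Token.subtree."""
    nodes, stack = [], [tok]
    while stack:
        current = stack.pop()
        nodes.append(current)
        stack.extend(children(sent, current))
    return sorted(nodes, key=lambda t: t.i)

def initiative_names(sent):
    names = []
    for tok in sent:
        if tok.text.lower() in KEYWORDS:
            # Proper nouns under the keyword are the likely initiative name
            propns = [t.text for t in subtree(sent, tok) if t.pos_ == "PROPN"]
            if propns:
                names.append(" ".join(propns))
    return names

# Hand-annotated parse of "We launched the Ujjwala programme for poor women."
sent = [
    Token(0, "We", "PRON", 1),
    Token(1, "launched", "VERB", 1),
    Token(2, "the", "DET", 4),
    Token(3, "Ujjwala", "PROPN", 4),
    Token(4, "programme", "NOUN", 1),
    Token(5, "for", "ADP", 4),
    Token(6, "poor", "ADJ", 7),
    Token(7, "women", "NOUN", 5),
    Token(8, ".", "PUNCT", 1),
]
print(initiative_names(sent))  # ['Ujjwala']
```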

This time we matched 282 entries. That is a significant improvement over the previous result. Let’s go over the 2018 output and see if we did any better this time:

Out of 7000+ sentences, we were able to zero in on just 282 sentences that talked about initiatives. I looped over these outputs, and below is how I would summarise them:

- There are a lot of different international initiatives or schemes that India has mentioned in its speeches. This goes to show that India has been an active member of the international community working towards building a better future by solving problems through these initiatives

- Another point to highlight here is that the initiatives mentioned in the initial years have been more focused on those that concern the international community. However, during recent times, especially after 2014, a lot of domestic initiatives have been mentioned in the speeches like ‘Ayushman Bharat’, ‘Pradhan Mantri Jan Dhan Yojana’, etc. This shows a shift in how the country perceives its role in the community. By mentioning a lot of domestic initiatives, India has started to put more of the domestic work in front of the international community to witness and, probably, even follow in their footsteps

Having said that, the results were definitely not perfect. In some instances, unwanted words were extracted along with the initiative names. But the output derived from our own rule was clearly better than the one derived using spaCy’s pattern matcher. This shows the flexibility we gain by writing our own rules.

Finding Patterns in the Speeches

So far, we have extracted only the information that caught our eye when we skimmed over the data. But is there any other information hidden in our dataset? Surely there is, and we are going to explore it by making our own rules based on word dependencies, as we did in the previous section.

But before that, I want to point out two things.

First, when we are trying to understand the structure of the speeches, we cannot read every speech in full; that would take an eternity, and time is of the essence here. Instead, we are going to look at random sentences from the dataset and, based on their structure, try to come up with general rules to extract information.

But how do we test the validity of these rules? That’s where my second point comes in! Not all of the rules that we come up with will yield satisfactory results. So, to sift out the irrelevant rules, we can look at the percentage of sentences that matched our rule out of all sentences. This will give us a fair idea about how well the rule is performing, and whether, in fact, there is any such general structure in the corpus!

Another very important point that needs to be highlighted here is that any corpus is bound to contain long complex sentences. Working with these sentences to try and understand their structure will be a very difficult task. Therefore, we are going to look at smaller sentences. This will give us the opportunity to better understand their structure. So what’s the magic number? Let’s first look at how the sentence length varies in our corpus.

Looking at the histogram, we can see that most of the sentences range from 15-20 words. So I am going to work with sentences that contain no more than 15 words:

Now, let’s write a simple function that will generate random sentences from this dataframe:

Finally, let’s make a function to evaluate the result of our rule:
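The three helpers described above (length filter, random sampling, and rule evaluation) might look roughly like this. The names are hypothetical, word counts use a plain whitespace split, and a rule is assumed to be any function that returns an empty result when a sentence does not match. The toy rule and sentences are for illustration only.

```python
import random

def short_sentences(sentences, max_words=15):
    """Keep sentences with at most `max_words` whitespace-separated words."""
    return [s for s in sentences if len(s.split()) <= max_words]

def random_sentences(sentences, k, seed=None):
    """Draw up to k distinct sentences at random (a seed makes the draw repeatable)."""
    rng = random.Random(seed)
    return rng.sample(sentences, min(k, len(sentences)))

def match_rate(sentences, rule):
    """Percentage of sentences for which `rule` returns a non-empty result."""
    if not sentences:
        return 0.0
    matched = sum(1 for s in sentences if rule(s))
    return 100 * matched / len(sentences)

# Toy rule and sentences for illustration
def against_rule(sentence):
    return [w for w in sentence.split() if w.lower() == "against"]

sentences = [
    "We stand against terrorism.",
    "India supports the efforts of the United Nations.",
    "The fight against poverty continues.",
    "Peace is possible.",
]
short = short_sentences(sentences)
print(random_sentences(short, 2, seed=0))
print(f"{match_rate(short, against_rule):.1f}% of sentences matched")
```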

Right, let’s get down to the business of making some rules!

Information Extraction #3 – Rule on Noun-Verb-Noun Phrases

When you look at a sentence, it generally contains a subject (noun), action (verb), and an object (noun). The rest of the words are just there to give us additional information about the entities. Therefore, we can leverage this basic structure to extract the main bits of information from the sentence. Take for example the following sentence:

What will be extracted from this sample sentence based on the rule is – “countries face threats”. This should give us a fair idea about what the sentence is trying to say.
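A minimal sketch of the noun-verb-noun rule follows. It assumes spaCy-style labels (nsubj/nsubjpass for subjects, dobj for direct objects) on a hand-annotated toy sentence, “Many countries face serious threats.”, chosen so that the output matches the example above. It is one reasonable reading of the rule, not the article's exact code.

```python
from typing import NamedTuple

class Token(NamedTuple):
    i: int
    text: str
    pos_: str
    dep_: str
    head: int  # index of the head token; the root is its own head

def noun_verb_noun(sent):
    """For each verb, pair a noun/pronoun subject on its left with a noun object on its right."""
    phrases = []
    for verb in sent:
        if verb.pos_ != "VERB":
            continue
        kids = [t for t in sent if t.head == verb.i and t.i != verb.i]
        subjects = [t for t in kids if t.i < verb.i
                    and t.dep_ in ("nsubj", "nsubjpass")
                    and t.pos_ in ("NOUN", "PROPN", "PRON")]
        objects = [t for t in kids if t.i > verb.i
                   and t.dep_ == "dobj" and t.pos_ in ("NOUN", "PROPN")]
        for subj in subjects:
            for obj in objects:
                phrases.append(f"{subj.text} {verb.text} {obj.text}")
    return phrases

# Hand-annotated parse of the toy sentence "Many countries face serious threats."
sent = [
    Token(0, "Many", "ADJ", "amod", 1),
    Token(1, "countries", "NOUN", "nsubj", 2),
    Token(2, "face", "VERB", "ROOT", 2),
    Token(3, "serious", "ADJ", "amod", 4),
    Token(4, "threats", "NOUN", "dobj", 2),
    Token(5, ".", "PUNCT", "punct", 2),
]
print(noun_verb_noun(sent))  # ['countries face threats']
```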

So let’s look at how this rule fares when we run it against the short sentences we are working with:

More than 20% of the short sentences match our rule, so we can check it against all the sentences in the corpus:

We get more than a 30% match for our rule, which means 2226 out of 7150 sentences matched this pattern. Let’s form a new dataframe containing only the sentences that have an output and then separate the verb from the nouns:

Let’s take a look at the top 10 most frequent verbs used in the sentences:
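Once each phrase is in subject-verb-object form, separating the verb and counting verbs takes a few lines with `collections.Counter`. The phrases below are toy stand-ins, not corpus results. Note that counting raw text keeps “face” and “faces” apart; with spaCy you could count `token.lemma_` instead to merge them.

```python
from collections import Counter

# Toy outputs of the noun-verb-noun rule (hypothetical, not corpus results)
phrases = [
    "countries face threats",
    "India supports efforts",
    "we welcome initiative",
    "India supports aspirations",
    "world faces conflicts",
    "India supports struggles",
]

# Each phrase is "subject verb object", so the middle word is the verb
rows = [dict(zip(("subject", "verb", "object"), p.split())) for p in phrases]

top_verbs = Counter(row["verb"] for row in rows).most_common(10)
print(top_verbs[0])  # ('supports', 3)

# Drill into one verb, e.g. what India "supports"
supported = [row["object"] for row in rows if row["verb"] == "supports"]
print(supported)  # ['efforts', 'aspirations', 'struggles']
```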

Now, we can look at specific verbs to see what kind of information is present. For example, ‘welcome’ and ‘support’ could tell us what India encourages. And verbs like ‘face’ could maybe tell us what kind of problems we face in the real world.

By looking at the output, we can try to make out the context of each sentence. For example, we can see that India supports ‘efforts’, ‘viewpoints’, ‘initiatives’, ‘struggles’, ‘desires’, ‘aspirations’, etc., while India believes that the world faces ‘threat’, ‘conflicts’, ‘colonialism’, ‘pandemics’, etc.

We can select sentences to explore in depth by looking at the output. This will save us a lot of time compared with going over the entire text.

Information Extraction #4 – Rule on Adjective Noun Structure

In the previous rule that we made for information extraction in NLP, we extracted the noun subjects and objects, but the information did not feel complete. This is because many nouns have an adjective or a word with a compound dependency that augments the meaning of a noun. Extracting these along with the noun will give us better information about the subject and the object.

Have a look at the sample sentence below:

What we are looking to achieve here is – “better life”.

The code for this rule is simple, but let me walk you through how it works:

- We look for tokens that have a Noun POS tag and have subject or object dependency

- Then we look at the child nodes of these tokens and append it to the phrase only if it modifies the noun
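The two steps above could be sketched like this. The set of subject/object labels (including pobj) and the modifier labels amod and compound are my assumptions about which dependencies to use; the parse of “People expect a better life.” is hand-annotated.

```python
from typing import NamedTuple

class Token(NamedTuple):
    i: int
    text: str
    pos_: str
    dep_: str
    head: int  # index of the head token; the root is its own head

SUBJ_OBJ = ("nsubj", "nsubjpass", "dobj", "pobj")  # assumed subject/object labels
MODIFIERS = ("amod", "compound")                     # assumed modifier labels

def adjective_noun(sent):
    phrases = []
    for noun in sent:
        if noun.pos_ != "NOUN" or noun.dep_ not in SUBJ_OBJ:
            continue
        # Child tokens that modify the noun, e.g. the adjective "better"
        mods = [t for t in sent if t.head == noun.i and t.dep_ in MODIFIERS]
        if mods:
            words = sorted(mods + [noun], key=lambda t: t.i)  # keep sentence order
            phrases.append(" ".join(t.text for t in words))
    return phrases

# Hand-annotated parse of "People expect a better life."
sent = [
    Token(0, "People", "NOUN", "nsubj", 1),
    Token(1, "expect", "VERB", "ROOT", 1),
    Token(2, "a", "DET", "det", 4),
    Token(3, "better", "ADJ", "amod", 4),
    Token(4, "life", "NOUN", "dobj", 1),
    Token(5, ".", "PUNCT", "punct", 1),
]
print(adjective_noun(sent))  # ['better life']
```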

51% of the short sentences match this rule. We can now try to check it on the entire corpus:

On the entire corpus of 7150, 76% or 5117 sentences matched our pattern rule, since most of them are bound to contain the noun and its modifier.

Now we can combine this rule along with the rule we created previously. This will give us a better perspective of what information is present in a sentence:

The combined rule produces an output for 31% of the sentences, some of which are displayed below:

Here, we end up with phrases like “we take a fresh pledge”, “we have a sizeable increase”, “people expecting better life”, etc. which included the nouns and their modifiers. This gives us better information about what is being extracted here.

As you can see, we not only came up with a new rule to understand the structure of the sentences but also combined two rules to get better information from the extracted text.

Information Extraction #5 – Rule on Prepositions

Thank god for prepositions! They tell us where or when something is in a relationship with something else. For example, in “The people of India believe in the principles of the United Nations”, the prepositions “of” and “in” link the nouns together. Clearly, extracting phrases that include prepositions will give us a lot of information from a sentence. This is exactly what we are going to achieve with this rule.

Let’s try to understand how this rule works by going over it on a sample sentence – “India has once again shown faith in democracy.”

- We iterate over all the tokens looking for prepositions. For example, the word “in” in this sentence

- On encountering a preposition, we check whether its head word is a noun. For example, the word “faith” in this sentence

- Then we look at the child tokens of the preposition that fall on its right side. For example, the word “democracy”

This should finally extract the phrase “faith in democracy” from the sentence. Have a look at the dependency graph of the sentence below:
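The three steps can be sketched as follows, using a hand-annotated parse of the sample sentence. The labels are what I would expect from spaCy's English models (ADP for the preposition, pobj for its object), but they are typed in by hand here, so treat them as an assumption.

```python
from typing import NamedTuple

class Token(NamedTuple):
    i: int
    text: str
    pos_: str
    dep_: str
    head: int  # index of the head token; the root is its own head

def preposition_phrases(sent):
    phrases = []
    for prep in sent:
        # Step 1: find prepositions
        if prep.pos_ != "ADP":
            continue
        # Step 2: the preposition's head word must be a noun
        head = sent[prep.head]
        if head.pos_ != "NOUN":
            continue
        # Step 3: child tokens of the preposition on its right side
        for obj in (t for t in sent if t.head == prep.i and t.i > prep.i):
            phrases.append(f"{head.text} {prep.text} {obj.text}")
    return phrases

# Hand-annotated parse of "India has once again shown faith in democracy."
sent = [
    Token(0, "India", "PROPN", "nsubj", 4),
    Token(1, "has", "AUX", "aux", 4),
    Token(2, "once", "ADV", "advmod", 3),
    Token(3, "again", "ADV", "advmod", 4),
    Token(4, "shown", "VERB", "ROOT", 4),
    Token(5, "faith", "NOUN", "dobj", 4),
    Token(6, "in", "ADP", "prep", 5),
    Token(7, "democracy", "NOUN", "pobj", 6),
    Token(8, ".", "PUNCT", "punct", 4),
]
print(preposition_phrases(sent))  # ['faith in democracy']
```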

Now let’s apply this rule to our short sentences:

About 48% of the sentences follow this rule:

We can test this pattern on the entire corpus since we have a good amount of sentences matching the rule:

74% of the total sentences match this pattern. Let’s separate the preposition from the nouns and see what kind of information we were able to extract:

The following dataframe shows the result of the rule on the entire corpus, but the preposition and nouns are separated for better analysis:

We can look at the top 10 most frequent prepositions in the entire corpus:

We can then look at specific prepositions to explore the sentences in detail. For example, the preposition ‘against’ can give us information about what India does not support:
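Splitting the extracted phrases into head noun, preposition, and object, then counting and filtering, is straightforward with `collections.Counter`. The phrase list reuses phrases quoted in this section; it is a small illustrative sample, not the full corpus output.

```python
from collections import Counter

# Phrases quoted in this section; an illustrative sample, not the full corpus output
phrases = [
    "efforts against proliferation",
    "fight against terrorism",
    "discrimination against women",
    "struggle against colonialism",
    "faith in democracy",
    "people of India",
]

# Separate "noun preposition noun" into columns; maxsplit keeps multi-word objects intact
rows = [dict(zip(("noun1", "prep", "noun2"), p.split(maxsplit=2))) for p in phrases]

top_preps = Counter(row["prep"] for row in rows).most_common(10)
print(top_preps[0])  # ('against', 4)

# Phrases built around "against"
against = [(row["noun1"], row["noun2"]) for row in rows if row["prep"] == "against"]
print(against)
```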

Skimming over the nouns, we find some important phrases, such as:

- efforts against proliferation

- fight against terrorism, action against terrorism, the war against terrorism

- discrimination against women

- war against poverty

- struggle against colonialism

… and so on. This should give us a fair idea of which sentences we want to explore in detail. For example, the sentence on efforts against proliferation talks about efforts towards nuclear disarmament, and the sentence on the struggle against colonialism talks about the historical links between India and Africa born out of their common struggle against colonialism.

As you can see, prepositions provide vital connections between two nouns, which makes them valuable for information extraction. With some domain expertise, sifting through extensive data becomes manageable and helps identify India’s stances and actions, among other insights.

But the output seems a bit incomplete. For example, in the sentence efforts against proliferation, what kind of proliferation are we talking about? Certainly, we need to include the modifiers attached to the nouns in the phrase as we did in Information Extraction #4. This would definitely increase the comprehensibility of the extracted phrase.

This rule can easily be modified to include the modifiers. I have created a new function that extracts the modifiers of a noun, just as we did in Information Extraction #4:

All we have to do is call this function whenever we encounter a noun in our phrase:
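A sketch of the combined rule: the preposition rule described in this section, with a helper that prepends amod and compound children to each noun. The parse of the toy sentence “There are impediments in economic development.” is hand-annotated, and the helper name `with_modifiers` is hypothetical.

```python
from typing import NamedTuple

class Token(NamedTuple):
    i: int
    text: str
    pos_: str
    dep_: str
    head: int  # index of the head token; the root is its own head

def with_modifiers(sent, noun):
    """Hypothetical helper: the noun plus its amod/compound children, in sentence order."""
    mods = [t for t in sent if t.head == noun.i and t.dep_ in ("amod", "compound")]
    return " ".join(t.text for t in sorted(mods + [noun], key=lambda t: t.i))

def preposition_phrases(sent, expand=True):
    phrases = []
    for prep in sent:
        if prep.pos_ != "ADP":
            continue
        head = sent[prep.head]
        if head.pos_ != "NOUN":
            continue
        for obj in (t for t in sent if t.head == prep.i and t.i > prep.i):
            left = with_modifiers(sent, head) if expand else head.text
            right = with_modifiers(sent, obj) if expand else obj.text
            phrases.append(f"{left} {prep.text} {right}")
    return phrases

# Hand-annotated parse of "There are impediments in economic development."
sent = [
    Token(0, "There", "PRON", "expl", 1),
    Token(1, "are", "AUX", "ROOT", 1),
    Token(2, "impediments", "NOUN", "attr", 1),
    Token(3, "in", "ADP", "prep", 2),
    Token(4, "economic", "ADJ", "amod", 5),
    Token(5, "development", "NOUN", "pobj", 3),
    Token(6, ".", "PUNCT", "punct", 1),
]
print(preposition_phrases(sent, expand=False))  # ['impediments in development']
print(preposition_phrases(sent))                # ['impediments in economic development']
```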

This definitely has more information than before. For example, ‘impediments in economic development’ instead of ‘impediments in development’ and ‘greater transgressor of human rights’ rather than ‘transgressor of rights’.

Once again, combining rules has given us more power and flexibility to explore only those sentences in detail that have a meaningful extracted phrase.

Code

You can find the complete code file here.

Conclusion

The field of natural language processing (NLP) and its applications such as named entity recognition, semantic annotation, and sentiment analysis have revolutionized our ability to extract meaning from unstructured data. Through APIs and advanced algorithms, we can now classify and extract relations from vast amounts of text, transforming raw information into actionable insights. As we continue to innovate in this space, the potential for NLP to enhance decision-making and drive efficiencies across industries remains profound.

Going forward, you can explore the following courses to expand your knowledge in the field of NLP:

- Natural Language Processing (NLP) Using Python

- A Comprehensive Learning Path to Understand and Master NLP

Key Takeaways

- Understanding the trade-offs between time-consuming NLP tasks and computational efficiency.

- Importance of preprocessing steps like tokenization and normalization in NLP techniques.

- Application of advanced NLP tasks like sentiment analysis and summarization in healthcare.

- Integration of part-of-speech tagging with other NLP techniques for comprehensive analysis of healthcare data.

- Techniques for classifying medical texts based on their content and context.

- Challenges specific to using NLP in healthcare, such as data privacy and security concerns.

Frequently Asked Questions

A. Information extraction in NLP refers to automatically extracting structured information from unstructured textual data. It involves identifying and extracting specific entities, relationships, and attributes to uncover valuable insights or knowledge hidden within the text.

A. NLP, or Natural Language Processing, is utilized in information extraction to analyze and understand human language text. It helps extract meaningful information from unstructured text data using techniques like parsing, entity recognition, and relationship extraction.

A. Information extraction refers to the process of automatically extracting structured information from unstructured or semi-structured text data. It involves identifying and extracting specific pieces of information, such as entities, relationships, and events, to make them usable for further analysis or applications.

A. Information extraction aims to transform unstructured or semi-structured data into structured and organized information that can be easily analyzed, understood, and used for various purposes such as decision-making, knowledge discovery, or data integration.

There are several approaches to information extraction, including:

- Rule-based systems: Using predefined rules and patterns to extract information from text.

- Statistical methods: Employing statistical models and machine learning algorithms to automatically learn patterns and extract information.

- Hybrid approaches: Combining rule-based and statistical methods to improve the accuracy and flexibility of information extraction systems.

- Deep learning techniques: Utilizing neural networks and deep learning architectures to learn complex patterns and relationships for information extraction tasks.
