Introduction

Prompt engineering has become pivotal in leveraging large language models (LLMs) for diverse applications. Basic prompt engineering covers the fundamental techniques, but more sophisticated methods let us build highly effective, context-aware, and robust LLM applications. This article delves into several advanced prompt engineering techniques using LangChain, and I have added code examples and practical insights for developers.

In advanced prompt engineering, we craft complex prompts and use LangChain’s capabilities to build intelligent, context-aware applications. This includes dynamic prompting, context-aware prompts, meta-prompting, and using memory to maintain state across interactions. These techniques can significantly enhance the performance and reliability of LLM-powered applications.

Learning Objectives

- Learn to create multi-step prompts that guide the model through complex reasoning and workflows.

- Explore advanced prompt engineering techniques to adjust prompts based on real-time context and user interactions for adaptive applications.

- Develop prompts that evolve with the conversation or task to maintain relevance and coherence.

- Generate and refine prompts autonomously using the model’s internal state and feedback mechanisms.

- Implement memory mechanisms to maintain context and information across interactions for coherent applications.

- Use advanced prompt engineering in real-world applications like education, support, creative writing, and research.

This article was published as a part of the Data Science Blogathon.


Setting Up LangChain

Before moving on, make sure LangChain is set up correctly. A robust setup and familiarity with the framework are crucial for advanced applications. The steps below assume a working Python environment.

Installation

First, install LangChain and its OpenAI integration package using pip:

pip install langchain langchain-openai

Basic setup

from langchain_openai import OpenAI

# Initialize an OpenAI completion model through LangChain's OpenAI integration.
# The key can also be supplied through the OPENAI_API_KEY environment variable.
model = OpenAI(api_key="your_openai_api_key")

Advanced Prompt Structuring

Advanced prompt structuring goes beyond simple instructions or contextual prompts. It involves creating multi-step prompts that guide the model through a sequence of logical steps. This technique is essential for tasks that require detailed explanations, step-by-step reasoning, or complex workflows. By breaking the task into smaller, manageable components, advanced prompt structuring can help the model generate coherent, accurate, and contextually relevant responses.

Applications of Advanced Prompt Structuring

- Educational Tools: Advanced prompt engineering tools can create detailed educational content, such as step-by-step tutorials, comprehensive explanations of complex topics, and interactive learning modules.

- Technical Support: Structured prompts can help provide detailed technical support, troubleshooting steps, and diagnostic procedures for various systems and applications.

- Creative Writing: In creative domains, advanced prompt engineering can help generate intricate story plots, character developments, and thematic explorations by guiding the model through a series of narrative-building steps.

- Research Assistance: For research purposes, structured prompts can assist in literature reviews, data analysis, and the synthesis of information from multiple sources in a thorough and systematic way.

Key Components of Advanced Prompt Structuring

Here are the key components of advanced prompt structuring:

- Step-by-Step Instructions: By providing the model with a clear sequence of steps to follow, we can significantly improve the quality of its output. This is particularly useful for problem-solving, procedural explanations, and detailed descriptions. Each step should build logically on the previous one, guiding the model through a structured thought process.

- Intermediate Goals: To help ensure the model stays on track, we can set intermediate goals or checkpoints within the prompt. These goals act as mini-prompts within the main prompt, allowing the model to focus on one aspect of the task at a time. This approach can be particularly effective in tasks that involve multiple stages or require the integration of various pieces of information.

- Contextual Hints and Clues: Incorporating contextual hints and clues within the prompt can help the model understand the broader context of the task. Examples include providing background information, defining key terms, or outlining the expected format of the response. Contextual clues ensure that the model’s output is aligned with the user’s expectations and the specific requirements of the task.

- Role Specification: Defining a specific role for the model can enhance its performance. For instance, asking the model to act as an expert in a particular field (e.g., a mathematician, a historian, a medical doctor) can help tailor its responses to the expected level of expertise and style. Role specification can improve the model’s ability to adopt different personas and adapt its language accordingly.

- Iterative Refinement: Advanced prompt structuring often involves an iterative process where the initial prompt is refined based on the model’s responses. This feedback loop allows developers to fine-tune the prompt, making adjustments to improve clarity, coherence, and accuracy. Iterative refinement is crucial for optimizing complex prompts and achieving the desired output.
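The components above can be combined in a single prompt builder. The sketch below is plain Python, not a LangChain API: the `StructuredPrompt` class and its field names are hypothetical, and the rendered string would simply be passed to `model.invoke(...)` from the setup section. Iterative refinement then amounts to editing a field and re-rendering.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredPrompt:
    """Hypothetical container for the components of a structured prompt."""
    role: str                                              # role specification
    task: str                                              # the problem to solve
    context: list[str] = field(default_factory=list)       # contextual hints and clues
    steps: list[str] = field(default_factory=list)         # step-by-step instructions
    checkpoints: list[str] = field(default_factory=list)   # intermediate goals
    output_format: str = ""                                # expected response format

    def render(self) -> str:
        lines = [f"You are {self.role}.", "", f"Task: {self.task}"]
        if self.context:
            lines += ["", "Context:"] + [f"- {hint}" for hint in self.context]
        if self.steps:
            lines += ["", "Follow these steps in order:"]
            lines += [f"Step {i}: {step}" for i, step in enumerate(self.steps, start=1)]
        if self.checkpoints:
            lines += ["", "Before moving on, confirm each checkpoint:"]
            lines += [f"- {goal}" for goal in self.checkpoints]
        if self.output_format:
            lines += ["", f"Format your answer as: {self.output_format}"]
        return "\n".join(lines)


prompt = StructuredPrompt(
    role="an expert mathematician",
    task="A train travels 180 km in 3 hours. What is its average speed?",
    context=["Average speed = total distance / total time."],
    steps=["Identify the formula.", "Substitute the values.", "Compute the result with units."],
    checkpoints=["The units in the final answer are km/h."],
    output_format="the worked steps, then a final line starting with 'Answer:'",
).render()
print(prompt)
```

Keeping each component in its own field makes it easy to change one aspect (for example, the role or the checkpoints) without rewriting the whole prompt.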

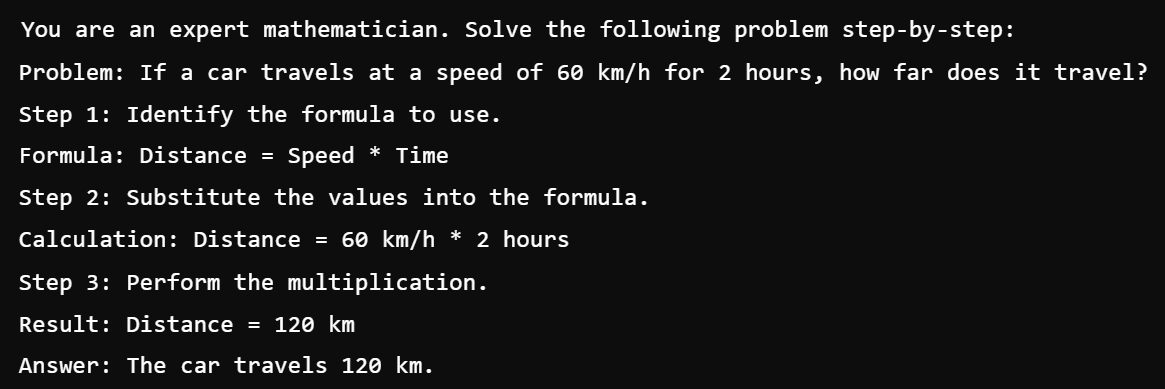

Example: Multi-Step Reasoning

prompt = """

You are an expert mathematician. Solve the following problem step-by-step:

Problem: If a car travels at a speed of 60 km/h for 2 hours, how far does it travel?

Step 1: Identify the formula to use.

Formula: Distance = Speed * Time

Step 2: Substitute the values into the formula.

Calculation: Distance = 60 km/h * 2 hours

Step 3: Perform the multiplication.

Result: Distance = 120 km

Answer: The car travels 120 km.

"""

response = model.generate(prompt)

print(response)
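The prompt above already contains the complete solution, so the model has little left to reason about. One common variation, sketched here under the assumption that you want the model to do the reasoning itself, reuses the solved problem as a worked (few-shot) example and leaves a new problem open at "Step 1:". The function name `build_step_prompt` and the cyclist problem are illustrative.

```python
# The solved problem from the example above, reused as a worked example
WORKED_EXAMPLE = """Problem: If a car travels at a speed of 60 km/h for 2 hours, how far does it travel?
Step 1: Identify the formula to use.
Formula: Distance = Speed * Time
Step 2: Substitute the values into the formula.
Calculation: Distance = 60 km/h * 2 hours
Step 3: Perform the multiplication.
Result: Distance = 120 km
Answer: The car travels 120 km."""


def build_step_prompt(problem: str) -> str:
    """Show the worked example, then leave the new problem open at 'Step 1:'."""
    return (
        "You are an expert mathematician. Solve problems step-by-step, "
        "following the format of the worked example.\n\n"
        f"Worked example:\n{WORKED_EXAMPLE}\n\n"
        f"Problem: {problem}\n"
        "Step 1:"
    )


prompt = build_step_prompt("A cyclist rides at 15 km/h for 4 hours. How far does the cyclist travel?")
print(prompt)
# The model's completion continues from "Step 1:", e.g. via model.invoke(prompt).
```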

Dynamic Prompting

In dynamic prompting, we adjust the prompt based on the context or previous interactions, enabling more adaptive and responsive interactions with the language model. Unlike static prompts, which remain fixed throughout the interaction, dynamic prompts change as the conversation develops or as the requirements of the task change. This flexibility allows developers to create more engaging, contextually relevant, and personalized experiences for users interacting with language models.

Applications of Dynamic Prompting

- Conversational Agents: Dynamic prompting is essential for building conversational agents that can engage in natural, contextually relevant dialogues with users, providing personalized assistance and information retrieval.

- Interactive Learning Environments: In education, dynamic prompting can enhance interactive learning environments by adapting the instructional content to the learner's progress and preferences and by providing tailored feedback and support.

- Information Retrieval Systems: Dynamic prompting can improve the effectiveness of information retrieval systems by dynamically adjusting and updating the search queries based on the user’s context and preferences, leading to more accurate and relevant search results.

- Personalized Recommendations: Dynamic prompting can power personalized recommendation systems by dynamically generating prompts based on user preferences and browsing history. This system suggests relevant content and products to users based on their interests and past interactions.

Techniques for Dynamic Prompting

- Contextual Query Expansion: This involves expanding the initial prompt with additional context gathered from the ongoing conversation or the user’s input. This expanded prompt gives the model a richer understanding of the current context, enabling more informed and relevant responses.

- User Intent Recognition: By analyzing the user’s intent and extracting the key information from their queries, developers can dynamically generate prompts that address the specific needs and requirements expressed by the user. This will ensure the model’s responses are tailored to the user’s intentions, leading to more satisfying interactions.

- Adaptive Prompt Generation: Dynamic prompting can also generate prompts on the fly based on the model’s internal state and the current conversation history. These dynamically generated prompts can guide the model towards generating coherent responses that align with the ongoing dialogue and the user’s expectations.

- Prompt Refinement through Feedback: By adding feedback mechanisms into the prompting process, developers can refine the prompt based on the model’s responses and the user’s feedback. This iterative feedback loop enables continuous improvement and adaptation, leading to more accurate and effective interactions over time.
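A minimal sketch of two of these techniques, user intent recognition and contextual query expansion, in plain Python. The keyword sets, templates, and function names are hypothetical; a real system might classify intent with a trained model or with the LLM itself, and could use LangChain's `PromptTemplate` instead of f-strings.

```python
import re

# Hypothetical keyword sets; a real system might use a classifier or the LLM itself.
INTENT_KEYWORDS = {
    "install": {"install", "setup", "pip"},
    "troubleshoot": {"error", "fails", "broken", "exception"},
}

# One instruction template per recognized intent
TEMPLATES = {
    "install": "You are a setup assistant. Give exact installation commands.",
    "troubleshoot": "You are a support engineer. Ask for the error message if it is missing, then diagnose step by step.",
    "general": "You are a knowledgeable assistant.",
}


def detect_intent(query: str) -> str:
    """User intent recognition: match whole words against each keyword set."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return "general"


def build_dynamic_prompt(query: str, history: list[str], max_turns: int = 3) -> str:
    """Pick a template by intent and expand the query with recent conversation turns."""
    template = TEMPLATES[detect_intent(query)]
    recent = history[-max_turns:]  # contextual query expansion: only the latest turns
    context = "\n".join(recent) if recent else "(no previous messages)"
    return f"{template}\n\nRecent conversation:\n{context}\n\nUser question: {query}\nAnswer:"


history = ["User: I'm on Python 3.11.", "AI: Thanks, noted."]
prompt = build_dynamic_prompt("How do I install LangChain?", history)
print(prompt)
```

The same query produces a different prompt depending on the detected intent and on what was said earlier, which is the core idea of dynamic prompting.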

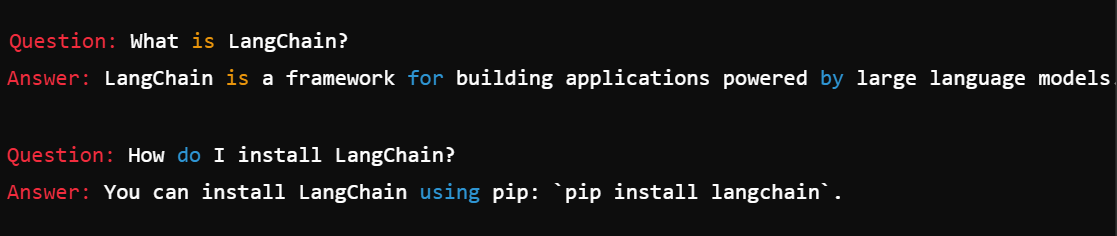

Example: Dynamic FAQ Generator

faqs = {
    "What is LangChain?": "LangChain is a framework for building applications powered by large language models.",
    "How do I install LangChain?": "You can install LangChain using pip: `pip install langchain`.",
}

def generate_prompt(question):
    # Build a fresh prompt for each incoming question
    return f"""
You are a knowledgeable assistant. Answer the following question:

Question: {question}
"""

for question in faqs:
    prompt = generate_prompt(question)
    response = model.invoke(prompt)
    print(f"Question: {question}\nAnswer: {response}\n")

Context-Aware Prompts

Context-aware prompts are a sophisticated approach to engaging with language models: the prompt adjusts dynamically to the context of the conversation or the task at hand. Unlike static prompts, which remain fixed throughout the interaction, context-aware prompts evolve and adapt in real time, enabling more nuanced and relevant interactions with the model. This technique uses the contextual information gathered during the interaction to guide the model's responses, helping it generate output that is coherent, accurate, and aligned with the user's expectations.

Applications of Context-Aware Prompts

- Conversational Assistants: Context-aware prompts are essential for building conversational assistants that engage in natural, contextually relevant dialogues with users, providing personalized assistance and information retrieval.

- Task-Oriented Dialog Systems: In task-oriented dialog systems, context-aware prompts enable the model to understand and respond to user queries in the context of the specific task or domain and guide the conversation toward achieving the desired goal.

- Interactive Storytelling: Context-aware prompts can enhance interactive storytelling experiences by adapting the narrative based on the user’s choices and actions, ensuring a personalized and immersive storytelling experience.

- Customer Support Systems: Context-aware prompts can improve the effectiveness of customer support systems by tailoring the responses to the user’s query and historical interactions, providing relevant and helpful assistance.

Techniques for Context-Aware Prompts

- Contextual Information Integration: Context-aware prompts take contextual information from the ongoing conversation, including previous messages, user intent, and relevant external data sources. This contextual information enriches the prompt, giving the model a deeper understanding of the conversation’s context and enabling more informed responses.

- Contextual Prompt Expansion: Context-aware prompts dynamically expand and adapt based on the evolving conversation, adding new information and adjusting the prompt’s structure as needed. This flexibility allows the prompt to remain relevant and responsive throughout the interaction and guides the model toward generating coherent and contextually appropriate responses.

- Contextual Prompt Refinement: As the conversation progresses, context-aware prompts may undergo iterative refinement based on feedback from the model’s responses and the user’s input. This iterative process allows developers to continuously adjust and optimize the prompt to ensure that it accurately captures the evolving context of the conversation.

- Multi-Turn Context Retention: Context-aware prompts maintain a memory of previous interactions and add this historical context to the prompt. This lets the model generate responses that stay coherent with the ongoing dialogue, rather than treating each message in isolation.
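Multi-turn context retention has to respect the model's context window. The sketch below keeps the most recent turns that fit within a size budget; it counts characters as a rough, assumed stand-in for tokens, and the function name is hypothetical. LangChain's `ConversationBufferWindowMemory` offers a related variant that keeps the last `k` exchanges.

```python
def build_context_prompt(turns: list[tuple[str, str]], new_message: str,
                         max_chars: int = 500) -> str:
    """Multi-turn context retention under a size budget (characters stand in for tokens)."""
    kept: list[str] = []
    used = 0
    for speaker, text in reversed(turns):      # walk from the newest turn backwards
        line = f"{speaker}: {text}"
        if used + len(line) > max_chars:
            break                              # older turns no longer fit and are dropped
        kept.append(line)
        used += len(line)
    kept.reverse()                             # restore chronological order
    history = "\n".join(kept)
    return ("Continue the conversation based on the following context:\n\n"
            f"{history}\nUser: {new_message}\nAI:")


turns = [
    ("User", "Hi, who won the 2020 US presidential election?"),
    ("AI", "Joe Biden won the 2020 US presidential election."),
]
prompt = build_context_prompt(turns, "What were his major campaign promises?")
short = build_context_prompt(turns, "And who was his running mate?", max_chars=60)
print(prompt)
```

With the default budget both turns are kept; with `max_chars=60` only the newest turn fits, so the oldest context is dropped first.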

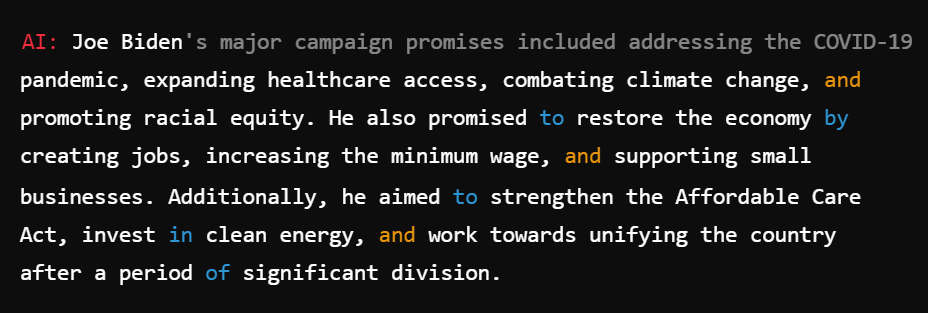

Example: Contextual Conversation

conversation = [
    "User: Hi, who won the 2020 US presidential election?",
    "AI: Joe Biden won the 2020 US presidential election.",
    "User: What were his major campaign promises?",
]

# Join the previous turns into a single context block
context = "\n".join(conversation)

prompt = f"""
Continue the conversation based on the following context:

{context}

AI:
"""

response = model.invoke(prompt)
print(response)

Meta-Prompting

Meta-prompting enhances the sophistication and adaptability of language model applications. Unlike conventional prompts, which give the model explicit instructions or queries, meta-prompts operate at a higher level of abstraction: they guide the model in generating or refining prompts itself. This meta-level guidance lets the prompting strategy adjust dynamically to the task requirements, user interactions, and internal state, resulting in more agile and responsive conversations.

Applications of Meta-Prompting

- Adaptive Prompt Engineering: Meta-prompting enables the model to adjust its prompting strategy dynamically based on the task requirements and the user’s input, leading to more adaptive and contextually relevant interactions.

- Creative Prompt Generation: Meta-prompting lets the model explore the space of possible prompts and generate diverse, novel prompt variants, which is useful for brainstorming and other creative tasks.

- Task-Specific Prompt Generation: Meta-prompting enables the generation of prompts tailored to specific tasks or domains, ensuring that the model’s responses align with the user’s intentions and the task’s requirements.

- Autonomous Prompt Refinement: Meta-prompting allows the model to refine prompts autonomously based on feedback and experience. This helps the model continuously improve and refine its prompting strategy.


Techniques for Meta-Prompting

- Prompt Generation by Example: Meta-prompting can involve generating prompts based on examples provided by the user or drawn from the task context. By analyzing these examples, the model identifies patterns and structures that inform the generation of new prompts tailored to the task's specific requirements.

- Prompt Refinement through Feedback: Meta-prompting allows the model to refine prompts iteratively based on feedback from its own responses and the user’s input. This feedback loop allows the model to learn from its mistakes and adjust its prompting strategy to improve the quality of its output over time.

- Prompt Generation from Task Descriptions: Meta-prompting can use natural language understanding to extract key information from task descriptions or user queries and use this information to generate prompts tailored to the task at hand. This ensures that the generated prompts are aligned with the user's intentions and the specific requirements of the task.

- Prompt Generation based on Model State: Meta-prompting can generate prompts that take into account the model's current state, including its knowledge base, memory, and inference capabilities. Building on the model's existing knowledge and reasoning abilities keeps the generated prompts contextually relevant and aligned with its current state of understanding.
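Prompt refinement through feedback can be sketched as a loop: a meta-prompt asks the model for a prompt, a check inspects the candidate, and any failures are fed back into the next meta-prompt. In this sketch the checks are simple required phrases and `fake_model` is an offline stand-in so the loop runs without an API key; both are assumptions for illustration. In practice you might pass `model.invoke` as `generate` and score prompts by the quality of the outputs they produce.

```python
from typing import Callable


def refine_prompt(task: str, generate: Callable[[str], str],
                  checks: dict[str, str], max_rounds: int = 3) -> str:
    """Generate a prompt with a meta-prompt, check it, and feed failures back in."""
    feedback: list[str] = []
    candidate = ""
    for _ in range(max_rounds):
        meta_prompt = ("You are an expert in prompt engineering. "
                       f"Write a prompt for this task:\n{task}\n")
        if feedback:
            # Refinement through feedback: tell the model what was missing last time
            meta_prompt += ("The previous prompt had these problems; fix them:\n"
                            + "\n".join(f"- {item}" for item in feedback) + "\n")
        meta_prompt += "Prompt:"
        candidate = generate(meta_prompt)
        # Each check maps a required phrase to the feedback given when it is missing
        feedback = [message for phrase, message in checks.items()
                    if phrase.lower() not in candidate.lower()]
        if not feedback:
            break
    return candidate


# Offline stand-in for model.invoke; it improves its answer once it sees feedback.
def fake_model(meta_prompt: str) -> str:
    if "problems" in meta_prompt:
        return "Summarize the article in 3 bullet points covering who, what, and when."
    return "Summarize the article."


checks = {"bullet": "Ask for bullet-point output.", "who": "Ask who is involved."}
final_prompt = refine_prompt("Summarize the key points of a news article.", fake_model, checks)
print(final_prompt)
```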

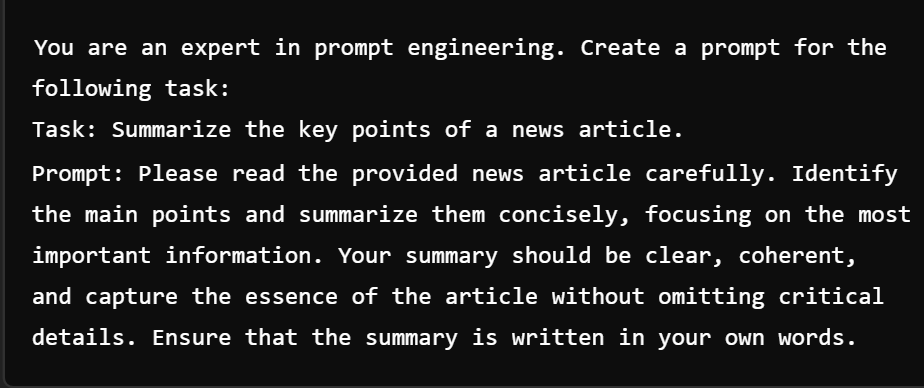

Example: Generating Prompts for a Task

task_description = "Summarize the key points of a news article."

meta_prompt = f"""

You are an expert in prompt engineering. Create a prompt for the following task:

Task: {task_description}

Prompt:

"""

response = model.generate(meta_prompt)

print(response)
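The example above stops once the model has written a prompt. A natural second stage applies that generated prompt to real input. The sketch below shows the two-stage flow; `run_meta_prompt` is a hypothetical helper, and `fake_model` is an offline stand-in for `model.invoke` that records the prompts it receives.

```python
from typing import Callable


def run_meta_prompt(task: str, document: str,
                    generate: Callable[[str], str]) -> tuple[str, str]:
    """Two stages: the model writes a prompt, then that prompt is applied to the input."""
    # Stage 1: meta-prompt asking for a task-specific prompt
    meta_prompt = ("You are an expert in prompt engineering. Create a prompt for the following task.\n"
                   "Return only the prompt text.\n\n"
                   f"Task: {task}\n\nPrompt:")
    generated_prompt = generate(meta_prompt).strip()
    # Stage 2: run the generated prompt on the actual document
    final_prompt = f"{generated_prompt}\n\nArticle:\n{document}\n\nResponse:"
    return generated_prompt, generate(final_prompt)


calls: list[str] = []


def fake_model(prompt: str) -> str:
    """Offline stand-in for model.invoke that records every prompt it receives."""
    calls.append(prompt)
    if prompt.endswith("Prompt:"):
        return "  List the three most important facts in the article.  "
    return "1. Fact one. 2. Fact two. 3. Fact three."


generated, result = run_meta_prompt(
    "Summarize the key points of a news article.",
    "The city council approved new bike lanes on Monday.",
    fake_model,
)
print(generated)
print(result)
```

With a real model, `generate=model.invoke` makes the first call produce the prompt and the second call produce the summary.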

Leveraging Memory and State

Language models do not retain anything between calls on their own. Adding memory and state mechanisms to the prompting process lets an application retain context and information across interactions, so the model can exhibit more human-like behaviors such as maintaining conversational context, tracking dialogue history, and adapting responses based on previous interactions. With these mechanisms, developers can create more coherent, context-aware, and responsive interactions with language models.

Applications of Leveraging Memory and State

- Contextual Conversational Agents: Memory and state mechanisms enable language models to act as context-aware conversational agents, maintaining context across interactions and generating responses that are coherent with the ongoing dialogue.

- Personalized Recommendations: With memory and state, language models can provide personalized recommendations tailored to the user's preferences and past interactions, improving the relevance and effectiveness of recommendation systems.

- Adaptive Learning Environments: Memory and state can enhance interactive learning environments by tracking learners' progress and adapting the instructional content to their needs and learning trajectory.

- Dynamic Task Execution: Language models can execute complex tasks over multiple interactions while coordinating their actions and responses based on the task’s evolving context.

Techniques for Leveraging Memory and State

- Conversation History Tracking: Language models can maintain a memory of previous messages exchanged during a conversation, which allows them to retain context and track the dialogue history. By referencing this conversation history, models can generate more coherent and contextually relevant responses that build upon previous interactions.

- Contextual Memory Integration: Memory mechanisms can be integrated into the prompting process to give the model access to relevant contextual information. This helps developers guide the model's responses based on its past experiences and interactions.

- Stateful Prompt Generation: State management techniques allow language models to maintain an internal state that evolves throughout the interaction. Developers can tailor the prompting strategy to the model’s internal context to ensure the generated prompts align with its current knowledge and understanding.

- Dynamic State Update: Language models can update their internal state dynamically based on new information received during the interaction. Here, the model continuously updates its state in response to user inputs and model outputs, adapting its behavior in real-time and improving its ability to generate contextually relevant responses.
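Because the state lives in the application rather than in the model, the techniques above can be sketched as a small class that tracks conversation history, stores facts as they change, and renders a prompt from both. The `ConversationState` class, its methods, and the manually recorded fact are hypothetical; in practice facts might be extracted from messages by the model itself.

```python
from dataclasses import dataclass, field


@dataclass
class ConversationState:
    """Hypothetical application-side state; the model only sees what the prompt contains."""
    history: list[tuple[str, str]] = field(default_factory=list)  # conversation history tracking
    facts: dict[str, str] = field(default_factory=dict)           # remembered user details

    def add_turn(self, speaker: str, text: str) -> None:
        self.history.append((speaker, text))

    def update_fact(self, key: str, value: str) -> None:
        self.facts[key] = value  # dynamic state update: new values overwrite old ones

    def build_prompt(self, user_message: str) -> str:
        """Stateful prompt generation from the stored facts and history."""
        facts = "\n".join(f"- {k}: {v}" for k, v in sorted(self.facts.items())) or "- (none)"
        turns = "\n".join(f"{speaker}: {text}" for speaker, text in self.history)
        return ("You are a helpful assistant.\n"
                f"Known facts about the user:\n{facts}\n\n"
                f"Conversation so far:\n{turns}\n"
                f"User: {user_message}\nAI:")


state = ConversationState()
state.add_turn("User", "I'm planning a trip to Lisbon next week.")
state.update_fact("destination", "Lisbon")
state.add_turn("AI", "Sounds great! How can I help?")
prompt = state.build_prompt("What should I pack?")
print(prompt)
```

After each model call, the new user message and the model's reply would be appended with `add_turn` so the next prompt reflects the updated state.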

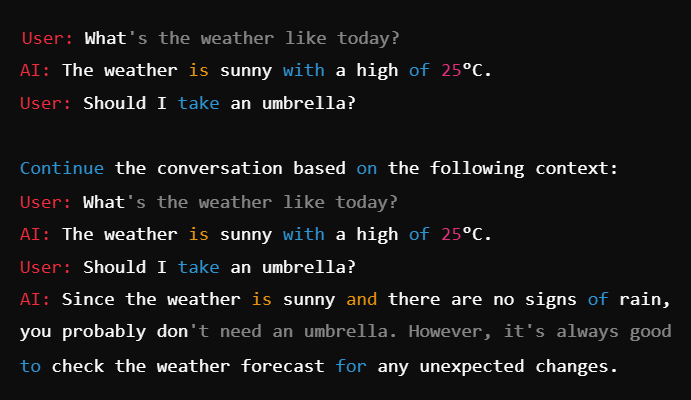

Example: Maintaining State in Conversations

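from langchain.memory import ConversationBufferMemory

# Note: this legacy memory class is deprecated in recent LangChain releases.
memory = ConversationBufferMemory()

# Record the conversation so far as user and AI messages
memory.chat_memory.add_user_message("What's the weather like today?")
memory.chat_memory.add_ai_message("The weather is sunny with a high of 25°C.")
memory.chat_memory.add_user_message("Should I take an umbrella?")

# load_memory_variables() returns the buffer as a formatted string under the "history" key
history = memory.load_memory_variables({})["history"]

prompt = f"""
Continue the conversation based on the following context:

{history}

AI:
"""

response = model.invoke(prompt)
print(response)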

Practical Examples

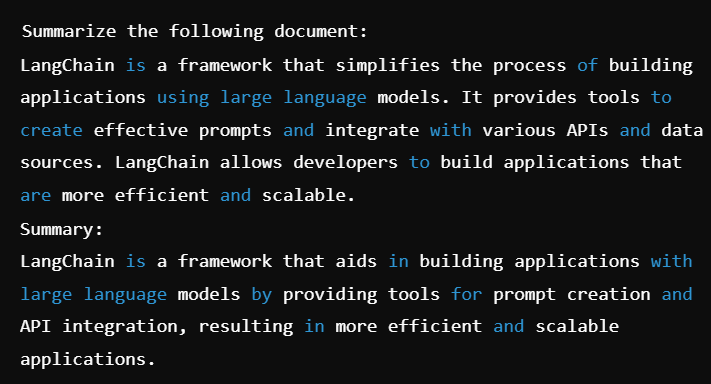

Example 1: Advanced Text Summarization

Using dynamic and context-aware prompting to summarize complex documents.

#importdocument = """

LangChain is a framework that simplifies the process of building applications using large language models. It provides tools to create effective prompts and integrate with various APIs and data sources. LangChain allows developers to build applications that are more efficient and scalable.

"""

prompt = f"""

Summarize the following document:

{document}

Summary:

"""

response = model.generate(prompt)

print(response)
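The prompt above sends the whole document in one call. For documents too long for a single prompt, a common pattern is map-reduce summarization: summarize each chunk, then summarize the partial summaries. The sketch below is plain Python with hypothetical helper names; it splits on words as a rough assumption (real code would usually split by tokens), and `fake_model` stands in for `model.invoke`. LangChain's classic `load_summarize_chain` with `chain_type="map_reduce"` implements a similar idea.

```python
from typing import Callable


def chunk_text(text: str, max_words: int = 200) -> list[str]:
    """Split on whitespace into chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long(document: str, generate: Callable[[str], str], max_words: int = 200) -> str:
    """Map-reduce summarization: summarize each chunk, then summarize the summaries."""
    chunks = chunk_text(document, max_words)
    if not chunks:
        return ""
    # Map step: one prompt per chunk
    partials = [generate(f"Summarize the following section in 2 sentences:\n\n{chunk}\n\nSummary:")
                for chunk in chunks]
    if len(partials) == 1:
        return partials[0]
    # Reduce step: combine the partial summaries into one
    joined = "\n".join(f"- {p}" for p in partials)
    return generate(f"Combine these section summaries into one concise summary:\n\n{joined}\n\nSummary:")


calls: list[str] = []


def fake_model(prompt: str) -> str:
    """Offline stand-in for model.invoke that numbers its replies."""
    calls.append(prompt)
    return f"summary {len(calls)}"


long_doc = " ".join(["word"] * 450)
result = summarize_long(long_doc, fake_model, max_words=200)
print(result)
```

A 450-word input becomes three chunks, so the sketch makes three map calls and one reduce call.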

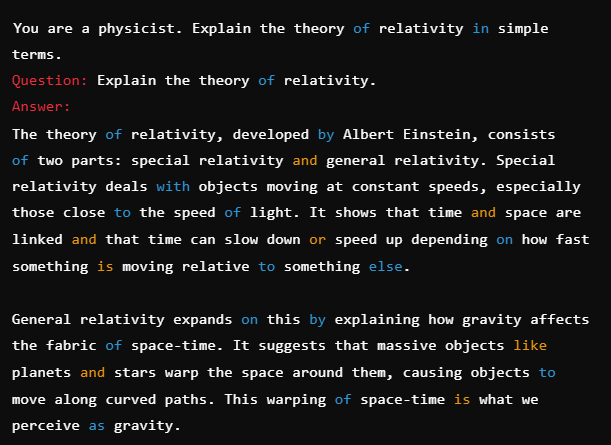

Example 2: Complex Question Answering

Combining multi-step reasoning and context-aware prompts for detailed Q&A.

question = "Explain the theory of relativity."

prompt = f"""

You are a physicist. Explain the theory of relativity in simple terms.

Question: {question}

Answer:

"""

response = model.generate(prompt)

print(response)
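To add the multi-step reasoning part, one option is question decomposition: answer a list of sub-questions in order, feed each answer forward as context, then ask for a final synthesis. In this sketch the sub-questions are supplied by the developer (they could also be generated by the model), `answer_in_steps` is a hypothetical helper, and `fake_model` is an offline stand-in for `model.invoke`.

```python
from typing import Callable


def answer_in_steps(question: str, sub_questions: list[str],
                    generate: Callable[[str], str], role: str = "a physicist") -> str:
    """Answer sub-questions in order, feeding earlier answers forward, then synthesize."""
    notes: list[str] = []
    for sub in sub_questions:
        context = "\n".join(notes) or "(none yet)"
        answer = generate(f"You are {role}. Answer briefly and in simple terms.\n"
                          f"Notes so far:\n{context}\n\nQuestion: {sub}\nAnswer:")
        notes.append(f"Q: {sub}\nA: {answer}")
    final_prompt = (f"You are {role}. Using the notes below, answer the original question in simple terms.\n\n"
                    + "\n\n".join(notes)
                    + f"\n\nOriginal question: {question}\nAnswer:")
    return generate(final_prompt)


calls: list[str] = []


def fake_model(prompt: str) -> str:
    """Offline stand-in for model.invoke that numbers its replies."""
    calls.append(prompt)
    return f"answer {len(calls)}"


subs = ["What is special relativity?", "What is general relativity?"]
final = answer_in_steps("Explain the theory of relativity.", subs, fake_model)
print(final)
```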

Conclusion

Advanced prompt engineering with LangChain helps developers to build robust, context-aware applications that leverage the full potential of large language models. Continuous experimentation and refinement of prompts are essential for achieving optimal results.


Key Takeaways

- Advanced Prompt Structuring: Guides the model through multi-step reasoning with contextual cues.

- Dynamic Prompting: Adjusts prompts based on real-time context and user interactions.

- Context-Aware Prompts: Evolves prompts to maintain relevance and coherence with conversation context.

- Meta-Prompting: Generates and refines prompts autonomously, leveraging the model’s capabilities.

- Leveraging Memory and State: Maintains context and information across interactions for coherent responses.

The media shown in this article are not owned by Analytics Vidhya and are used at the Author's discretion.

Frequently Asked Questions

A. LangChain can integrate with APIs and data sources to dynamically adjust prompts based on real-time user input or external data. You can create highly adaptive and context-aware interactions by programmatically constructing prompts incorporating this information.

A. LangChain provides memory management capabilities that allow you to store and retrieve context across multiple interactions, essential for creating conversational agents that remember user preferences and past interactions.

A. Handling ambiguous or unclear queries requires designing prompts that guide the model in seeking clarification or providing context-aware responses. Best practices include:

a. Explicitly Asking for Clarification: Prompt the model to ask follow-up questions.

b. Providing Multiple Interpretations: Design prompts that allow the model to present different interpretations.
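Both practices can be folded into one prompt template. This is a minimal sketch; the wording, the `build_clarifying_prompt` name, and the default limit of three interpretations are assumptions.

```python
def build_clarifying_prompt(query: str, max_interpretations: int = 3) -> str:
    """Ask the model to surface interpretations and a follow-up question when a request is ambiguous."""
    return (
        "You are a careful assistant.\n"
        "If the user's request could reasonably mean more than one thing:\n"
        f"1. List up to {max_interpretations} plausible interpretations.\n"
        "2. Ask one short follow-up question that would resolve the ambiguity.\n"
        "If the request is clear, answer it directly.\n\n"
        f"User request: {query}\n"
        "Response:"
    )


prompt = build_clarifying_prompt("How do I reset it?")
print(prompt)
```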

A. Meta-prompting leverages the model’s own capabilities to generate or refine prompts, enhancing the overall application performance. This can be particularly useful for creating adaptive systems that optimize behavior based on feedback and performance metrics.

A. Integrating LangChain with existing machine learning models and workflows involves using its flexible API to combine outputs from various models and data sources, creating a cohesive system that leverages the strengths of multiple components.