As we wrap up 2025, I thought it would be good to look back at the AI models that left a lasting impact over the year. Some new models came into the limelight, while several older ones surged in popularity. From Natural Language Processing to Computer Vision, these models have influenced a wide range of AI domains. This article showcases the models that made the most impact in 2025.

Table of contents

- Model Selection Criteria

- Sentence Transformer MiniLM

- Google Electra Base Discriminator

- FalconsAI NSFW Image Detection

- Google Uncased BERT

- Fairface Image Age Detection

- MobileNet Image Classification Model

- Laion CLAP

- DistilBERT

- Pyannote Segmentation 3

- FacebookAI Roberta Large

- Conclusion

- Frequently Asked Questions

Model Selection Criteria

The AI models listed in this article have been selected from HuggingFace leaderboards based on the following criteria:

- The number of downloads

- Having either an Apache 2.0 or MIT open-source license

The list is a mix of models released this year and models from previous years that saw a surge in popularity. You can view the complete list on the HuggingFace leaderboard here: https://huggingface.co/models?license=license:apache-2.0&sort=downloads
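
The two criteria can be applied mechanically. The sketch below is only an illustration: it assumes each model is described by a small record with `model_id`, `downloads`, and `license` fields, and the sample records and their download counts are made-up placeholders rather than real Hub statistics.

```python
ALLOWED_LICENSES = {"apache-2.0", "mit"}


def select_models(records, allowed_licenses=ALLOWED_LICENSES, top_n=10):
    """Keep permissively licensed models and rank them by downloads."""
    eligible = [r for r in records if r["license"] in allowed_licenses]
    ranked = sorted(eligible, key=lambda r: r["downloads"], reverse=True)
    return [r["model_id"] for r in ranked[:top_n]]


# Hypothetical records: the IDs and counts are placeholders.
sample_records = [
    {"model_id": "org/model-a", "downloads": 1200, "license": "apache-2.0"},
    {"model_id": "org/model-b", "downloads": 5000, "license": "cc-by-nc-4.0"},
    {"model_id": "org/model-c", "downloads": 3400, "license": "mit"},
]

print(select_models(sample_records, top_n=2))
```

Here `org/model-b` has the most downloads but is dropped by the license filter, which is the same reason some popular models are missing from this list.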

1. Sentence Transformer MiniLM

Category: Natural Language Processing

A compact English sentence embedding model optimized for semantic similarity, clustering, and retrieval. It is a 6-layer MiniLM transformer that produces 384-dimensional embeddings, fine-tuned on more than a billion sentence pairs. Despite its size, it delivers strong performance on semantic search and topic modelling tasks, rivalling much larger models.

License: Apache 2.0

HuggingFace Link: https://huggingface.co/sentence-transformers/all-MiniLM-L6-v2
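
Semantic search with sentence embeddings usually comes down to comparing vectors by cosine similarity. The sketch below shows that comparison in plain Python. The helper names (`cosine_similarity`, `rank_by_similarity`, `embed`) are my own, and `embed` assumes the `sentence-transformers` package is installed; it is defined but not called here, because the first call downloads the model weights.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, doc_vecs):
    """Return document indices ordered from most to least similar."""
    scores = [cosine_similarity(query_vec, d) for d in doc_vecs]
    return sorted(range(len(doc_vecs)), key=lambda i: scores[i], reverse=True)


def embed(texts):
    """Encode texts with the model (downloads weights on first call).

    Assumes the `sentence-transformers` package is installed.
    """
    from sentence_transformers import SentenceTransformer

    model = SentenceTransformer("sentence-transformers/all-MiniLM-L6-v2")
    return model.encode(texts).tolist()
```

A typical use would be to embed a query and a handful of documents with `embed`, then pass the resulting vectors to `rank_by_similarity` to get the best matches first.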

2. Google Electra Base Discriminator

Category: Natural Language Processing

ELECTRA rethinks masked language modeling: instead of predicting masked tokens, the model is trained as a discriminator that detects which tokens in a sentence have been replaced. The base version (110M parameters) achieves performance comparable to BERT-base while requiring much less computation. It’s widely used for feature extraction and fine-tuning in classification and QA pipelines.

License: Apache 2.0

HuggingFace Link: https://huggingface.co/google/electra-base-discriminator
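
To make the "detect replaced tokens" idea concrete, here is a toy sketch of the labels a discriminator would be trained to predict. It is not ELECTRA's training code: the function name and the example sentence are illustrative, and the corrupted sentence is written by hand rather than produced by a generator model.

```python
def replaced_token_labels(original_tokens, corrupted_tokens):
    """Label each position 1 if the token was replaced, else 0."""
    if len(original_tokens) != len(corrupted_tokens):
        raise ValueError("sequences must have the same length")
    return [int(o != c) for o, c in zip(original_tokens, corrupted_tokens)]


original = ["the", "chef", "cooked", "the", "meal"]
corrupted = ["the", "chef", "ate", "the", "meal"]  # hand-made corruption
print(replaced_token_labels(original, corrupted))  # [0, 0, 1, 0, 0]
```

Because every position gets a label, the model receives a training signal from the whole sentence rather than only from the masked positions, which is one way to read the efficiency claim above.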

3. FalconsAI NSFW Image Detection

Category: Computer Vision

A fine-tuned Vision Transformer (ViT) designed to detect NSFW or unsafe content in images. Anyone who uses sites like Reddit will be familiar with the infamous “NSFW blocker”. The model outputs probabilities for safe (“normal”) versus unsafe (“nsfw”) content, making it a key moderation component for AI-generated or user-uploaded visuals.

License: Apache 2.0

HuggingFace Link: https://huggingface.co/Falconsai/nsfw_image_detection
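
In a moderation flow, the class probabilities still have to be turned into a block/allow decision. The sketch below shows one way to do that. The `is_unsafe` helper, the 0.5 default threshold, and the `"nsfw"` label name are assumptions on my part (check the model card for the actual labels); `classify_image` uses the `transformers` image-classification pipeline and is defined but not called, since it downloads the weights.

```python
def is_unsafe(predictions, unsafe_label="nsfw", threshold=0.5):
    """Flag an image when the unsafe label's score meets the threshold.

    `predictions` is a list of {"label": str, "score": float} dicts, the
    shape returned by a transformers image-classification pipeline.
    """
    for p in predictions:
        if p["label"] == unsafe_label:
            return p["score"] >= threshold
    return False  # unsafe label not present in the predictions


def classify_image(path):
    """Run the model on an image file (downloads weights on first call).

    Assumes `transformers`, `torch`, and `Pillow` are installed.
    """
    from transformers import pipeline

    classifier = pipeline(
        "image-classification", model="Falconsai/nsfw_image_detection"
    )
    return classifier(path)
```

Raising the threshold lets more borderline images through, while lowering it blocks more aggressively, so the right value depends on how costly each kind of mistake is for the platform.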

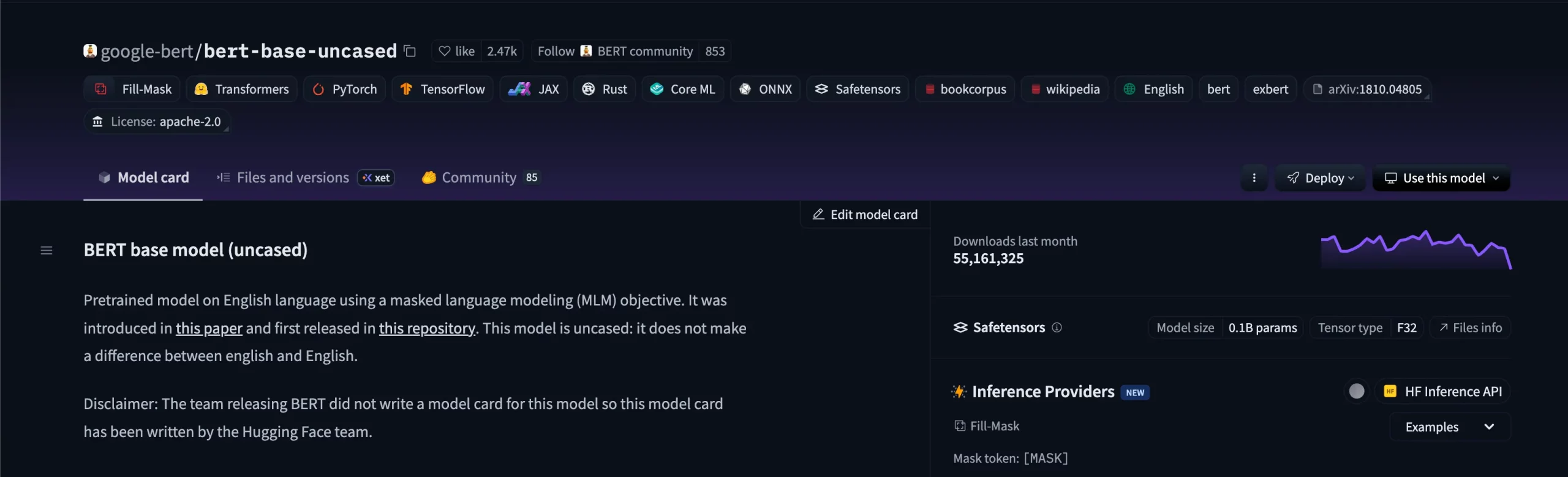

4. Google Uncased BERT

Category: Natural Language Processing

The original BERT-base model from Google Research, trained on BooksCorpus and English Wikipedia. With 12 layers and 110M parameters, it laid the groundwork for modern transformer architectures and remains a strong baseline for classification, NER, and question answering.

License: Apache 2.0

HuggingFace Link: https://huggingface.co/google-bert/bert-base-uncased

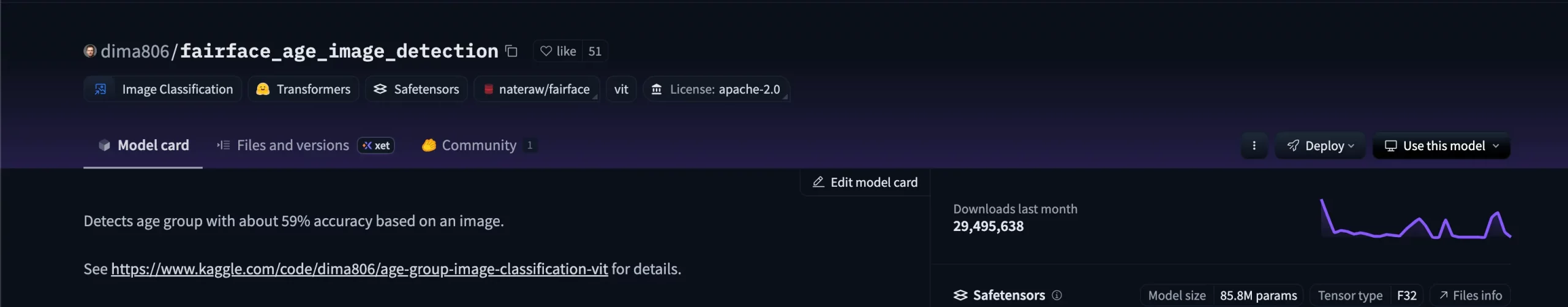

5. Fairface Image Age Detection

Category: Computer Vision

A facial age prediction model trained on the FairFace dataset, emphasizing balanced representation across ethnicity and gender. It prioritizes fairness and demographic consistency, making it suitable for analytics and research pipelines involving facial attributes.

License: Apache 2.0

HuggingFace Link: https://huggingface.co/dima806/fairface_age_image_detection

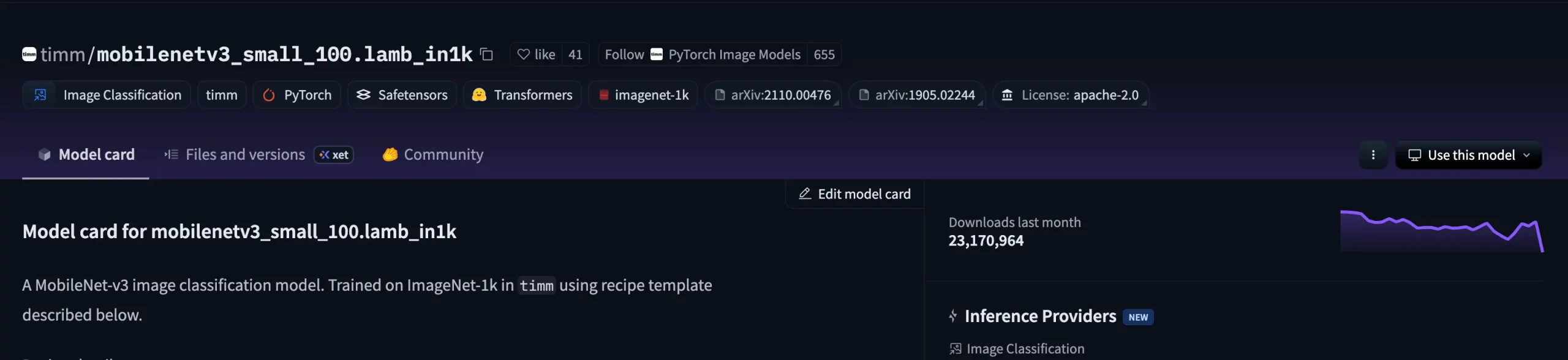

6. MobileNet Image Classification Model

Category: Computer Vision

A lightweight convolutional image classifier from the timm library, designed for efficient deployment on resource-limited devices. MobileNetV3 Small, trained on ImageNet-1k using the LAMB optimizer, achieves solid accuracy with low latency, making it ideal for edge and mobile inference.

License: Apache 2.0

HuggingFace Link: https://huggingface.co/timm/mobilenetv3_small_100.lamb_in1k

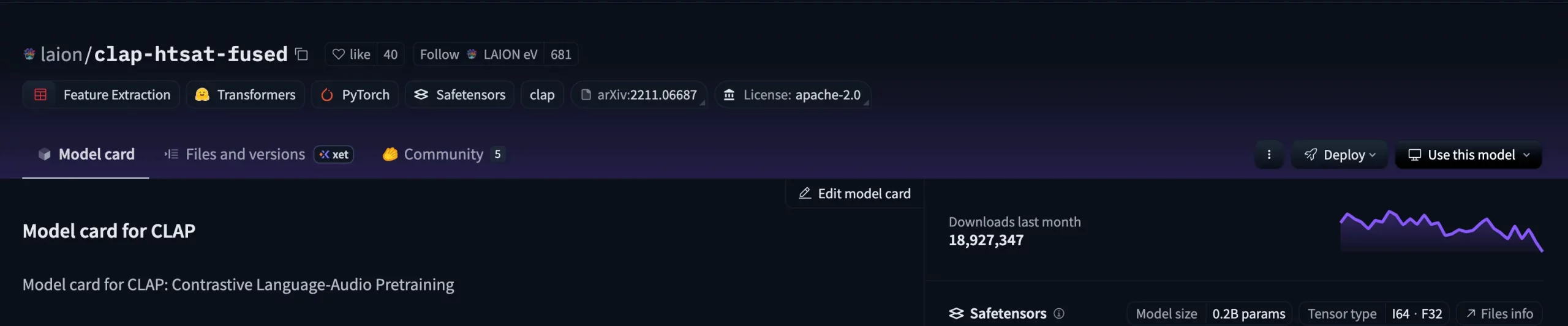

7. Laion CLAP

Category: Multimodal (Audio to Language)

A CLAP (Contrastive Language–Audio Pretraining) model built on an HTS-AT (Hierarchical Token-Semantic Audio Transformer) audio encoder that maps audio and text into a shared embedding space. It supports zero-shot audio retrieval, tagging, and captioning, bridging sound understanding and natural language.

License: Apache 2.0

HuggingFace Link: https://huggingface.co/laion/clap-htsat-fused
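
Once audio and text live in the same embedding space, zero-shot tagging amounts to asking which label's text embedding sits closest to the audio embedding. The toy sketch below illustrates that step with hand-written 2-D vectors; the function names are my own, and real CLAP embeddings would come from the model and have many more dimensions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def zero_shot_tag(audio_vec, label_vecs, labels):
    """Pick the label whose text embedding best matches the audio embedding."""
    sims = [sum(a * t for a, t in zip(audio_vec, vec)) for vec in label_vecs]
    probs = softmax(sims)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs


# Toy 2-D embeddings; these stand in for model outputs.
labels = ["dog barking", "rain"]
label_vecs = [[1.0, 0.0], [0.0, 1.0]]
tag, probs = zero_shot_tag([0.9, 0.1], label_vecs, labels)
print(tag)  # dog barking
```

No label-specific training is involved: adding a new tag only requires embedding its text description.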

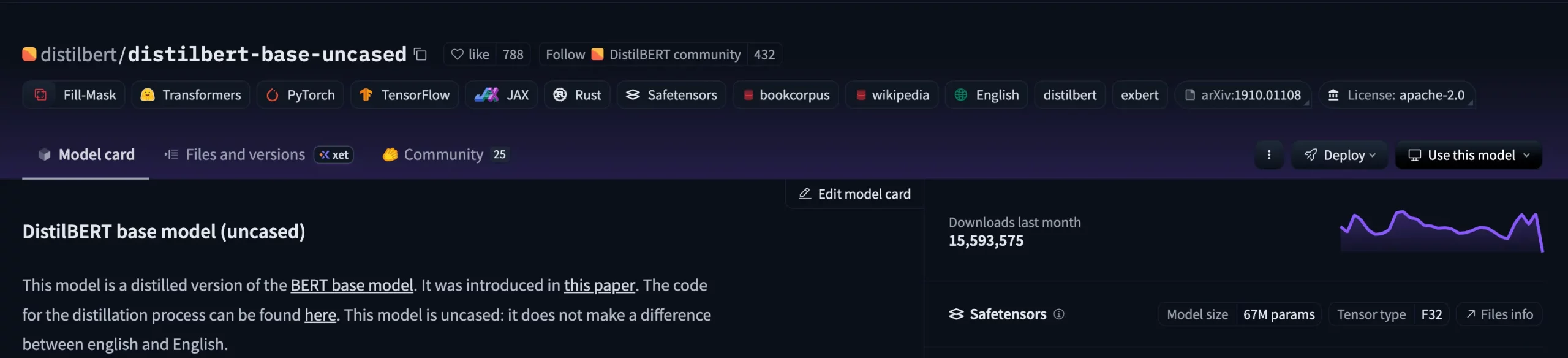

8. DistilBERT

Category: Natural Language Processing

A distilled version of BERT-base developed by Hugging Face to balance performance and efficiency. Retaining about 97% of BERT’s accuracy while being 40% smaller and 60% faster, it’s ideal for lightweight NLP tasks like classification, embeddings, and semantic search.

License: Apache 2.0

HuggingFace Link: https://huggingface.co/distilbert/distilbert-base-uncased
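
Distillation trains a small student model to match a larger teacher's output distribution. The sketch below shows a common form of the soft-target loss, a KL divergence between temperature-softened distributions. It is a generic illustration, not DistilBERT's actual training code, and the temperature of 2.0 and the function names are assumptions for the example.

```python
import math


def softmax_with_temperature(logits, temperature=1.0):
    """Softmax of logits / temperature; higher temperature flattens it."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the teacher's soft targets to the student's."""
    p_teacher = softmax_with_temperature(teacher_logits, temperature)
    p_student = softmax_with_temperature(student_logits, temperature)
    return sum(
        t * math.log(t / s) for t, s in zip(p_teacher, p_student) if t > 0
    )


teacher = [2.0, 1.0, 0.1]
print(distillation_loss(teacher, teacher))          # 0.0 when they agree
print(distillation_loss([0.1, 1.0, 2.0], teacher))  # positive when they differ
```

The loss is zero when the student reproduces the teacher exactly and grows as their predictions diverge, which is what pushes the smaller model toward the larger one's behaviour.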

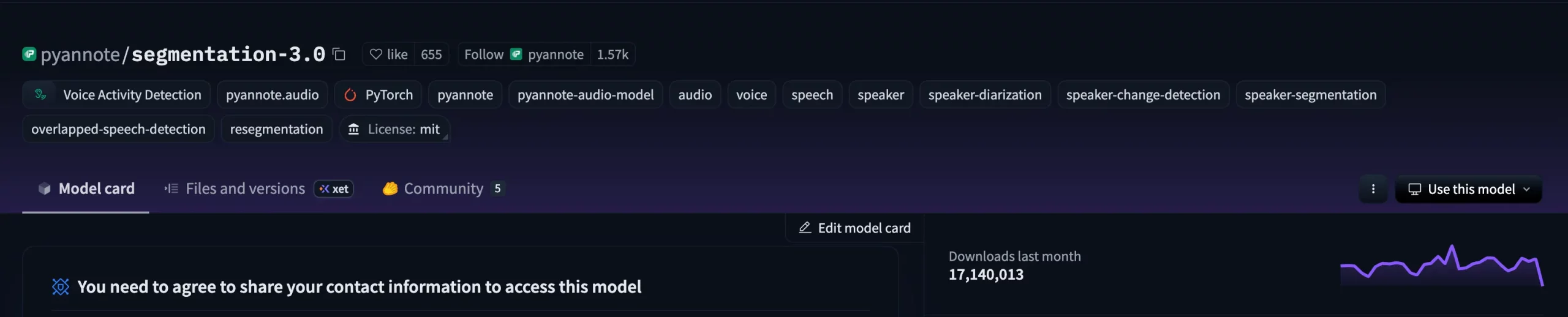

9. Pyannote Segmentation 3

Category: Speech Processing

A core component of the Pyannote Audio pipeline for detecting and segmenting speech activity. It identifies regions of silence, single-speaker speech, and overlapping speech, performing reliably even in noisy environments. It is commonly used as the foundation for speaker diarization systems.

License: MIT

HuggingFace Link: https://huggingface.co/pyannote/segmentation-3.0
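
The three region types described above (silence, single-speaker, overlap) can be pictured as a post-processing step over frame-level predictions. The sketch below is a hypothetical illustration and does not use the pyannote API: it assumes the input is a list with the number of active speakers per frame and a fixed frame duration in seconds.

```python
def frames_to_regions(active_counts, frame_duration):
    """Merge per-frame active-speaker counts into labeled time regions.

    Returns (start_seconds, end_seconds, label) tuples, where label is
    "silence", "single", or "overlap".
    """

    def label_for(count):
        if count == 0:
            return "silence"
        return "single" if count == 1 else "overlap"

    regions = []
    for i, count in enumerate(active_counts):
        label = label_for(count)
        start, end = i * frame_duration, (i + 1) * frame_duration
        if regions and regions[-1][2] == label:
            regions[-1] = (regions[-1][0], end, label)  # extend the run
        else:
            regions.append((start, end, label))
    return regions


print(frames_to_regions([0, 1, 1, 2, 2, 0], frame_duration=0.5))
```

For the sample input this yields four regions: silence, a single-speaker stretch, an overlap stretch, and silence again.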

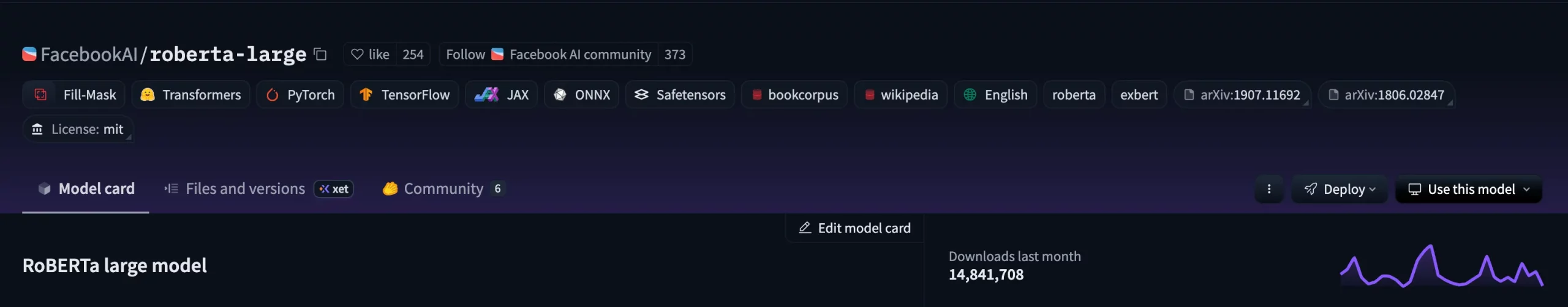

10. FacebookAI Roberta Large

Category: Natural Language Processing

A robustly optimized BERT variant trained on 160 GB of English text with dynamic masking and no next-sentence prediction. With 24 layers and 355M parameters, RoBERTa-large consistently outperforms BERT-base across GLUE and other benchmarks, powering high-accuracy NLP applications.

License: MIT

HuggingFace Link: https://huggingface.co/FacebookAI/roberta-large
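
Dynamic masking means the masked positions are re-sampled every time a sentence is seen, rather than fixed once during preprocessing. The sketch below is a simplified illustration, not RoBERTa's actual data pipeline: the 15% masking rate, the `<mask>` string, and the use of a per-epoch seed are assumptions for the example, and it always substitutes the mask token instead of sometimes keeping or randomizing the selected token.

```python
import random


def dynamic_mask(tokens, mask_token="<mask>", mask_prob=0.15, seed=None):
    """Return a copy of non-empty `tokens` with a freshly sampled subset masked."""
    rng = random.Random(seed)
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = set(rng.sample(range(len(tokens)), n_to_mask))
    return [mask_token if i in positions else t for i, t in enumerate(tokens)]


tokens = "the quick brown fox jumps over the lazy dog today".split()
for epoch in range(3):
    # A new seed per epoch stands in for re-sampling the mask each pass.
    print(dynamic_mask(tokens, seed=epoch))
```

With static masking the model would see the same masked positions on every pass; re-sampling gives it a different prediction problem each time from the same text.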

Conclusion

This listicle isn’t exhaustive. Several models had a tremendous impact but didn’t make the list: some were just as impactful but lacked an open-source license, and others simply didn’t have the numbers. What they all did was contribute towards solving a part of a bigger problem. The models shared in this list might not have the buzz that follows Gemini, ChatGPT, or Claude, but they offer an open letter to data science enthusiasts who are looking to create things from scratch without housing a data center.

Frequently Asked Questions

A. The models were chosen based on two key factors: total downloads on Hugging Face and having a permissive open-source license (Apache 2.0 or MIT), ensuring they’re both popular and freely usable.

A. Most are, but some—like Falconsai/nsfw_image_detection or FairFace-based models—may have usage restrictions. Always check the model card and license before deploying in production.

A. Open-source models give researchers and developers freedom to experiment, fine-tune, and deploy without vendor lock-in or heavy infrastructure needs—making innovation more accessible.

A. Most of them are released under permissive licenses like Apache 2.0 or MIT, meaning you can use them for both research and commercial projects.

A. Yes. Many production systems chain models from different domains — for example, using a speech segmentation model like Pyannote to isolate dialogue, then a language model like RoBERTa to analyze sentiment or intent, and finally a vision model to moderate accompanying images.