GPT-5.3-Codex represents a new generation of the Codex model built to handle real, end-to-end work. Instead of focusing only on writing code, it combines strong coding ability with planning, reasoning, and execution. The model runs faster than earlier versions and handles long, multi-step tasks involving tools and decisions more effectively.

Rather than producing isolated answers, GPT-5.3-Codex behaves more like a working agent. It can stay on task for long periods, adjust its approach mid-way, and respond to feedback without losing context.


Codex 5.3 Benchmarks

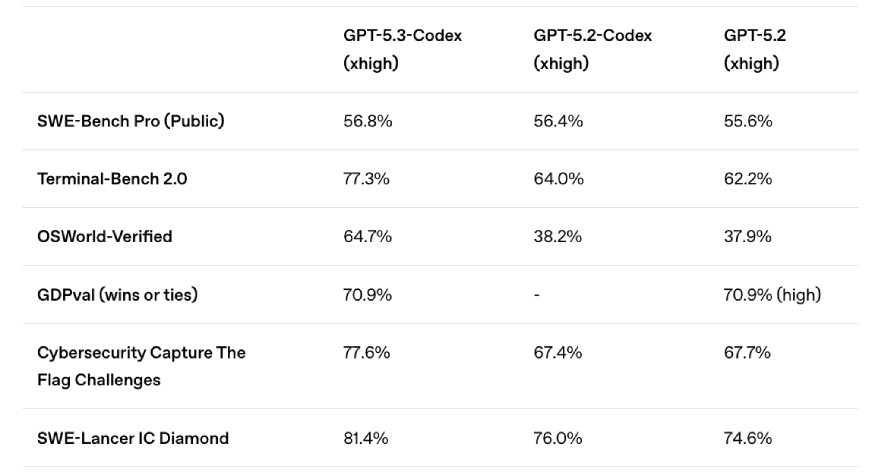

OpenAI’s GPT-5.3-Codex sets new performance standards on real-world coding and agentic benchmarks, achieving higher accuracy than prior models on tests like SWE-Bench Pro and Terminal-Bench 2.0. It also shows substantial gains on OSWorld and GDPval, which measure computer use and professional knowledge work, while running about 25% faster than GPT-5.2-Codex. This marks a significant step toward AI that can handle longer, multi-step development tasks and broader software workflows.

Key Features

Here’s what makes GPT-5.3-Codex interesting:

Built With Codex, For Codex

One of the most interesting aspects of GPT-5.3-Codex is that the team used early versions of the model during its own development. Engineers relied on it to debug training runs, investigate failures, and analyze evaluation results. This helped speed up iteration and exposed issues earlier in the process.

This self-use is a strong signal of maturity. The OpenAI team not only tested the model on benchmarks but also trusted it in real internal workflows.

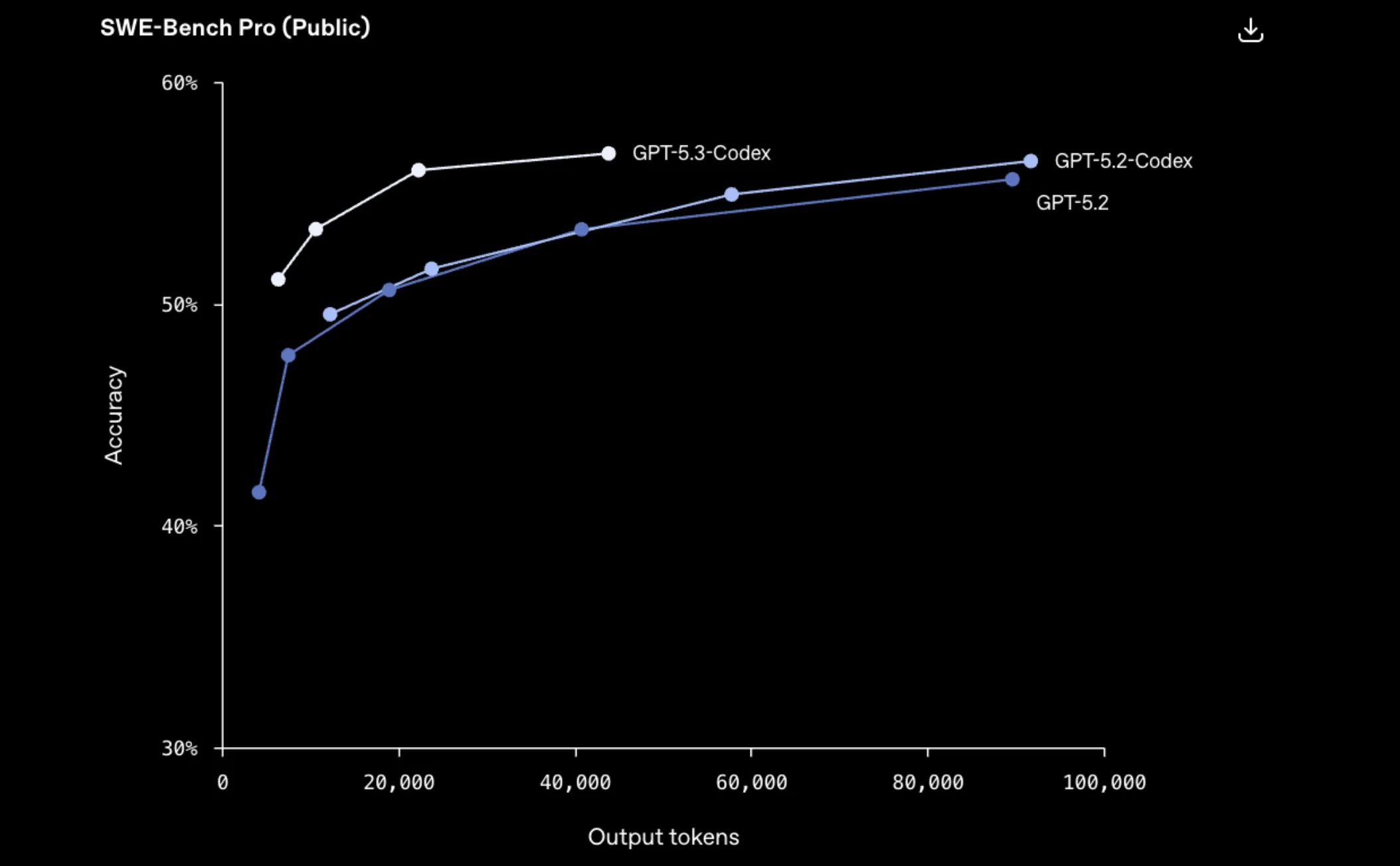

The benchmark chart shows GPT-5.3-Codex maintaining higher accuracy as output tokens increase. It holds up better on longer, more complex tasks and stays more consistent than earlier models.

Anthropic also launched a new coding model recently. Find out more in our detailed blog on Claude Opus 4.6.

Beyond Writing Code

GPT-5.3-Codex is designed to handle more than just code generation. It can help with debugging, refactoring, deployment tasks, documentation, data analysis, and even non-coding work like writing specs or preparing reports.

It operates best when given goals rather than detailed instructions. The model can decide what to do next, run commands, inspect outputs, and keep going until the task is complete.

Designed for Safe, Practical Use

To support hands-on work, GPT-5.3-Codex runs inside controlled environments. By default, it works in sandboxes that limit file access and network usage, reducing the risk of accidental damage. The model also pauses and asks for clarification before performing potentially destructive actions.

These choices make it easier to experiment, especially when working on real projects or unfamiliar systems.

Working Together With the Model

Interaction with GPT-5.3-Codex is continuous rather than one-off. As it works, it shares progress, explains decisions, and reacts to feedback. You can interrupt, redirect, or refine the task at any point.

This makes it feel less like a command-based tool and more like a collaborator you supervise.

How to Access Codex 5.3?

Now that the high-level picture is clear, it’s time to move from description to action.

In the next section, we’ll try Codex hands-on. We’ll start by downloading and setting it up, then walk through a simple workflow step by step. This will show how GPT-5.3-Codex behaves in practice and how to work with it effectively on real tasks.

Let’s see the steps:

1. After downloading the Codex app, drag its icon into your Applications folder

2. Open Codex

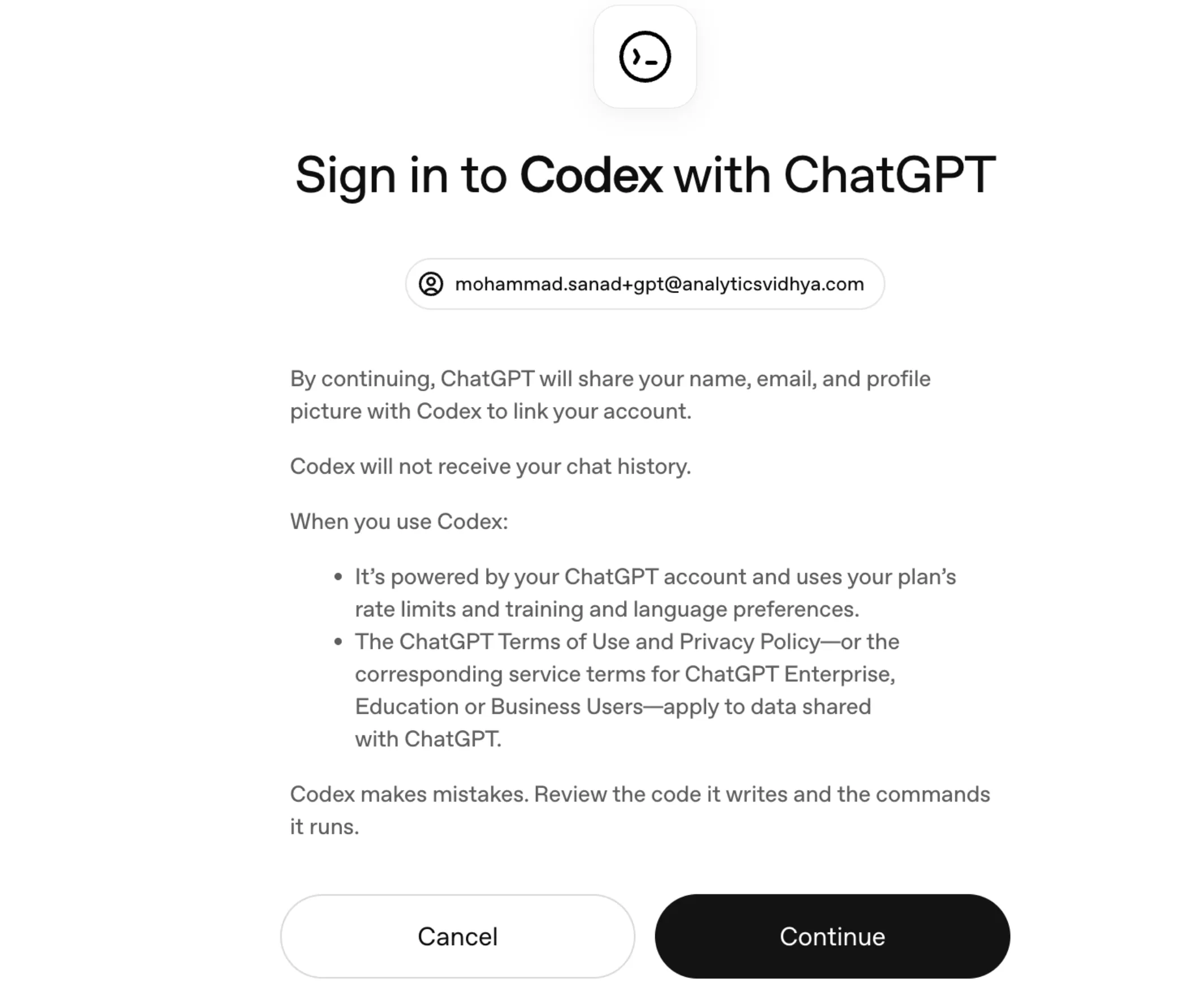

3. Sign in with ChatGPT

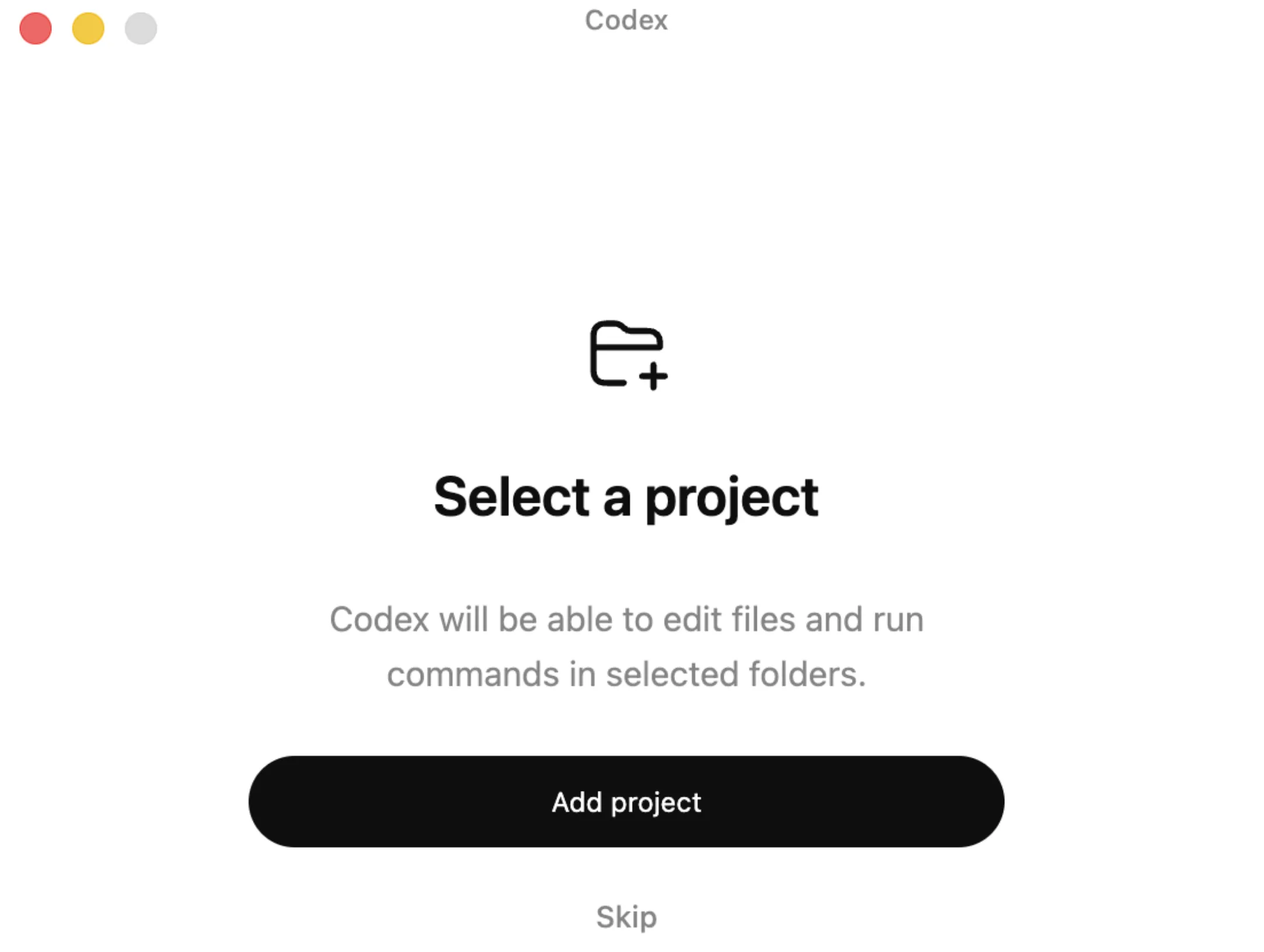

4. After signing in, select a folder or git repository on your computer where Codex will work

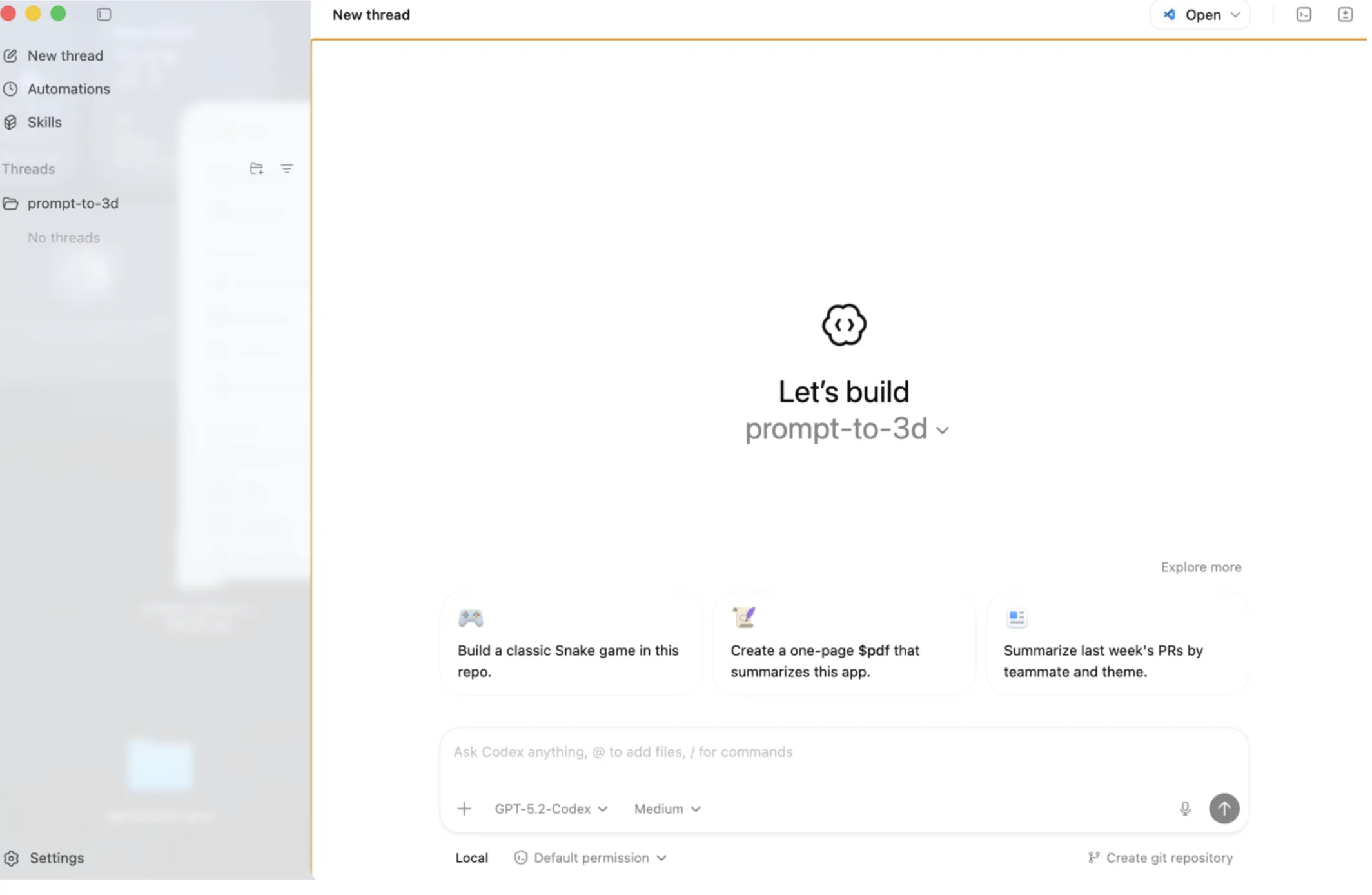

5. Kick off your first task

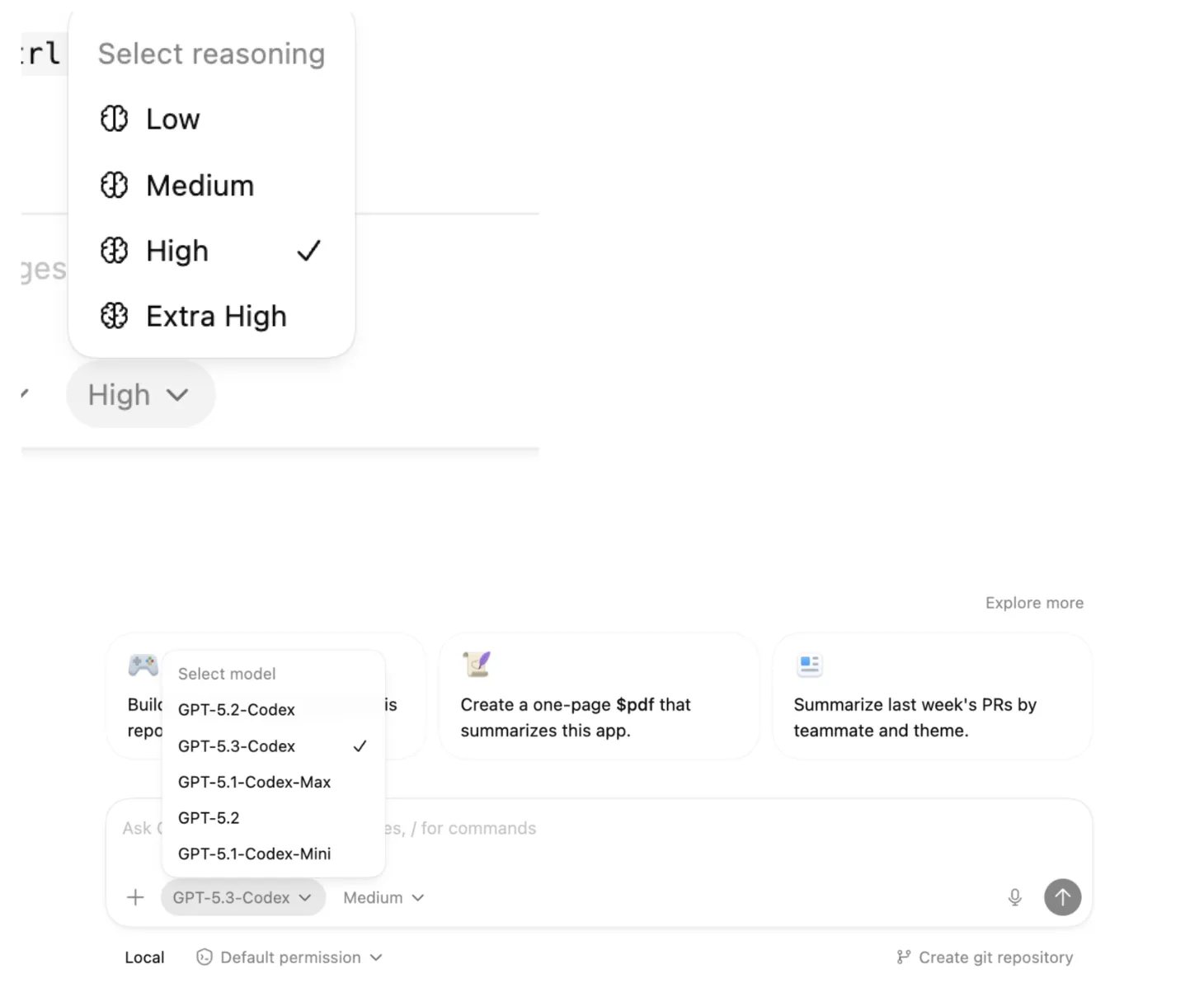

6. Choose the model and the reasoning level you prefer from the model menu.

Task 1: Text to 3D Scene Generator

The first task I worked on with Codex was building a simple text-to-3D scene generator. The goal was intentionally minimal. I wanted to test how well Codex could take a loosely defined idea and turn it into a working visual project without overengineering.

The Initial Prompt

The very first prompt I gave Codex was straightforward:

Build a simple text-to-3D scene generator.

The requirements were clear but limited. It had to be a single HTML file, use Three.js through a CDN, and run directly in the browser with no build tools. The scene needed a text input where a user could describe something like “3 trees and a house”, and the output should be a basic 3D scene using simple shapes, lighting, and slow rotation. I also asked it to start with a minimal working version.

This prompt was meant to test fundamentals, not polish.

First Working Version

Codex created a clean index.html from scratch. It set up a Three.js scene with a camera, lights, ground plane, and a simple animation loop. A text input and submit button were added. Basic keywords like tree, house, cloud, and sun were parsed and mapped to simple shapes. The focus was correctness. The scene loaded, objects appeared, and everything rotated smoothly. The result was already usable.

Iterations

I iterated step by step. First, I improved parsing so phrases like “3 trees” worked correctly, defaulting to one object when no number was given. Next, I fixed object spacing to prevent overlap. I also added scene cleanup so each submission rebuilt the scene instead of stacking new objects on top of old ones. In another pass, I focused on readability, simplifying comments and clarifying the structure for beginners. Each change was small and quick to implement.
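Here is a minimal TypeScript sketch of the parsing and spacing logic from these iterations. It assumes a small fixed keyword list, a handful of number words, and a grid spacing of 4 units. The names `parseScene` and `layout` are hypothetical. This is not the code Codex produced, and Three.js mesh creation is left out so the logic runs on its own.

```typescript
// Hypothetical sketch: keyword list, number words, and spacing are
// assumptions for illustration, not the code Codex generated.

type ShapeKind = "tree" | "house" | "cloud" | "sun";

interface Placement {
  kind: ShapeKind;
  x: number; // ground-plane coordinates
  z: number;
}

// Map singular and naive plural forms ("tree", "trees") to a shape kind.
const KEYWORDS = new Map<string, ShapeKind>();
for (const kind of ["tree", "house", "cloud", "sun"] as const) {
  KEYWORDS.set(kind, kind);
  KEYWORDS.set(kind + "s", kind);
}

const NUMBER_WORDS = new Map<string, number>([
  ["a", 1], ["an", 1], ["one", 1], ["two", 2], ["three", 3], ["four", 4], ["five", 5],
]);

// "3 trees and a house" -> tree: 3, house: 1. A keyword with no number
// directly before it counts as one object; unknown words are ignored.
function parseScene(text: string): Map<ShapeKind, number> {
  const tokens: string[] = text.toLowerCase().match(/[a-z]+|\d+/g) ?? [];
  const counts = new Map<ShapeKind, number>();
  tokens.forEach((token, i) => {
    const kind = KEYWORDS.get(token);
    if (kind === undefined) return;
    const prev = i > 0 ? tokens[i - 1] : "";
    const n = /^\d+$/.test(prev) ? parseInt(prev, 10) : NUMBER_WORDS.get(prev) ?? 1;
    counts.set(kind, (counts.get(kind) ?? 0) + n);
  });
  return counts;
}

// Place every object on a centered square grid so no two share a spot.
function layout(counts: Map<ShapeKind, number>, spacing = 4): Placement[] {
  const kinds: ShapeKind[] = [];
  counts.forEach((n, kind) => {
    for (let k = 0; k < n; k++) kinds.push(kind);
  });
  const cols = Math.max(1, Math.ceil(Math.sqrt(kinds.length)));
  const offset = ((cols - 1) * spacing) / 2; // shift the grid onto the origin
  return kinds.map((kind, i) => ({
    kind,
    x: (i % cols) * spacing - offset,
    z: Math.floor(i / cols) * spacing - offset,
  }));
}
```

With this approach, `layout(parseScene("3 trees and a house"))` yields four non-overlapping spots. An unrecognized word such as “cone” produces no objects at all. To rebuild on each submit, you would remove the previous meshes from the scene, then create new ones from the fresh placements.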

Result

By the third version, multiple objects rendered correctly, but getting there took more time than expected and the result was still not very strong. The scene did clear and rebuild on every submit, but the behavior was inconsistent. In the video, you can also see that entering “cone” changed nothing in the scene, possibly because it was not one of the keywords the parser recognized. The final output ran in the browser, but it fell well short of what Codex should be capable of.

Task 2: Space Flight Sandbox

This task focused on building a real-time space flight sandbox with a strong emphasis on structure and performance. The goal was to create a smooth and believable experience where the system could scale without breaking.

Core Gameplay

The player flies a ship in open space with inertial movement. Mouse input controls pitch and yaw, while the keyboard handles thrust, strafe, roll, and reverse. A large asteroid field surrounds the player and continuously streams as the ship moves. The player can fire lasers to destroy asteroids, which split into smaller pieces when hit.
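The two core mechanics here, inertial movement and asteroid splitting, can be sketched in TypeScript as below. This is a simplified illustration, not the project’s code. The `Vec3` helpers, the thrust value, the minimum fragment size, and the two-way split are all assumptions. Strafe and roll are omitted.

```typescript
// Hypothetical sketch: vector helpers, constants, and names are assumptions.
// Strafe and roll would add more acceleration terms in the same way.

type Vec3 = { x: number; y: number; z: number };

const add = (a: Vec3, b: Vec3): Vec3 => ({ x: a.x + b.x, y: a.y + b.y, z: a.z + b.z });
const scale = (v: Vec3, s: number): Vec3 => ({ x: v.x * s, y: v.y * s, z: v.z * s });

interface Ship {
  position: Vec3;
  velocity: Vec3;
  forward: Vec3; // unit vector, set from mouse pitch/yaw elsewhere
}

interface Asteroid {
  position: Vec3;
  velocity: Vec3;
  radius: number;
}

const THRUST = 20;      // acceleration in units/s^2 (assumed)
const MIN_RADIUS = 0.5; // fragments smaller than this are destroyed (assumed)

// Inertial movement: input changes velocity, and velocity carries over
// between steps, so the ship keeps drifting after thrust stops.
// throttle is +1 for thrust, -1 for reverse, 0 for none.
// Integration is semi-implicit Euler (velocity first, then position).
function stepShip(ship: Ship, throttle: number, dt: number): void {
  const accel = scale(ship.forward, THRUST * throttle);
  ship.velocity = add(ship.velocity, scale(accel, dt));
  ship.position = add(ship.position, scale(ship.velocity, dt));
}

// On a laser hit, replace one asteroid with smaller pieces pushed apart.
function splitAsteroid(a: Asteroid, pieces = 2): Asteroid[] {
  const childRadius = a.radius / 2;
  if (childRadius < MIN_RADIUS) return []; // too small to split further
  const fragments: Asteroid[] = [];
  for (let i = 0; i < pieces; i++) {
    const angle = (2 * Math.PI * i) / pieces;
    const dir: Vec3 = { x: Math.cos(angle), y: 0, z: Math.sin(angle) };
    fragments.push({
      position: add(a.position, scale(dir, childRadius)),
      velocity: add(a.velocity, scale(dir, 2)), // 2 units/s separation kick (assumed)
      radius: childRadius,
    });
  }
  return fragments;
}
```

Because `stepShip` never damps velocity, releasing the thrust key leaves the ship drifting at its current speed. That persistence is what gives inertial flight its weight.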

Performance and Structure

Performance was treated as a hard constraint. Asteroids were rendered using InstancedMesh and recycled to maintain a stable instance count. Collision checks relied on a spatial grid to stay efficient. Physics ran on a fixed timestep, while rendering remained smooth and decoupled. No external physics engines or frameworks were used.
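Two of the techniques in this paragraph, the fixed physics timestep and the spatial grid, are easy to get subtly wrong. Here is a minimal TypeScript sketch of each. The 60 Hz step, the five-step catch-up cap, and the `FixedStepper` and `SpatialGrid` names are assumptions for illustration. The actual project may structure them differently.

```typescript
// Hypothetical sketch: the 60 Hz step, step cap, and grid API are assumptions.

type Vec3 = { x: number; y: number; z: number };

const FIXED_DT = 1 / 60; // physics step in seconds

// Fixed timestep: real frame time is accumulated and physics always advances
// in identical FIXED_DT steps, independent of the rendering frame rate.
class FixedStepper {
  private accumulator = 0;
  private readonly step: (dt: number) => void;
  private readonly maxSteps: number;

  constructor(step: (dt: number) => void, maxSteps = 5) {
    this.step = step;
    this.maxSteps = maxSteps;
  }

  // Call once per rendered frame. Returns how far (0..1) the simulation is
  // between steps, which a renderer can use to interpolate positions.
  advance(frameSeconds: number): number {
    this.accumulator += frameSeconds;
    let steps = 0;
    while (this.accumulator >= FIXED_DT && steps < this.maxSteps) {
      this.step(FIXED_DT);
      this.accumulator -= FIXED_DT;
      steps++;
    }
    // After a long stall, drop the backlog instead of trying to catch up.
    if (this.accumulator >= FIXED_DT) this.accumulator = 0;
    return this.accumulator / FIXED_DT;
  }
}

// Uniform spatial grid: objects are bucketed by cell, so a query only checks
// the 27 cells around a point instead of every object. It finds every
// neighbor as long as cellSize is at least the largest collision distance.
class SpatialGrid {
  private readonly cells = new Map<string, number[]>();
  private readonly cellSize: number;

  constructor(cellSize: number) {
    this.cellSize = cellSize;
  }

  private cellOf(p: Vec3): [number, number, number] {
    const s = this.cellSize;
    return [Math.floor(p.x / s), Math.floor(p.y / s), Math.floor(p.z / s)];
  }

  clear(): void {
    this.cells.clear(); // rebuilt each physics step as objects move
  }

  insert(id: number, p: Vec3): void {
    const key = this.cellOf(p).join(",");
    const bucket = this.cells.get(key);
    if (bucket) bucket.push(id);
    else this.cells.set(key, [id]);
  }

  // Candidate ids near p; callers still run an exact distance test on them.
  query(p: Vec3): number[] {
    const [cx, cy, cz] = this.cellOf(p);
    const found: number[] = [];
    for (let dx = -1; dx <= 1; dx++)
      for (let dy = -1; dy <= 1; dy++)
        for (let dz = -1; dz <= 1; dz++) {
          const bucket = this.cells.get(`${cx + dx},${cy + dy},${cz + dz}`);
          if (bucket) found.push(...bucket);
        }
    return found;
  }
}
```

A typical frame would call `advance` with the elapsed time. Inside each physics step, it would clear and refill the grid, then test each laser only against the ids returned by `query`. Keeping the grid free of Three.js types means it can be tested without a browser.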

System Design

The project followed a clean modular design. Each major system lived in its own file, with main.js handling the scene and loop, ship.js managing flight physics, asteroids.js handling instancing and streaming, weapons.js managing lasers and collisions, and controls.js handling input. This structure remained unchanged throughout development.
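The streaming job in asteroids.js is to recycle asteroids so the instance count stays stable. It might look roughly like the TypeScript sketch below. The sketch uses a plain array of positions as a stand-in for the InstancedMesh data. The function names, the distances, and the rule of respawning far-away asteroids on a shell around the ship are all hypothetical.

```typescript
// Hypothetical sketch of asteroid streaming in the spirit of asteroids.js.
// Function names, distances, and the respawn rule are assumptions.

type Vec3 = { x: number; y: number; z: number };

const distance = (a: Vec3, b: Vec3): number =>
  Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);

// Random direction (uniform over the sphere) at a distance between inner and
// outer from center. rand is injectable so results can be reproduced.
function randomPointInShell(center: Vec3, inner: number, outer: number, rand: () => number): Vec3 {
  const u = rand() * 2 - 1;         // cosine of the polar angle, in [-1, 1)
  const phi = rand() * 2 * Math.PI; // azimuth
  const r = inner + (outer - inner) * rand();
  const s = Math.sqrt(1 - u * u);
  return {
    x: center.x + r * s * Math.cos(phi),
    y: center.y + r * s * Math.sin(phi),
    z: center.z + r * u,
  };
}

// Streaming with a fixed pool: asteroids that drift beyond maxDist from the
// ship are moved to a fresh spot near the edge of the field instead of being
// destroyed, so the pool (and the instance count) never changes size.
// Returns how many asteroids were relocated this call.
function recycleFarAsteroids(
  positions: Vec3[],
  ship: Vec3,
  maxDist: number,
  respawnMin: number,
  rand: () => number = Math.random,
): number {
  let recycled = 0;
  for (let i = 0; i < positions.length; i++) {
    if (distance(positions[i], ship) > maxDist) {
      positions[i] = randomPointInShell(ship, respawnMin, maxDist, rand);
      recycled++;
    }
  }
  return recycled;
}
```

In a Three.js build, each updated position would then be written back into the mesh with `InstancedMesh.setMatrixAt`, followed by setting `instanceMatrix.needsUpdate = true`. The GPU then sees the new layout without any instances being added or removed.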

Audio Feedback

Audio was added to improve clarity and impact. Laser shots trigger a sharp firing sound, and asteroid hits play a heavier explosion-like thud. All audio uses Three.js Audio and is attached to the camera to stay consistent with the player’s perspective.

Result

The final sandbox is fully playable and stable, but it took much longer to build than expected. The ship feels weighty and responsive, asteroids stream endlessly without performance drops, and lasers feel powerful and visible. However, development time was noticeably high, possibly because of the model and reasoning level I chose. Overall, I was not very happy with the result: other models handle this kind of task better, and the sandbox itself could have been much stronger.

Conclusion

GPT-5.3-Codex shows clear strengths in long, complex tasks and benchmark performance. It behaves more like an agent than a simple code generator. It plans, executes, and adapts over time. Benchmarks suggest strong consistency at scale. However, hands-on work revealed gaps. Some tasks took longer than expected. Results were not always as strong as they could have been. In practice, iteration speed and output quality varied. While the model is powerful and mature, the workflow did not always feel optimal. With better choices or tuning, the same tasks could likely be done faster and better.