As machine learning (ML) and artificial intelligence (AI) have spread across industries, efficient frameworks for building and deploying models have become essential. Several frameworks exist, but PyTorch and TensorFlow are the best known and most widely used. The two offer broadly similar features, integrations, and language support, which makes either one a workable choice for most machine learning practitioners. This article compares PyTorch and TensorFlow in terms of their features, integrations, support, and basic syntax.

What’s a Machine Learning Framework?

Machine learning frameworks are interfaces that provide pre-built functions and structures designed to simplify the machine learning lifecycle, which includes data preprocessing, model building, and optimization. Businesses in many sectors now use machine learning in some form, from banks and health insurance providers to marketing teams and healthcare organizations.

Key Features of Machine Learning Frameworks

- High-level APIs that simplify the development process.

- Pre-built components, including ready-to-use layers, loss functions, and optimizers.

- Tools for visualizing data and model performance.

- GPU and TPU acceleration to speed up computation.

- The ability to handle massive datasets and distributed computing.

What is PyTorch?

PyTorch is an open-source machine learning framework developed by Facebook’s AI Research lab. Its dynamic computation graph makes it flexible and easy to use during model development and debugging.

Key Features of PyTorch

- Dynamic Computation Graph: Also known as “define-by-run,” it allows the graph to be built on the fly, making it easily modifiable during runtime.

- Tensors and Autograd: PyTorch provides n-dimensional arrays (tensors) and automatic differentiation (through its autograd package) for gradient calculation.

- Extensive Library: Includes numerous pre-built layers, loss functions, and optimizers.

- Interoperability: Can be easily integrated with other Python libraries like NumPy, SciPy, and more.

- Community and Ecosystem: Strong community support, with a wide range of extensions and tools.
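
The first few features above can be seen in a handful of lines. The following is a minimal sketch rather than a canonical example: the function name scale_until_large and all the values are made up for illustration.

```python
import numpy as np
import torch

# Tensors and autograd: y = sum(x^2), so dy/dx = 2x.
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
y = (x ** 2).sum()
y.backward()
grad = x.grad  # gradient of y with respect to x

# Define-by-run: ordinary Python control flow decides, at run time,
# how many operations end up in the graph.
# (scale_until_large is a hypothetical helper, not a PyTorch function.)
def scale_until_large(t, limit=10.0):
    steps = 0
    while t.norm() < limit:
        t = t * 2
        steps += 1
    return t, steps

scaled, steps = scale_until_large(torch.ones(2))

# NumPy interoperability: torch.from_numpy shares memory with the array,
# so a change to the array is visible through the tensor.
arr = np.array([1.0, 2.0, 3.0])
shared = torch.from_numpy(arr)
arr[0] = 10.0

print("Gradient:", grad)
print("Doubling steps:", steps)
print("Shared tensor:", shared)
```

Because the loop runs as plain Python, a debugger or a print statement can be placed anywhere inside it, which is much of what makes the dynamic graph convenient during development.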

What is TensorFlow?

TensorFlow is an open-source machine learning framework developed by the Google Brain team that is highly adaptable and scalable. It supports a range of platforms, from mobile devices to distributed computing clusters.

Key Features of TensorFlow

- TensorFlow Computation: TensorFlow originally used a static computation graph, where you define the entire computation graph first and then execute it. This was the approach in TensorFlow 1.x and the tf.Graph API. With TensorFlow 2.x, eager execution was introduced by default, which means operations are executed immediately rather than being added to a static graph. This allows for more intuitive debugging and interaction with the code, similar to Python’s default behavior.

- TensorFlow Extended (TFX): TFX is a platform for deploying production ML pipelines.

- TensorFlow Lite: This version of TensorFlow has been designed especially for mobile/embedded devices.

- TensorBoard: It provides visualization tools to keep track of the ML workflow.
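
The difference between eager execution and graph execution can be sketched as follows. This is only an illustration under simple assumptions: the function name graph_matmul and the matrices are invented, and tf.function is the TensorFlow 2.x decorator that traces a Python function into a graph.

```python
import tensorflow as tf

a = tf.constant([[1.0, 2.0], [3.0, 4.0]])
b = tf.constant([[1.0, 0.0], [0.0, 1.0]])  # identity matrix

# Eager execution (the TensorFlow 2.x default): the operation runs
# immediately and the result can be inspected like any Python value.
eager_result = tf.matmul(a, b)

# Graph execution: tf.function traces the Python function into a graph
# on its first call and reuses that graph on later calls.
# (graph_matmul is a hypothetical name chosen for this sketch.)
@tf.function
def graph_matmul(x, y):
    return tf.matmul(x, y)

graph_result = graph_matmul(a, b)

print("Eager mode active:", tf.executing_eagerly())
print("Eager result:", eager_result.numpy())
print("Graph result:", graph_result.numpy())
```

Both calls return the same values; the decorated version simply gives TensorFlow the chance to optimize the computation as a whole.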

Sample Code

The following snippets demonstrate the structure and syntax of the two frameworks:

PyTorch Syntax

    import torch
    import torch.nn as nn
    import torch.optim as optim

    # Define a simple neural network
    class SimpleNet(nn.Module):
        def __init__(self):
            super(SimpleNet, self).__init__()
            self.fc1 = nn.Linear(6, 3)  # 6 input features, 3 output features
            self.fc2 = nn.Linear(3, 1)  # 3 input features, 1 output feature

        def forward(self, x):
            x = torch.relu(self.fc1(x))
            x = self.fc2(x)
            return x

    # Initialize the network, loss function, and optimizer
    net = SimpleNet()
    criterion = nn.MSELoss()
    optimizer = optim.SGD(net.parameters(), lr=0.01)

    # Dummy input and target
    inputs = torch.randn(1, 6)
    target = torch.randn(1, 1)

    # Forward pass
    output = net(inputs)
    loss = criterion(output, target)

    # Backward pass
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    print("Inputs (independent variables):", inputs)
    print("Target (dependent variable):", target)
    print("Output:", output)
    print("Loss:", loss.item())  # MSE loss

This basic artificial neural network is trained for one step (a forward pass and a backward pass) in PyTorch. Because the dummy dataset holds a single sample, that step also amounts to one epoch. PyTorch models operate on Torch tensors rather than NumPy arrays.
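
A more typical training run repeats the forward and backward pass over mini-batches for several epochs. The sketch below shows one way this might look; the toy dataset (32 random samples whose target is the sum of their features), the batch size, and the epoch count are all assumptions made for illustration.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)  # make the random data reproducible

# Hypothetical toy dataset: 32 samples, 6 features each;
# the target is simply the sum of the features.
features = torch.randn(32, 6)
targets = features.sum(dim=1, keepdim=True)
loader = DataLoader(TensorDataset(features, targets), batch_size=8, shuffle=True)

model = nn.Sequential(nn.Linear(6, 3), nn.ReLU(), nn.Linear(3, 1))
criterion = nn.MSELoss()
optimizer = optim.SGD(model.parameters(), lr=0.01)

epoch_losses = []
for epoch in range(5):                    # one epoch = one pass over all 32 samples
    running_loss = 0.0
    for batch_x, batch_y in loader:       # one step = one mini-batch of 8 samples
        optimizer.zero_grad()
        loss = criterion(model(batch_x), batch_y)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
    epoch_losses.append(running_loss / len(loader))
    print(f"Epoch {epoch + 1}: mean loss {epoch_losses[-1]:.4f}")
```

With 32 samples and a batch size of 8, each epoch consists of four optimizer steps.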

TensorFlow Syntax

import tensorflow as tf

# Define a simple neural network using Keras API

model = tf.keras.Sequential([

tf.keras.layers.Dense(3, activation='relu', input_shape=(6,)), # 6 input features, 3 output features

tf.keras.layers.Dense(1) # 3 input features, 1 output feature

])

# Compile the model

model.compile(optimizer='sgd', loss='mse')

# Dummy input and target

inputs = tf.random.normal([1, 6])

target = tf.random.normal([1, 1])

# Forward pass (calculate loss inside training function)

with tf.GradientTape() as tape:

output = model(inputs, training=True)

loss = tf.keras.losses.MeanSquaredError()(target, output)

# Backward pass (apply gradients)

gradients = tape.gradient(loss, model.trainable_variables)

tf.keras.optimizers.SGD(learning_rate=0.01).apply_gradients(zip(gradients, model.trainable_variables))

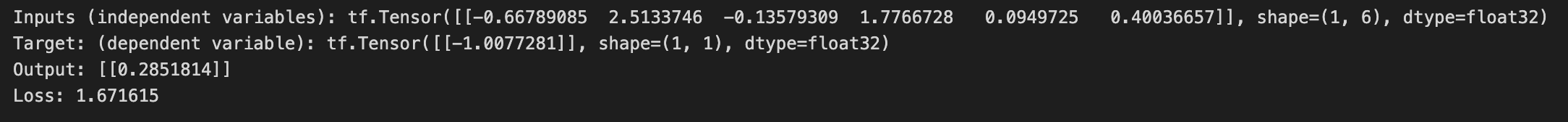

print("Inputs (independent variables):", inputs)

print("Target: (dependent variable):", target)

print("Output:", output.numpy())

print("Loss:", loss.numpy())Output:

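This is the basic code for a single training step of an artificial neural network in TensorFlow. It is meant only to demonstrate a few of the modules and the syntax.

Note that one forward pass and one backward pass make up a single training step. An epoch is one full pass over the training data, so the two coincide here only because the dataset contains a single sample.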
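
For everyday training, Keras can run the same loop through model.fit instead of a hand-written GradientTape step. The following is a rough sketch under assumed conditions: the toy dataset (32 random samples whose target is the sum of their features), the batch size, and the epoch count are invented for illustration.

```python
import numpy as np
import tensorflow as tf

# Hypothetical toy dataset: 32 samples, 6 features each;
# the target is simply the sum of the features.
rng = np.random.default_rng(0)
features = rng.normal(size=(32, 6)).astype("float32")
targets = features.sum(axis=1, keepdims=True)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(6,)),
    tf.keras.layers.Dense(3, activation="relu"),
    tf.keras.layers.Dense(1),
])
model.compile(optimizer=tf.keras.optimizers.SGD(learning_rate=0.01), loss="mse")

# fit() handles batching, the forward and backward passes, and the
# optimizer updates; each epoch is one pass over all 32 samples.
history = model.fit(features, targets, batch_size=8, epochs=5, verbose=0)
epoch_losses = history.history["loss"]

for i, value in enumerate(epoch_losses, start=1):
    print(f"Epoch {i}: loss {value:.4f}")
```

The explicit GradientTape form remains useful when a training step needs custom logic that fit does not cover.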

PyTorch vs TensorFlow

The table below compares PyTorch and TensorFlow across several aspects:

| Aspect | PyTorch | TensorFlow |
|---|---|---|
| Ease of Use | Intuitive | Complex |
| Developed by | Facebook’s AI Research lab | Google Brain |
| API level | Low level | High level and low level |
| Debugging | Easier with dynamic graphs | Improved with eager execution |
| Performance | Research-focused | Production-optimized |
| Deployment | TorchServe | TensorFlow Serving, Lite, JS |
| Visualization | Integrates with TensorBoard | TensorBoard |
| Mobile Support | Limited | TensorFlow Lite, JS |
| Community | Growing, academia-focused | Larger, industry-adopted |
| Graph Execution | Dynamic (define-by-run) | Eager by default; static graphs via tf.function |

GPU and Parallel Processing Comparison: TensorFlow vs PyTorch

Support for GPUs and parallel processing is an important factor when choosing a machine learning framework. The following table summarizes the parallelization capabilities of the two frameworks:

| Aspect | TensorFlow | PyTorch |
|---|---|---|
| Ease of Use | Built-in GPU support; tf.distribute for multi-GPU training | Easy GPU use with .cuda(); supports distributed training |
| Configuration | Requires CUDA/cuDNN; uses tf.device() | Requires CUDA/cuDNN; explicit device control |
| Performance | XLA compiler; supports mixed-precision training | Eager execution; mixed-precision with torch.cuda.amp |
| Parallel Processing | tf.data API; tf.distribute for GPU/TPU training | DataLoader supports parallel loading; dynamic graphs help custom tasks |
| Best For | Production, deployment, scalability, complex models | Research, custom models, prototyping, experimentation |
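
The explicit device control listed for PyTorch might look like the sketch below. It is an illustration under a few assumptions: it uses .to(device) rather than .cuda() so that the same code also runs on a machine without a GPU, it uses torch.autocast (available in PyTorch 1.10 and later) as the entry point to mixed precision, and the layer and batch sizes are arbitrary.

```python
import torch
import torch.nn as nn

# Pick the GPU when one is available, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
use_amp = device.type == "cuda"  # only enable mixed precision on the GPU

# The model and its inputs must live on the same device.
model = nn.Linear(6, 1).to(device)
batch = torch.randn(4, 6, device=device)

# Inference under autocast: on a GPU, eligible operations run in reduced
# precision; on the CPU the context is disabled and has no effect.
with torch.no_grad(), torch.autocast(device_type=device.type, enabled=use_amp):
    prediction = model(batch)

print("Running on:", device)
print("Prediction shape:", tuple(prediction.shape))
```

Keeping the device in a single variable means the rest of the script does not need to change when it moves between a laptop and a GPU server.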

Additional Considerations

Community and Support

- PyTorch has a strong presence in research communities, with many academic papers and courses built around it.

- TensorFlow has robust industrial support, extensive documentation, and numerous production use cases.

Performance

- TensorFlow’s eager execution offers immediate operation execution, simplifying debugging, but may be slower for complex models compared to its static graph mode.

- PyTorch’s dynamic computation graphs provide flexibility and ease of debugging but may consume more memory and miss the whole-graph optimizations that a static graph allows.

Ecosystem and Tools

- TensorFlow’s ecosystem is more extensive, with tools like TFX for end-to-end ML workflows and TensorBoard for visualization.

- PyTorch’s ecosystem is smaller but growing rapidly, with strong community contributions and tools like PyTorch Lightning for streamlined training.

Conclusion

We have looked at both frameworks, what they can do, and what using them looks like. The choice between PyTorch and TensorFlow for a project depends on your objectives. PyTorch offers a flexible dynamic computation graph and an approachable interface, which makes it well suited to research and rapid prototyping. TensorFlow, by contrast, is a good fit for large-scale production environments because of its extensive tooling and deployment options. Both frameworks continue to push the boundaries of what is possible in AI and ML. Understanding the strengths and weaknesses of each helps developers and researchers make a better-informed choice between them.

Frequently Asked Questions

A. Researchers tend to favor PyTorch because its dynamic computation graph makes it easy to try out new ideas. TensorFlow, on the other hand, is widely used in production environments because it is scalable and has good deployment support.

A. PyTorch uses an imperative programming paradigm, i.e., a define-by-run approach in which operations are defined as they are executed. TensorFlow originally used static computation graphs in TensorFlow 1.x but now defaults to eager execution in TensorFlow 2.x, so operations run immediately. TensorFlow 2.x still supports static graphs through tf.function.

A. In general, TensorFlow has a bigger and more established user community because it was released earlier by Google. PyTorch’s community, however, is growing quickly and has a particularly strong base among researchers.