When it comes to building better AI, the usual strategy is to make models bigger. But this approach has a major problem: it becomes incredibly expensive.

But, DeepSeek-V3.2-Exp took a different path…

Instead of just adding more power, they focused on working smarter. The result is a new kind of model that delivers top-tier performance for a fraction of the cost. By introducing their “sparse attention” mechanism, DeepSeek isn’t just tweaking the engine; it’s redesigning the fuel injection system for unprecedented efficiency.

Let’s break down exactly how they did it.

Table of contents

Highlights of the Update

- Adding Sparse Attention: The only architectural difference between the new V3.2 model and its predecessor (V3.1) is the introduction of DSA. This shows they focused all their effort on solving the efficiency problem.

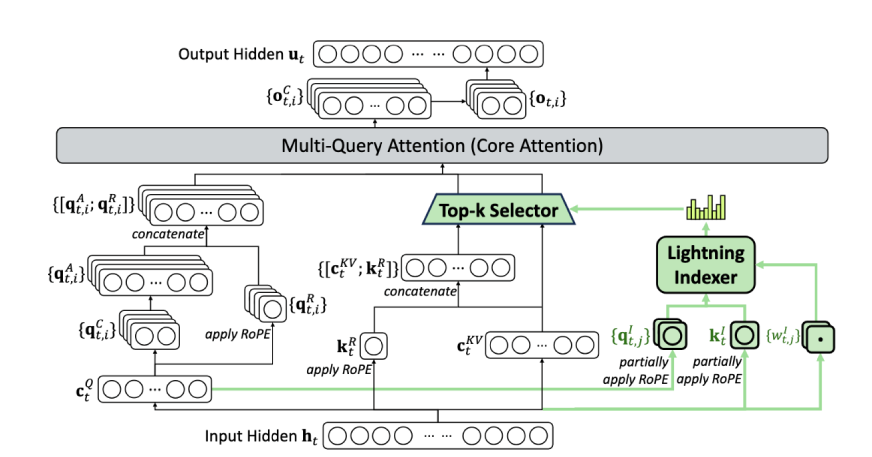

- A “Lightning Indexer”: DSA works by using a fast, lightweight component called a lightning indexer. This indexer quickly scans the text and picks out only the most important words for the model to focus on, ignoring the rest.

- A Massive Complexity Reduction: DSA changes the core computational problem from an exponentially difficult one

O(L²)to a much simpler, linear oneO(Lk). This is the mathematical secret behind the huge speed and cost improvements. - Built for Real Hardware: The success of DSA relies on highly optimized software designed to run perfectly on modern AI chips (like H800 GPUs). This tight integration between the smart algorithm and the hardware is what delivers the final, dramatic gains.

Read about the Previous update here: Deepseek-V3.1-Terminus!

DeepSeek Sparse Attention (DSA)

At the heart of every LLM is the “attention” mechanism: the system that determines how important each word in a sentence is to every other word.

The problem?

Traditional “dense” attention is wildly inefficient. Its computational cost scales quadratically (O(L²)), meaning that doubling the text length quadruples the computation and cost.

DeepSeek Sparse Attention (DSA) is the solution to this bloat. It doesn’t look at everything; it smartly selects what to focus on. The system is composed of two key parts:

- The Lightning Indexer: This is a lightweight, high-speed scanner. For any given word (a “query token”), it rapidly scores all the preceding words to determine their relevance. Crucially, this indexer is designed for speed: it uses a small number of heads and can run in FP8 precision, making its computational footprint remarkably small.

- Fine-Grained Token Selection: Once the indexer has scored everything, DSA doesn’t just grab blocks of text. It performs a precise, “fine-grained” selection, plucking only the top-K most relevant tokens from across the entire document. The main attention mechanism then only processes this carefully selected, sparse set.

The Result: DSA reduces the core attention complexity from O(L²) to O(Lk), where k is a fixed number of selected tokens. This is the mathematical foundation for the massive efficiency gains. While the lightning indexer itself still has O(L²) complexity, it’s so lightweight that the net effect is still a dramatic reduction in total computation.

The Training Pipeline: A Two-Stage Tune-Up

You can’t just slap a new attention mechanism onto a billion-parameter model and hope it works. DeepSeek employed a meticulous, two-stage training process to integrate DSA seamlessly.

- Stage 1: Continued Pre-Training (The Warm-Up)

- Dense Warm-up (2.1B tokens): Starting from the V3.1-Terminus checkpoint, DeepSeek first “warmed up” the new lightning indexer. They kept the main model frozen and ran a short training stage where the indexer learned to predict the output of the full, dense attention mechanism. This aligned the new indexer with the model’s existing knowledge.

- Sparse Training (943.7B tokens): This is where the real magic happened. After the warm-up, DeepSeek switched on the full sparse attention, selecting the top 2048 key-value tokens for each query. For the first time, the entire model was trained to operate with this new, selective vision, learning to rely on the sparse selections rather than the dense whole.

- Stage 2: Post-Training (The Finishing School)

To ensure a fair comparison, DeepSeek used the exact same post-training pipeline as V3.1-Terminus. This rigorous approach proves that any performance differences are due to DSA, not changes in training data.- Specialist Distillation: They created five powerhouse specialist models (for Math, Coding, Reasoning, Agentic Coding, and Agentic Search) using heavy-duty Reinforcement Learning. The knowledge from these experts was then distilled into the final V3.2 model.

- Mixed RL with GRPO: Instead of a multi-stage process, they used Group Relative Policy Optimization (GRPO) in a single, blended stage. The reward function was carefully engineered to balance key trade-offs:

- Length vs. Accuracy: Penalizing unnecessarily long answers.

- Language Consistency vs. Accuracy: Ensuring responses remained coherent and human-like.

- Rule-Based & Rubric-Based Rewards: Using automated checks for reasoning/agent tasks and tailored rubrics for general tasks.

The Hardware Secret Sauce: Optimized Kernels

A brilliant algorithm is useless if it runs slowly on actual hardware. DeepSeek’s commitment to efficiency shines here with deeply optimized, open-source code.

The model leverages specialized kernels like FlashMLA, which are custom-built to run the complex MLA and DSA operations with extreme efficiency on modern Hopper GPUs (like the H800). These optimizations are publicly available in pull requests to repositories like DeepGEMM, FlashMLA, and tilelang, allowing the model to achieve near-theoretical peak memory bandwidth (up to 3000 GB/s) and compute performance. This hardware-aware design is what transforms the theoretical efficiency of DSA into tangible, real-world speed.

Performance & Cost – A New Balance

So, what’s the final outcome of this engineering marvel? The data reveals a clear and compelling story.

Cost Reduction

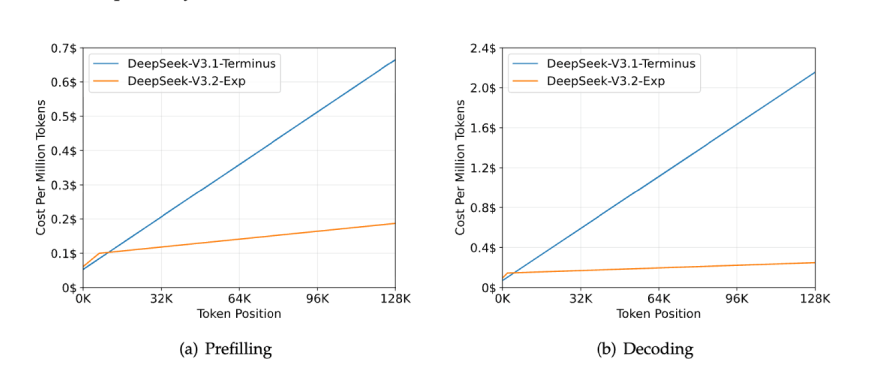

The most immediate impact is on the bottom line. DeepSeek announced a >50% reduction in API pricing. The technical benchmarks are even more striking:

- Inference Speed: 2–3x faster on long contexts.

- Memory Usage: 30–40% lower.

- Training Efficiency: 50% faster.

The real-world inference cost for decoding a 128K context window plummets to an estimated $0.25, compared to $2.20 for dense attention, making it 10x cheaper.

Better Performance

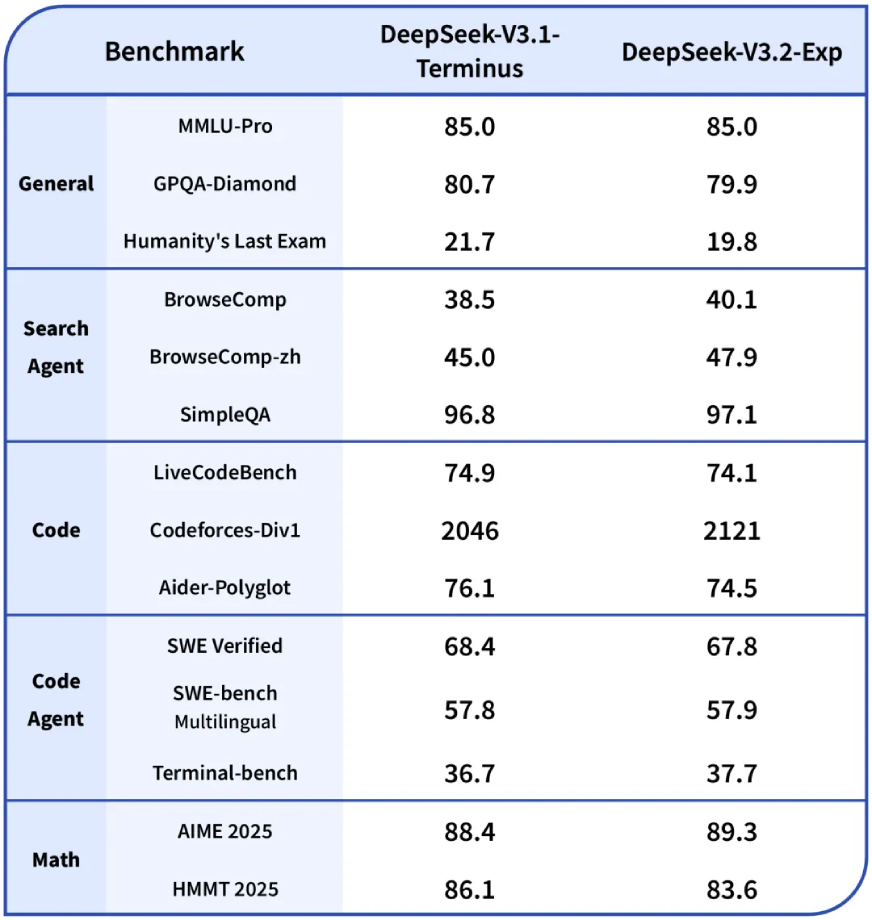

On aggregate, V3.2-Exp maintains performance parity with its predecessor. However, a closer look reveals a logical trade-off:

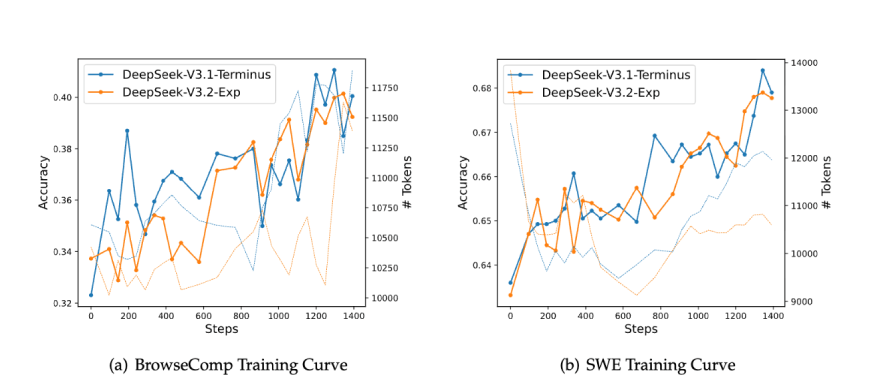

- The Wins: The model shows significant gains in coding (Codeforces) and agentic tasks (BrowseComp). This makes perfect sense: code and tool-use often contain redundant information, and DSA’s ability to filter noise is a direct advantage.

- The Trade-Offs: There are minor regressions in a few ultra-complex, abstract reasoning benchmarks (like GPQA Diamond and HMMT). The hypothesis is that these tasks rely on connecting very subtle, long-range dependencies that the current DSA mask might occasionally miss.

Deepseek-V3.1-Terminus vs DeepSeek-V3.2-Exp

Let’s Try the New DeepSeek-V3.2-Exp

The tasks I will be doing here will be same as we did in one of our previous articles on Deepseek-V3.1-Terminus. This will help in identifying how the new update is better.

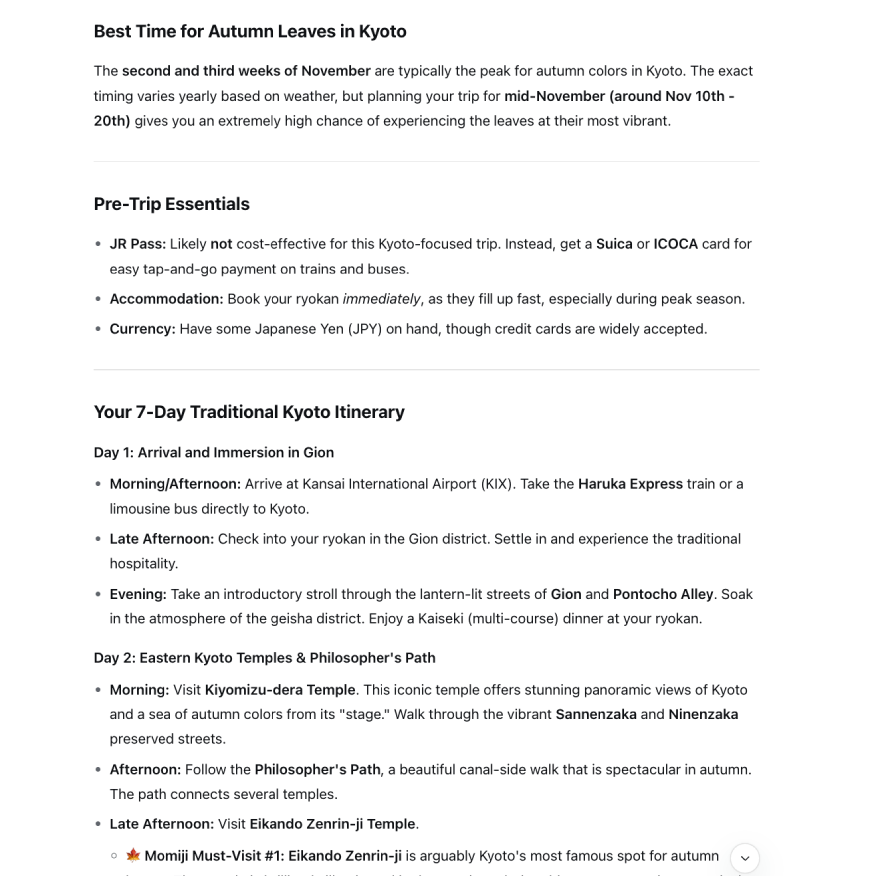

Task 1: Travel Plan

I need to plan a 7-day trip to Kyoto, Japan, for mid-November. The itinerary should focus on traditional culture, including temples, gardens, and tea ceremonies. Find the best time to see the autumn leaves, a list of three must-visit temples for ‘Momiji’ (autumn leaves), and a highly-rated traditional tea house with English-friendly services. Also, find a well-reviewed ryokan (traditional Japanese inn) in the Gion district. Organize all the information into a clear, day-by-day itinerary.

Output:

You can view the full output here.

Deepseek-V3.1-Terminus vs DeepSeek-V3.2-Exp Output Comparison

| Feature | DeepSeek-V3.1-Terminus | DeepSeek-V3.2-Exp | Winner |

|---|---|---|---|

| Structure & Organization | Single continuous paragraph | Well-structured with clear sections and bullet points | V3.2-Exp |

| Command Presentation | Commands embedded in running text | Dedicated code blocks with proper formatting | V3.2-Exp |

| Completeness & Depth | Basic explanation only | Includes practical examples and use cases | V3.2-Exp |

| Beginner Friendliness | Assumes prior knowledge | Explains concepts clearly for newcomers | V3.2-Exp |

Task 2: Coding Agent

I need a Python script that scrapes a public list of the top 100 films of all time from a website (you can choose a reliable source like IMDb, Rotten Tomatoes, or a well-known magazine’s list). The script should then save the film titles, release years, and a brief description for each movie into a JSON file. Include error handling for network issues or changes in the website’s structure. Can you generate the full script and explain each step of the process?

Output:

class IMDbTop100Scraper:

def __init__(self):

self.base_url = "https://www.imdb.com/chart/top"

self.headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36'

}

self.movies = []

def fetch_page(self, url: str) -> Optional[BeautifulSoup]:

"""

Fetch the webpage and return BeautifulSoup object.

Handles network errors and retries.

"""

try:

response = requests.get(url, headers=self.headers, timeout=10)

response.raise_for_status() # Raises an HTTPError for bad responses

# Check if we got a valid HTML response

if 'text/html' not in response.headers.get('content-type', ''):

raise ValueError("Received non-HTML response")

return BeautifulSoup(response.content, 'html.parser')

except requests.exceptions.RequestException as e:

print(f"Network error occurred: {e}")

return None

except Exception as e:

print(f"Unexpected error while fetching page: {e}")

return None

def parse_movie_list(self, soup: BeautifulSoup) -> List[Dict]:

"""

Parse the main movie list page to extract titles and years.

"""

movies = []

try:

# IMDb's top chart structure - this selector might need updating

movie_elements = soup.select('li.ipc-metadata-list-summary-item')

if not movie_elements:

# Alternative selector if the primary one fails

movie_elements = soup.select('.cli-children')

if not movie_elements:

raise ValueError("Could not find movie elements on the page")

for element in movie_elements[:100]: # Limit to top 100

movie_data = self.extract_movie_data(element)

if movie_data:

movies.append(movie_data)

except Exception as e:

print(f"Error parsing movie list: {e}")

return moviesDeepseek-V3.1-Terminus vs DeepSeek-V3.2-Exp Output Comparison

| Feature | DeepSeek-V3.1-Terminus | DeepSeek-V3.2-Exp | Winner |

|---|---|---|---|

| Structure & Presentation | Single dense paragraph | Clear headings, bullet points, summary table | V3.2-Exp |

| Safety & User Guidance | No safety warnings | Bold warning about unstaged changes loss | V3.2-Exp |

| Completeness & Context | Basic two methods only | Adds legacy `git checkout` method and summary table | V3.2-Exp |

| Actionability | Commands embedded in text | Dedicated command blocks with explicit flag explanations | V3.2-Exp |

Also Read: Evolution of DeepSeek: How it Became a Global AI Game-Changer!

Conclusion

DeepSeek-V3.2-Exp is more than a model; it’s a statement. It proves that the next great leap in AI won’t necessarily be a leap in raw power, but a leap in efficiency. By surgically attacking the computational waste in traditional transformers, DeepSeek has made long-context, high-volume AI applications financially viable for a much broader market.

The “Experimental” tag is a candid admission that this is a work in progress, particularly in balancing performance across all tasks. But for the vast majority of enterprise use cases, where processing entire codebases, legal documents, and datasets is the goal. DeepSeek hasn’t just released a new model; it has started a new race.

To know more about the model, checkout this link.