Just as you wouldn’t teach a child to ride a bike on a busy highway, AI agents need controlled environments to learn and improve. Whether the agent is a self-driving car or a chatbot, its environment shapes how it perceives the world, learns from experience, and makes decisions. Understanding these environments is essential to building AI systems that work reliably. In this article, we explore the different types of environments in AI and why they matter.

Table of contents

- What is an Environment in AI
- Types of Environments in AI
  - Fully Observable vs Partially Observable Environments
  - Deterministic vs Stochastic Environments
  - Competitive vs Collaborative Environments
  - Single-Agent vs Multi-Agent Environments
  - Static vs Dynamic Environments
  - Discrete vs Continuous Environments
  - Episodic vs Sequential Environments
  - Known vs Unknown Environments
- Why Environment Types Matter for AI Development
- Conclusion
- Frequently Asked Questions

What is an Environment in AI

In AI, an environment is the stage on which an AI agent performs its role. Think of it as the complete ecosystem surrounding an intelligent system: everything the agent can sense, interact with, and learn from. An environment is the collection of all external factors and conditions that an AI agent must navigate to achieve its goal.

The agent interacts with this environment through two critical mechanisms: sensors and actuators. Sensors are the agent’s eyes and ears: they gather information about the current state of the environment and feed it into the agent’s decision-making system. Actuators, on the other hand, are the agent’s hands and voice: they carry out the agent’s decisions and produce outputs that directly affect the environment.
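The sense-decide-act cycle can be sketched as a simple loop. This is a minimal illustration, not a standard API: the `ThermostatEnv` environment, its `sense`/`act` methods, and the `ThermostatAgent` policy are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class ThermostatEnv:
    """Hypothetical environment: a room whose temperature the agent controls."""
    temperature: float = 15.0

    def sense(self) -> float:
        # Sensor: report the current state (here, the room temperature).
        return self.temperature

    def act(self, heater_on: bool) -> None:
        # Actuator: the agent's decision changes the environment.
        self.temperature += 1.0 if heater_on else -0.5


class ThermostatAgent:
    """Hypothetical agent that turns the heater on below a target temperature."""

    def __init__(self, target: float = 20.0) -> None:
        self.target = target

    def decide(self, observation: float) -> bool:
        return observation < self.target


def run(env: ThermostatEnv, agent: ThermostatAgent, steps: int) -> list[float]:
    history = []
    for _ in range(steps):
        observation = env.sense()          # sensors gather input
        action = agent.decide(observation)  # decision-making system
        env.act(action)                     # actuators affect the environment
        history.append(env.temperature)
    return history


print(run(ThermostatEnv(), ThermostatAgent(), steps=8))
```

The temperature climbs to the target and then hovers around it, because every new sensor reading feeds back into the next decision.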

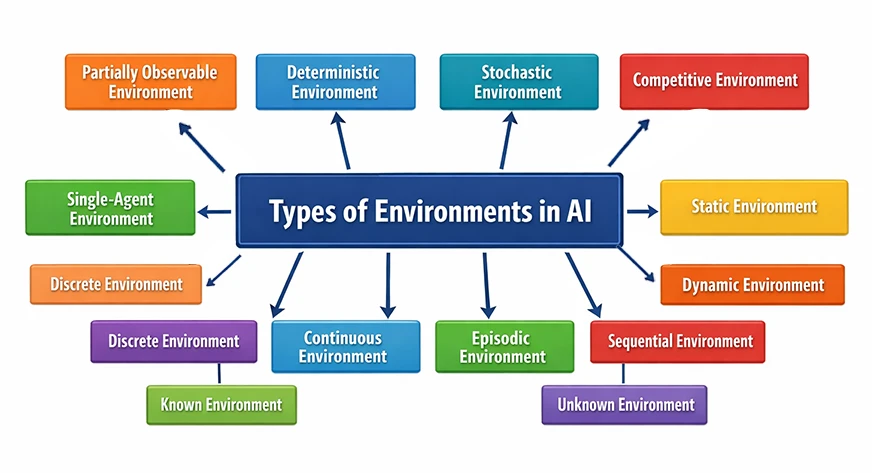

Environment types come in contrasting pairs: fully vs partially observable, deterministic vs stochastic, static vs dynamic, and so on. Each property has an opposite that is also encountered in practice, so the types below are presented side by side.

Types of Environments in AI

1. Fully Observable vs Partially Observable Environments

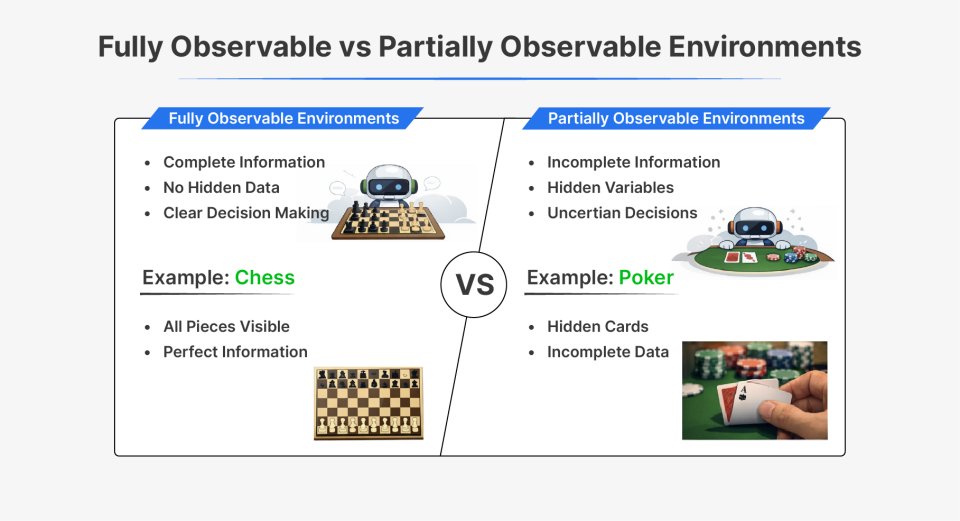

Fully observable environments are those where the AI agent has complete visibility into the current state of the environment. Every piece of information needed to make an informed decision is readily available to the agent through its sensors. There are no hidden surprises or missing pieces of the puzzle.

A partially observable environment is the opposite: the agent has only incomplete information about the environment’s current state. Crucial details are hidden, which makes decision-making harder because the agent must act under uncertainty and infer what it cannot see.

| Aspect | Fully Observable | Partially Observable |
|---|---|---|
| State visibility | Complete access to environment state | Incomplete or hidden information |
| Decision certainty | High | Low, requires inference |
| Example | Chess | Poker |
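In a partially observable environment, the agent cannot read the state directly, so it typically maintains a belief: a probability distribution over the possible hidden states, updated after each noisy observation. The sketch below applies a textbook Bayes update to a hypothetical two-state world (a door that is either `open` or `closed` and does not change between readings), with an assumed sensor accuracy of 80%; the numbers are illustrative only.

```python
def update_belief(belief: dict[str, float], observation: str,
                  sensor_accuracy: float = 0.8) -> dict[str, float]:
    """Bayes update of P(state) given one noisy observation.

    Assumes a hypothetical sensor that reports the true state with probability
    `sensor_accuracy` and the other state otherwise.
    """
    unnormalized = {}
    for state, prior in belief.items():
        likelihood = sensor_accuracy if observation == state else 1 - sensor_accuracy
        unnormalized[state] = likelihood * prior
    total = sum(unnormalized.values())
    return {state: p / total for state, p in unnormalized.items()}


# Fully observable: the agent would simply read the state.
# Partially observable: it starts uncertain and infers from repeated readings.
belief = {"open": 0.5, "closed": 0.5}
for reading in ["open", "open", "closed", "open"]:
    belief = update_belief(belief, reading)
    print(reading, {s: round(p, 3) for s, p in belief.items()})
```

After three "open" readings and one "closed" reading, the belief that the door is open settles at 16/17 (about 0.94): confident, but never certain.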

2. Deterministic vs Stochastic Environments

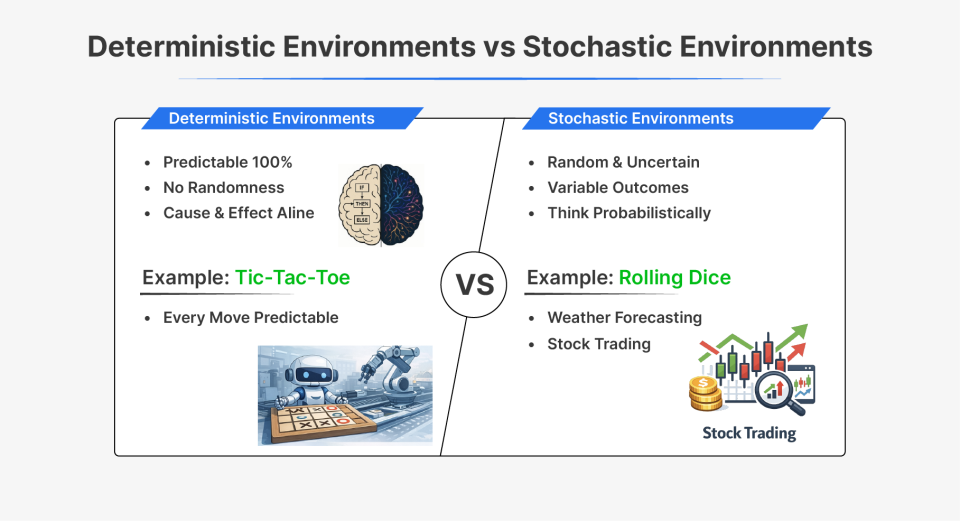

Deterministic environments are entirely predictable. When an agent takes an action in a given state, the outcome is always the same and can be predicted with 100% certainty. There is no randomness or variability; cause and effect are perfectly correlated.

Stochastic environments introduce randomness and uncertainty. The same action taken under identical conditions might produce different outcomes due to random factors. This requires agents to think probabilistically and adapt to unexpected results.

| Aspect | Deterministic | Stochastic |
|---|---|---|
| Outcome predictability | Fully predictable | Involves randomness |
| Same action result | Always same | Can differ |
| Example | Tic-Tac-Toe | Stock market |
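The difference shows up in the transition function, which maps a state and an action to the next state. In the hypothetical sketch below, a robot moves along a line: in the deterministic version a move always succeeds, while the stochastic version assumes a 20% chance (an arbitrary illustrative value) that the wheels slip and the robot stays put. A seeded `random.Random` keeps the stochastic run reproducible.

```python
import random


def step_deterministic(position: int, move: int) -> int:
    # Same state + same action -> always the same next state.
    return position + move


def step_stochastic(position: int, move: int, rng: random.Random,
                    slip_prob: float = 0.2) -> int:
    # Same state + same action -> the outcome can differ between calls.
    if rng.random() < slip_prob:
        return position  # wheels slipped: no movement
    return position + move


rng = random.Random(42)
print({step_deterministic(0, 1) for _ in range(1000)})  # always {1}
outcomes = [step_stochastic(0, 1, rng) for _ in range(10_000)]
print(set(outcomes))                  # both 0 (slipped) and 1 (moved) occur
print(sum(outcomes) / len(outcomes))  # close to the expected value of 0.8
```

An agent in the stochastic case therefore plans around the expected result (here 0.8 steps per move) rather than a guaranteed one.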

3. Competitive vs Collaborative Environments

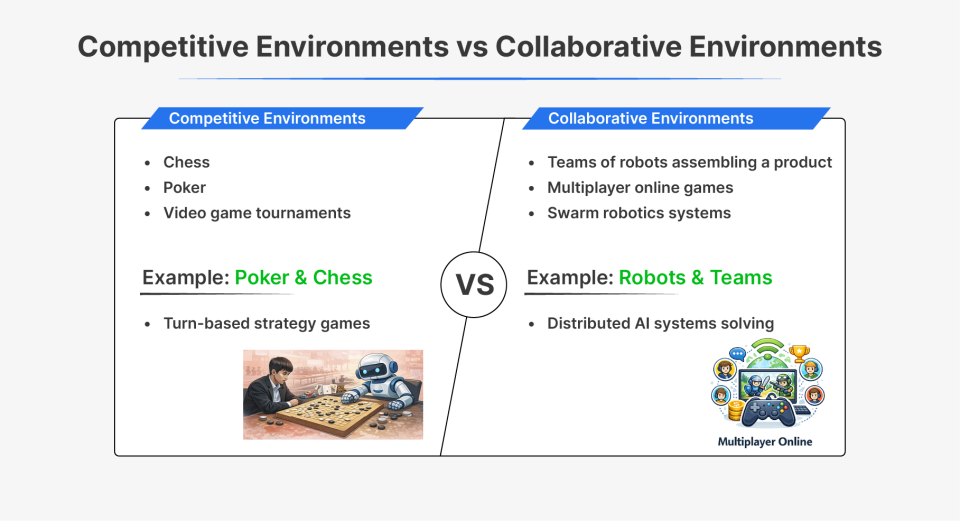

Competitive environments feature agents working against each other, often with opposing goals. In the purest case, such as chess, when one agent wins the other loses: a zero-sum dynamic where success is relative.

Collaborative environments feature agents working toward shared goals. Success is measured by collective achievement rather than individual wins, and every agent benefits from the cooperation.

| Aspect | Competitive | Collaborative |
|---|---|---|
| Agent goals | Conflicting | Shared |
| Outcome nature | Zero-sum | Mutual benefit |
| Example | Chess | Robot teamwork |
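A payoff table from game theory makes the distinction concrete: each cell lists the rewards both agents receive for a pair of actions. In a zero-sum game the rewards in every cell add up to zero; in a fully cooperative game both agents always receive the same reward. Matching pennies and the coordination game below are standard textbook examples; the helper functions are written only for this illustration.

```python
# payoffs[(action_a, action_b)] = (reward_a, reward_b)
MATCHING_PENNIES = {  # competitive: A wins on a match, B wins on a mismatch
    ("heads", "heads"): (1, -1), ("heads", "tails"): (-1, 1),
    ("tails", "heads"): (-1, 1), ("tails", "tails"): (1, -1),
}
COORDINATION = {  # collaborative: both gain only when they pick the same plan
    ("left", "left"): (2, 2), ("left", "right"): (0, 0),
    ("right", "left"): (0, 0), ("right", "right"): (2, 2),
}


def is_zero_sum(game: dict) -> bool:
    """True if one agent's gain is exactly the other's loss in every outcome."""
    return all(a + b == 0 for a, b in game.values())


def is_fully_cooperative(game: dict) -> bool:
    """True if both agents always receive the same reward (a shared goal)."""
    return all(a == b for a, b in game.values())


for name, game in [("matching pennies", MATCHING_PENNIES),
                   ("coordination", COORDINATION)]:
    print(name, "zero-sum:", is_zero_sum(game),
          "cooperative:", is_fully_cooperative(game))
```

Many real environments sit between these two extremes, with partly aligned and partly conflicting rewards.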

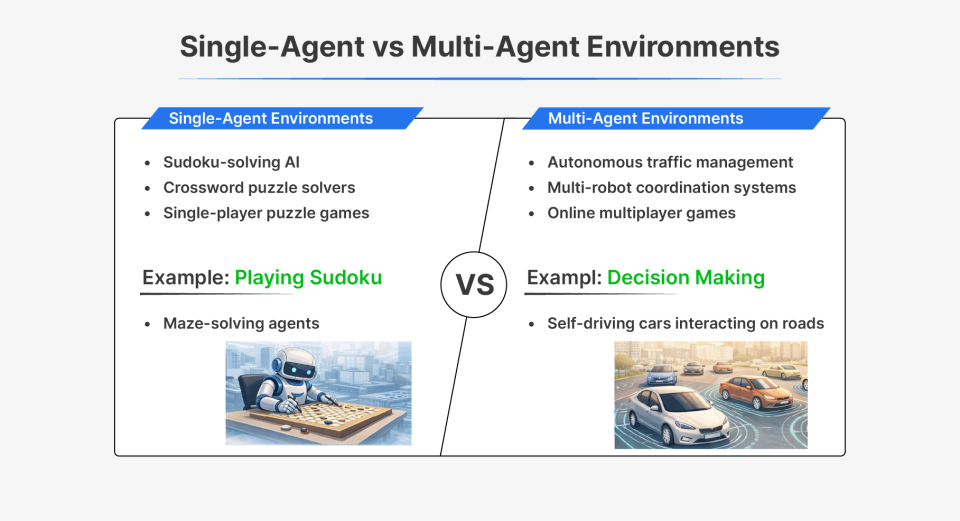

4. Single-Agent vs Multi-Agent Environments

A single-agent environment involves only one AI agent making decisions and taking actions. The complexity comes from the environment itself, not from interactions with other agents.

Multi-agent environments involve multiple AI agents, or a mix of AI and human agents, operating simultaneously, each making decisions and influencing the overall system. This increases complexity because agents must consider not just the environment but also other agents’ behaviour and strategies.

| Aspect | Single-Agent | Multi-Agent |
|---|---|---|
| Number of agents | One | Multiple |
| Interaction complexity | Low | High |
| Example | Sudoku solver | Autonomous traffic |
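Structurally, the difference often appears in the environment’s step function: a single-agent environment takes one action, while a multi-agent environment takes one action per agent and resolves them together, so each agent’s result depends on what the others chose. The intersection below is a hypothetical toy model of the "autonomous traffic" example, not a real traffic simulator; its collision rule is an assumption.

```python
def single_agent_step(position: int, action: str) -> int:
    # Only one decision-maker: the result depends on this action alone.
    return position + 1 if action == "go" else position


def multi_agent_step(positions: dict[str, int],
                     actions: dict[str, str]) -> tuple[dict[str, int], bool]:
    """Cars approach a shared intersection; two entering at once collide.

    Each car's outcome depends on the joint action of all agents.
    """
    movers = [car for car, action in actions.items() if action == "go"]
    collision = len(movers) > 1  # hypothetical rule: only one car may enter
    new_positions = dict(positions)
    if not collision:
        for car in movers:
            new_positions[car] += 1
    return new_positions, collision


start = {"car_a": 0, "car_b": 0}
print(multi_agent_step(start, {"car_a": "go", "car_b": "wait"}))  # car_a advances
print(multi_agent_step(start, {"car_a": "go", "car_b": "go"}))    # collision
```

The same "go" action from `car_a` succeeds or fails depending on `car_b`’s choice, which is why agents in multi-agent settings have to reason about each other’s strategies.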

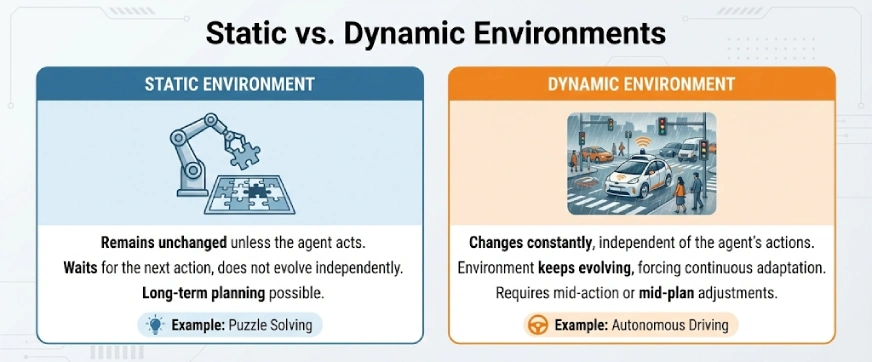

5. Static vs Dynamic Environments

Static environments remain unchanged unless the agent acts. Once an action is completed, the environment waits for the next action; it doesn’t evolve on its own.

Dynamic environments change constantly, independent of the agent’s actions. The environment keeps evolving, often forcing the agent to adapt mid-action or mid-plan.

| Aspect | Static | Dynamic |
|---|---|---|
| Environment change | Only after agent acts | Changes independently |
| Planning style | Long-term planning | Continuous adaptation |
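The distinction comes down to whether the world has its own clock. In the hypothetical sketch below, a puzzle board changes only when the agent moves, while a traffic light advances every time step whether or not the agent acts, so an observation made before planning may be stale once planning finishes. The class names and the three-phase light cycle are assumptions for illustration.

```python
class StaticPuzzle:
    """Changes only in response to the agent's move."""

    def __init__(self) -> None:
        self.board = [3, 1, 2]

    def tick(self) -> None:
        pass  # nothing happens while the agent is thinking

    def apply(self, i: int, j: int) -> None:
        self.board[i], self.board[j] = self.board[j], self.board[i]


class DynamicTrafficLight:
    """Evolves on its own every time step, independent of the agent."""

    PHASES = ["green", "yellow", "red"]

    def __init__(self) -> None:
        self.t = 0

    def tick(self) -> None:
        self.t += 1  # time passes whether or not the agent acts

    @property
    def phase(self) -> str:
        return self.PHASES[self.t % len(self.PHASES)]


puzzle, light = StaticPuzzle(), DynamicTrafficLight()
seen_phase = light.phase              # agent observes "green" and starts planning
for _ in range(2):                    # ...planning takes two time steps
    puzzle.tick()
    light.tick()
print(puzzle.board)                   # unchanged: still [3, 1, 2]
print(seen_phase, "->", light.phase)  # the light has moved on: green -> red
puzzle.apply(0, 1)                    # the puzzle changes only when the agent acts
print(puzzle.board)                   # now [1, 3, 2]
```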

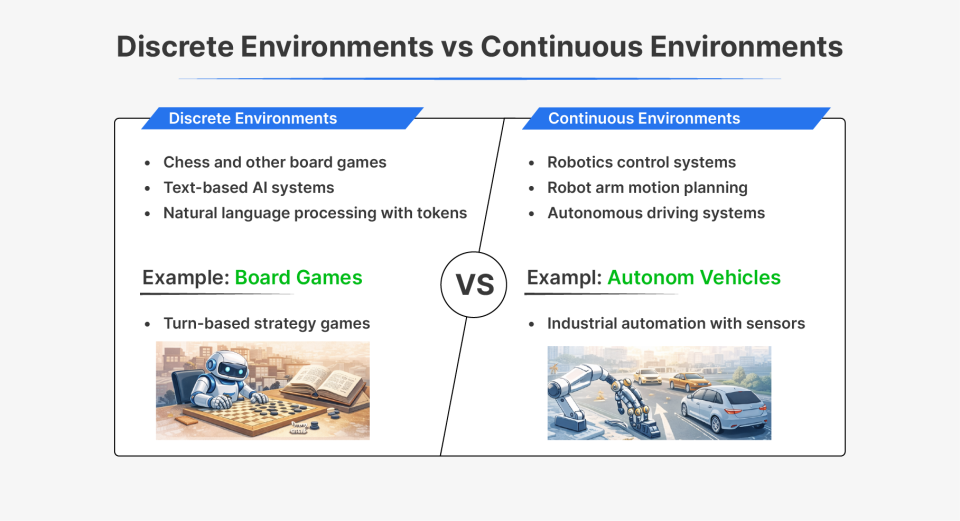

6. Discrete vs Continuous Environments

Discrete environments have finite, well-defined states and actions. Things exist in distinct, separate categories with no values in between.

Continuous environments have infinite or near-infinite states and actions. Values vary smoothly along a spectrum rather than jumping between distinct points.

| Aspect | Discrete | Continuous |
|---|---|---|
| State space | Finite | Infinite |
| Action space | Countable | Continuous range |
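In code, the distinction usually appears in how the action (or state) space is represented: a discrete space can be listed item by item, while a continuous space is described by bounds and sampled from. Reinforcement-learning libraries such as Gymnasium provide dedicated space types for this (for example `Discrete` and `Box`); the sketch below deliberately sticks to the standard library, and its grid moves and steering/throttle limits are hypothetical.

```python
import random

# Discrete: a finite, enumerable set of actions (e.g., moves on a grid).
GRID_ACTIONS = ["up", "down", "left", "right"]

# Continuous: actions are real numbers within bounds (hypothetical limits).
STEERING_RANGE = (-30.0, 30.0)  # degrees
THROTTLE_RANGE = (0.0, 1.0)     # fraction of full power


def sample_discrete(rng: random.Random) -> str:
    return rng.choice(GRID_ACTIONS)


def sample_continuous(rng: random.Random) -> tuple[float, float]:
    steering = rng.uniform(*STEERING_RANGE)
    throttle = rng.uniform(*THROTTLE_RANGE)
    return steering, throttle


rng = random.Random(0)
print(len(GRID_ACTIONS), "possible grid actions")  # can be listed exhaustively
print(sample_discrete(rng))
print(sample_continuous(rng))  # any pair of values within the bounds
```

An agent can evaluate every grid action one by one, but it cannot enumerate every steering angle, which is why continuous control typically relies on function approximation or on discretizing the range.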

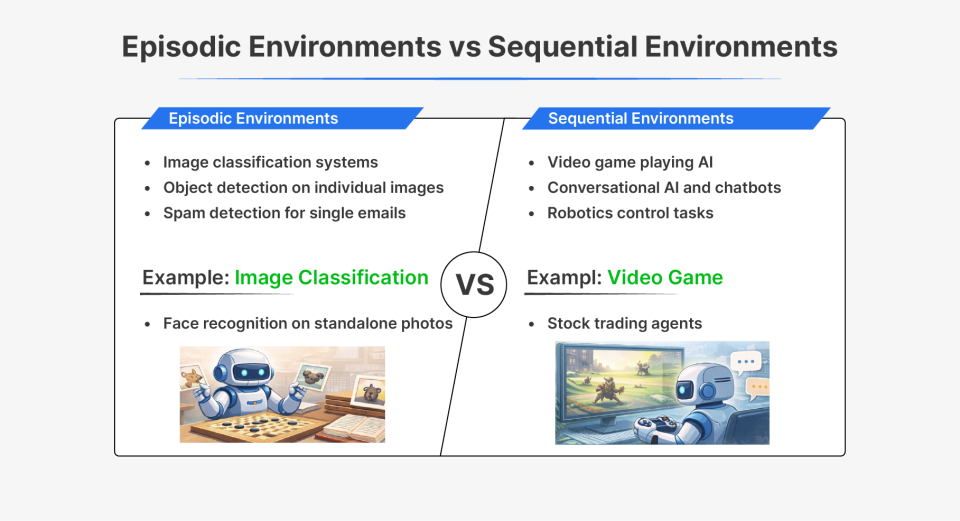

7. Episodic vs Sequential Environments

Episodic environments break the agent’s interaction into independent episodes, or isolated instances. What happens in one episode doesn’t significantly affect the next; each episode effectively starts fresh.

In sequential environments, the current decision directly influences future situations. The agent must think long-term, understanding that today’s choices create tomorrow’s challenges and opportunities.

| Aspect | Episodic | Sequential |
|---|---|---|
| Past dependence | None | Strong |
| Planning horizon | Short | Long-term |
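The contrast can be sketched with two hypothetical tasks. In the episodic one (a classifier labelling independent items), each decision is scored on its own and nothing carries over. In the sequential one (a simple shop inventory), today’s order changes tomorrow’s stock, so an early mistake keeps costing later. Both tasks and the inventory rules are assumptions made for illustration.

```python
def episodic_rewards(labels: list[str], predictions: list[str]) -> list[int]:
    # Each episode is scored independently; earlier answers don't affect later ones.
    return [1 if p == y else 0 for y, p in zip(labels, predictions)]


def sequential_rewards(orders: list[int], demand: list[int],
                       stock: int = 5) -> list[int]:
    # Stock carries over between days, so each order shapes future days.
    rewards = []
    for ordered, wanted in zip(orders, demand):
        stock += ordered
        sold = min(stock, wanted)
        stock -= sold
        rewards.append(sold)  # reward = units sold that day
    return rewards


print(episodic_rewards(["cat", "dog", "cat"], ["cat", "cat", "cat"]))  # [1, 0, 1]
print(sequential_rewards(orders=[0, 0, 5], demand=[5, 5, 5]))          # [5, 0, 5]
print(sequential_rewards(orders=[5, 5, 5], demand=[5, 5, 5]))          # [5, 5, 5]
```

Skipping the first order does not hurt day one, but it leaves the shelf empty on day two: the cost of a decision shows up later, which is exactly what makes sequential environments harder to plan for.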

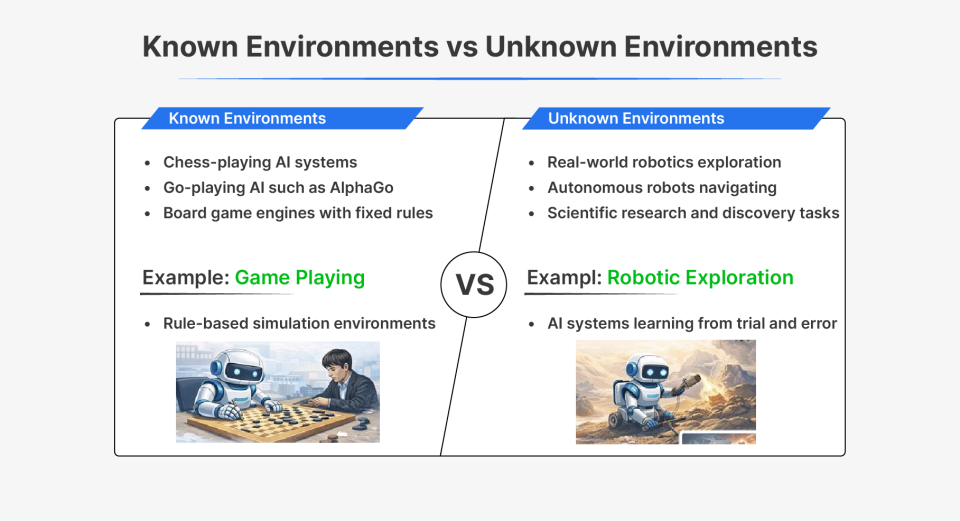

8. Known vs Unknown Environments

Known environments are those where the agent has a complete model or understanding of how the environment works: the rules are known and fixed.

Unknown environments are those where the agent must learn how the environment works through exploration and experience, discovering rules, patterns, and cause-and-effect relationships as it goes.

| Aspect | Known | Unknown |
|---|---|---|
| Environment model | Fully specified | Learned through interaction |
| Learning requirement | Minimal | Essential |
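When the environment is known, the agent can consult its transition model directly. When it is unknown, one common approach (used in model-based reinforcement learning) is to estimate the model from experience by counting observed outcomes. The sketch below does this for a hypothetical "slippery move" whose true success rate (0.7, an assumed value) is hidden inside the simulator and not available to the agent.

```python
import random
from collections import Counter

TRUE_SUCCESS_PROB = 0.7  # hidden from the agent in the unknown case

# Known environment: the model is handed to the agent up front.
known_model = {"moved": 0.7, "slipped": 0.3}


def environment_step(rng: random.Random) -> str:
    """Hypothetical simulator: the move either succeeds or slips."""
    return "moved" if rng.random() < TRUE_SUCCESS_PROB else "slipped"


def estimate_model(num_trials: int, rng: random.Random) -> dict[str, float]:
    # Unknown environment: explore, count outcomes, and turn counts into probabilities.
    counts = Counter(environment_step(rng) for _ in range(num_trials))
    return {outcome: n / num_trials for outcome, n in counts.items()}


rng = random.Random(7)
for trials in (10, 100, 10_000):
    print(trials, estimate_model(trials, rng))  # estimates tend toward known_model
```

With only a handful of trials the estimate can be far off; the learning cost that a known environment avoids is precisely this exploration.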

Why Environment Types Matter for AI Development

Understanding environment types directly influences how you build and train AI systems.

- Algorithm selection: Deterministic environments allow exact algorithms; stochastic ones need probabilistic approaches.

- Training strategy: Episodic environments allow independent training samples; sequential ones need approaches that preserve history and learn patterns over time.

- Scalability: Single-agent discrete environments are simpler to scale than multi-agent continuous ones.

- Real-world testing: Simulated environments that accurately capture the target environment’s characteristics are crucial for safe testing before deployment in the real world.
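One practical habit is to write down an environment’s profile along these dimensions before choosing an approach. The sketch below encodes the chess and self-driving examples from this article as a hypothetical `EnvironmentProfile` dataclass and maps a few properties to the considerations listed above. The property values are a rough classification of our own, and the mapping is a simplified rule of thumb, not an established algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentProfile:
    name: str
    fully_observable: bool
    deterministic: bool
    multi_agent: bool
    continuous: bool
    sequential: bool
    known: bool


def considerations(env: EnvironmentProfile) -> list[str]:
    """Rule-of-thumb notes derived from the profile (illustrative only)."""
    notes = []
    if not env.fully_observable:
        notes.append("track a belief over hidden state")
    notes.append("exact algorithms apply" if env.deterministic
                 else "plan with probabilities and expected outcomes")
    if env.sequential:
        notes.append("optimize long-term return, not single steps")
    if env.multi_agent:
        notes.append("model other agents' behaviour")
    if env.continuous:
        notes.append("use function approximation or discretize")
    if not env.known:
        notes.append("learn the dynamics through exploration, in simulation first")
    return notes


# Hypothetical classifications of the article's two running examples.
chess = EnvironmentProfile("chess", fully_observable=True, deterministic=True,
                           multi_agent=True, continuous=False,
                           sequential=True, known=True)
driving = EnvironmentProfile("self-driving car", fully_observable=False,
                             deterministic=False, multi_agent=True,
                             continuous=True, sequential=True, known=False)

for env in (chess, driving):
    print(env.name, "->", considerations(env))
```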


Conclusion

AI environments aren’t background scenery; they are the foundation of intelligent behaviour. Chess engines thrive in fully observable, deterministic worlds, while self-driving cars battle partially observable, stochastic chaos. These eight dimensions (observability, determinism, competition, number of agents, dynamics, continuity, episodic structure, and knowledge) dictate algorithm choice, training strategy, and deployment success. As AI powers transportation, healthcare, and finance, agents perfectly matched to their environments will dominate: intelligence without the right stage remains mere potential.

Frequently Asked Questions

Q1. What is an environment in AI?

A. An environment is everything external an AI agent interacts with, senses, and acts upon while trying to achieve its goal.

Q2. Why do environment types matter in AI development?

A. Environment types determine algorithm choice, training strategy, and whether an AI system can perform reliably in real-world conditions.

Q3. How do environment characteristics affect an agent’s decisions?

A. Factors like observability, randomness, and dynamics decide how much information an agent has and how it plans actions over time.